A 2,000-Word Deep Dive into docker0

Understanding docker0

Test

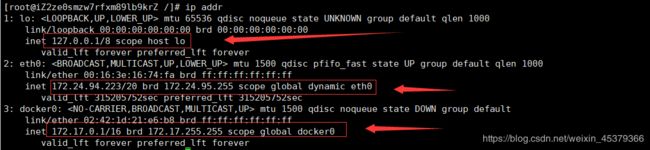

- Local loopback address

- Alibaba Cloud private network address

- docker0 address

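#Question: how does Docker handle network access for containers?

> [root@iZ2ze0smzw7rfxm89lb9krZ /]# docker run -d -P --name tomcat01 tomcat

> #Check the container's network addresses with ip addr. When the container starts it gets an interface 62: eth0@if63 with an IP address, and that address is assigned by Docker.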

>

> [root@iZ2ze0smzw7rfxm89lb9krZ /]# docker exec -it tomcat01 ip addr

> 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

> link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

> inet 127.0.0.1/8 scope host lo

> valid_lft forever preferred_lft forever

> 62: eth0@if63: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

> link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

> inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

> valid_lft forever preferred_lft forever

#The Linux host can ping the container

[root@iZ2ze0smzw7rfxm89lb9krZ /]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.105 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.067 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.069 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.066 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.065 ms

64 bytes from 172.17.0.2: icmp_seq=6 ttl=64 time=0.078 ms

64 bytes from 172.17.0.2: icmp_seq=7 ttl=64 time=0.068 ms

64 bytes from 172.17.0.2: icmp_seq=8 ttl=64 time=0.078 ms

64 bytes from 172.17.0.2: icmp_seq=9 ttl=64 time=0.069 ms

64 bytes from 172.17.0.2: icmp_seq=10 ttl=64 time=0.065 ms

64 bytes from 172.17.0.2: icmp_seq=11 ttl=64 time=0.064 ms

64 bytes from 172.17.0.2: icmp_seq=12 ttl=64 time=0.066 ms

^C

--- 172.17.0.2 ping statistics ---

12 packets transmitted, 12 received, 0% packet loss, time 10999ms

rtt min/avg/max/mdev = 0.064/0.071/0.105/0.014 ms

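How it works

1. Every time we start a container, Docker assigns it an IP address. As soon as Docker is installed, the host also gets a network interface called docker0.

- Test again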

[root@iZ2ze0smzw7rfxm89lb9krZ /]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:16:3e:16:74:fa brd ff:ff:ff:ff:ff:ff

inet 172.24.94.223/20 brd 172.24.95.255 scope global dynamic eth0

valid_lft 315201564sec preferred_lft 315201564sec

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:1d:21:e6:b8 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

63: vethc98ce19@if62: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 76:cc:47:76:7e:e2 brd ff:ff:ff:ff:ff:ff link-netnsid 0

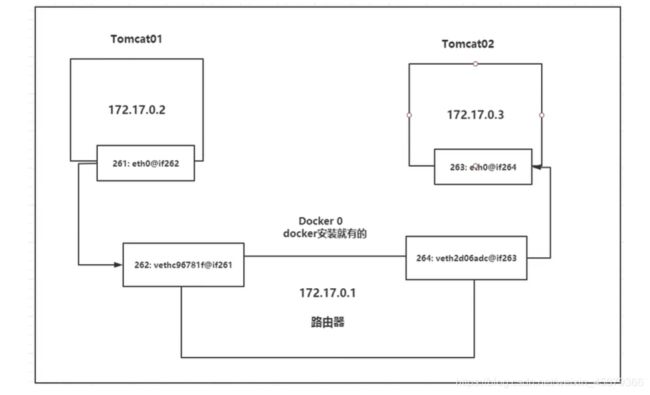

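We can see that the interfaces a container brings with it always come in pairs: interface 62 inside the container points at 63 on the host, and 63 points back at 62.

#A veth pair is a pair of virtual device interfaces. They always appear together: one end of each is attached to the protocol stack, and the two ends are connected to each other.

#Because of this property, a veth pair is commonly used as a bridge between virtual network devices.

#OpenStack, connections between Docker containers, and OVS connections all use veth pairs.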
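The pairing can be read straight off the `ip addr` listings: each interface is printed as `INDEX: NAME@ifPEER`, and the peer's own line points back. The sketch below extracts that relation from the two lines shown in this article. `peer_of` is a hypothetical helper name, and the sample text is trimmed from the output above.

```shell
#!/bin/sh
# Sample lines copied from the two `ip addr` listings in this article.
sample='62: eth0@if63: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
63: vethc98ce19@if62: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'

# peer_of (hypothetical helper): print "index name -> peer-index" for every
# interface whose name carries an @ifN suffix.
peer_of() {
  awk -F': ' '$2 ~ /@if[0-9]+$/ {
    split($2, parts, "@if")
    printf "%s %s -> %s\n", $1, parts[1], parts[2]
  }'
}

printf '%s\n' "$sample" | peer_of
```

On a real host, piping `ip -o link` into `peer_of` should give a similar view, assuming the usual one-line-per-interface output format.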

2. Let's test whether tomcat01 and tomcat02 can ping each other

[root@iZ2ze0smzw7rfxm89lb9krZ /]# docker exec -it tomcat02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.116 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.093 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.099 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.089 ms

^C

--- 172.17.0.2 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.089/0.099/0.116/0.012 ms

#Conclusion: containers can ping each other.

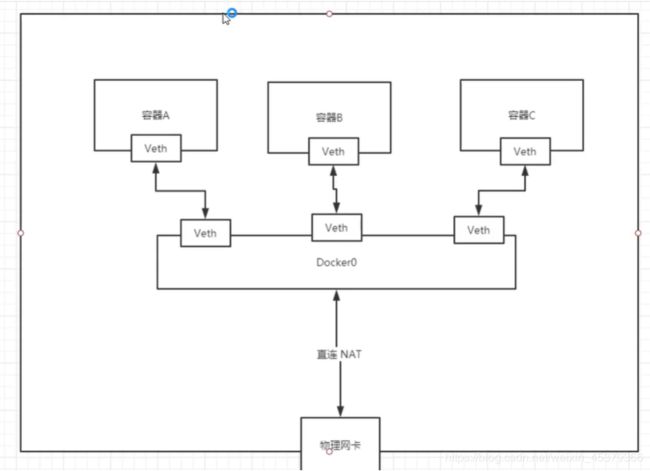

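- Network model diagram

Conclusion: tomcat01 and tomcat02 share the same bridge, docker0, which acts as their router.

When no network is specified, every container is routed through docker0, and Docker assigns the container an available IP address by default.

Docker uses Linux bridging: on the host, docker0 is the bridge that Docker containers attach to.

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently.

As soon as a container is removed, its pair of interfaces disappears.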
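To make the bridge-plus-veth model concrete, here is a rough sketch of building the same shape by hand with `ip`. It is not Docker's actual implementation (Docker also manages address allocation and NAT rules); it only mirrors the topology described above. The names `br-demo`, `demo-ns`, `veth-host`, `veth-ctr` and the 172.30.0.0/16 range are all made up for illustration. By default the script only prints the commands.

```shell
#!/bin/sh
# Dry run by default: each command is printed, not executed.
# Set APPLY=1 and run as root on a Linux host to actually apply them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else echo "+ $*"; fi
}

# All names and the 172.30.0.0/16 range are illustrative assumptions.
BR=br-demo; NS=demo-ns; HOST_IF=veth-host; CTR_IF=veth-ctr

build_bridge_demo() {
  run ip link add name "$BR" type bridge          # stand-in for docker0
  run ip addr add 172.30.0.1/16 dev "$BR"
  run ip link set "$BR" up
  run ip netns add "$NS"                          # stand-in for a container
  run ip link add "$HOST_IF" type veth peer name "$CTR_IF"
  run ip link set "$CTR_IF" netns "$NS"           # one end goes inside
  run ip link set "$HOST_IF" master "$BR"         # the other joins the bridge
  run ip link set "$HOST_IF" up
  run ip netns exec "$NS" ip addr add 172.30.0.2/16 dev "$CTR_IF"
  run ip netns exec "$NS" ip link set "$CTR_IF" up
  run ip netns exec "$NS" ip route add default via 172.30.0.1
}

build_bridge_demo
```

Deleting the namespace removes its end of the veth pair, and the host end goes with it, which matches the observation that a container's interfaces vanish when the container is removed.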

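Consider a microservice scenario: the database IP changes, but we do not want to restart the project. Can we reach a container by its name instead of its IP?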

[root@iZ2ze0smzw7rfxm89lb9krZ /]# docker exec -it tomcat02 ping tomcat01

ping: tomcat01: Name or service not known

#--link solves the name-resolution problem: start tomcat04 linked to tomcat02, then ping by name

[root@iZ2ze0smzw7rfxm89lb9krZ /]# docker run -d -P --name tomcat04 --link tomcat02 tomcat

[root@iZ2ze0smzw7rfxm89lb9krZ /]# docker exec -it tomcat04 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.096 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.103 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.093 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=4 ttl=64 time=0.092 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=5 ttl=64 time=0.094 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=6 ttl=64 time=0.091 ms

^C

--- tomcat02 ping statistics ---

6 packets transmitted, 6 received, 0% packet loss, time 1003ms

rtt min/avg/max/mdev = 0.091/0.094/0.103/0.013 ms

#Does it work in the reverse direction?

[root@iZ2ze0smzw7rfxm89lb9krZ /]# docker exec -it tomcat02 ping tomcat04

ping: tomcat04: Name or service not known

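This works only because tomcat04 has tomcat02 configured locally: --link adds an entry for tomcat02 to tomcat04's /etc/hosts, and nothing is added on the tomcat02 side.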

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker exec -it tomcat04 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 09f83138586d

172.17.0.2 18240a8e7136
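Since `--link` boils down to a hosts-file entry, name resolution is just a lookup in that file. The sketch below mimics the lookup on the listing above. `lookup` is a hypothetical helper, and the hosts text is trimmed to the relevant lines.

```shell
#!/bin/sh
# /etc/hosts content copied from the tomcat04 listing above (trimmed).
tomcat04_hosts='127.0.0.1 localhost
172.17.0.3 tomcat02 09f83138586d
172.17.0.2 18240a8e7136'

# lookup (hypothetical helper): print the IP whose line lists the given name,
# or nothing when no line matches.
lookup() {
  printf '%s\n' "$1" | awk -v name="$2" '{
    for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit }
  }'
}

lookup "$tomcat04_hosts" tomcat02   # prints 172.17.0.3
lookup "$tomcat04_hosts" tomcat01   # prints nothing: no entry, no resolution
```

A container whose hosts file has no line for a name simply cannot resolve it, which is why the link only works in one direction.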

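Nowadays, using --link with Docker is not recommended.

The problem with docker0: containers cannot be reached by name.

Custom networks (connecting containers)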

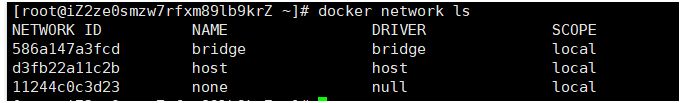

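List all Docker networks:

Network modes

bridge: bridged networking (the default)

none: no networking configured

host: host mode (shares the host's network stack)

container: joins another container's network (very limited)

Test:

#Starting a container without a network option uses --net bridge by default, which is docker0, so the two commands below are equivalent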

docker run -d -P --name tomcat01 tomcat

docker run -d -P --name tomcat01 --net bridge tomcat

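#docker0 characteristics: it is the default network, containers cannot be reached by name, and --link can be used to connect them

#We can create a custom network instead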

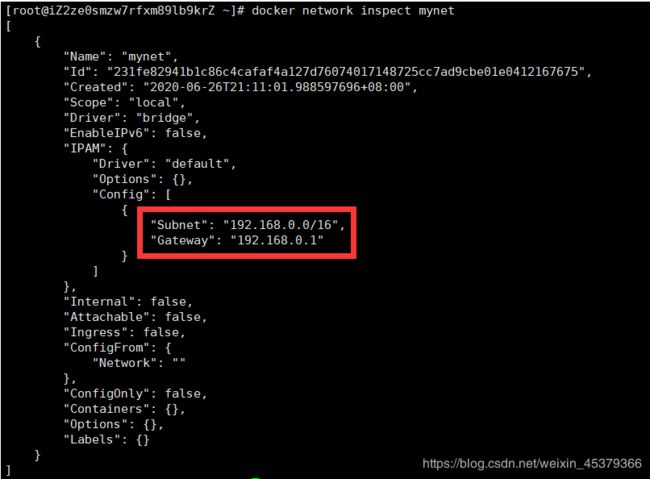

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

231fe82941b1c86c4cafaf4a127d76074017148725cc7ad9cbe01e0412167675

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

586a147a3fcd bridge bridge local

d3fb22a11c2b host host local

231fe82941b1 mynet bridge local

11244c0c3d23 none null local

#Inspect the custom network with docker network inspect mynet. The output below was captured after two containers, tomcat-net-01 and tomcat-net-02, had been started on mynet

[

{

"Name": "mynet",

"Id": "231fe82941b1c86c4cafaf4a127d76074017148725cc7ad9cbe01e0412167675",

"Created": "2020-06-26T21:11:01.988597696+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

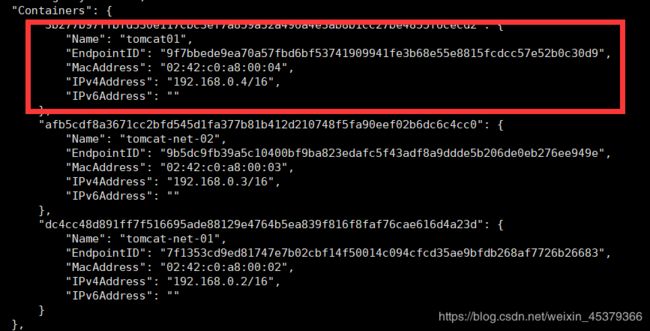

"afb5cdf8a3671cc2bfd545d1fa377b81b412d210748f5fa90eef02b6dc6c4cc0": {

"Name": "tomcat-net-02",

"EndpointID": "9b5dc9fb39a5c10400bf9ba823edafc5f43adf8a9ddde5b206de0eb276ee949e",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"dc4cc48d891ff7f516695ade88129e4764b5ea839f816f8faf76cae616d4a23d": {

"Name": "tomcat-net-01",

"EndpointID": "7f1353cd9ed81747e7b02cbf14f50014c094cfcd35ae9bfdb268af7726b26683",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]
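The inspect output shows mynet handing out addresses from 192.168.0.0/16, while containers on docker0 sit in 172.17.0.0/16. A small sketch of the membership check makes the separation explicit. `ip_to_int` and `in_subnet` are hypothetical helpers written for this article, and the arithmetic assumes a shell with 64-bit integers.

```shell
#!/bin/sh
# ip_to_int (hypothetical helper): dotted quad -> integer.
ip_to_int() {
  set -- $(printf '%s' "$1" | tr '.' ' ')
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet IP CIDR: succeed when IP falls inside CIDR.
in_subnet() {
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "${2%/*}") & mask )) ]
}

# Addresses taken from the listings in this article.
for addr in 192.168.0.2 192.168.0.3 172.17.0.2; do
  if in_subnet "$addr" 192.168.0.0/16; then
    echo "$addr is inside mynet"
  else
    echo "$addr is outside mynet"
  fi
done
```

The two tomcat-net containers land inside mynet, while tomcat01's docker0 address does not.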

#Test ping connectivity again

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.116 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.082 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.082 ms

64 bytes from 192.168.0.3: icmp_seq=4 ttl=64 time=0.082 ms

^C

--- 192.168.0.3 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.082/0.090/0.116/0.017 ms

#Container names can now be pinged without --link

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.065 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.085 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.088 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.087 ms

^C

--- tomcat-net-02 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3ms

rtt min/avg/max/mdev = 0.065/0.081/0.088/0.011 ms

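#For custom networks, Docker already maintains the name-to-address mappings for us, so this is the kind of network we normally use

#Benefit: different clusters use different networks, which keeps each cluster isolated, safe, and healthy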

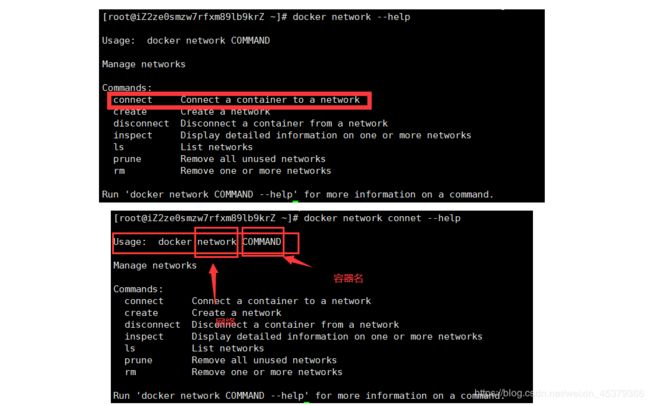

Connecting networks

#Test connecting tomcat01 to mynet

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker network connect mynet tomcat01

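#After connecting, tomcat01 is placed on the mynet network as well

#One container, two IP addresses, much like an Alibaba Cloud server with a public IP and a private IP

#Test again: the ping succeeds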

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.095 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.086 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.085 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=4 ttl=64 time=0.081 ms

^C

--- tomcat-net-01 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3ms

rtt min/avg/max/mdev = 0.081/0.086/0.095/0.012 ms

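#In essence: the connect command attaches a container to a network

Conclusion: to reach containers on another network, attach to that network with docker network connect.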

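Hands-on: deploying a Redis cluster

#Create the network the nodes will join. This step is not shown in the original notes; the /16 subnet is an assumption chosen to contain the 172.38.0.1N addresses used below

docker network create redis --subnet 172.38.0.0/16

#Create six Redis configs with a script (the file is overwritten on each run, so rerunning does not duplicate settings)

for port in $(seq 1 6)

do

mkdir -p /mydata/redis/node-${port}/conf

cat << EOF > /mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done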

#Start the six Redis containers (the loop supplies ${port}; the command is kept on one line so it runs as written)

for port in $(seq 1 6)

do

docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} -v /mydata/redis/node-${port}/data:/data -v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.1${port} redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

done
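The naming pattern in the run command is easy to misread, so here is a small sketch that spells out what each node ends up with. `node_plan` is a hypothetical helper; it only formats strings and does not talk to Docker.

```shell
#!/bin/sh
# node_plan (hypothetical helper): print name, fixed IP and published ports
# for node N, following the 637N / 1637N / 172.38.0.1N pattern used above.
node_plan() {
  n=$1
  printf 'redis-%s ip=172.38.0.1%s client=637%s->6379 bus=1637%s->16379\n' \
    "$n" "$n" "$n" "$n"
}

for n in 1 2 3 4 5 6; do
  node_plan "$n"
done
```

Because the node number is appended as a single digit, this pattern only works for nodes 1 through 9.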

#Create the cluster (run inside one of the nodes, for example after docker exec -it redis-1 /bin/sh)

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas

Unrecognized option or bad number of args for: '--cluster-replicas'

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: 97389a8715a42d0edb0642484096b0226f943de5 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: 396bc052f0b375b8f988a240a1dfc9d78ca6282a 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: d418dab2cae2366a6fa72ad4edb7786e3497e3d8 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 8998fe70e1db9abdd7a6032e2747447ba11d5206 172.38.0.14:6379

replicates d418dab2cae2366a6fa72ad4edb7786e3497e3d8

S: b8d4fd94f5eedadda9df2b4227cfa6876bb66407 172.38.0.15:6379

replicates 97389a8715a42d0edb0642484096b0226f943de5

S: 0960f8a3973e12f41c20af6b7a51b57e53669377 172.38.0.16:6379

replicates 396bc052f0b375b8f988a240a1dfc9d78ca6282a

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: 97389a8715a42d0edb0642484096b0226f943de5 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: d418dab2cae2366a6fa72ad4edb7786e3497e3d8 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: b8d4fd94f5eedadda9df2b4227cfa6876bb66407 172.38.0.15:6379

slots: (0 slots) slave

replicates 97389a8715a42d0edb0642484096b0226f943de5

M: 396bc052f0b375b8f988a240a1dfc9d78ca6282a 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 0960f8a3973e12f41c20af6b7a51b57e53669377 172.38.0.16:6379

slots: (0 slots) slave

replicates 396bc052f0b375b8f988a240a1dfc9d78ca6282a

S: 8998fe70e1db9abdd7a6032e2747447ba11d5206 172.38.0.14:6379

slots: (0 slots) slave

replicates d418dab2cae2366a6fa72ad4edb7786e3497e3d8

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
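The three slot ranges in the transcript come from dividing 16384 slots among three masters. The sketch below reproduces those ranges. The rounding rule is an assumption chosen to match the output above, not a statement about how redis-cli is implemented, and `slot_ranges` is a hypothetical helper.

```shell
#!/bin/sh
# slot_ranges MASTERS: split the 16384 hash slots as evenly as possible.
# The rounding rule is an assumption chosen to match the transcript.
slot_ranges() {
  awk -v n="$1" 'BEGIN {
    total = 16384; per = total / n; cursor = 0; first = 0
    for (i = 0; i < n; i++) {
      last = int(cursor + per - 1 + 0.5)      # round to nearest
      if (i == n - 1) last = total - 1        # last master takes the remainder
      printf "Master[%d] -> Slots %d - %d\n", i, first, last
      first = last + 1
      cursor += per
    }
  }'
}

slot_ranges 3
```

With three masters this prints the same three `Master[i] -> Slots` lines as the cluster creation output.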

#Connect with redis-cli -c (cluster mode) and write a key

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
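Redis Cluster maps a key to a slot as CRC16(key) mod 16384, which is where slot 15495 in the redirect above comes from. The following sketch computes it in plain shell. It handles plain ASCII keys only and ignores hash tags (the `{...}` syntax); `crc16` and `key_slot` are helper names chosen for this example.

```shell
#!/bin/sh
# crc16 STRING: CRC16-CCITT, XMODEM variant (polynomial 0x1021, initial 0).
# Handles plain ASCII keys only.
crc16() {
  s=$1; crc=0
  while [ -n "$s" ]; do
    rest=${s#?}                 # everything after the first character
    ch=${s%"$rest"}             # the first character
    s=$rest
    byte=$(printf '%d' "'$ch")  # character code
    crc=$(( crc ^ (byte << 8) ))
    bit=0
    while [ "$bit" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      bit=$(( bit + 1 ))
    done
  done
  echo "$crc"
}

# key_slot KEY: slot number for a key without hash tags.
key_slot() {
  echo $(( $(crc16 "$1") % 16384 ))
}

key_slot a   # prints 15495, the slot shown in the redirect above
```

Slot 15495 falls in the 10923-16383 range, which is why the write was redirected to 172.38.0.13.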

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3f729b2ab8d0 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 7 minutes ago Up 7 minutes 0.0.0.0:6375->6379/tcp, 0.0.0.0:16375->16379/tcp redis-5

bc507bca3631 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 7 minutes ago Up 7 minutes 0.0.0.0:6374->6379/tcp, 0.0.0.0:16374->16379/tcp redis-4

0a8bcf9b1cad redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 8 minutes ago Up 8 minutes 0.0.0.0:6373->6379/tcp, 0.0.0.0:16373->16379/tcp redis-3

88eef8075431 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 8 minutes ago Up 8 minutes 0.0.0.0:6372->6379/tcp, 0.0.0.0:16372->16379/tcp redis-2

788571c14d86 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 9 minutes ago Up 9 minutes 0.0.0.0:6371->6379/tcp, 0.0.0.0:16371->16379/tcp redis-1

70fdeb1d01dc redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 11 minutes ago Up 10 minutes 0.0.0.0:6376->6379/tcp, 0.0.0.0:16376->16379/tcp redis-6

#Stop redis-3 (172.38.0.13), the master that holds key a, to test failover

[root@iZ2ze0smzw7rfxm89lb9krZ ~]# docker stop 0a8bcf9b1cad

0a8bcf9b1cad

172.38.0.13:6379> get a

^C

/data # redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

172.38.0.14:6379> cluster nodes

0960f8a3973e12f41c20af6b7a51b57e53669377 172.38.0.16:6379@16379 slave 396bc052f0b375b8f988a240a1dfc9d78ca6282a 0 1593180670569 6 connected

97389a8715a42d0edb0642484096b0226f943de5 172.38.0.11:6379@16379 master - 0 1593180671071 1 connected 0-5460

b8d4fd94f5eedadda9df2b4227cfa6876bb66407 172.38.0.15:6379@16379 slave 97389a8715a42d0edb0642484096b0226f943de5 0 1593180669566 5 connected

d418dab2cae2366a6fa72ad4edb7786e3497e3d8 172.38.0.13:6379@16379 master,fail - 1593180574570 1593180572864 3 connected

8998fe70e1db9abdd7a6032e2747447ba11d5206 172.38.0.14:6379@16379 myself,master - 0 1593180670000 7 connected 10923-16383

396bc052f0b375b8f988a240a1dfc9d78ca6282a 172.38.0.12:6379@16379 master - 0 1593180669000 2 connected 5461-10922
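The `cluster nodes` output is dense, so a short filter helps to see the failover at a glance. The sketch below runs on three lines copied from the output above and prints address, flags, and slot range. `summarize` is a hypothetical helper, and it assumes the field layout seen in this listing.

```shell
#!/bin/sh
# Three lines copied from the `cluster nodes` output above.
nodes='d418dab2cae2366a6fa72ad4edb7786e3497e3d8 172.38.0.13:6379@16379 master,fail - 1593180574570 1593180572864 3 connected
8998fe70e1db9abdd7a6032e2747447ba11d5206 172.38.0.14:6379@16379 myself,master - 0 1593180670000 7 connected 10923-16383
396bc052f0b375b8f988a240a1dfc9d78ca6282a 172.38.0.12:6379@16379 master - 0 1593180669000 2 connected 5461-10922'

# summarize (hypothetical helper): address, flags, and slot range per node.
summarize() {
  awk '{
    split($2, addr, "@")
    slots = (NF >= 9) ? $9 : "-"
    printf "%s %s slots=%s\n", addr[1], $3, slots
  }'
}

printf '%s\n' "$nodes" | summarize
```

The summary shows 172.38.0.13 marked `master,fail` with no slots, and its former replica 172.38.0.14 now serving 10923-16383 as a master.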

The Redis cluster on Docker is complete!

Once we use Docker, every technology gradually becomes simpler to set up.

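Packaging a Spring Boot microservice as a Docker image

1. Build the Spring Boot project

2. Package the application

3. Write the Dockerfile

4. Build the image

5. Publish and run!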
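As a rough illustration of steps 3 to 5, the sketch below writes a minimal Dockerfile into a scratch directory and prints the commands that would typically follow. Everything specific here is an assumption and not taken from the article: the `eclipse-temurin:17-jre` base image, the `target/*.jar` path (a Maven layout), port 8080, and the `demo-app` image name.

```shell
#!/bin/sh
# write_dockerfile DIR: write an illustrative Dockerfile into DIR.
# Base image, jar path and port are assumptions, not values from the article.
write_dockerfile() {
  cat > "$1/Dockerfile" <<'EOF'
FROM eclipse-temurin:17-jre
COPY target/*.jar /app.jar
EXPOSE 8080
ENTRYPOINT ["java", "-jar", "/app.jar"]
EOF
}

workdir=$(mktemp -d)
write_dockerfile "$workdir"
cat "$workdir/Dockerfile"

# Steps 2, 4 and 5, printed rather than executed. In a real project the
# Dockerfile would sit in the project root next to target/.
echo "mvn package"
echo "docker build -t demo-app ."
echo "docker run -d -P --name demo-app demo-app"
```

The `-d -P` flags mirror how the tomcat containers were started earlier in these notes.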
