Spark: An Introduction to RDDs

Reference: http://www.aboutyun.com/forum.php?mod=viewthread&tid=8371&extra=page%3D17

1. What an RDD is (Resilient Distributed Dataset)

An RDD is a resilient, distributed collection of data.

The resilience of an RDD shows up in the following ways:

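1. Storage switches automatically between memory and disk.

2. Efficient, lineage-based fault tolerance: if the n-th RDD in the chain is lost, it is recomputed from the (n-1)-th RDD.

3. A failed task is retried automatically a limited number of times (4 by default).

4. A failed stage is retried automatically a limited number of times. Only the failed stage is rerun, and only the failed partitions are recomputed.

5. checkpoint and persist: intermediate results can be saved explicitly so that they do not have to be recomputed.

6. Scheduling elasticity: DAG and task scheduling are independent of resource management.

7. Highly flexible partitioning: the partitioning function can be set freely, for example with repartition.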
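Several of these points map onto public API calls. The following is a minimal sketch, assuming Spark is on the classpath and run in local mode; the object name, the sample data, and the checkpoint directory `/tmp/rdd-checkpoint` are made up for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

object ResilienceSketch {
  // Returns the partition count after repartitioning (expected to be 4).
  def demo(sc: SparkContext, checkpointDir: String): Int = {
    sc.setCheckpointDir(checkpointDir)

    val words = sc.parallelize(Seq("a", "bb", "cc", "ddd"), numSlices = 2)
    val pairs = words.map(w => (w, w.length))

    // Item 1: keep partitions in memory, spilling to disk when memory runs out.
    pairs.persist(StorageLevel.MEMORY_AND_DISK)

    // Item 5: mark the RDD for checkpointing; must be called before the first action.
    pairs.checkpoint()
    pairs.count() // the action triggers both the caching and the checkpoint

    // Item 7: change the number of partitions (this causes a shuffle).
    val wider = pairs.repartition(4)

    // Item 2: the lineage Spark would replay to rebuild lost partitions.
    println(wider.toDebugString)
    wider.partitions.length
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setMaster("local[2]")               // assumption: local mode for the demo
      .setAppName("rdd-resilience-sketch") // hypothetical app name
      .set("spark.task.maxFailures", "4")  // item 3: retry limit used on a cluster
    val sc = new SparkContext(conf)
    try println(demo(sc, "/tmp/rdd-checkpoint")) // hypothetical directory
    finally sc.stop()
  }
}
```

On a real cluster the checkpoint directory would normally be a path on reliable shared storage such as HDFS rather than a local temporary directory.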

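Internally, each RDD is characterized by five main properties:

1. A list of partitions. The data must be splittable, as in Hadoop, because only data that can be split can be processed in parallel.

protected def getPartitions: Array[Partition]

2. A function that computes each partition and returns an iterable result.

def compute(split: Partition, context: TaskContext): Iterator[T]

3. A list of dependencies on other RDDs. Dependencies are either wide or narrow.

protected def getDependencies: Seq[Dependency[_]] = deps

4. Optionally, a Partitioner for key-value RDDs (for example, hash partitioning). It plays the same role as the Partitioner in MapReduce, which decides which reducer each key goes to.

@transient val partitioner: Option[Partitioner] = None

5. Optionally, a list of preferred locations for computing each partition. For example, the hosts that store an HDFS block are the preferred places to process it. The method takes a partition (split) and returns a list of preferred node locations.

protected def getPreferredLocations(split: Partition): Seq[String] = Nil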
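To make the five properties concrete, here is a small sketch of a custom RDD that overrides them. It assumes Spark is on the classpath; `RangePartition` and `RangeRDD` are hypothetical names invented for this example and are not part of Spark.

```scala
import org.apache.spark.{Partition, SparkConf, SparkContext, TaskContext}
import org.apache.spark.rdd.RDD

// Hypothetical partition type: a half-open slice [start, end) of the range.
class RangePartition(val index: Int, val start: Int, val end: Int) extends Partition

// Hypothetical RDD that produces 0 until n, split into numSlices partitions.
// Passing Nil as the dependency list covers property 3: this RDD has no parents.
class RangeRDD(sc: SparkContext, n: Int, numSlices: Int) extends RDD[Int](sc, Nil) {

  // Property 1: the list of partitions.
  override protected def getPartitions: Array[Partition] =
    Array.tabulate[Partition](numSlices) { i =>
      new RangePartition(i, i * n / numSlices, (i + 1) * n / numSlices)
    }

  // Property 2: how to compute the elements of one partition.
  override def compute(split: Partition, context: TaskContext): Iterator[Int] = {
    val p = split.asInstanceOf[RangePartition]
    (p.start until p.end).iterator
  }

  // Property 4: the partitioner is left at its default, None (not a key-value RDD).

  // Property 5: no locality preference, because the data is generated on the fly.
  override protected def getPreferredLocations(split: Partition): Seq[String] = Nil
}

object RangeRDDSketch {
  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("range-rdd-sketch"))
    try {
      val rdd = new RangeRDD(sc, 10, 3)
      println(rdd.partitions.length)         // number of partitions
      println(rdd.collect().mkString(", "))  // elements of all partitions, in order
    } finally sc.stop()
  }
}
```

Spark calls `getPartitions` once on the driver and `compute` once per partition inside a task, so everything `compute` touches has to be serializable.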

2. Transformations between RDDs

Let's look at an example.

val hdfsFile = sc.textFile(args(1))
val flatMapRdd = hdfsFile.flatMap(s => s.split(" "))
val filterRdd = flatMapRdd.filter(_.length == 2)
val mapRdd = filterRdd.map(word => (word, 1))
val reduce = mapRdd.reduceByKey(_ + _)

3. We start with the textFile method. Open the SparkContext class and find it.

/**

* Read a text file from HDFS, a local file system (available on all nodes), or any

* Hadoop-supported file system URI, and return it as an RDD of Strings.

*/

def textFile(path: String, minPartitions: Int = defaultMinPartitions): RDD[String] = {

hadoopFile( //hadoopFile中有四个参数, 参考mapreduce

path, //数据源

classOf[TextInputFormat], //InputFormat

classOf[LongWritable], //Mapper的第一个类型

classOf[Text], //Mapper的第二个类型

minPartitions)

.map(pair => pair._2.toString)

//对hadoopFile后面加了一个map方法,取pair的第二个参数了,最后在shell里面我们看到它是一个MappredRDD了。

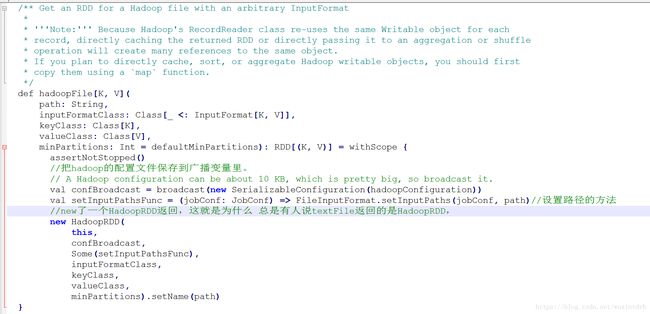

}3.1、看看hadoopFile方法,里面我们看到它做了3个操作。

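1. It stores the Hadoop configuration in a broadcast variable.

2. It defines a function that sets the input path.

3. It creates a new HadoopRDD and returns it.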
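Since textFile is only a thin wrapper, the same RDD can be built by calling hadoopFile directly. The sketch below assumes Spark and the Hadoop client libraries are on the classpath; the object name `TextFileByHand` and the helper `lines` are hypothetical.

```scala
import org.apache.hadoop.io.{LongWritable, Text}
import org.apache.hadoop.mapred.TextInputFormat
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object TextFileByHand {
  // Same shape as textFile: read (byte offset, line) pairs and keep only the line.
  // Calling toString copies the data out of the Text object, which the record
  // reader reuses from one record to the next.
  def lines(sc: SparkContext, path: String, minPartitions: Int): RDD[String] =
    sc.hadoopFile(
        path,
        classOf[TextInputFormat],
        classOf[LongWritable],
        classOf[Text],
        minPartitions)
      .map { case (_, line) => line.toString }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("textfile-by-hand"))
    try {
      // args(0) is assumed to be the path of an existing text file.
      lines(sc, args(0), minPartitions = 2).take(5).foreach(println)
    } finally sc.stop()
  }
}
```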

3.2. Next, look at the HadoopRDD class, focusing on its getPartitions, compute, and getPreferredLocations methods.

3.2.1. getPartitions

override def getPartitions: Array[Partition] = {
  val jobConf = getJobConf()
  // add the credentials here as this can be called before SparkContext initialized
  SparkHadoopUtil.get.addCredentials(jobConf)
  val inputFormat = getInputFormat(jobConf)
  // The splits are computed by the InputFormat's own getSplits method
  val inputSplits = inputFormat.getSplits(jobConf, minPartitions)
  val array = new Array[Partition](inputSplits.size) // one slot per input split
  for (i <- 0 until inputSplits.size) {
    // Wrap each split in a HadoopPartition and store it in the array that is returned
    array(i) = new HadoopPartition(id, i, inputSplits(i))
  }
  array
}

3.2.2. Next comes the compute method. It takes a Partition and returns an Iterator[(K, V)]. Only two points matter here.

1. It casts the Partition to a HadoopPartition and then creates a RecordReader from its InputSplit.

2. It overrides the iterator's getNext method, which calls next on that reader to read the following record.

override def compute(theSplit: Partition, context: TaskContext): InterruptibleIterator[(K, V)] = {
  val iter = new NextIterator[(K, V)] {
    // Cast theSplit to a HadoopPartition
    val split = theSplit.asInstanceOf[HadoopPartition]
    logInfo("Input split: " + split.inputSplit)
    val jobConf = getJobConf()
    val inputMetrics = context.taskMetrics.getInputMetricsForReadMethod(DataReadMethod.Hadoop)
    // Sets the thread local variable for the file's name
    split.inputSplit.value match {
      case fs: FileSplit => SqlNewHadoopRDDState.setInputFileName(fs.getPath.toString)
      case _ => SqlNewHadoopRDDState.unsetInputFileName()
    }
    // Find a function that will return the FileSystem bytes read by this thread.
    // Do this before creating RecordReader, because RecordReader's constructor might read some bytes
    val bytesReadCallback = inputMetrics.bytesReadCallback.orElse {
      split.inputSplit.value match {
        case _: FileSplit | _: CombineFileSplit =>
          SparkHadoopUtil.get.getFSBytesReadOnThreadCallback()
        case _ => None
      }
    }
    inputMetrics.setBytesReadCallback(bytesReadCallback)
    // Create the RecordReader
    var reader: RecordReader[K, V] = null
    val inputFormat = getInputFormat(jobConf)
    HadoopRDD.addLocalConfiguration(
      new SimpleDateFormat("yyyyMMddHHmm").format(createTime),
      context.stageId,
      theSplit.index,
      context.attemptNumber,
      jobConf)
    reader = inputFormat.getRecordReader(split.inputSplit.value, jobConf, Reporter.NULL)
    // Register an on-task-completion callback to close the input stream.
    context.addTaskCompletionListener{ context => closeIfNeeded() }
    // Key and value holders that the reader's next method fills in
    val key: K = reader.createKey()
    val value: V = reader.createValue()
    override def getNext(): (K, V) = {
      try {
        finished = !reader.next(key, value)
      } catch {
        case eof: EOFException =>
          finished = true
      }
      if (!finished) {
        inputMetrics.incRecordsRead(1)
      }
      (key, value)
    }
    override def close() {
      if (reader != null) {
        SqlNewHadoopRDDState.unsetInputFileName()
        // Close the reader and release it. Note: it's very important that we don't close the
        // reader more than once, since that exposes us to MAPREDUCE-5918 when running against
        // Hadoop 1.x and older Hadoop 2.x releases. That bug can lead to non-deterministic
        // corruption issues when reading compressed input.
        try {
          reader.close()
        } catch {
          case e: Exception =>
            if (!ShutdownHookManager.inShutdown()) {
              logWarning("Exception in RecordReader.close()", e)
            }
        } finally {
          reader = null
        }
        if (bytesReadCallback.isDefined) {
          inputMetrics.updateBytesRead()
        } else if (split.inputSplit.value.isInstanceOf[FileSplit] ||
                   split.inputSplit.value.isInstanceOf[CombineFileSplit]) {
          // If we can't get the bytes read from the FS stats, fall back to the split size,
          // which may be inaccurate.
          try {
            inputMetrics.incBytesRead(split.inputSplit.value.getLength)
          } catch {
            case e: java.io.IOException =>
              logWarning("Unable to get input size to set InputMetrics for task", e)
          }
        }
      }
    }
  }
  // Return the iterator
  new InterruptibleIterator[(K, V)](context, iter)
}

From this we can see that compute uses the partition to obtain an Iterator, which is then used to traverse the data in that partition.
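The getNext / finished / close pattern used by compute can be tried out without Spark. Spark's own NextIterator is an internal class, so the sketch below uses a simplified stand-in written in plain Scala; `SimpleNextIterator` and `NextIteratorSketch` are hypothetical names, and a BufferedReader plays the role of the RecordReader.

```scala
import java.io.{BufferedReader, StringReader}

// Simplified stand-in for the iterator base class used in compute.
abstract class SimpleNextIterator[U] extends Iterator[U] {
  private var gotNext = false
  private var nextValue: U = null.asInstanceOf[U]
  private var closed = false
  protected var finished = false

  // Return the next element, or set finished = true when there is none.
  protected def getNext(): U

  // Release the underlying resource.
  protected def close(): Unit

  // Guarantees that close() runs at most once.
  private def closeIfNeeded(): Unit =
    if (!closed) { closed = true; close() }

  override def hasNext: Boolean = {
    if (!finished && !gotNext) {
      nextValue = getNext()            // read one element ahead
      if (finished) closeIfNeeded()    // end of input: release the reader
      gotNext = true
    }
    !finished
  }

  override def next(): U = {
    if (!hasNext) throw new NoSuchElementException("End of stream")
    gotNext = false
    nextValue
  }
}

object NextIteratorSketch {
  // Reader-backed iterator: one element per line of the given text.
  def lines(text: String): Iterator[String] = new SimpleNextIterator[String] {
    private val reader = new BufferedReader(new StringReader(text))

    override protected def getNext(): String = {
      val line = reader.readLine()
      if (line == null) finished = true // same role as !reader.next(key, value)
      line
    }

    override protected def close(): Unit = reader.close()
  }

  def main(args: Array[String]): Unit =
    lines("a\nb\nc").foreach(println)
}
```

Reading one element ahead in `hasNext` is what lets the iterator detect the end of input and close the reader exactly once, without the caller having to do anything.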

The getPreferredLocations method is even simpler: it calls the InputSplit's getLocations method to find out where the split is stored.
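Preferred locations can also be inspected from user code through the public `preferredLocations` method of an RDD. The sketch below assumes Spark is on the classpath. Instead of reading from HDFS it uses the `makeRDD` overload that accepts explicit location hints, and the host names `host1` and `host2` are made up.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object PreferredLocationsSketch {
  // Returns, for each partition, the hosts Spark would prefer to run it on.
  def locations(sc: SparkContext): Seq[Seq[String]] = {
    // Each pair is (element, preferred hosts); every element gets its own partition.
    val rdd = sc.makeRDD[Int](Seq((1, Seq("host1")), (2, Seq("host2"))))
    rdd.partitions.toSeq.map(p => rdd.preferredLocations(p))
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("preferred-locations-sketch"))
    try locations(sc).foreach(println)
    finally sc.stop()
  }
}
```

The hints are only preferences: the scheduler tries to place each task on one of the listed hosts and falls back to other nodes when that is not possible.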