Method 1: heartbeat with haresources + NFS

Method 2: heartbeat with CRM resource management + NFS

Method 3: corosync + pacemaker + NFS for a highly available web service

--------------------------------

Providing an NFS share on the storage server

Create a 1 GB partition on sdb to serve as the NFS shared storage:

[root@ns1 ~]# fdisk /dev/sdb

[root@ns1 ~]# partx -a /dev/sdb

[root@ns1 ~]# mkfs.ext4 /dev/sdb1

[root@ns1 ~]# e2label /dev/sdb1 NFS

[root@ns1 ~]# vim /etc/fstab

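The original showed the /etc/fstab edit only as a screenshot. Below is a minimal sketch. It assumes the ext4 filesystem labelled NFS is mounted on /nfs, which is the directory exported and written to later, and that it uses default mount options. The function name `add_nfs_fstab_entry` and the `defaults 0 0` fields are assumptions and do not come from the original.

```shell
#!/bin/sh
# Hypothetical helper: append a label-based mount for the NFS backing
# filesystem to an fstab-style file, unless a LABEL=NFS entry already exists.
# Assumptions: mount point /nfs, ext4, "defaults 0 0".
add_nfs_fstab_entry() {
    fstab="${1:-/etc/fstab}"
    if grep -q '^LABEL=NFS[[:space:]]' "$fstab" 2>/dev/null; then
        return 0    # already present; do not add a duplicate line
    fi
    printf '%s\n' 'LABEL=NFS    /nfs    ext4    defaults    0 0' >> "$fstab"
}
```

Run it against a scratch file first, for example `add_nfs_fstab_entry /tmp/fstab.test`. Then run it with no argument, which defaults to /etc/fstab, and follow with `mount -a`.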

[root@ns1 ~]# mount -a

[root@ns1 ~]# vim /etc/exports


[root@ns1 ~]# service rpcbind restart

[root@ns1 ~]# service nfs restart

[root@ns1 ~]# chkconfig nfs on

[root@ns1 ~]# echo "NFS" >>/nfs/index.html

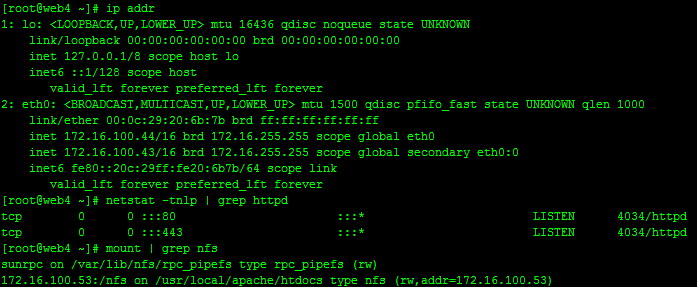

Try mounting the NFS share on web3 and web4

On web3:

[root@web3 ~]# mkdir /backup

[root@web3 ~]# mv /usr/local/apache/htdocs/* /backup/

[root@web3 ~]# yum -y install nfs-utils

[root@web3 ~]# mount -t nfs 172.16.100.53:/nfs /usr/local/apache/htdocs/

[root@web3 ~]# cp -a /backup/* /usr/local/apache/htdocs/

[root@web3 ~]# ll -d /usr/local/apache/htdocs/

drwxr-xr-x 3 4294967294 4294967294 4096 Sep 2 01:10 /usr/local/apache/htdocs/
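The owner value 4294967294 is not a real account. It equals 2^32 - 2, which is the id -2 printed as an unsigned 32-bit number. That value commonly appears when NFS cannot map a file's owner to a local user and falls back to the anonymous "nobody" id. The small helper below shows the arithmetic. Its name `as_u32` is hypothetical, and it assumes a shell with 64-bit arithmetic (bash, or dash on a 64-bit system).

```shell
#!/bin/sh
# Hypothetical helper: print a signed id as the unsigned 32-bit value ls shows.
as_u32() {
    echo $(( $1 & 0xFFFFFFFF ))   # keep only the low 32 bits
}

as_u32 -2    # prints 4294967294
```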

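The mount point now shows owner and group 4294967294 instead of a real user.

Fix: on the NFS server, add fsid=0 to the export options in /etc/exports:

[root@ns1 ~]# vim /etc/exports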

Repeat the same steps on web4 (omitted here).

Test mounting the NFS share on web3 and web4

High availability with heartbeat using haresources for resource management

Do the following on both nodes:

Edit /etc/hosts and add:

172.16.100.42 web3

172.16.100.44 web4

Add the user and group, then compile and install libnet:

[root@web3 ~]# groupadd haclient

[root@web3 ~]# useradd -g haclient hacluster

[root@web3 ~]# tar xvf libnet.tar.gz

[root@web3 ~]# cd Libnet-latest/

[root@web3 Libnet-latest]# ./configure

[root@web3 Libnet-latest]# make && make install

Compile and install heartbeat. The for loop strips -Werror from every generated Makefile, so compiler warnings do not abort the build:

[root@web3 ~]# cd heartbeat-2.1.3

[root@web3 heartbeat-2.1.3]# ./configure

[root@web3 heartbeat-2.1.3]# for file in `find ./ -name Makefile`;do sed -i 's/-Werror//g' $file ;done

[root@web3 heartbeat-2.1.3]# make && make install

[root@web3 ~]# chkconfig --add heartbeat

Configuration files (write them on one node, then copy them to the other):

1. Key file authkeys (mode 600)

[root@web3 ha.d]# (umask 066;echo -e "auth 1 \n1 md5 `dd if=/dev/urandom bs=512 count=1 2>/dev/null | md5sum | egrep --color -o [0-9a-z]+ `" >/usr/local/etc/ha.d/authkeys)
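authkeys starts with an `auth N` line that selects which key to use. One or more `N method key` lines follow. heartbeat expects the file to be readable by its owner only (mode 600). The sketch below wraps the one-liner above in a reusable function that writes to a path given as an argument. The name `gen_authkeys` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper mirroring the one-liner above: write a heartbeat
# authkeys file with a random md5 key to the path given as $1.
gen_authkeys() {
    target="$1"
    # md5sum prints "<32 hex chars>  -"; keep only the hash
    key=$(dd if=/dev/urandom bs=512 count=1 2>/dev/null | md5sum | cut -d' ' -f1)
    (
        umask 077                        # a newly created file gets mode 600
        printf 'auth 1\n1 md5 %s\n' "$key" > "$target"
    )
    chmod 600 "$target"                  # also fix the mode if the file already existed
}
```

For example: `gen_authkeys /usr/local/etc/ha.d/authkeys`.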

2. Heartbeat service configuration: ha.cf

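[root@web3 ha.d]# cat /usr/local/etc/ha.d/ha.cf

logfacility local0 #syslog facility for log messages

keepalive 1 #interval between heartbeats; seconds when no unit suffix is given

deadtime 30 #declare a node dead after this long without a heartbeat

warntime 20 #warn about late heartbeats after this long (should be less than deadtime)

initdead 120 #how long to wait for other nodes at startup before declaring them dead; at least twice deadtime

udpport 694 #UDP port used for heartbeats

bcast eth0 #interface to broadcast heartbeats on; with more than two nodes, use multicast instead

auto_failback on #whether resources move back when the original node recovers: on or off

node web3

node web4 #cluster nodes, named as reported by uname -n

ping 172.16.100.1 #ping node, usually the gateway, used to check network connectivity

crm no #whether to use the CRM (no: resources are managed through haresources)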
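The ha.cf comments state two timing rules: warntime must be less than deadtime, and initdead must be at least twice deadtime. The sketch below checks both rules in a config file. It assumes the values are plain integers in seconds with no `ms` suffix, and the function name `check_hacf_timing` is hypothetical.

```shell
#!/bin/sh
# Hypothetical sanity check for the ha.cf timing rules described above:
# warntime < deadtime and initdead >= 2 * deadtime.
# Assumes plain integer values (seconds), without unit suffixes.
check_hacf_timing() {
    cf="$1"
    # take the value from the first line whose first field is the directive
    dead=$(awk '$1 == "deadtime" { print $2; exit }' "$cf")
    warn=$(awk '$1 == "warntime" { print $2; exit }' "$cf")
    init=$(awk '$1 == "initdead" { print $2; exit }' "$cf")
    if [ -z "$dead" ] || [ -z "$warn" ] || [ -z "$init" ]; then
        echo "missing deadtime/warntime/initdead"; return 1
    fi
    if [ "$warn" -ge "$dead" ]; then
        echo "warntime ($warn) should be less than deadtime ($dead)"; return 1
    fi
    if [ "$init" -lt $((2 * dead)) ]; then
        echo "initdead ($init) should be at least 2 x deadtime ($dead)"; return 1
    fi
    echo ok
}
```

With the values used here (deadtime 30, warntime 20, initdead 120), `check_hacf_timing /usr/local/etc/ha.d/ha.cf` prints `ok`.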

3. Resource configuration file: haresources

[root@web3 ~]# cat /usr/local/etc/ha.d/haresources

web3 IPaddr::172.16.100.43/16/eth0 Filesystem::172.16.100.53:/nfs::/usr/local/apache/htdocs/::nfs httpd
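A haresources line names the preferred node first. The resources follow in the order heartbeat starts them, and heartbeat stops them in reverse order. Within each resource, `::` separates the script name from its arguments. The failover log further down shows IPaddr, Filesystem and httpd being started in exactly this order. The sketch below expands such a line into the start calls it implies. The function name is hypothetical, and the function does not look up where each script is installed.

```shell
#!/bin/sh
# Hypothetical helper: expand one haresources line into the resource
# script calls it implies, in start order.
expand_haresources() {
    set -f                 # no globbing while splitting the line into words
    set -- $1              # word-split on whitespace
    set +f
    echo "node=$1"         # first field: preferred node
    shift
    for res in "$@"; do
        script=${res%%::*}                 # text before the first "::"
        args=""
        if [ "$script" != "$res" ]; then
            # remaining fields, with "::" turned into spaces
            args=$(printf '%s\n' "${res#*::}" | sed 's/::/ /g')
        fi
        echo "$script${args:+ $args} start"
    done
}
```

For the line above, this prints `node=web3` followed by `IPaddr 172.16.100.43/16/eth0 start`, `Filesystem 172.16.100.53:/nfs /usr/local/apache/htdocs/ nfs start` and `httpd start`.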

Copy the files to the other node:

[root@web3 ~]# scp /usr/local/etc/ha.d/{authkeys,ha.cf,haresources} web4:/usr/local/etc/ha.d/

Testing:

On the NFS server, adjust the export permissions:

[root@ns1 ~]# vim /etc/exports

[root@ns1 ~]# service nfs restart

Start heartbeat on both nodes:

[root@web3 ~]# service heartbeat start

[root@web4 ~]# service heartbeat start

All resources come up on the master node (web3).

Failover test:

On web3, run:

[root@web3 ~]# /usr/local/lib/heartbeat/hb_standby

All resources fail over to web4.

Log:

heartbeat: [3555]: info: web3 wants to go standby [all]

heartbeat: [3555]: info: standby: acquire [all] resources from web3

heartbeat: [3618]: info: acquire all HA resources (standby).

ResourceManager[3631]: info: Acquiring resource group: web3 IPaddr::172.16.100.43/16/eth0 Filesystem::172.16.100.53:/nfs::/usr/local/apache/htdocs/::nfs httpd

IPaddr[3658]: INFO: Resource is stopped

ResourceManager[3631]: info: Running /usr/local/etc/ha.d/resource.d/IPaddr 172.16.100.43/16/eth0 start

IPaddr[3756]: INFO: Using calculated netmask for 172.16.100.43: 255.255.0.0

IPaddr[3756]: INFO: eval ifconfig eth0:0 172.16.100.43 netmask 255.255.0.0 broadcast 172.16.255.255

IPaddr[3727]: INFO: Success

Filesystem[3863]: INFO: Resource is stopped

ResourceManager[3631]: info: Running /usr/local/etc/ha.d/resource.d/Filesystem 172.16.100.53:/nfs /usr/local/apache/htdocs/ nfs start

Filesystem[3944]: INFO: Running start for 172.16.100.53:/nfs on /usr/local/apache/htdocs

Filesystem[3933]: INFO: Success

ResourceManager[3631]: info: Running /etc/init.d/httpd start

heartbeat: [3618]: info: all HA resource acquisition completed (standby).

heartbeat: [3555]: info: Standby resource acquisition done [all].

heartbeat: [3555]: info: remote resource transition completed.
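To confirm the start order from a log like this one, the sketch below filters the `ResourceManager ... Running ... start` lines and prints only the script names. With `logfacility local0`, heartbeat logs through syslog, so which file the messages land in depends on the local syslog configuration. That file, and the function name `started_resources`, are assumptions.

```shell
#!/bin/sh
# Hypothetical helper: read heartbeat log lines on stdin and print the
# resource scripts ResourceManager started, in order.
started_resources() {
    sed -n 's/.*ResourceManager\[[0-9]*\]: info: Running \([^ ]*\).* start$/\1/p' |
        sed 's#.*/##'        # keep only the script name, drop its directory
}
```

For the log above, this prints IPaddr, Filesystem and httpd, one per line. Pipe the syslog file that receives local0 messages into it, for example `started_resources < /var/log/messages` if that is where your syslog sends them.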

2.4.3 - High availability with heartbeat using the CRM for resource management

[root@web3 ~]# cp /usr/local/etc/ha.d/ha.cf{,.bak}

[root@web3 ~]# cat /usr/local/etc/ha.d/ha.cf

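logfacility local0 #syslog facility for log messages

keepalive 1 #interval between heartbeats; seconds when no unit suffix is given

deadtime 30 #declare a node dead after this long without a heartbeat

warntime 20 #warn about late heartbeats after this long (should be less than deadtime)

initdead 120 #how long to wait for other nodes at startup before declaring them dead; at least twice deadtime

udpport 694 #UDP port used for heartbeats

mcast eth0 225.0.111.11 694 1 0 #multicast: interface, group, port, TTL, loop

auto_failback on #whether resources move back when the original node recovers: on or off

node web3

node web4 #cluster nodes, named as reported by uname -n

ping 172.16.100.1 #ping node, usually the gateway, used to check network connectivity

crm respawn #manage resources with the CRM; haresources is ignored once this is enabled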

Copy it to the other node manually:

[root@web3 ~]# scp /usr/local/etc/ha.d/ha.cf web4:/usr/local/etc/ha.d/ha.cf

Set a password for the hacluster user:

[root@web4 ~]# passwd hacluster

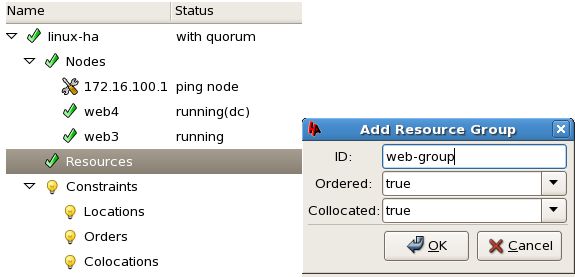

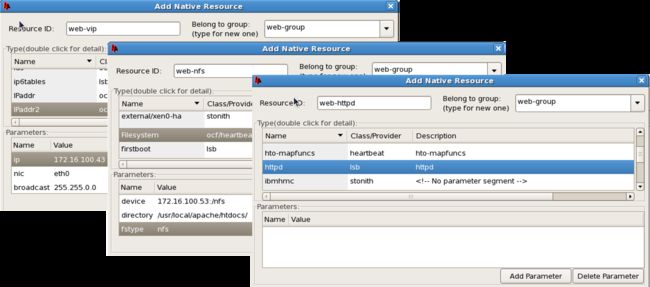

Define a resource group:

Add three resources to the group: the VIP, Filesystem and httpd.

Start the resource group:

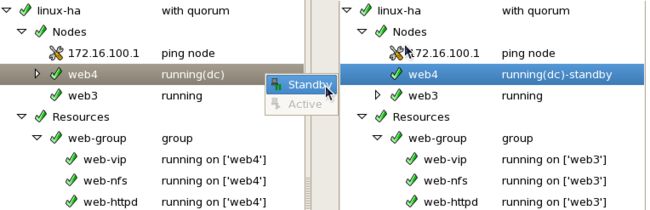

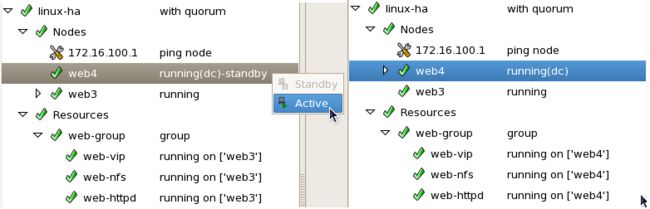

Put web4, which is currently running the services, into standby. The resources move to web3 as expected.

2.4.3 - Highly available web service with corosync + pacemaker + NFS

Install corosync and pacemaker:

[root@web3 corosync]# ls

cluster-glue-1.0.6-1.6.el5.i386.rpm libesmtp-1.0.4-5.el5.i386.rpm

cluster-glue-libs-1.0.6-1.6.el5.i386.rpm pacemaker-1.1.5-1.1.el5.i386.rpm

corosync-1.2.7-1.1.el5.i386.rpm pacemaker-cts-1.1.5-1.1.el5.i386.rpm

corosynclib-1.2.7-1.1.el5.i386.rpm pacemaker-libs-1.1.5-1.1.el5.i386.rpm

heartbeat-3.0.3-2.3.el5.i386.rpm resource-agents-1.0.4-1.1.el5.i386.rpm

heartbeat-libs-3.0.3-2.3.el5.i386.rpm

[root@web3 corosync]# yum -y --nogpgcheck localinstall *.rpm

[root@web3 corosync]# cp /etc/corosync/{corosync.conf.example,corosync.conf}

[root@web3 corosync]# vim /etc/corosync/corosync.conf

[root@web3 corosync]# cat /etc/corosync/corosync.conf

# Please read the corosync.conf.5 manual page

compatibility: whitetank

totem {

version: 2

secauth: on

threads: 2

interface {

ringnumber: 0

bindnetaddr: 172.16.0.0

mcastaddr: 226.97.10.11

mcastport: 5405

}

}

logging {

fileline: off

to_stderr: no

to_logfile: yes

to_syslog: no

logfile: /var/log/cluster/corosync.log

debug: off

timestamp: on

logger_subsys {

subsys: AMF

debug: off

}

}

amf {

mode: disabled

}

service {

ver: 0

name: pacemaker

}

aisexec {

user: root

group: root

}
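`bindnetaddr` is the network address of the subnet the cluster interface is on, not the host's own IP. corosync uses it to pick the local interface in that network. The nodes here use 172.16.0.0/16 addressing: web3 is 172.16.100.42, and the VIP is configured as /16. That gives a network address of 172.16.0.0. The sketch below shows the calculation. The `netaddr` function is hypothetical, and it assumes a shell with 64-bit arithmetic (bash, or dash on a 64-bit system).

```shell
#!/bin/sh
# Hypothetical helper: network address for an IPv4 address and prefix length,
# i.e. the value to put in corosync's bindnetaddr.
netaddr() {
    ip=$1 prefix=$2
    old_ifs=$IFS
    IFS=.
    set -- $ip                                   # split into four octets
    IFS=$old_ifs
    n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    n=$(( n & mask ))                            # clear the host bits
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

netaddr 172.16.100.42 16    # prints 172.16.0.0
```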

Generate the authentication key used for communication between nodes, and copy it to the other node:

[root@web3 ~]# cd /etc/corosync/

[root@web3 corosync]# corosync-keygen

[root@web3 corosync]# scp -p corosync.conf authkey web4:/etc/corosync/

Create the directory for corosync's log file on both nodes:

[root@web3 corosync]# mkdir /var/log/cluster

[root@web3 corosync]# ssh web4 'mkdir /var/log/cluster'

Start the cluster:

[root@web3 corosync]# /etc/init.d/corosync start

[root@web3 corosync]# ssh web4 '/etc/init.d/corosync start'

Disable STONITH and ignore loss of quorum:

[root@web3 corosync]# crm configure property stonith-enabled=false

[root@web3 corosync]# crm configure property no-quorum-policy=ignore

[root@web3 corosync]# crm configure show

node web3

node web4

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

Add the cluster resources:

[root@web3 corosync]# crm configure primitive web-vip ocf:heartbeat:IPaddr params ip=172.16.100.43

[root@web3 corosync]# crm configure primitive web-nfs ocf:heartbeat:Filesystem params device="172.16.100.53:/nfs" directory="/usr/local/apache/htdocs/" fstype="nfs" op start timeout=60s op stop timeout=60s

[root@web3 corosync]# crm configure primitive web-httpd lsb:httpd

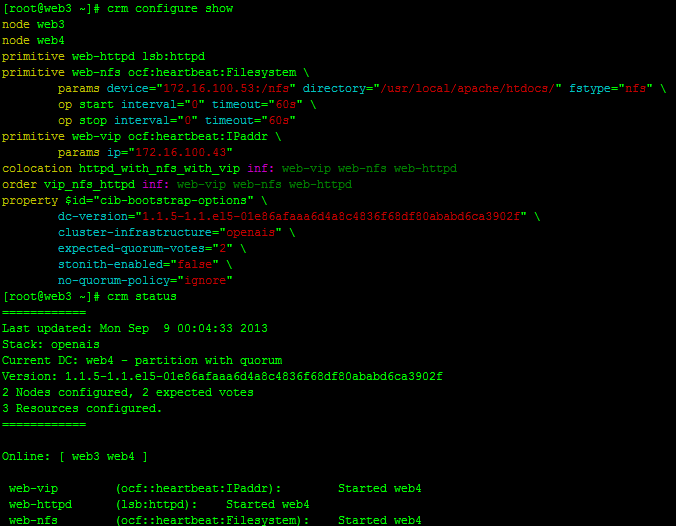

[root@web3 corosync]# crm configure show

node web3

node web4

primitive web-httpd lsb:httpd

primitive web-nfs ocf:heartbeat:Filesystem \

params device="172.16.100.53:/nfs" directory="/usr/local/apache/htdocs/" fstype="nfs" \

op start interval="0" timeout="60s" \

op stop interval="0" timeout="60s"

primitive web-vip ocf:heartbeat:IPaddr \

params ip="172.16.100.43"

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

Colocation constraint:

[root@web3 ~]# crm configure collocation httpd_with_nfs_with_vip inf: web-vip web-nfs web-httpd

Ordering constraint:

[root@web3 ~]# crm configure order vip_nfs_httpd mandatory: web-vip web-nfs web-httpd

All services are currently running on web4:

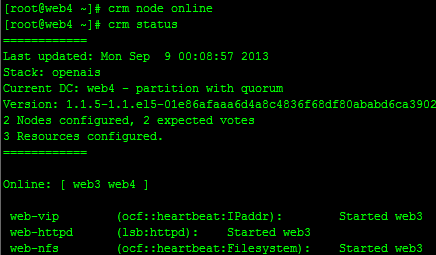

On web4, run:

[root@web4 ~]# crm node standby

All resources move to web3.

Bring web4 back online:

[root@web4 ~]# crm node online

The resources stay on web3.