雷神 (Lei Xiaohua) blog

http://blog.csdn.net/leixiaohua1020/article/details/42658139

FFmpeg compilation guide for macOS

http://trac.ffmpeg.org/wiki/CompilationGuide/MacOSX

7 handy FFmpeg tips

http://itindex.net/detail/4071-ffmpeg

Cutting and merging videos with ffmpeg

http://itindex.net/detail/38530-ffmpeg-合并-视频

FFmpeg manual: common ffplay commands

http://blog.chinaunix.net/uid-11344913-id-5750263.html

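The typical FFmpeg decoding workflow (these are the older API names; the newer equivalents used in the code below are given in parentheses):

1. av_register_all(); // register all container formats and codecs (deprecated and no longer needed since FFmpeg 4.0)
2. avformat_network_init(); // initialize network support, needed for network streams
3. av_open_input_file(); // read the file header and store the information in an AVFormatContext (now avformat_open_input())
4. av_find_stream_info(); // fill pFormatCtx->streams with the correct stream information (now avformat_find_stream_info())
5. CODEC_TYPE_VIDEO; // check each stream's type to find the video stream (now AVMEDIA_TYPE_VIDEO, or use av_find_best_stream())
6. avcodec_find_decoder(); // find the decoder
7. avcodec_open(); // open the decoder (now avcodec_open2())
8. avcodec_alloc_frame(); // allocate a frame to hold the decoded data (now av_frame_alloc())
9. av_read_frame(); // repeatedly read the next packet from the input
10. avcodec_decode_video(); // decode video packets into frames (now avcodec_decode_video2(), itself superseded by avcodec_send_packet() / avcodec_receive_frame())
11. avcodec_close(); // close the decoder
12. avformat_close_input_file(); // close the input file (now avformat_close_input())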
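Steps 9 and 10 are the core of the player: av_read_frame() returns packets from every stream in the file, so non-video packets must be skipped, and the decoder may need several packets before it reports a complete frame. The C sketch below shows only that control flow. MockPacket, MockDemuxer, mock_read_frame and step_frame are hypothetical stand-ins, not FFmpeg types or calls; step_frame has the same shape as the stepFrame method of SGVideoModel later in this article.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for AVPacket: only the fields the loop needs. */
typedef struct {
    int stream_index;
    int completes_frame; /* 1 if the mock decoder emits a frame after this packet */
} MockPacket;

/* Hypothetical stand-in for an opened AVFormatContext. */
typedef struct {
    const MockPacket *packets;
    size_t count;
    size_t next;
} MockDemuxer;

/* Plays the role of av_read_frame(): 0 on success, -1 at end of stream. */
static int mock_read_frame(MockDemuxer *d, MockPacket *pkt) {
    if (d->next >= d->count) return -1;
    *pkt = d->packets[d->next++];
    return 0;
}

/* Reads packets until the video "decoder" finishes a frame.
   Returns 1 if a frame was produced, 0 when the input is exhausted. */
static int step_frame(MockDemuxer *d, int video_stream) {
    assert(d != NULL);
    int frame_finished = 0;
    MockPacket pkt;
    while (!frame_finished && mock_read_frame(d, &pkt) >= 0) {
        if (pkt.stream_index == video_stream) {
            /* stand-in for avcodec_decode_video2(..., &frame_finished, &pkt) */
            frame_finished = pkt.completes_frame;
        }
        /* packets from other streams (e.g. audio) are ignored */
    }
    return frame_finished;
}
```

With packets `{0,0}, {1,1}, {0,1}, {1,0}, {0,1}` and video stream 0, the first call consumes three packets and returns 1, the second consumes two and returns 1, and the third returns 0 because the input is exhausted.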

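I. How this player works:

FFmpeg decodes the video into individual frames (images), and the player then displays those frames one after another at an interval derived from the frame rate.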

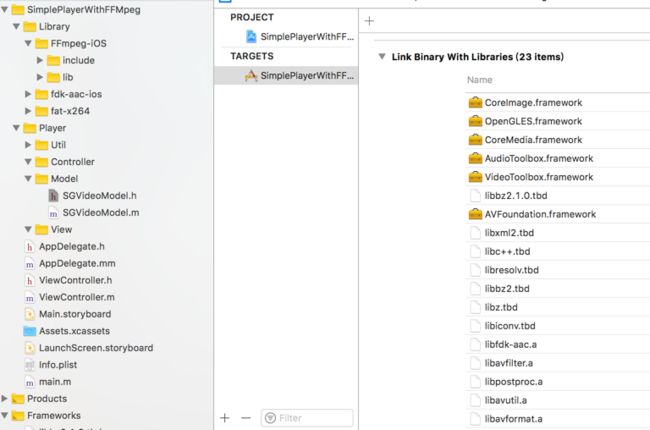
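The display interval comes from the stream's average frame rate, which FFmpeg stores as a rational number. This C sketch assumes a hypothetical Rational struct that mirrors FFmpeg's AVRational (num/den); effective_fps and frame_interval are illustrative names. It reproduces the fallback used in SGVideoModel (30 fps when the rate is unknown) and the timer interval 1 / fps, and shows why the rate should be kept as a double: 30000/1001 is about 29.97, which an int truncates to 29.

```c
#include <assert.h>

/* Hypothetical mirror of FFmpeg's AVRational. */
typedef struct {
    int num;
    int den;
} Rational;

/* avg_frame_rate as a double, falling back to 30 fps when either part is 0
   (the same rule SGVideoModel applies after opening the stream). */
static double effective_fps(Rational avg_frame_rate) {
    if (avg_frame_rate.num && avg_frame_rate.den)
        return (double)avg_frame_rate.num / avg_frame_rate.den;
    return 30.0;
}

/* Seconds between two displayed frames: the NSTimer interval 1 / fps. */
static double frame_interval(Rational avg_frame_rate) {
    double fps = effective_fps(avg_frame_rate);
    assert(fps > 0.0);
    return 1.0 / fps;
}
```

For a 25 fps stream the timer fires every 0.04 s; for a stream with no usable average rate it fires every 1/30 s.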

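II. Integrating FFmpeg into an iOS project

1. Download the build script: [FFmpeg-iOS-build-script](https://github.com/kewlbear/FFmpeg-iOS-build-script)

2. Build FFmpeg by following the README at the link above.

3. Integrate it into the project: create a new project, then add the compiled static libraries and header files to it.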

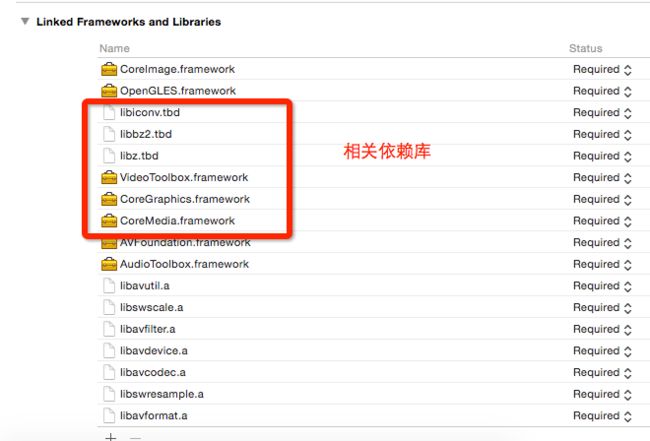

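Link the required dependency libraries.

Set the header search path (Header Search Paths in Build Settings). The path must be correct, otherwise the FFmpeg headers will not be found.

Press Command + B to build once and make sure the project compiles.

Implementation:

Create a new Objective-C class named SGVideoModel.

SGVideoModel.h: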

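```objc
#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>

@interface SGVideoModel : NSObject

/* The most recently decoded frame as a UIImage */
@property (nonatomic, strong, readonly) UIImage *currentImage;

/* Width and height of the source video frames */
@property (nonatomic, assign, readonly) int sourceWidth, sourceHeight;

/* Output image size. Defaults to the source size. */
@property (nonatomic, assign) int outputWidth, outputHeight;

/* Video duration in seconds */
@property (nonatomic, assign, readonly) double duration;

/* Current playback position in seconds */
@property (nonatomic, assign, readonly) double currentTime;

/* Frame rate of the video */
@property (nonatomic, assign, readonly) double fps;

/* Initialize with a video path (local file path or network URL) */
- (instancetype)initWithVideo:(NSString *)videoPath;

/* Switch to another video */
- (void)replaceTheResources:(NSString *)videoPath;

/* Reopen the current video so it can be played again */
- (void)replay;

/* Read and decode the next video frame. Returns NO when no more frames can be read. */
- (BOOL)stepFrame;

/* Seek to the nearest keyframe at or before the given time */
- (void)seekTime:(double)seconds;

@end
```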

SGVideoModel.m:

```objc
#import "SGVideoModel.h"
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>

@interface SGVideoModel ()
{
    AVFormatContext *formatCtx;
    AVCodecContext *codecCtx;
    AVFrame *frame;
    AVStream *stream;
    AVPacket packet;
    AVPicture picture;
    int videoStream;
    double fps;              // double, not int, so rates such as 29.97 are not truncated
    BOOL isReleaseResources;
}

@property (nonatomic, copy) NSString *currentPath;

@end

@implementation SGVideoModel

- (instancetype)initWithVideo:(NSString *)videoPath {
    if (!(self = [super init])) return nil;
    if ([self initializeResources:[videoPath UTF8String]]) {
        self.currentPath = [videoPath copy];
        return self;
    } else {
        return nil;
    }
}

- (void)dealloc {
    // Free the FFmpeg contexts even if playback never reached the end
    if (!isReleaseResources) [self releaseResources];
}

- (BOOL)initializeResources:(const char *)filePath {
    isReleaseResources = NO;
    AVCodec *pCodec;

    // Register all codecs and formats (deprecated and unnecessary since FFmpeg 4.0)
    avcodec_register_all();
    av_register_all();
    // Initialize networking so that network URLs can be opened
    avformat_network_init();

    // Open the video file
    if (avformat_open_input(&formatCtx, filePath, NULL, NULL) != 0) {
        NSLog(@"Failed to open the file");
        goto initError;
    }

    // Read the stream information
    if (avformat_find_stream_info(formatCtx, NULL) < 0) {
        NSLog(@"Failed to read the stream information");
        goto initError;
    }

    // Find the best video stream
    if ((videoStream = av_find_best_stream(formatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, &pCodec, 0)) < 0) {
        NSLog(@"No video stream found");
        goto initError;
    }

    // Get the codec context of the video stream (AVStream.codec is deprecated in newer FFmpeg)
    stream = formatCtx->streams[videoStream];
    codecCtx = stream->codec;

#if DEBUG
    // Print detailed information about the video stream
    av_dump_format(formatCtx, videoStream, filePath, 0);
#endif

    if (stream->avg_frame_rate.den && stream->avg_frame_rate.num) {
        fps = av_q2d(stream->avg_frame_rate);
    } else {
        fps = 30;
    }

    // Find the decoder
    pCodec = avcodec_find_decoder(codecCtx->codec_id);
    if (pCodec == NULL) {
        NSLog(@"No decoder found");
        goto initError;
    }

    // Open the decoder
    if (avcodec_open2(codecCtx, pCodec, NULL) < 0) {
        NSLog(@"Failed to open the decoder");
        goto initError;
    }

    // Allocate the video frame
    frame = av_frame_alloc();

    _outputWidth = codecCtx->width;
    _outputHeight = codecCtx->height;
    return YES;

initError:
    // Close the input if it was opened; the codec context belongs to it
    codecCtx = NULL;
    stream = NULL;
    if (formatCtx) avformat_close_input(&formatCtx);
    return NO;
}

/* Seek to the nearest keyframe at or before the given time */
- (void)seekTime:(double)seconds {
    // Reopen the file if it was released at the end of playback
    if (formatCtx == NULL && ![self initializeResources:[self.currentPath UTF8String]]) return;
    // Convert seconds into the stream's time_base units
    AVRational timeBase = formatCtx->streams[videoStream]->time_base;
    int64_t targetTs = (int64_t)((double)timeBase.den / timeBase.num * seconds);
    // targetTs is a timestamp, not a frame number, so AVSEEK_FLAG_FRAME must not be set
    avformat_seek_file(formatCtx, videoStream, 0, targetTs, targetTs, 0);
    avcodec_flush_buffers(codecCtx);
}

/* Read and decode the next video frame. Returns NO when no more frames can be read. */
- (BOOL)stepFrame {
    if (formatCtx == NULL) return NO;
    int frameFinished = 0;
    while (!frameFinished) {
        // Free the previous packet before reading the next one; the last video
        // packet is kept until the next call so currentTime can read its pts
        av_packet_unref(&packet);
        if (av_read_frame(formatCtx, &packet) < 0) break;
        if (packet.stream_index == videoStream) {
            avcodec_decode_video2(codecCtx, frame, &frameFinished, &packet);
        }
    }
    // End of the input (or a read error): release everything
    if (frameFinished == 0 && isReleaseResources == NO) {
        [self releaseResources];
    }
    return frameFinished != 0;
}

/* Switch to another video */
- (void)replaceTheResources:(NSString *)videoPath {
    if (!isReleaseResources) {
        [self releaseResources];
    }
    self.currentPath = [videoPath copy];
    [self initializeResources:[videoPath UTF8String]];
}

- (void)replay {
    // Release first: avformat_open_input() must be given a NULL context
    if (!isReleaseResources) {
        [self releaseResources];
    }
    [self initializeResources:[self.currentPath UTF8String]];
}

#pragma mark - Property accessors

- (void)setOutputWidth:(int)outputWidth {
    if (_outputWidth == outputWidth) return;
    _outputWidth = outputWidth;
}

- (void)setOutputHeight:(int)outputHeight {
    if (_outputHeight == outputHeight) return;
    _outputHeight = outputHeight;
}

- (UIImage *)currentImage {
    if (!frame || !frame->data[0]) return nil;
    return [self imageFromAVPicture];
}

- (double)duration {
    if (formatCtx == NULL) return 0;
    return (double)formatCtx->duration / AV_TIME_BASE;
}

- (double)currentTime {
    if (formatCtx == NULL || packet.pts == AV_NOPTS_VALUE) return 0;
    AVRational timeBase = formatCtx->streams[videoStream]->time_base;
    return packet.pts * (double)timeBase.num / timeBase.den;
}

- (int)sourceWidth {
    return codecCtx ? codecCtx->width : 0;
}

- (int)sourceHeight {
    return codecCtx ? codecCtx->height : 0;
}

- (double)fps {
    return fps;
}

#pragma mark - Internal methods

- (UIImage *)imageFromAVPicture {
    // AVPicture and avpicture_* are deprecated in newer FFmpeg (see av_image_alloc())
    avpicture_free(&picture);
    avpicture_alloc(&picture, AV_PIX_FMT_RGB24, _outputWidth, _outputHeight);

    // Convert from the decoder's pixel format (not always YUV420P) to RGB24 at the output size
    struct SwsContext *imgConvertCtx = sws_getContext(frame->width, frame->height, codecCtx->pix_fmt,
                                                      _outputWidth, _outputHeight, AV_PIX_FMT_RGB24,
                                                      SWS_FAST_BILINEAR, NULL, NULL, NULL);
    if (imgConvertCtx == NULL) return nil;
    sws_scale(imgConvertCtx, (const uint8_t *const *)frame->data, frame->linesize, 0, frame->height,
              picture.data, picture.linesize);
    sws_freeContext(imgConvertCtx);

    // Wrap the RGB24 buffer in a CGImage: 8 bits per component, 24 bits per pixel, no alpha
    CGBitmapInfo bitmapInfo = kCGBitmapByteOrderDefault;
    CFDataRef data = CFDataCreate(kCFAllocatorDefault,
                                  picture.data[0],
                                  picture.linesize[0] * _outputHeight);
    CGDataProviderRef provider = CGDataProviderCreateWithCFData(data);
    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGImageRef cgImage = CGImageCreate(_outputWidth,
                                       _outputHeight,
                                       8,
                                       24,
                                       picture.linesize[0],
                                       colorSpace,
                                       bitmapInfo,
                                       provider,
                                       NULL,
                                       NO,
                                       kCGRenderingIntentDefault);
    UIImage *image = [UIImage imageWithCGImage:cgImage];
    CGImageRelease(cgImage);
    CGColorSpaceRelease(colorSpace);
    CGDataProviderRelease(provider);
    CFRelease(data);
    return image;
}

#pragma mark - Releasing resources

- (void)releaseResources {
    isReleaseResources = YES;
    // Free the RGB picture
    avpicture_free(&picture);
    // Free the last packet
    av_packet_unref(&packet);
    // Free the YUV frame and its buffers; also sets frame to NULL
    av_frame_free(&frame);
    // Close the decoder; the context itself is owned by the stream
    if (codecCtx) avcodec_close(codecCtx);
    codecCtx = NULL;
    stream = NULL;
    // Close the input; also sets formatCtx to NULL
    if (formatCtx) avformat_close_input(&formatCtx);
    avformat_network_deinit();
}

@end
```
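seekTime:, currentTime and duration all convert between seconds and FFmpeg timestamps. Stream timestamps count ticks of the stream's time_base (num/den seconds per tick), while AVFormatContext.duration is expressed in AV_TIME_BASE units, i.e. microseconds. A minimal C sketch of those three conversions; the helper names and the MICROSECONDS_PER_SECOND constant are hypothetical, chosen only for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Same value as FFmpeg's AV_TIME_BASE (microseconds per second). */
#define MICROSECONDS_PER_SECOND 1000000

/* seconds -> stream timestamp, as seekTime: computes its seek target. */
static int64_t seconds_to_ts(double seconds, int num, int den) {
    assert(num > 0 && den > 0);
    return (int64_t)(seconds * den / num);
}

/* stream timestamp -> seconds, as currentTime does with packet.pts. */
static double ts_to_seconds(int64_t ts, int num, int den) {
    assert(num > 0 && den > 0);
    return (double)ts * num / den;
}

/* container duration (microseconds) -> seconds, as the duration getter does. */
static double duration_seconds(int64_t duration_us) {
    return (double)duration_us / MICROSECONDS_PER_SECOND;
}
```

For example, with a 1/90000 time base, 2.5 s corresponds to timestamp 225000, and a container duration of 5500000 microseconds is 5.5 s.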

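In the storyboard, drag in a UIImageView, two buttons (play and replay) and two labels (FPS and time), then connect them to the outlets and actions below.

ViewController.m: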

```objc
#import "ViewController.h"
#import "SGVideoModel.h"

// Linear interpolation: A * (1 - C) + B * C
#define LERP(A,B,C) ((A)*(1.0-C)+(B)*C)

@interface ViewController ()
@property (weak, nonatomic) IBOutlet UIImageView *imageView;
@property (weak, nonatomic) IBOutlet UIButton *playBtn;
@property (weak, nonatomic) IBOutlet UIButton *replayBtn;
@property (weak, nonatomic) IBOutlet UILabel *fpsLabel;
@property (weak, nonatomic) IBOutlet UILabel *timeLabel;
@property (nonatomic, strong) SGVideoModel *video;
@property (nonatomic, assign) float lastFrameTime;
@end

@implementation ViewController

@synthesize imageView, fpsLabel, playBtn, video;

- (void)viewDidLoad {
    [super viewDidLoad];

    // Play a network video
    self.video = [[SGVideoModel alloc] initWithVideo:@"http://leyu-dev-livestorage.b0.upaiyun.com/leyulive.pull.dev.iemylife.com/leyu/e5d0490f46f343479418a21c42b779aa/recorder20171228103406.mp4"];

    // Play a local video from the app bundle
    // self.video = [[SGVideoModel alloc] initWithVideo:[[NSBundle mainBundle] pathForResource:@"Dalshabet" ofType:@"mp4"]];
    // self.video = [[SGVideoModel alloc] initWithVideo:@"/Users/king/Desktop/Stellar.mp4"];
    // self.video = [[SGVideoModel alloc] initWithVideo:@"/Users/king/Downloads/Worth it - Fifth Harmony ft.Kid Ink - May J Lee Choreography.mp4"];
    // self.video = [[SGVideoModel alloc] initWithVideo:@"/Users/king/Downloads/4K.mp4"];

    // Play a live stream
    // self.video = [[SGVideoModel alloc] initWithVideo:@"http://live.hkstv.hk.lxdns.com/live/hks/playlist.m3u8"];

    // Optionally change the output size
    // video.outputWidth = 800;
    // video.outputHeight = 600;

    // NSLog(@"video duration: %f (%@)", video.duration, [self dealTime:video.duration]);
    // NSLog(@"source size: %d x %d", video.sourceWidth, video.sourceHeight);
    // NSLog(@"output size: %d x %d", video.outputWidth, video.outputHeight);
    // NSLog(@"fps --> %.2f", video.fps);
}

- (IBAction)playBtnClick:(UIButton *)sender {
    playBtn.enabled = NO;
    _lastFrameTime = -1;
    // Rewind to the start (reopens the file if it was released at the end of playback)
    [video seekTime:0.0];
    // Fire once per frame interval
    [NSTimer scheduledTimerWithTimeInterval:1.0 / video.fps
                                     target:self
                                   selector:@selector(displayNextFrame:)
                                   userInfo:nil
                                    repeats:YES];
}

- (IBAction)replayBtnClick:(UIButton *)sender {
    // Only allowed while nothing is playing
    if (playBtn.enabled) {
        [video replay];
        [self playBtnClick:playBtn];
    }
}

- (void)displayNextFrame:(NSTimer *)timer {
    NSTimeInterval startTime = [NSDate timeIntervalSinceReferenceDate];
    self.timeLabel.text = [self dealTime:video.currentTime];
    if (![video stepFrame]) {
        // End of the video: stop the timer and allow playing again
        [timer invalidate];
        [playBtn setEnabled:YES];
        return;
    }
    imageView.image = video.currentImage;
    // Frames per second sustainable at the measured decode + conversion time, smoothed with LERP
    float frameTime = 1.0 / ([NSDate timeIntervalSinceReferenceDate] - startTime);
    if (_lastFrameTime < 0) {
        _lastFrameTime = frameTime;
    } else {
        _lastFrameTime = LERP(frameTime, _lastFrameTime, 0.8);
    }
    fpsLabel.text = [NSString stringWithFormat:@"fps %.0f", _lastFrameTime];
}

// Format a time in seconds as HH:MM:SS
- (NSString *)dealTime:(double)time {
    int tns = time;
    int thh = tns / 3600;
    int tmm = (tns % 3600) / 60;
    int tss = tns % 60;
    return [NSString stringWithFormat:@"%02d:%02d:%02d", thh, tmm, tss];
}

@end
```
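The two small calculations in ViewController can be checked on their own. In this C sketch the helper names are hypothetical: smooth_fps is the same exponential smoothing as LERP(frameTime, _lastFrameTime, 0.8), which keeps 80% of the previous estimate and mixes in 20% of the new sample, and split_hms / format_hms use the same integer arithmetic as dealTime:.

```c
#include <assert.h>
#include <stdio.h>

/* sample * (1 - keep) + previous * keep, i.e. LERP(sample, previous, keep). */
static double smooth_fps(double sample, double previous, double keep) {
    return sample * (1.0 - keep) + previous * keep;
}

/* Whole seconds split into hours, minutes and seconds (fraction dropped). */
static void split_hms(double time, int *h, int *m, int *s) {
    assert(time >= 0.0);
    int tns = (int)time;
    *h = tns / 3600;
    *m = (tns % 3600) / 60;
    *s = tns % 60;
}

/* Writes "HH:MM:SS" into buf, like dealTime: does with NSString. */
static void format_hms(double time, char *buf, size_t size) {
    int h, m, s;
    split_hms(time, &h, &m, &s);
    snprintf(buf, size, "%02d:%02d:%02d", h, m, s);
}
```

For example, 3725.9 seconds is shown as 01:02:05 because the fractional part is dropped, and smoothing a new sample of 100 fps against a previous estimate of 50 fps gives 60 fps.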

If you get the following linker errors, add the system CoreMedia and VideoToolbox frameworks to the project:

```
Undefined symbols for architecture arm64:
  "_CMBlockBufferCreateWithMemoryBlock", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionWaitForAsynchronousFrames", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionInvalidate", referenced from:
      _av_videotoolbox_default_free in libavcodec.a(videotoolbox.o)
  "_CMSampleBufferCreate", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionDecodeFrame", referenced from:
      _videotoolbox_common_end_frame in libavcodec.a(videotoolbox.o)
  "_kCMFormatDescriptionExtension_SampleDescriptionExtensionAtoms", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
  "_CMVideoFormatDescriptionCreate", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
  "_VTDecompressionSessionCreate", referenced from:
      _av_videotoolbox_default_init2 in libavcodec.a(videotoolbox.o)
ld: symbol(s) not found for architecture arm64
clang: error: linker command failed with exit code 1 (use -v to see invocation)
```

The implementation is based on http://bbs.520it.com/forum.php?mod=viewthread&tid=707