Building a Hadoop High-Availability Cluster

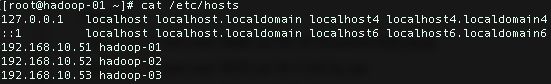

The host environment is as follows:

| Hostname | IP | OS | Software versions | Install directory | Data directory |
|---|---|---|---|---|---|
| hadoop-01 | 192.168.10.51 | CentOS 7.6 | hadoop-2.7.7, jdk1.8, zookeeper-3.4.6 | /usr/local/hadoop | /data/hadoop |
| hadoop-02 | 192.168.10.52 | CentOS 7.6 | hadoop-2.7.7, jdk1.8, zookeeper-3.4.6 | /usr/local/hadoop | /data/hadoop |
| hadoop-03 | 192.168.10.53 | CentOS 7.6 | hadoop-2.7.7, jdk1.8, zookeeper-3.4.6 | /usr/local/hadoop | /data/hadoop |

The processes running on each node after installation are as follows:

| hadoop-01 | hadoop-02 | hadoop-03 |
|---|---|---|
| NodeManager | NodeManager | NodeManager |
| NameNode | NameNode | |
| DataNode | DataNode | DataNode |
| DFSZKFailoverController | DFSZKFailoverController | |
| JournalNode | JournalNode | JournalNode |
| ResourceManager | ResourceManager | |
| QuorumPeerMain | QuorumPeerMain | QuorumPeerMain |

The detailed setup steps are recorded below.

1. Configure hostnames and hostname resolution (all 3 hosts)
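
This step can be sketched as follows, assuming the IP-to-hostname mapping from the host table above and root access on each node. `cluster_hosts` and `add_cluster_hosts` are hypothetical helper names, not part of any tool. The hostname itself is set with `hostnamectl`, which ships with CentOS 7.

```shell
#!/usr/bin/env bash
set -eu

# Hypothetical helper: the IP/hostname pairs from the host table above.
cluster_hosts() {
  cat <<'EOF'
192.168.10.51 hadoop-01
192.168.10.52 hadoop-02
192.168.10.53 hadoop-03
EOF
}

# Hypothetical helper: append each pair to the given hosts file unless the
# hostname is already listed there, so running it twice adds nothing new.
add_cluster_hosts() {
  local hosts_file="$1"
  cluster_hosts | while read -r ip name; do
    if ! grep -qw -- "$name" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

On each node, as root, run `hostnamectl set-hostname hadoop-01` (substituting that node's own name), then `add_cluster_hosts /etc/hosts`.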

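2. Configure passwordless SSH login between the servers (all 3 hosts)

In high-availability mode, failover relies on SSH. The `sshfence` fencing method configured below logs in to the previously active NameNode and kills it before the standby takes over. The start-dfs.sh and start-yarn.sh scripts also use SSH to launch daemons on the other nodes. Passwordless SSH login between the hosts is therefore required.

hadoop-01 is used as the example below; apply the same configuration on the other two servers.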

    # Step 1: generate an RSA key pair
    [root@hadoop-01 ~]# ssh-keygen -t rsa
    # Press Enter three times: no passphrase is set, and the private and public
    # keys are written to the default location, ~/.ssh
    [root@hadoop-01 ~]# ll .ssh/
    total 16
    -rw------- 1 root root 1989 Jul 24 14:18 authorized_keys
    -rw------- 1 root root 1679 Jul 15 11:43 id_rsa
    -rw-r--r-- 1 root root 396 Jul 15 11:43 id_rsa.pub
    -rw-r--r-- 1 root root 1311 Jul 24 16:41 known_hosts
    # Step 2: copy the public key to the remote machines with ssh-copy-id
    # (sshd on these hosts listens on port 9431, so unless that port is set in
    # ~/.ssh/config, ssh-copy-id also needs -p 9431)
    [root@hadoop-01 ~]# ssh-copy-id -i .ssh/id_rsa.pub [email protected]
    [root@hadoop-01 ~]# ssh-copy-id -i .ssh/id_rsa.pub [email protected]
    [root@hadoop-01 ~]# ssh-copy-id -i .ssh/id_rsa.pub [email protected]
    # Step 3: test passwordless login
    [root@hadoop-01 ~]# ssh -p 9431 [email protected]
    Last login: Mon Aug 19 15:30:09 2019 from 192.168.10.45
    Welcome to Alibaba Cloud Elastic Compute Service !
    [root@hadoop-02 ~]#
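
The `ssh-copy-id` calls can also be written as a loop. This sketch makes three assumptions: the hostnames from step 1 resolve, sshd listens on port 9431 as in the `ssh -p 9431` test above, and the key pair is the default `~/.ssh/id_rsa`. `distribute_key`, `verify_login`, `NODES` and the `DRY_RUN` switch are illustrative names, not part of OpenSSH.

```shell
#!/usr/bin/env bash
set -eu

SSH_PORT="${SSH_PORT:-9431}"            # assumption: the non-default sshd port of this cluster
NODES="hadoop-01 hadoop-02 hadoop-03"

# Copy this node's public key to every node; ssh-copy-id asks for the root
# password once per node. With DRY_RUN=1 the commands are only printed.
distribute_key() {
  for node in $NODES; do
    ${DRY_RUN:+echo} ssh-copy-id -i ~/.ssh/id_rsa.pub -p "$SSH_PORT" "root@$node"
  done
}

# BatchMode=yes makes ssh fail instead of prompting, so a node that still
# asks for a password shows up as an error rather than a hanging prompt.
verify_login() {
  for node in $NODES; do
    ${DRY_RUN:+echo} ssh -p "$SSH_PORT" -o BatchMode=yes "root@$node" hostname
  done
}
```

Run `distribute_key` on each of the three hosts and then `verify_login`. Every node should print its own hostname without a password prompt.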

3. Install Hadoop

3.1 Edit the configuration files

(1) Extract the Hadoop package, rename the extracted directory to hadoop, and move it to /usr/local.
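
Step (1) can be sketched as follows, assuming the archive is the official `hadoop-2.7.7.tar.gz`, whose top-level directory is `hadoop-2.7.7`. `install_hadoop` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
set -eu

# Unpack the Hadoop tarball into PREFIX (default /usr/local) and rename the
# versioned directory to PREFIX/hadoop, the install directory used throughout.
install_hadoop() {
  local tarball="$1" prefix="${2:-/usr/local}"
  if [ -e "$prefix/hadoop" ]; then
    echo "$prefix/hadoop already exists; not overwriting" >&2
    return 1
  fi
  tar -xzf "$tarball" -C "$prefix"
  # Assumption: the archive's top-level directory is hadoop-2.7.7
  mv "$prefix/hadoop-2.7.7" "$prefix/hadoop"
}
```

As root, `install_hadoop hadoop-2.7.7.tar.gz` produces /usr/local/hadoop. It refuses to run if that directory already exists.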

(2) Edit the configuration files; all of them are in /usr/local/hadoop/etc/hadoop.

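Edit core-site.xml as follows:

    <configuration>
      <!-- the logical nameservice defined in hdfs-site.xml, not a single host -->
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://cluster</value>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
      </property>
    </configuration>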

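Edit hdfs-site.xml as follows:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <!-- logical name of the HA nameservice -->
      <property>
        <name>dfs.nameservices</name>
        <value>cluster</value>
      </property>
      <!-- IDs of the two NameNodes in the nameservice -->
      <property>
        <name>dfs.ha.namenodes.cluster</name>
        <value>nn01,nn02</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.cluster.nn01</name>
        <value>hadoop-01:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.cluster.nn01</name>
        <value>hadoop-01:50070</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.cluster.nn02</name>
        <value>hadoop-02:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.cluster.nn02</name>
        <value>hadoop-02:50070</value>
      </property>
      <!-- JournalNode quorum that stores the shared edit log -->
      <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://hadoop-01:8485;hadoop-02:8485;hadoop-03:8485/cluster</value>
      </property>
      <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/data/hadoop/journaldata</value>
      </property>
      <!-- per-nameservice form of dfs.ha.automatic-failover.enabled -->
      <property>
        <name>dfs.ha.automatic-failover.enabled.cluster</name>
        <value>true</value>
      </property>
      <!-- lets clients find the currently active NameNode -->
      <property>
        <name>dfs.client.failover.proxy.provider.cluster</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
      </property>
      <!-- fence the old active NameNode over SSH as root on port 9431 -->
      <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence(root:9431)</value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/root/.ssh/id_rsa</value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.connect-timeout</name>
        <value>30000</value>
      </property>
      <!-- dfs.name.dir and dfs.data.dir are the deprecated names of
           dfs.namenode.name.dir and dfs.datanode.data.dir; 2.7 accepts both -->
      <property>
        <name>dfs.name.dir</name>
        <value>/data/hadoop/tmp/dfs/name</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/data/hadoop/tmp/dfs/data</value>
      </property>
    </configuration>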
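
Each `*-site.xml` file is a `<configuration>` element holding `<property>` name/value pairs. If you prefer to generate these files rather than edit them by hand, a sketch like the one below works. `write_site_xml` is a hypothetical helper. It does not escape XML special characters, which none of the values in this setup contain, and it overwrites the target file.

```shell
#!/usr/bin/env bash
set -eu

# Hypothetical helper: write a Hadoop *-site.xml file from name=value pairs.
# Usage: write_site_xml OUTPUT_FILE name=value [name=value ...]
# Values are written verbatim (no XML escaping), so they must not contain <, > or &.
write_site_xml() {
  local out="$1" pair name value
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    for pair in "$@"; do
      name="${pair%%=*}"   # everything before the first '='
      value="${pair#*=}"   # everything after the first '='
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "$name" "$value"
    done
    echo '</configuration>'
  } > "$out"
}
```

For example, `write_site_xml /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS=hdfs://cluster ha.zookeeper.quorum=hadoop-01:2181,hadoop-02:2181,hadoop-03:2181` writes the same two properties as the core-site.xml above.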

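Edit yarn-site.xml as follows:

    <configuration>
      <property>
        <name>yarn.resourcemanager.ha.enabled</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.resourcemanager.cluster-id</name>
        <value>yrc</value>
      </property>
      <property>
        <name>yarn.resourcemanager.ha.rm-ids</name>
        <value>rm1,rm2</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname.rm1</name>
        <value>hadoop-01</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname.rm2</name>
        <value>hadoop-02</value>
      </property>
      <property>
        <name>yarn.resourcemanager.zk-address</name>
        <value>hadoop-01:2181,hadoop-02:2181,hadoop-03:2181</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>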

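Create mapred-site.xml, which does not exist by default, by copying the template with `cp mapred-site.xml.template mapred-site.xml`. Then set its content to:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>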

Edit the slaves file, which lists the hosts that run a DataNode and a NodeManager:

    hadoop-01
    hadoop-02
    hadoop-03

Edit hadoop-env.sh to set the JDK location:

    # The java implementation to use.
    export JAVA_HOME=/usr/java/jdk1.8.0_131
    # Needed when sshd does not listen on the default port 22
    export HADOOP_SSH_OPTS="-p 9431"
    # Directory where the Hadoop daemons write their pid files
    export HADOOP_PID_DIR=/usr/local/hadoop/pids
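
The start scripts launch daemons over SSH in non-interactive shells, which may not read the login profile. This is why JAVA_HOME is set explicitly in hadoop-env.sh. The sketch below is a quick sanity check. `check_java_home` is a hypothetical helper: it reads the last unquoted `export JAVA_HOME=` line of the given file and confirms that `bin/java` is executable under that path.

```shell
#!/usr/bin/env bash
set -eu

# Hypothetical check: print the JAVA_HOME exported in a hadoop-env.sh file and
# fail unless $JAVA_HOME/bin/java is executable on this machine.
# Assumes an unquoted value, as in the hadoop-env.sh shown above.
check_java_home() {
  local env_file="$1" java_home
  java_home=$(sed -n 's/^export JAVA_HOME=//p' "$env_file" | tail -n 1)
  if [ -z "$java_home" ] || [ ! -x "$java_home/bin/java" ]; then
    echo "invalid JAVA_HOME in $env_file: '$java_home'" >&2
    return 1
  fi
  echo "$java_home"
}
```

Running `check_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh` on every node catches a JDK installed at a different path on one machine.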

Configure the Hadoop environment variables in /etc/profile.d/hadoop.sh (for example, with `vim /etc/profile.d/hadoop.sh`):

    export HADOOP_HOME=/usr/local/hadoop
    export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    # the native libraries live in lib/native, not lib
    export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
    export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Then load it into the current shell:

    source /etc/profile.d/hadoop.sh

3.2 Copy Hadoop to the other machines

From hadoop-01, copy the configured installation to the other two nodes:

    scp -r /usr/local/hadoop root@hadoop-02:/usr/local/
    scp -r /usr/local/hadoop root@hadoop-03:/usr/local/
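
The copy step can also be written as a loop. This sketch assumes sshd listens on port 9431 as elsewhere in this setup; note that scp takes the port as uppercase `-P`. It also copies `/etc/profile.d/hadoop.sh`, so that `hadoop` and `hdfs` are on the PATH on every node. `NODES`, `copy_hadoop` and `DRY_RUN` are illustrative names.

```shell
#!/usr/bin/env bash
set -eu

SSH_PORT="${SSH_PORT:-9431}"
NODES="hadoop-02 hadoop-03"   # every node except hadoop-01, where the files are edited

# Copy the configured installation and the environment file to the other
# nodes. With DRY_RUN=1 the scp commands are printed instead of executed.
copy_hadoop() {
  for node in $NODES; do
    ${DRY_RUN:+echo} scp -P "$SSH_PORT" -r /usr/local/hadoop "root@$node:/usr/local/"
    ${DRY_RUN:+echo} scp -P "$SSH_PORT" /etc/profile.d/hadoop.sh "root@$node:/etc/profile.d/"
  done
}
```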

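3.3 Start Hadoop

Before Hadoop is started for the first time, a few one-time initialization steps are needed. The ZooKeeper ensemble (QuorumPeerMain) must already be running on all three nodes, because `hdfs zkfc -formatZK` writes to it.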

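(1) Start the JournalNode on all three machines. This is needed only before the very first start. Afterwards, start-dfs.sh starts the JournalNodes itself, so they no longer have to be started by hand.

    cd /usr/local/hadoop/sbin
    sh hadoop-daemon.sh start journalnode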
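
`hdfs namenode -format` initializes the shared edits directory on the JournalNodes, so it fails if it cannot reach them. Before formatting, you can confirm that each JournalNode is listening on port 8485, the port in the `qjournal://` URI above. The sketch below uses bash's `/dev/tcp` redirection and coreutils `timeout`. `wait_for_port` is a hypothetical helper.

```shell
#!/usr/bin/env bash
set -eu

# Return 0 once HOST:PORT accepts a TCP connection, or 1 after TRIES failed
# attempts (default 30) spaced one second apart. Relies on bash's /dev/tcp.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    if timeout 1 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

For example: `for h in hadoop-01 hadoop-02 hadoop-03; do wait_for_port "$h" 8485 || echo "JournalNode on $h is not reachable"; done`.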

(2) On hadoop-01, format the NameNode and initialize the failover controller's state in ZooKeeper:

    hdfs namenode -format
    hdfs zkfc -formatZK

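(3) Copy the NameNode metadata to the standby by running the command below on hadoop-02. It fetches the metadata from the NameNode on hadoop-01, so that NameNode must be running first. For example, start it on hadoop-01 with `sh hadoop-daemon.sh start namenode`.

    /usr/local/hadoop/bin/hdfs namenode -bootstrapStandby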

Once these steps are complete, Hadoop can be started.

Run the following on hadoop-01:

    # Start HDFS
    cd /usr/local/hadoop/sbin
    sh start-dfs.sh
    # Start YARN
    sh start-yarn.sh
    # Start the ZooKeeper failover controller (ZKFC)
    sh hadoop-daemon.sh start zkfc

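start-yarn.sh only starts a ResourceManager on the machine where it runs. Start the second ResourceManager and the second ZKFC on hadoop-02:

    /usr/local/hadoop/sbin/yarn-daemon.sh start resourcemanager
    /usr/local/hadoop/sbin/hadoop-daemon.sh start zkfc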

Startup can also be shortened with start-all.sh. The script is deprecated in Hadoop 2 but still works; it simply calls start-dfs.sh and start-yarn.sh.

Start commands:

    # hadoop-01
    /usr/local/hadoop/sbin/start-all.sh
    /usr/local/hadoop/sbin/hadoop-daemon.sh start zkfc
    # hadoop-02
    /usr/local/hadoop/sbin/yarn-daemon.sh start resourcemanager
    /usr/local/hadoop/sbin/hadoop-daemon.sh start zkfc

Stop commands:

    # hadoop-01
    /usr/local/hadoop/sbin/stop-all.sh
    /usr/local/hadoop/sbin/hadoop-daemon.sh stop zkfc
    # hadoop-02
    /usr/local/hadoop/sbin/yarn-daemon.sh stop resourcemanager
    /usr/local/hadoop/sbin/hadoop-daemon.sh stop zkfc
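
The per-host start and stop commands can be driven from hadoop-01 with a single script. This sketch makes the same assumptions as before: passwordless root SSH on port 9431 and Hadoop installed in /usr/local/hadoop on every node. It runs exactly the commands listed above, in the same order. The file name (for example `hadoop-ha.sh`), the `run_on` helper and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
set -eu

SSH_PORT="${SSH_PORT:-9431}"
SBIN=/usr/local/hadoop/sbin

# Run a command on a host over SSH, or only print it when DRY_RUN=1.
run_on() {
  local host="$1"
  shift
  ${DRY_RUN:+echo} ssh -p "$SSH_PORT" "root@$host" "$@"
}

cluster_start() {
  run_on hadoop-01 "$SBIN/start-all.sh"
  run_on hadoop-01 "$SBIN/hadoop-daemon.sh start zkfc"
  run_on hadoop-02 "$SBIN/yarn-daemon.sh start resourcemanager"
  run_on hadoop-02 "$SBIN/hadoop-daemon.sh start zkfc"
}

cluster_stop() {
  run_on hadoop-01 "$SBIN/stop-all.sh"
  run_on hadoop-01 "$SBIN/hadoop-daemon.sh stop zkfc"
  run_on hadoop-02 "$SBIN/yarn-daemon.sh stop resourcemanager"
  run_on hadoop-02 "$SBIN/hadoop-daemon.sh stop zkfc"
}

# Dispatch only when an argument is given, e.g. `bash hadoop-ha.sh start`.
if [ "$#" -gt 0 ]; then
  case "$1" in
    start) cluster_start ;;
    stop)  cluster_stop ;;
    *)     echo "usage: $0 start|stop" >&2; exit 2 ;;
  esac
fi
```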

3.4 Check the processes on each node

hadoop-01

    [root@hadoop-01 ~]# jps | grep -v Jps
    26849 NodeManager
    2770 QuorumPeerMain
    27331 DFSZKFailoverController
    26308 DataNode
    26537 JournalNode
    26154 NameNode
    26733 ResourceManager

hadoop-02

    [root@hadoop-02 ~]# jps | grep -v Jps
    7489 JournalNode
    7281 NameNode
    627 QuorumPeerMain
    8233 DFSZKFailoverController
    8123 ResourceManager
    7389 DataNode
    7631 NodeManager

hadoop-03

    [root@hadoop-03 ~]# jps | grep -v Jps
    21762 QuorumPeerMain
    29476 NodeManager
    29271 DataNode
    29370 JournalNode
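
Instead of comparing `jps` output with the process table by eye, a small check can list the missing daemons. `missing_daemons` is a hypothetical helper: it reads `jps` output on stdin and prints every expected name that does not appear. The expected lists are taken from the process table at the top of this article.

```shell
#!/usr/bin/env bash
set -eu

# Read `jps` output (PID and class name per line) on stdin and print every
# daemon name given as an argument that is not in that output.
missing_daemons() {
  local running daemon
  running=$(awk '{print $2}')
  for daemon in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx -- "$daemon"; then
      echo "$daemon"
    fi
  done
}

# Expected daemons per node, from the process table above.
EXPECTED_MASTER="NameNode DataNode JournalNode DFSZKFailoverController ResourceManager NodeManager QuorumPeerMain"  # hadoop-01, hadoop-02
EXPECTED_WORKER="DataNode JournalNode NodeManager QuorumPeerMain"  # hadoop-03
```

On hadoop-01 and hadoop-02, run `jps | missing_daemons $EXPECTED_MASTER`; on hadoop-03, run `jps | missing_daemons $EXPECTED_WORKER`. The variables are left unquoted so they split into separate names. Empty output means nothing is missing.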

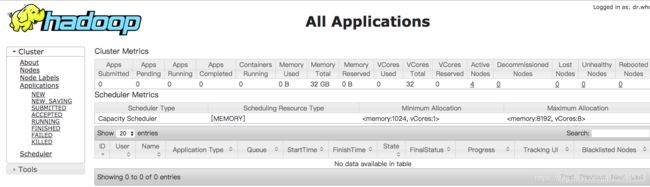

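3.5 View cluster information in the web UI

(1) Open http://192.168.10.51:50070 in a browser.

The page shows that hadoop-01 is currently the active node, i.e. the active NameNode.

(2) Open http://192.168.10.52:50070 in a browser.

The page shows that hadoop-02 is currently the standby node, i.e. the standby NameNode.

(3) To view the YARN web console, open http://192.168.10.51:8088 in a browser.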

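3.6 Test NameNode high availability

(1) Kill the NameNode process on hadoop-01, then check the state of hadoop-02 in the browser. It changes to active, which shows that failover works.

(2) Restart the NameNode on hadoop-01 with sh start-dfs.sh, then open the hadoop-01 page in the browser. hadoop-01 is now in the standby state.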
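
The same checks can be made from the command line instead of the web UI. `hdfs haadmin -getServiceState <serviceId>` and `yarn rmadmin -getServiceState <rm-id>` print `active` or `standby` for the NameNode IDs (`nn01`, `nn02`) and ResourceManager IDs (`rm1`, `rm2`) configured above. `show_ha_state` is a hypothetical wrapper. When a daemon is down the underlying command fails, and this sketch reports that daemon as `unreachable`.

```shell
#!/usr/bin/env bash
set -eu

# Print the HA state of both NameNodes and both ResourceManagers, using the
# IDs from dfs.ha.namenodes.cluster and yarn.resourcemanager.ha.rm-ids.
show_ha_state() {
  local id state
  for id in nn01 nn02; do
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || state=unreachable
    echo "namenode $id: $state"
  done
  for id in rm1 rm2; do
    state=$(yarn rmadmin -getServiceState "$id" 2>/dev/null) || state=unreachable
    echo "resourcemanager $id: $state"
  done
}
```

Run it before and after killing the NameNode on hadoop-01. You would expect nn01 to go from `active` to `unreachable` and nn02 from `standby` to `active`.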