Python爬虫学习日志(10)

实例3:股票数据定向爬虫 ,使用两种爬取方法

- 编写爬虫

- 1. 功能描述

- 候选数据网站的选择

- 2. 技术路线:requests-re

- 源代码

- 代码优化

- 3. 技术路线:Scrapy爬虫框架

- 步骤

- 源代码

- 代码优化

- 更多

- 4. 存在的问题

编写爬虫

1. 功能描述

- 目标:获取上交所和深交所所有股票的名称和交易信息。

- 输出:保存到本地文件中。

候选数据网站的选择

- 选取原则:股票信息静态存在于HTML页面中,非js代码生成,没有Robots协议限制。

- 选取方法:浏览器F12,源代码查看等。

- 选取心态:不要纠结于某个网站,多找信息源尝试。

此处选取的网站(2019/12/3可用):东方财富网和老虎社区 - 股票代码查询 :http://quote.eastmoney.com/stock_list.html

- 个股信息 :https://www.laohu8.com/quotes

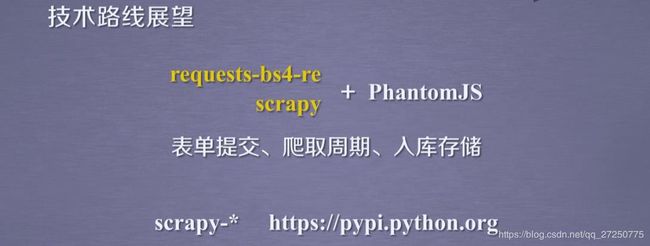

2. 技术路线:requests-re

源代码

import requests

from bs4 import BeautifulSoup

import traceback

import re

def getHTMLText(url):

try:

r = requests.get(url, timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

def getStockList(lst, stockURL):

html = getHTMLText(stockURL)

soup = BeautifulSoup(html, 'html.parser')

a = soup.find_all('a')

for i in a:

try:

href = i.attrs['href']

lst.append(re.findall(r"[s][hz]\d{6}", href)[0])#正则表达式,以s开头,中间是h或z,后面有6个数字

except:

continue

def getStockInfo(lst, stockURL, fpath):

for stock in lst:

url = stockURL + stock + ".html"

html = getHTMLText(url)

try:

if html == "":

continue

infoDict = {}

soup = BeautifulSoup(html, 'html.parser')

stockInfo = soup.find('div', attrs= {'class':'stock-bets'})

name = stockInfo.find_all(attrs = {'class':'bets-name'})[0]

infoDict.update({'股票名称':name.text.split()[0]})#split空格分割

keyList = stockInfo.find_all('dt')

valueList = stockInfo.find_all('dd')

for i in range(len(keyList)):

key = keyList[i].text

val = valueList[i].text

infoDict[key] = val#添加到字典中

with open(fpath, 'a', encoding= 'utf-8') as f:

f.write(str(infoDict) + '\n')

except:

traceback.print_exc()#获得错误信息

continue

return ""

def main():

stock_list_url = 'http://quote.eastmoney.com/stock_list.html'

stock_info_url = 'https://www.laohu8.com/quotes'

output_file = 'E://BaiduStockInfo.txt'

slist = []

getStockList(slist, stock_list_url)

getStockInfo(slist, stock_info_url, output_file)

print(main)

输出结果:

网站问题暂未解决,无结果。

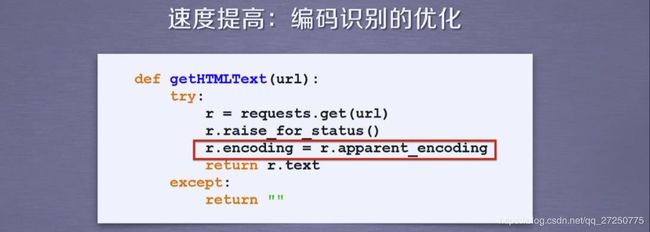

代码优化

def getHTMLText(url, code= 'utf-8'):#code默认utf-8编码

try:

r = requests.get(url, timeout=30)

r.raise_for_status()

# r.encoding = r.apparent_encoding

r.encoding = code #直接赋值编码类型,提高速度

return r.text

except:

return ""

def getStockInfo(lst, stockURL, fpath):

count = 0

for stock in lst:

url = stockURL + stock + ".html"

html = getHTMLText(url)

try:

if html == "":

continue

infoDict = {}

soup = BeautifulSoup(html, 'html.parser')

stockInfo = soup.find('div', attrs= {'class':'stock-bets'})

name = stockInfo.find_all(attrs = {'class':'bets-name'})[0]

infoDict.update({'股票名称':name.text.split()[0]})#split空格分割

keyList = stockInfo.find_all('dt')

valueList = stockInfo.find_all('dd')

for i in range(len(keyList)):

key = keyList[i].text

val = valueList[i].text

infoDict[key] = val#添加到字典中

with open(fpath, 'a', encoding= 'utf-8') as f:

f.write(str(infoDict) + '\n')

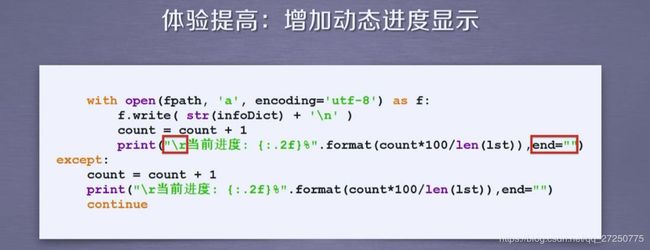

count = count + 1

#打印当前进度的百分比

# \r: 转义符,将打印字符串最后的光标提到字符串的头部,下次打印时会覆盖上次的信息,实现不换行的显示。.2f: 浮点型,两位小数

print('\r当前速度:{:.2f}%'.format(count*100/len(lst)),end='')

except:

traceback.print_exc()#获得错误信息

continue

return ""

3. 技术路线:Scrapy爬虫框架

步骤

- 建立工程和Spider模板

- 编写Spider

- 编写ITEM Pipeline

步骤1

- >scrapy startproject BaiduStocks

- >cd BaiduStocks

- >scrapy genspider stock baidu.com

- 进一步修改spiders/stocks.py文件

步骤2

- 配置stocks.py文件

- 修改对返回页面的处理

- 修改对新增URL爬取请求的处理

步骤3

- 配置pipelines.py文件

- 定义对爬取项(Scraped Item)的处理类

- 配置ITEM_PIPELINES选项

执行Scrapy爬虫

- >scrapy crawl stocks

源代码

stocks.py

# -*- coding: utf-8 -*-

import scrapy

import re

class StockSpider(scrapy.Spider):

name = 'stocks'

start_urls = ['http://quote.eastmoney.com/stockslist.html']

def parse(self, response):

for href in response.css('a::attr(href)').extract():

try:

stock = re.findall(r"[s][hz]\d{6}", href)[0]

url = 'https://gupiao.baidu.com/stock/' + stock + '.html'

yield scrapy.Reauest(url, callback= self.parse_stock)

except:

continue

def parse_stock(self, response):

infoDict = {}

stockInfo = response.css('.stock-bets')

name = stockInfo.css('bets-name').extract()[0]

keyList = stockInfo.css('dt').extract()

valueList = stockInfo.css('dd').extract()

for i in range(len(keyList)):

key = re.findall(r'>.*', keyList[i])[0][1:-5]

try:

val = re.findall(r'\d+\.?.*',valueList[i])[0][0:-5]

except:

val = '--'

infoDict[key] = val

infoDict.update(

{'股票名称':re.findall('\s.*\(',name)[0].split()[0] + \

re.findall('\>.*\<', name)[0][1:-1]})

yield infoDict

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

class BaidustocksPipeline(object):

def process_item(self, item, spider):

return item

class BaidustocksInfoPipeline(object):

def open_spider(self, spider):

self.f = open('BaiduStockInfo.txt', 'w')

def close_spider(self, spider):

self.f.close()

def process_item(self, item, spider):

try:

line = str(dict(item)) + '\n'

self.f.write(line)

except:

pass

return item

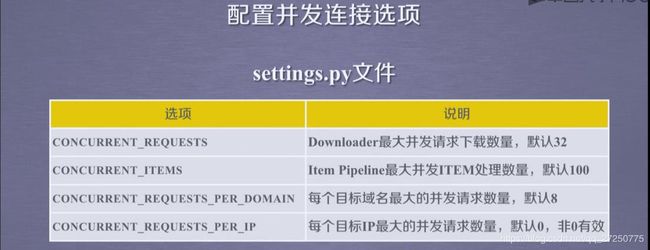

setting.py

将该部分进行以下修改

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'BaiduStocks.pipelines.BaidustocksInfoPipeline': 300,

}

输出结果:

网站问题暂未解决,无结果。

代码优化

更多

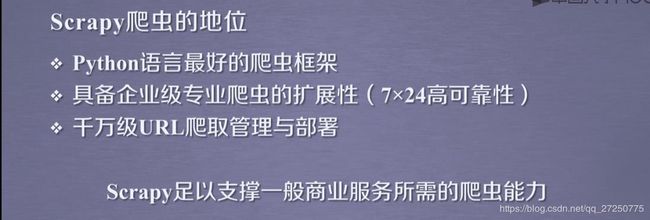

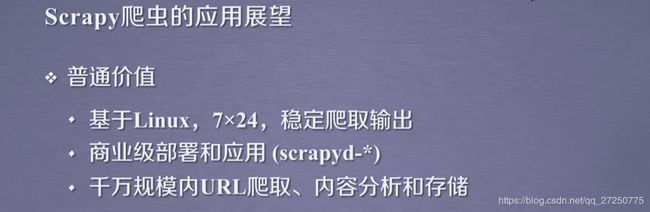

Scrapy爬虫的功能和特点:

- 持续爬取

- 商业服务

- 高可靠性

4. 存在的问题

- 视频中爬取股票的实例网站(百度股票和东方财富网),现在已不可用或存在非静态代码,后续修改。

- 此实例为单线程爬虫,运行较慢,请耐心等待。

视频学习部分OVER!