1. Reading the data

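import csv

file_path = r'C:\Users\39780\PycharmProjects\大作业\RobitStu\SMSSpamCollection'
email_data = []   # list of (preprocessed) emails
email_label = []  # list of labels ('ham' / 'spam')
# preprocessing() is defined in step 2; run that definition before this loop
with open(file_path, 'r', encoding='utf-8') as email:  # open the file, closed automatically
    # Each line is label<TAB>text; QUOTE_NONE keeps quote characters inside messages literal
    csv_reader = csv.reader(email, delimiter='\t', quoting=csv.QUOTE_NONE)
    for line in csv_reader:
        email_label.append(line[0])
        email_data.append(preprocessing(line[1]))  # preprocess every email
print("Total number of emails:", len(email_data))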
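The SMSSpamCollection file stores one message per line in the form `label<TAB>text`. The following minimal sketch uses two made-up sample lines in place of the real file. It shows how `csv.reader` with `delimiter='\t'` splits each line into a label and a text, and how `quoting=csv.QUOTE_NONE` keeps a leading double quote as part of the message.

```python
import csv
import io

# Two hypothetical lines in the label<TAB>text layout (not real dataset rows)
sample = 'ham\t"Hi" see you at 5\nspam\tWIN a FREE prize now!\n'

labels, texts = [], []
# QUOTE_NONE: double quotes are treated as ordinary characters, not field quoting
reader = csv.reader(io.StringIO(sample), delimiter='\t', quoting=csv.QUOTE_NONE)
for row in reader:
    labels.append(row[0])  # first column: label
    texts.append(row[1])   # second column: message text

print(labels)  # ['ham', 'spam']
print(texts)
```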

2. Data preprocessing

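import nltk
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer
# Needs NLTK data downloaded once via nltk.download(): punkt, stopwords, wordnet and the POS tagger model

def get_wordnet_pos(treebank_tag):
    # Map a Penn Treebank POS tag to the WordNet POS argument used by the lemmatizer
    if treebank_tag.startswith('J'):
        return nltk.corpus.wordnet.ADJ   # adjective
    elif treebank_tag.startswith('V'):
        return nltk.corpus.wordnet.VERB  # verb
    elif treebank_tag.startswith('N'):
        return nltk.corpus.wordnet.NOUN  # noun
    elif treebank_tag.startswith('R'):
        return nltk.corpus.wordnet.ADV   # adverb
    else:
        return nltk.corpus.wordnet.NOUN  # default: treat as noun

stops = set(stopwords.words('english'))  # stopwords, as a set for fast lookup
lmtzr = WordNetLemmatizer()

def preprocessing(text):
    # Tokenize: split into sentences, then into words
    tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]
    # Lowercase and drop short tokens (fewer than 3 characters)
    tokens = [token.lower() for token in tokens if len(token) >= 3]
    # Remove stopwords (after lowercasing, so "The" is removed as well)
    tokens = [token for token in tokens if token not in stops]
    tag = nltk.pos_tag(tokens)  # POS tagging
    # Lemmatize each token using its POS
    tokens = [lmtzr.lemmatize(token, pos=get_wordnet_pos(tag[i][1])) for i, token in enumerate(tokens)]
    # Join with spaces; ''.join() would glue all words into one string
    return ' '.join(tokens)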
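NLTK's tokenizers, tagger and lemmatizer need downloaded data. The sketch below therefore swaps them for a regular-expression tokenizer and a tiny hand-picked stopword list. Both are hypothetical stand-ins, and the sketch does no lemmatization. It only shows the order of the steps and why the tokens are joined with spaces: later steps split the text back into words.

```python
import re

# Hypothetical mini stopword list; the real code uses NLTK's English list
STOPS = {"the", "and", "you", "for", "are", "now"}

def simple_preprocessing(text):
    # Stand-in tokenizer: runs of letters/digits (NLTK's word_tokenize is smarter)
    tokens = re.findall(r"[A-Za-z0-9]+", text)
    # Lowercase, keep tokens with at least 3 characters
    tokens = [t.lower() for t in tokens if len(t) >= 3]
    # Drop stopwords after lowercasing
    tokens = [t for t in tokens if t not in STOPS]
    # Join with spaces so the text can be split into words again later
    return " ".join(tokens)

print(simple_preprocessing("You have WON a FREE prize! Call now"))
# have won free prize call
```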

3. Data splitting: training set and test set

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=target)

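# 3. Data splitting
from sklearn.model_selection import train_test_split  # scikit-learn's built-in splitter
# x_train / x_test: training / test emails; y_train / y_test: their labels
# stratify=email_label keeps the ham/spam ratio the same in both parts
x_train, x_test, y_train, y_test = train_test_split(
    email_data, email_label, test_size=0.2, random_state=0, stratify=email_label)
print("Number of test labels (matches the number of test emails):", len(y_test))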
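`stratify=email_label` keeps the ham/spam ratio the same in the training and test sets. The following pure-Python sketch illustrates that idea with a hypothetical helper. It is not scikit-learn's actual algorithm. It splits each class separately with the same test fraction and then merges the parts.

```python
import random

def stratified_split(data, labels, test_size=0.2, seed=0):
    # Hypothetical helper illustrating stratification, not sklearn's implementation
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(data, labels):
        by_class.setdefault(y, []).append(x)
    x_train, x_test, y_train, y_test = [], [], [], []
    for y, items in by_class.items():
        items = items[:]
        rng.shuffle(items)
        n_test = round(len(items) * test_size)  # same fraction from every class
        x_test += items[:n_test]
        y_test += [y] * n_test
        x_train += items[n_test:]
        y_train += [y] * (len(items) - n_test)
    return x_train, x_test, y_train, y_test

# 80 ham + 20 spam toy messages: the test set keeps the 4:1 ratio
data = [f"msg{i}" for i in range(100)]
labels = ["ham"] * 80 + ["spam"] * 20
x_train, x_test, y_train, y_test = stratified_split(data, labels)
print(y_test.count("ham"), y_test.count("spam"))  # 16 4
```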

4. Text feature extraction

sklearn.feature_extraction.text.CountVectorizer

https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.CountVectorizer.html?highlight=sklearn%20feature_extraction%20text%20tfidfvectorizer

sklearn.feature_extraction.text.TfidfVectorizer

https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.TfidfVectorizer.html?highlight=sklearn%20feature_extraction%20text%20tfidfvectorizer#sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

Observing the relationship between emails and vectors

Mapping a vector back to its email

# 4. Text feature extraction, i.e. vectorization: each word is one feature
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
tfidf2 = TfidfVectorizer()
# Turn the raw text into a (sparse) feature matrix
X_train = tfidf2.fit_transform(x_train)  # learn the vocabulary and idf on the training set
X_test = tfidf2.transform(x_test)  # reuse them for the test set
print(X_train)
print("X_train.toarray() as dense vectors:", X_train.toarray())
print("X_train shape:", X_train.shape)
print("X_test shape:", X_test.shape)
# Count all words
email_txt = []
for email in email_data:
    email_txt.extend(email.split())
print("Total number of words:", len(email_txt))
print("Number of distinct words:", len(set(email_txt)))
print("Vocabulary built by fit:", tfidf2.vocabulary_)  # word -> column index
# Map a vector back to its email
row0 = X_train[0].toarray().ravel()  # dense vector of training email 0
print("X_train.toarray()[0]:", row0)
a = np.flatnonzero(row0)  # indices of the non-zero entries of the flattened vector
print("Indices of the non-zero entries:", a, "count:", len(a))
print(row0[a])  # TF-IDF weights at those positions
b = tfidf2.vocabulary_  # vocabulary
key_list = []
for key, value in b.items():
    if value in a:
        key_list.append(key)
print("Words at the non-zero positions:", key_list)
print("x_train[0]", x_train[0])
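The loop over `tfidf2.vocabulary_` above checks every vocabulary entry against the non-zero indices. The same lookup can run in the other direction: invert the word-to-column dictionary once, then read off the words at the non-zero positions. This is a minimal sketch on a tiny hand-made vocabulary and vector; the values are hypothetical and do not come from the real model.

```python
# Hypothetical vocabulary (word -> column index) and one TF-IDF row
vocabulary = {"call": 0, "free": 1, "home": 2, "prize": 3, "see": 4}
row = [0.52, 0.61, 0.0, 0.6, 0.0]

# Invert the mapping once: column index -> word
index_to_word = {index: word for word, index in vocabulary.items()}
# Positions of the non-zero weights (what np.flatnonzero returns)
nonzero = [i for i, value in enumerate(row) if value != 0]
words = [index_to_word[i] for i in nonzero]
print(nonzero)  # [0, 1, 3]
print(words)    # ['call', 'free', 'prize']
```

In scikit-learn 1.0 and later, `tfidf2.get_feature_names_out()[a]` should give the same words directly, because the returned array is ordered by column index.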

4. Model selection

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

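Why choose this model?

Answer: I chose multinomial naive Bayes. Each email is treated as a sequence of words drawn from the bag of words. Its word counts therefore follow the distribution of N independent random trials, i.e. a multinomial distribution, which is exactly what multinomial naive Bayes models. GaussianNB, by contrast, assumes continuous, normally distributed features, which fits word-frequency data poorly.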
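The following small from-scratch sketch makes the multinomial assumption concrete. It is a hypothetical toy, not MultinomialNB's code. A message's score for a class is log P(class) plus, for each word, count × log P(word | class). Word probabilities use add-one (Laplace) smoothing, which is also what MultinomialNB's default `alpha=1.0` does.

```python
import math
from collections import Counter

# Hypothetical toy training set of already-preprocessed messages
train = [
    ("win free prize", "spam"),
    ("free call win", "spam"),
    ("see you home", "ham"),
    ("call you later", "ham"),
]

vocab = {w for text, _ in train for w in text.split()}
class_docs = Counter(label for _, label in train)  # documents per class
word_counts = {c: Counter() for c in class_docs}   # word counts per class
for text, label in train:
    word_counts[label].update(text.split())

def log_posterior(text, c, alpha=1.0):
    # log P(c) + sum over words of count * log P(word | c), Laplace-smoothed
    total = sum(word_counts[c].values())
    score = math.log(class_docs[c] / len(train))
    for word, n in Counter(text.split()).items():
        if word not in vocab:
            continue  # ignore words never seen in training
        p = (word_counts[c][word] + alpha) / (total + alpha * len(vocab))
        score += n * math.log(p)
    return score

def predict(text):
    # Pick the class with the highest (unnormalized) log posterior
    return max(class_docs, key=lambda c: log_posterior(text, c))

print(predict("free prize"))     # spam
print(predict("see you later"))  # ham
```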

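# Model building
from sklearn.naive_bayes import MultinomialNB  # multinomial naive Bayes
mnb = MultinomialNB()
mnb.fit(X_train, y_train)  # train the model with fit
y_mnb = mnb.predict(X_test)  # predict on the test set to judge how good the model is
print("Multinomial NB predictions (ham or spam) for the test emails:", y_mnb)
print("True test labels, for comparison with the predictions:", y_test)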

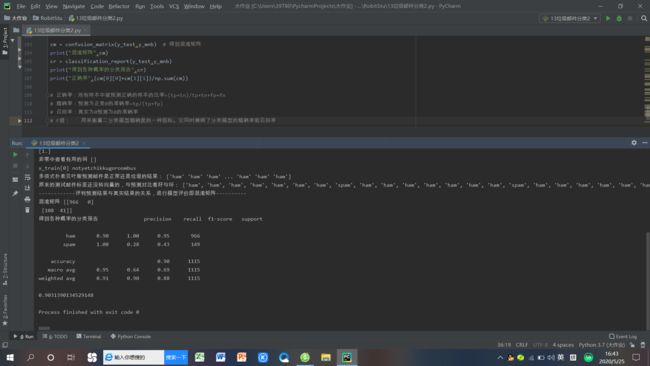

5. Model evaluation: confusion matrix and classification report

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_mnb)  # rows: true labels, columns: predicted labels

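What the confusion matrix means: each row is a true class and each column a predicted class. scikit-learn sorts the labels by default, so the order here is ['ham', 'spam']. cm[0][0] counts ham predicted as ham, cm[0][1] ham misclassified as spam, cm[1][0] spam misclassified as ham, and cm[1][1] spam predicted as spam. The diagonal holds the correct predictions.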
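The following minimal pure-Python sketch shows how such a matrix is counted. The helper `confusion` and the labels are hypothetical. Rows are true classes, columns are predicted classes, and the labels are sorted, matching scikit-learn's default order.

```python
def confusion(y_true, y_pred):
    # Hypothetical helper: count (true, predicted) pairs into a matrix
    labels = sorted(set(y_true) | set(y_pred))  # e.g. ['ham', 'spam']
    index = {label: i for i, label in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1  # row: true class, column: predicted class
    return labels, cm

y_true = ["ham", "ham", "ham", "spam", "spam"]
y_pred = ["ham", "ham", "spam", "spam", "ham"]
labels, cm = confusion(y_true, y_pred)
print(labels)  # ['ham', 'spam']
print(cm)      # [[2, 1], [1, 1]]
```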

from sklearn.metrics import classification_report

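What accuracy, precision, recall and the F-score each mean

Accuracy: the fraction of all samples that are predicted correctly = (tp+tn)/(tp+tn+fp+fn)

Precision: among the samples predicted as the positive class (class 0 here), the fraction that really belong to it = tp/(tp+fp)

Recall: among the samples whose true class is 0, the fraction predicted as 0 = tp/(tp+fn)

F-score: a single measure of a binary classifier that takes both precision and recall into account. The usual F1 score is their harmonic mean, 2 × precision × recall / (precision + recall).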
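The definitions above can be checked with a short sketch on hypothetical counts. With ham (class 0) as the positive class and labels ordered ['ham', 'spam'], the counts map onto the confusion matrix as tp = cm[0][0], fn = cm[0][1], fp = cm[1][0] and tn = cm[1][1].

```python
def metrics(tp, fp, fn, tn):
    accuracy = (tp + tn) / (tp + tn + fp + fn)  # correct / all
    precision = tp / (tp + fp)                  # correct among predicted positives
    recall = tp / (tp + fn)                     # found among actual positives
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return accuracy, precision, recall, f1

# Hypothetical counts, not results from the real model
acc, p, r, f1 = metrics(tp=90, fp=10, fn=5, tn=45)
print(round(acc, 3), round(p, 3), round(r, 3), round(f1, 3))  # 0.9 0.9 0.947 0.923
```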

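print("------------Comparing predictions with the true labels: evaluating the model with a confusion matrix----------")
# Confusion matrix and classification report (accuracy, precision, recall)
import numpy as np
from sklearn.metrics import confusion_matrix  # confusion matrix
from sklearn.metrics import classification_report  # classification report
cm = confusion_matrix(y_test, y_mnb)  # rows: true labels, columns: predicted labels
print("Confusion matrix:", cm)
cr = classification_report(y_test, y_mnb)
print("Classification report (precision, recall, F1 per class):", cr)
print("Accuracy:", (cm[0][0] + cm[1][1]) / np.sum(cm))  # correct predictions / all predictions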

6. Comparison and summary

How do the results compare if CountVectorizer is used to generate the text features instead of TfidfVectorizer?

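TfidfVectorizer() works better than CountVectorizer(). CountVectorizer() only considers how often each word occurs. Each row holds the word counts of one training text, and every word that appears in the training texts becomes a separate feature column. With binary=True it records only whether a word is present or absent.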
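The following minimal standard-library sketch shows the count matrix described above, using hypothetical texts. CountVectorizer's real tokenizer and options differ. There is one column per vocabulary word in sorted order, one row per text, and each cell holds the raw count.

```python
from collections import Counter

docs = ["free prize free", "call home", "free call"]  # hypothetical texts

vocab = sorted({w for d in docs for w in d.split()})  # one column per word
matrix = []
for d in docs:
    counts = Counter(d.split())
    matrix.append([counts[w] for w in vocab])  # raw term counts for this text

print(vocab)   # ['call', 'free', 'home', 'prize']
print(matrix)  # [[0, 2, 0, 1], [1, 0, 1, 0], [1, 1, 0, 0]]
```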

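TfidfVectorizer() combines two parts, tf and idf, and multiplies them to get tf-idf. tf measures how often a word occurs in a given training text (term frequency = occurrences of the word in the text / total number of words in the text). idf is a weighting factor that adjusts the term frequency. In its textbook form, idf = log(total number of texts / number of texts containing the word). The more common a word is, the larger the denominator and the smaller the inverse document frequency, approaching 0. scikit-learn's TfidfVectorizer differs in the details by default: tf is the raw count, idf = ln((1 + n) / (1 + df)) + 1 (smooth_idf=True), and each row is then L2-normalized. As a result its idf never falls below 1.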
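The following sketch recomputes tf-idf by hand on hypothetical texts, following scikit-learn's documented defaults: raw counts as tf, smoothed idf and L2 row normalization. It illustrates the weighting only; the real vectorizer also handles tokenization and sparse storage. Words that occur in more texts get a smaller idf.

```python
import math
from collections import Counter

docs = ["free prize free", "call home", "free call"]  # hypothetical texts
vocab = sorted({w for d in docs for w in d.split()})
n = len(docs)
# Document frequency: in how many texts each word appears
df = {w: sum(w in d.split() for d in docs) for w in vocab}
# Smoothed idf as in scikit-learn's default: ln((1 + n) / (1 + df)) + 1
idf = {w: math.log((1 + n) / (1 + df[w])) + 1 for w in vocab}

rows = []
for d in docs:
    counts = Counter(d.split())
    row = [counts[w] * idf[w] for w in vocab]  # tf (raw count) * idf
    norm = math.sqrt(sum(v * v for v in row))  # L2 length of the row
    rows.append([v / norm for v in row])       # normalized tf-idf vector

for w in vocab:
    print(w, round(idf[w], 3))
```

Here "call" and "free" appear in two of the three texts, so their idf is lower than that of "home" and "prize", which appear in only one.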