python 详解

- 1 Types and Operations

- 1-1 Built-in Objects preview

- 1-2 Numbers

- 1-3 String

- 1-3-1 Sequence Operations

- 1-3-2 Immutable

- 1-3-3 Type-Specific Methods

- 1-3-4 Getting Help

- 1-3-5 Unicode Strings

- 1-3-6 Pattern Matching

- 1-4 Lists

- 1-4-1 Sequence Operations

- 1-4-2 Type-Specific Operations

- 1-4-2 Bounds Checking

- 1-4-3 Nesting

- 1-4-4 Comprehensions

- 1-5 Dictionaries

- 1-5-1 Mapping Operations

- 1-5-2 Nesting Revisited

- 1-5-3 Missing Keys if Tests

- 1-5-4 Sorting Keys for Loops

- 1-5-5 Iteration and Optimization

- 1-6 Tuples

- 1-7 Files

- 1-7-1 Binary Bytes Files

- 1-7-2 Unicode Text files

- 1-8 Other Core Types

- 1-8-1 How to break your codes flexibility

- 2 Statements and Syntax

- 2-1 Introducing Python Statements

- 2-2 Iterations and Comprehensions

- 2-2-1 The Iteration Protocol File Iterators

- 2-2-2 The full iteration protocol

- 2-2-3 List Comprehensions A First Detailed Look

- 2-2-4 List Comprehensions Basics

- 2-2-4 Using List Comprehensions on Files

- 2-2-5 Extended List Comprehension Syntax

- 2-2-5-1 Filter clauses if

- 2-2-5-2 Nested loops for

- 3 The Documentation interlude

- 3-1 Python Documentation Sources

- 3-2 Comments

- 3-3 The dir Function

- 3-4 Docstrings doc

- 3-4-1 User-defined docstrings

- 3-4-2 Built-in docstrings

- 3-5 PyDoc The help Function

- 3-6 PyDoc HTML Reports

- 3-6-1 Python 32 and later PyDocs all-browser mode

- 3-6-2 Python 32 and earlier GUI client

- 3-7 Beyond docstrings Sphinx

- 4 Functions and Generators

- 4-1 Function Basics

- 4-2 Coding Functions

- 4-2-1 def Statements

- 4-2-2 def Executes at Runtime

- 4-3 Scopes

- 4-3-1 Python Scope Basics

- 4-3-1-1 Scope Details

- 4-3-1-2 Name Resolution The LEGB Rule

- 4-3-2 Scope Example

- 4-3-3 The Built-in Scope

- 4-3-4 Scopes and Nested Functions

- 4-3-4-1 Nested Scope Details

- 4-3-4-2 Nested Scope Example

- 4-3-4-3 Factory Functions Closures

-

- A simple function factory

-

- 4-3-1 Python Scope Basics

- 4-4 Arguments

- 4-4-1 Argument-Passing Basics

- 4-4-1-1 Arguments and Shared References

- 4-4-1-2 Avoiding Mutable Argument Changes

- 4-4-1-3 Simulating Output Parameters and Multiple Results

- 4-4-2 Special Argument-Matching Modes

- 4-4-2-1 Argument Matching Basics

- 4-4-2-2 Argument Matching Syntax

- 4-4-2-3 The Gritty Details

- 4-4-3 Arbitrary Arguments Examples

- 4-4-3-1 Calls Unpacking arguments

- 4-4-4 Python 3X Keyword-Only Arguments

- 4-4-1 Argument-Passing Basics

- 4-5 Advanced Function Topics

- 4-5-1 functions Design Concepts

- 4-5-2 Function Objects Attributes and Annotations

- 4-5-2-1 Indirect Function Calls First Class Objects

- 4-5-2-2 Function Introspection

- 4-5-2-3 Function Attributes

- 4-5-2-4 Function Annotations in 3X

- 4-5-3 Anonymous Functions lambda

- 4-5-3-1 lambda Basics

- 4-5-4 Functional Programming Tools

- 4-5-4-1 Mapping Functions over Iterables map

- 4-5-4-2 Selecting Items in Iterables filter

- 4-5-4-3 Combining Items in Iterables reduce

- 4-6 Comprehensions and Generations

- 4-6-1 List Comprehensions Versus map

- 4-6-2 Adding Tests and Nested Loops filter

- 4-6-2 Formal comprehension syntax

- 4-6-3 Dont Abuse List Comprehensions KISS

- 4-6-3-1 On the other hand performance conciseness expressiveness

- 4-7 Generator Functions and Expressions

- 4-7-1 Generator Functions yield Versus return

- 4-7-1-1 State suspension

- 4-7-1-2 Iteration protocol integration

- 4-7-1-3 Generator functions in action

- 4-7-1-4 Why generator functions

- 4-7-1-5 Extended generator function protocol send versus next

- 4-7-2 Generator Expressions Iterables Meet Comprehensions

- 4-7-3 Generator Functions Versus Generator Expressions

- 4-7-4 Generators Are Single-Iteration Objects

- 4-7-5 Preview User-defined iterables in classes

- 4-7-1 Generator Functions yield Versus return

- 4-8 The Benchmarking Interlude

- 4-8-1 Timing Iteration Alternatives

- 4-8-1-1 Timing Module Homegrown

- 4-8-1 Timing Iteration Alternatives

- 5 Modules and Packages

- 5-1 Modules The Big Picture

- 5-2-1 Python Program Architecture

- 5-2-1-1 How to Structure a Program

- 5-2-1-2 Imports and Attributes

- 5-2-1-3 Standard Library Modules

- 5-2-2 How Imports Work

- 5-2-2-1 Find it

- 5-2-2-2 Compile it Maybe

- 5-2-2-3 Run It

- 5-2-3 Byte Code Files pycache in Python 32

- 5-2-4 The Module Search Path

- 5-2-4-1 The syspath List

- 5-2-1 Python Program Architecture

- 5-1 Modules The Big Picture

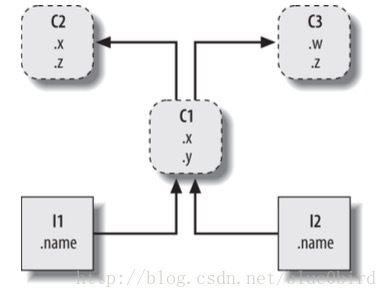

- 6 Classes and OOP

- 6-1 OOP The Big Picture

- 6-1-1 Why Use Classes

- 6-1-2 Attribute Inheritance Search

- 6-1-3 Classes and Instances

- 6-1-4 Method Calls

- 6-1-5 Coding Class Trees

- 6-1-6 Operator Overloading

- 6-1-7 Polymorphism and classes

- 6-2 Class Coding Basics

- 6-2-1 Classes Generate Multiple Instance Objects

- 6-2-1-1 Class Objects Provide Default Behavior

- 6-2-1-2 Instance Objects Are Concrete Items

- 6-2-1-3 A First Example

- 6-2-2 Classes Are Customized by Inheritance

- 6-2-2-1 Classes Are Attributes in Modules

- 6-2-3 Classes Can Intercept Python Operators

- 6-2-3-1 Examples

- 6-2-4 Future Directions

- 6-2-1 Classes Generate Multiple Instance Objects

- 6-3 Operator Overloading

- 6-3-1 The Basics

- 6-3-1-1 Constructors and Expressions init and sub

- 6-3-1-2 Common Operator Overloading Methods

- 6-3-1 The Basics

- 6-4 Advanced Class Topics

- 6-4-1 The New Style Class Model

- 6-4-2 New-Style Classes changes

- 6-4-2-1 Diamond Inheritance Change

- 6-4-2-1-1 Implications for diamond inheritance trees

- 6-4-2-1-2 Explicit conflict resolution

- 6-4-2-2 More on the MRO Method Resolution Order

- 6-4-2-1 Diamond Inheritance Change

- 6-4-3 New-Style Class Extensions

- 6-4-3-1 Slots Attribute Declarations

- 6-4-3-1-1 Slot basics

- 6-4-3-1-2 Slots and namespace dictionaries

- 6-4-3-1-3 Multiple slot lists in superclasses

- 6-4-3-1-4 Slot usage rules

- 6-4-3-2 Properties Attribute Accessors

- 6-4-3-2-1 Property basics

- 6-4-3-2-2 getattribute and Descriptors Attribute Tools

- 6-4-3-1 Slots Attribute Declarations

- 6-4-4 Static and Class Methods

- 6-4-4-1 Static Methods in 2X and 3X

- 6-4-4-2 Static Method Alternatives

- 6-4-4-3 Using Static and Class Methods

- 6-4-5 Decorators and Metaclasses Part 1

- 6-4-5-1 Function Decorator Basics

- 6-4-5-2 A First Look at User-Defined Function Decorators

- 6-4-5-3 A First Look at Class Decorators and Metaclasses

- 6-4-6 The super Built-in Function For Better or Worse

- 6-4-6-1 The Great super Debate

- 6-4-6-2 Traditional Superclass Call Form Portable General

- 6-4-6-3 Basic super Usage and Its Tradeoffs

- 6-4-6-3-1 Odd semantics A magic proxy in Python 3X

- 6-4-6-3-2 Pitfall Adding multiple inheritance naively

- 6-1 OOP The Big Picture

- 7 Exceptions and Tools

- 7-1 Exception Basics

- 7-1-1 Exception Roles

- 7-1-2 User-Defined Exceptions

- 7-1-3 Termination Actions

- 7-2 Exception Coding Details

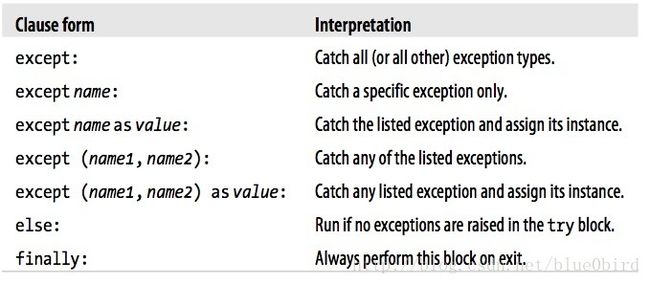

- 7-2-1 Try Statement Clauses

- 7-2-2 Catching any and all exceptions

- 7-2-3 The try else Clause

- 7-2-4 The raise Statement

- 7-2-4 Python 3X Exception Chaining raise from

- 7-2-5 The assert Statement

- 7-2-6 withas Context Managers

- 7-2-6-1 Basic Usage

- 7-2-6-2 The Context Management Protocol

- 7-2-6-3 Multiple Context Managers in 31 27 and Later

- 7-3 Exception Objects

- 7-3-1 Exceptions Back to the future

- 7-3-1-1 String Exceptions Are Right Out

- 7-3-1-2 Class-Based Exceptions

- 7-3-1-3 Coding Exceptions Classes

- 7-3-2 Built-in Exception Classes

- 7-3-3 Custom Data and Behavior

- 7-3-3-1 Providing Exception Details

- 7-3-3-2 Providing Exception Methods

- 7-3-1 Exceptions Back to the future

- 7-4 Designing with Exceptions

- 7-4-1 Exception idioms

- 7-4-1-1 Breaking Out of Multiple Nested Loops

- 7-4-1-2 Exceptions Arent Always Errors

- 7-4-1-3 Functions Can Signal Conditions with raise

- 7-4-1-4 Closing Files and Server Connections

- 7-4-1-5 Debugging with Outer try Statements

- 7-4-1-6 Running In-Process Tests

- 7-4-1-7 More on sysexc_info

- 7-4-1-8 Displaying Errors and Tracebacks

- 7-4-1 Exception idioms

- 7-1 Exception Basics

- 8 Advanced Topics

- 8-1 Unicode and Byte Strings

- 8-1-1 String Basics

- 8-1-1-1 Character Encoding Schemes

- 8-1-1 String Basics

- 8-2 Managed Attributes

- 8-2-1 Why Manage Attributes

- 8-2-1-1 Inserting Code to Run on Attribute Access

- 8-2-2 Properties

- 8-2-2-1 The Basics

- 8-2-2-2 A First Example

- 8-2-2-3 Coding Properties with Decorators

- 8-2-3 Descriptors

- 8-2-3-1 The Basics

- 8-2-3-1-1 Descriptor method arguments

- 8-2-3-1-2 Read-only descriptors

- 8-2-3-2 A First Example

- 8-2-3-3 Using State Information in Descriptors

- 8-2-3-4 How Properties and Descriptors Relate

- 8-2-3-1 The Basics

- 8-2-4 getattr and getattribute

- 8-2-4-1 The Basics

- 8-2-1 Why Manage Attributes

- 8-3 Decorators

- 8-3-1 Whats a Decorator

- 8-3-1-1 Managing Calls and Instances

- 8-3-1-2 Managing Functions and Classes

- 8-3-2 Function Decorators

- 8-3-2-1 Usage

- 8-3-2-2 Implementation

- 8-3-2-3 Supporting method decoration

- 8-3-3 Class Decorators

- 8-3-3-1 Usage

- 8-3-3-2 Implementation

- 8-3-3-3 Supporting multiple instances

- 8-3-4 Decorator Nesting

- 8-3-5 Decorator Arguments

- 8-3-1 Whats a Decorator

- 8-4 Metaclasses

- 8-1 Unicode and Byte Strings

1) Types and Operations

1-1) Built-in Objects preview

| Object type | Example literals/creation |

|---|---|

| Numbers | 1234, 3.1415, 3+4j, 0b111, Decimal(), Fraction() |

| Strings | ‘spam’, “Bob’s”, b’a\x01c’, u’sp\xc4m’ |

| Lists | [1, [2, ‘three’], 4.5],list(range(10)) |

| Dictionaries | {‘food’: ‘spam’, ‘taste’: ‘yum’},dict(hours=10) |

| Tuples | (1, ‘spam’, 4, ‘U’),tuple(‘spam’),namedtuple |

| Files | open(‘eggs.txt’),open(r’C:\ham.bin’, ‘wb’) |

| Sets | set(‘abc’),{‘a’, ‘b’, ‘c’} |

| Other core types | Booleans, types, None |

| Program unit types | Functions, modules, classes |

| Implementation-related types | Compiled code, stack tracebacks |

1-2) Numbers

Although it offers some fancier options, Python’s basic number types are, well, basic. Numbers in Python support the normal mathematical operations. For instance, the plus sign (+) performs addition, a star () is used for multiplication, and two stars (*) are used for exponentiation:

>>> 123 + 222 345

>>> 1.5 * 4 6.0

>>> 2 ** 100 1267650600228229401496703205376

# Integer addition

# Floating-point multiplication # 2 to the power 100, againNotice the last result here: Python 3.X’s integer type automatically provides extra precision for large numbers like this when needed (in 2.X, a separate long integer type handle numbers too large for the normal integer type in similar ways)

On Pythons prior to 2.7 and 3.1, once you start experimenting with floating-point numbers, you’re likely to stumble across something that may look like a bit odd at fist glance:

>>> 3.1415 * 2 # repr: as code (Pythons < 2.7 and 3.1) 6.2830000000000004

>>> print(3.1415 * 2) # str: user-friendly

6.283The first result isn’t a bug, it’s a display issue. It turns out that there are two ways to print server object in Python - with full precision (as in the first result shown here), and in a user-friendly form (as in the second). Formally, the first form is known as an objects as-code repr, and the second is its user-friendly str. In older Pythons, the floating-point repr sometimes displays more precision than you might expect. The difference can also matter when we step up to using classes.

Better yet, upgrade to Python 2.7 and the latest 3.X, where floatin-point numbers display themselves more intelligently, usually with fewer extraneous digits.

>>> 3.1415 * 2 # repr: as code (Pythons >= 2.7 and 3.1)

6.283Besides expressions, there are a handful of useful numeric modules that ship with Python - modules are just packages of additional tools that we import to use:

>>> import math

>>> math.pi

3.141592653589793

>>> math.sqrt(85)

9.2195444572928871-3) String

Strings are used to record both textual information, as well as arbitrary collections of bytes (such as an image file’s contents).

1-3-1) Sequence Operations

As sequences, strings support operations that assume a positional ordering among items.

>>> S = 'Spam'

>>> len(S) 4

>>> S[0]

'S'

>>> S[1] 'p'In Python, we can also index backward, from the end - positive indexes count from the left, and negative indexes count back from the right:

>>> S[-1] # The last item from the end in S

'm'

>>> S[-2] # The second-to-last item from the end

'a'In addition to simple positional indexing, sequences also support a more general form of indexing known as slicing. which is a way to extract an entire section (slice) in a single step. For Example:

>>> S # A 4-character string

'Spam'

>>> S[1:3] # Slice of S from offsets 1 through 2 (not 3)

'pa'Finally, as sequences, strings also support concatenation with plus sign (joining two strings into a new string) and repetition (making a new string by repeating another):

>>> S

'Spam'

>>> S + 'xyz' # Concatenation

'Spamxyz'

>>> S

'Spam'

>>> S * 8 # Repetition

'SpamSpamSpamSpamSpamSpamSpamSpam'Notice that the plus sign (+) means different things for different objects: addition for numbers, and concatenation for strings.

1-3-2) Immutable

Also notice in the prior examples that we were not changing the original string with any of the operations we run on it. Every string operation is define to produce a new string as its result, because strings are immutable in Python.

>>> S 'Spam'

>>> S[0] = 'z' # Immutable objects cannot be changed

...error text omitted...

TypeError: 'str' object does not support item assignment

>>> S = 'z' + S[1:] # But we can run expressions to make new objects

>>> S

'zpam'Strictly speaking, you can change text-based data in place if you either expand it into a list of individual characters and join it back together with nothing between, or use the newer bytearray type avaliable in Pythons 2.6, 3.0 and later:

>>> S = 'shrubbery'

>>> L = list(S) # Expand to a list: [...]

>>> L

['s', 'h', 'r', 'u', 'b', 'b', 'e', 'r', 'y']

>>> L[1] = 'c' # Change it in place

>>> ''.join(L) # Join with empty delimiter

'scrubbery'

>>> B = bytearray(b'spam') # A bytes/list hybrid (ahead)

>>>> B.extend(b'eggs') # 'b' needed in 3.X, not 2.X

>>> B bytearray(b'spameggs') # B[i] = ord(c) works here too

>>> B.decode() # Translate to normal string

'spameggs'The bytearray supports in-place changes for text, but only for text whose characters are all at most 8-bits wide. All other strings are still immutable – bytearray is a distinct hybrid of immutable bytes strings

(whose b’…’ syntax is required in 3.X and optional 2.X) and mutable lists (coded and displayed in []), and we have to learn more about both these and Unicode text to fully grasp this code.

1-3-3) Type-Specific Methods

Every string operation we’ve studied so far is really a sequence operation – that is, these operations will work on other sequences in Python as well, including lists and tuples. In addition to generic sequence operations, though, strings also have operations all their own, available as methods – functions that are attached to and act upon a specific object, which are triggered with a call expression.

>>> S = 'Spam'

>>> S.find('pa')

1

>>> S

'Spam'

>>> S.replace('pa', 'XYZ') 'SXYZm'

>>> S

'Spam'

>>> line = 'aaa,bbb,ccccc,dd'

>>> line.split(',')

['aaa', 'bbb', 'ccccc', 'dd']

>>> S = 'spam'

>>> S.upper()

'SPAM'

>>> S.isalpha()

True

>>> line = 'aaa,bbb,ccccc,dd\n'

>>> line.rstrip()

'aaa,bbb,ccccc,dd'

>>> line.rstrip().split(',')

['aaa', 'bbb', 'ccccc', 'dd']

Strings also support an advanced substitution operation known as formatting, available as both an expression and a string method call; the second of these allows you to omit relative argument value numbers as of 2.7 and 3.1:

>>> '%s, eggs, and %s' % ('spam', 'SPAM!') # Formatting expression (all)

'spam, eggs, and SPAM!'

>>> '{0}, eggs, and {1}'.format('spam', 'SPAM!') # Formatting method (2.6+, 3.0+)

'spam, eggs, and SPAM!'

>>> '{}, eggs, and {}'.format('spam', 'SPAM!') # Numbers optional (2.7+, 3.1+)

'spam, eggs, and SPAM!'Formatting is rich with features, which we’ll postpone discussing until later in this book, and which tend to matter most when you must generate numeric reports:

>>> '{:,.2f}'.format(296999.2567) # Separators, decimal digits

'296,999.26'

>>> '%.2f | %+05d' % (3.14159, −42) # Digits, padding, signs

'3.14 | −0042'1-3-4) Getting Help

What is available for string object, you can always call the built-in dir function. This functions lists variables assigned in the caller’s scope when called with no argument; more usefully, it returns a list of all the attributes available for any objects passed to it.

>>> dir(S)

['__add__', '__class__', '__contains__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__getitem__', '__getnewargs__', '__gt__', '__hash__', '__init__', '__iter__', '__le__', '__len__', '__lt__', '__mod__', '__mul__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__rmod__', '__rmul__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', 'capitalize', 'casefold', 'center', 'count', 'encode', 'endswith', 'expandtabs', 'find', 'format', 'format_map', 'index', 'isalnum', 'isalpha', 'isdecimal', 'isdigit', 'isidentifier', 'islower', 'isnumeric', 'isprintable', 'isspace', 'istitle', 'isupper', 'join', 'ljust', 'lower', 'lstrip', 'maketrans', 'partition', 'replace', 'rfind', 'rindex', 'rjust', 'rpartition', 'rsplit', 'rstrip', 'split', 'splitlines', 'startswith', 'strip', 'swapcase', 'title', 'translate', 'upper', 'zfill']You probable won’t care about the names with double underscores in this list until later in the book, when we study operator overloading in classes – they represent the implementation of the string object and are available to support customization. The add method of strings, for example, is what really performs concatenation; Python maps the first of following to the second internally, though you shouldn’t usually use the second form yourself (it’s less intuitive, and might even run slower):

>>> S + 'NI!'

'spamNI!'

>>> S.__add__('NI!')

'spamNI!'In general, leading and trailing double underscores is the naming pattern Python uses for implementation details. The names without the underscores in this list are the callable methods on string object.

The dir function simply gives the method’s names. To ask what they do, you can pass them to the help function:

>>> help(S.replace)

Help on built-in function replace:

replace(...)

S.replace(old, new[, count]) -> str

Return a copy of S with all occurrences of substring old replaced by new. If the optional argument count is given, only the first count occurrences are replaced.1-3-5) Unicode Strings

Python’s strings also come with full Unicode support required for processing text in internationalized character sets.

In Python 3.X, the normal str string handles Unicode text (including ASCII, which is just a simple kind of Unicode); a distinct bytes string type represents raw byte values (including media and encoded text); and 2.X Unicode literals are supported in 3.3 and later for 2.x compatibility (they are treated the same as normal 3.X str strings):

>>> 'sp\xc4m' # 3.X: normal str strings are Unicode text

'spÄm'

>>> b'a\x01c' # bytes strings are byte-based data

b'a\x01c'

>>> u'sp\u00c4m''spÄm' # The 2.X Unicode literal works in 3.3+: just strIn Python 2.X, the normal str string handles both 8-bits character strings (including ASCII text) and raw byte values; a distinct unicode string type represents Unicode text; and 3.X bytes literals are supported in 2.6 and later for 3.X compatibility.

>>> print u'sp\xc4m' # 2.X: Unicode strings are a distinct type

spÄm

>>> 'a\x01c' # Normal str strings contain byte-based text/data

'a\x01c'

>>> b'a\x01c' # The 3.X bytes literal works in 2.6+: just str

'a\x01c'

Formally, in both 2.X and 3.X, non-Unicode strings are sequences of 8-bit bytes that print with ASCII characters when possible, and Unicode strings are sequences of Uni- code code points—identifying numbers for characters, which do not necessarily map to single bytes when encoded to files or stored in memory. In fact, the notion of bytes doesn’t apply to Unicode: some encodings include character code points too large for a byte, and even simple 7-bit ASCII text is not stored one byte per character under some encodings and memory storage schemes:

>>> 'spam' # Characters may be 1, 2, or 4 bytes in memory

'spam'

>>> 'spam'.encode('utf8') # Encoded to 4 bytes in UTF-8 in files

b'spam'

>>> 'spam'.encode('utf16') # But encoded to 10 bytes in UTF-16

b'\xff\xfes\x00p\x00a\x00m\x00'Both 3.X and 2.X also support the bytearray string type we met earlier, which is es- sentially a bytes string (a str in 2.X) that supports most of the list object’s in-place mutable change operations.

Both 3.X and 2.X also support coding non-ASCII characters with \x hexadecimal and short \u and long \U Unicode escapes, as well as file-wide encodings declared in program source files. Here’s our non-ASCII character coded three ways in 3.X (add a leading “u” and say “print” to see the same in 2.X):

>>> 'sp\xc4\u00c4\U000000c4m'

'spÄÄÄm'What these values mean and how they are used differs between text strings, which are the normal string in 3.X and Unicode in 2.X, and byte strings, which are bytes in 3.X and the normal string in 2.X. All these escapes can be used to embed actual Unicode code-point ordinal-value integers in text strings. By contrast, byte strings use only \x hexadecimal escapes to embed the encoded form of text, not its decoded code point values—encoded bytes are the same as code points, only for some encodings and char- acters:

>>> '\u00A3', '\u00A3'.encode('latin1'), b'\xA3'.decode('latin1') ('£', b'\xa3', '£')As a notable difference, Python 2.X allows its normal and Unicode strings to be mixed in expressions as long as the normal string is all ASCII; in contrast, Python 3.X has a tighter model that never allows its normal and byte strings to mix without explicit conversion:

u'x' + b'y' # Works in 2.X (where b is optional and ignored)

u'x' + 'y' # Works in 2.X: u'xy'

u'x' + b'y' # Fails in 3.3 (where u is optional and ignored)

u'x' + 'y' # Works in 3.3: 'xy'

'x' + b'y'.decode() # Works in 3.X if decode bytes to str: 'xy'

'x'.encode() + b'y' # Works in 3.X if encode str to bytes: b'xy'1-3-6) Pattern Matching

Readers with background in other scripting languages may be interested in to know that to do pattern matching in Python. we import a module called re. This module has analogous calls for searching, splitting, and replacement, but because we can use patterns to specify substrings, we can be much more general:

>>> import re

>>> match = re.match('Hello[ \t]*(.*)world', 'Hello Python world') >>> match.group(1)

'Python '

>>> match = re.match('[/:](.*)[/:](.*)[/:](.*)', '/usr/home:lumberjack') >>> match.groups()

('usr', 'home', 'lumberjack')

>>> re.split('[/:]', '/usr/home/lumberjack') ['', 'usr', 'home', 'lumberjack']1-4) Lists

1-4-1) Sequence Operations

>>> L = [123, 'spam', 1.23] # A list of three different-type objects

>>> len(L) # Number of items in the list

3

>>> L[0]

>123

>>> L[:-1]

[123, 'spam']

>>> L + [4, 5, 6]

[123, 'spam', 1.23, 4, 5, 6]

>>> L * 2

[123, 'spam', 1.23, 123, 'spam', 1.23]1-4-2) Type-Specific Operations

>>> L.append('NI') # Growing: add object at end of list

>>> L

[123, 'spam', 1.23, 'NI']

>>> L.pop(2) # Shrinking: delete an item in the middle

1.23

>>> L # "del L[2]" deletes from a list too

[123, 'spam', 'NI']

>>> M = ['bb', 'aa', 'cc'] >>> M.sort()

>>> M

['aa', 'bb', 'cc']

>>> M.reverse()

>>> M

['cc', 'bb', 'aa']The list sort method here, for example, orders the list in ascending fashion by default, and reverse reverses it – in both cases, the methods modify the list directly.

1-4-2) Bounds Checking

Although lists have no fixed size, Python still doesn’t allow us to reference items that are not present. Indexing off the end of a list is always a mistake, but so is assigning off the end:

>>> L

[123, 'spam', 'NI']

>>> L[99]

...error text omitted...

IndexError: list index out of range

>>> L[99] = 1

...error text omitted...

IndexError: list assignment index out of range1-4-3) Nesting

One nice feature of Python’s core data types is that they support arbitrary nesting.

>>> M = [[1, 2, 3], # A 3 × 3 matrix, as nested lists [4, 5, 6],

# Code can span lines if bracketed

[7, 8, 9]] >>> M

[[1, 2, 3], [4, 5, 6], [7, 8, 9]]

>>> M[1] # Get row 2 [4, 5, 6]

>>> M[1][2] # Get row 2, then get item 3 within the row 61-4-4) Comprehensions

In addition to sequence operations and list methods, Python includes a more advanced operation known as a list comprehension expression, which turns out to be a powerful way to process structures like our matrix. Suppose, for instance, that we need to extract the second column of our example matrix. It’s easy to grab rows by simple indexing because the matrix is stored by rows, but it’s almost as easy to get a column with list comprehension:

>>> col2 = [row[1] for row in M] # Collect the items in column 2

>>> col2

[2, 5, 8]

>>> M # The matrix is unchanged

[[1, 2, 3], [4, 5, 6], [7, 8, 9]]List comprehensions derive from set notation; they are a way to build a new list by running an expression on each item in a sequence, once at a time, from left to right.

List comprehensions can be more complex in practice:

>>> [row[1] + 1 for row in M] # Add 1 to each item in column 2

[3, 6, 9]

>>> [row[1] for row in M if row[1] % 2 == 0] # Filter out odd items

[2, 8]These expressions can also be used to collect multiple values, as long as we wrap those values in a nested collection. The following illustrates using range – a built-in that generates successive integers, and requires a surrounding list to display all its values in 3.X only:

>>> list(range(4)) # 0..3 (list() required in 3.X)

>[0, 1, 2, 4]

>> list(range(-6, 7, 2)) # −6 to +6 by 2 (need list() in 3.X)

>[−6, −4, −2, 0, 2, 4, 6]

>>> [[x**2, x ** 3] for x in range(4)]

>[[0, 0], [1, 1], [4, 8], [9, 27]]

>>> [[x, x / 2, x * 2] for x in range(−6, 7, 2) if x > 0]

[[2, 1, 4], [4, 2, 8], [6, 3, 12]]As a preview, though, you’ll find that in recent Pythons, comprehensions syntax has been generalized for other roles: it’s not just for making lists today. For example, enclosing a comprehension in a parentheses can also be used to create generators that produce results on demand.

>>> G = (sum(row) for row in M) # Create a generator of row sums

>>> next(G) # iter(G) not required here

6

>>> next(G) # Run the iteration protocol next()

>15

>>> next(G)

24The map built-in can do similar work, by generating the results of running iterms through a function, one at a time and on request. Like range, wrapping it in list forces it to return all its values in Python3.X; this isn’t needed in 2.X where map makes a list of results all at once instead, and is not needed in order contexts that iterate automatically, unless multiple scans or list-like behavior is also required:

>>> list(map(sum, M)) # Map sum over items in M

[6, 15, 24]

>>> {sum(row) for row in M} # Create a set of row sums

{24, 6, 15}

>>> {i : sum(M[i]) for i in range(3)} # Creates key/value table of row sums

{0: 6, 1: 15, 2: 24}In fact, lists, sets, dictionaries, and generators can all be built with comprehensions in 3.X and 2.7:

>>> [ord(x) for x in 'spaam'] # List of character ordinals

[115, 112, 97, 97, 109]

>>> {ord(x) for x in 'spaam'} # Sets remove duplicates

{112, 97, 115, 109}

>>> {x: ord(x) for x in 'spaam'} # Dictionary keys are unique

{'p': 112, 'a': 97, 's': 115, 'm': 109}

>>> (ord(x) for x in 'spaam') # Generator of values

at 0x000000000254DAB0> 1-5) Dictionaries

Dictionaries are instead known as mapping. Mappings are also collections of other objects, but they store objects by key instead of by relative position.

1-5-1) Mapping Operations

>>> D = {'food': 'Spam', 'quantity': 4, 'color': 'pink'}

>>> D['food'] # Fetch value of key 'food' 'Spam'

>>> D['quantity'] += 1 # Add 1 to 'quantity' value

>>> D

{'color': 'pink', 'food': 'Spam', 'quantity': 5}Although the curly-braces literal form does see use, it is perhaps more common to see dictionaries built up in different ways. The following code, for example, starts with an empty dictionary and fills it out one key at a time. Unlike out-of-bounds assignments in lists, which are forbidden, assignments to new dictionary keys create those keys:

>>> D = {}

>>> D['name'] = 'Bob' # Create keys by assignment

>>> D['job'] = 'dev'

>>> D['age'] = 40

>>> D

{'age': 40, 'job': 'dev', 'name': 'Bob'}

>>> print(D['name']) BobWe can also make dictionaries by passing to the dict type name ether keyword arguments or the result of zipping together sequences of keys and values obtained at runtime.

>>> bob1 = dict(name='Bob', job='dev', age=40) # Keywords

>>> bob1

{'age': 40, 'name': 'Bob', 'job': 'dev'}

>>> bob2 = dict(zip(['name', 'job', 'age'], ['Bob', 'dev', 40])) # Zipping

>>> bob2

{'job': 'dev', 'name': 'Bob', 'age': 40}Notice how the left-to-right order of dictionary keys is scrambled. Mappings are not positionally ordered.

1-5-2) Nesting Revisited

>>> rec = {'name': {'first': 'Bob', 'last': 'Smith'}, 'jobs': ['dev', 'mgr'],

'age': 40.5}

>>> rec['name']

>{'last': 'Smith', 'first': 'Bob'}

>>> rec['name']['last']

'Smith'

>>> rec['jobs'].append('janitor')

>>> rec

{'age': 40.5, 'jobs': ['dev', 'mgr', 'janitor'], 'name': {'last': 'Smith', 'first': 'Bob'}}

>>> rec = 0 # Now the object's space is reclaimed1-5-3) Missing Keys: if Tests

Although we can assign to a new key to expand a dictionary, fetching a nonexistent key is still a mistake:

>>> D = {'a': 1, 'b': 2, 'c': 3}

>>> D

{'a': 1, 'c': 3, 'b': 2}

>>> D['e'] = 99 # Assigning new keys grows dictionaries

>>> D

{'a': 1, 'c': 3, 'b': 2, 'e': 99}

>>> D['f'] # Referencing a nonexistent key is an error

...error text omitted...

KeyError: 'f'

>>> 'f' in D

False

>>> if not 'f' in D: # Python's sole selection statement

print('missing')

missingBesides the in test, there are a variety of ways to avoid accessing nonexistent keys in the dictionaries we create: the get method, a conditional index with a default; the Python 2.X has_key method, an in work-alike that is no longer available in 3.X;

>>> value = D.get('x', 0)

>>> value

0

>>> value = D['x'] if 'x' in D else 0

>>> value

01-5-4) Sorting Keys: for Loops

As mentioned earlier, because dictionaries are not sequences, they don’t maintain any dependable left-to-right order. If we make a dictionary and print is back, its keys may come back in a different order than that in which we type them, and may vary per Python version and other variables:

>>> D = {'a': 1, 'b': 2, 'c': 3}

>>> D

{'a': 1, 'c': 3, 'b': 2}

>>> Ks = list(D.keys()) # Unordered keys list

>>> Ks

['a', 'c', 'b']

>>> Ks.sort() # Sorted keys list

>>>> Ks

['a', 'b', 'c']

>>> for key in Ks: # Iterate though sorted keys

print(key, '=>', D[key]) # <== press Enter twice here (3.X print)

a => 1 b => 2 c => 3This is a three-step process, although, as we’ll see in later chapters, in recent versions of Python it can be done in one step with the newer sorted built-in function. The sorted call returns the result and sorts a variety of object types, in this case sorting dictionary keys automatically:

>>> D

{'a': 1, 'c': 3, 'b': 2}

>>> for key in sorted(D):

print(key, '=>', D[key])

a => 1

b => 2

c => 31-5-5) Iteration and Optimization

Formally, both types of objects are considered iterable because they support the iteration protocol – they respond to the iter call with an object that advances in response to next calls and raises an exception when finished producing values.

The generator comprehension expression we saw earlier is such an object: its values aren’t stored in memory all at once, but are produced as requested, usually by iteration tools. Python file objects similarly iterate line by line when used by an iteration tool: file content isn’t in a list, it’s fetched on demand. Both are iterable objects in Python – a category that expands in 3.X to include core tools like range and map.

Keep in mind that every Python tool that scans on object from left to right uses the iteration protocol. This is why the sorted call used in the prior section works on the dictionary directly– we don’t have to call the keys method to get a sequence because dictionaries are iterable objects, with a next that returns successive keys.

It may also help you to see that any list comprehension expression, such as this one, which computes the squares of a list of numbers:

>>> squares = [x ** 2 for x in [1, 2, 3, 4, 5]]

>>> squares

[1, 4, 9, 16, 25]A major rule of thumb in Python is to code for simplicity and readability first and worry about performance later, after your program is working, and after you’ve proved that there is a genuine performance concern. More often than not, your code will be quick enough as it is. If you do need to tweak code for performance, though, Python includes tools to help you out, including the time and itmeit modules for timing the speed of alternative, and the profile module for isolating bottlenecks.

1-6) Tuples

The tuple object is roughly like a list that cannot be changed – tuples are sequences, like lists, but they are immutable, like strings. Functionally, they’re used to represent fixed collections of items. the components of a specific calendar date, for instance. Syntactically, they are normally coded in parentheses instead of square brackets, and they support arbitrary types, arbitrary nesting, and the usual sequence operations:

>>> T = (1, 2, 3, 4)

>>> len(t)

4

>>> T + (5, 6)

(1, 2, 3, 4, 5, 6)

>>> T[0]

1The primary distinction for tuples is that they cannot be changed once created. That is, they are immutable sequences (one-item tuples like the one here require a trailing comma):

>>> T[0] = 2 # Tuples are immutable

...error text omitted...

TypeError: 'tuple' object does not support item assignment

>>> T = (2,) + T[1:] # Make a new tuple for a new value

>>> T

(2, 2, 3, 4)1-7) Files

File objects are Python code’s main interface to external files on your computer. There is no literal syntax for creating them. Rather, to create a file object, you call the built-in open function, passing an external filename and an optional processing mode as strings.

>>> f = open('data.txt', 'w')

>>> f.write('Hello\n')

6

>>> f.write('world\n')

6

>>> f.close()

>>> f = open('data.txt')

>>> text = f.read()

>>> text

'Hello\nworld\n'

>>> print(text)

Hello

world

>>> text.split()

['Hello', 'world']

>>> for line in open('data.txt'):

print(line)1-7-1) Binary Bytes Files

The prior section’s examples illustrate file basics that suffice for many roles. Techni- cally, though, they rely on either the platform’s Unicode encoding default in Python 3.X, or the 8-bit byte nature of files in Python 2.X. Text files always encode strings in 3.X, and blindly write string content in 2.X. This is irrelevant for the simple ASCII data used previously, which maps to and from file bytes unchanged. But for richer types of data, file interfaces can vary depending on both content and the Python line you use.

As hinted when we met strings earlier, Python 3.X draws a sharp distinction between text and binary data in files: text files represent content as normal str strings and per- form Unicode encoding and decoding automatically when writing and reading data, while binary files represent content as a special bytes string and allow you to access file content unaltered. Python 2.X supports the same dichotomy, but doesn’t impose it as rigidly, and its tools differ

>>> import struct

# Create packed binary data

>>> packed = struct.pack('>i4sh', 7, b'spam', 8)

>>> packed # 10 bytes, not objects or text

b'\x00\x00\x00\x07spam\x00\x08'

>>> file = open('data.bin', 'wb') # Open binary output file

>>> file.write(packed) # Write packed binary data

10

>>> file.close()

>>> data = open('data.bin', 'rb').read()

>>> data b'\x00\x00\x00\x07spam\x00\x08'

>>> data[4:8]

b'spam'

>>> list(data)

[0, 0, 0, 7, 115, 112, 97, 109, 0, 8]

>>> struct.unpack('>i4sh', data) # Unpack into objects again

(7, b'spam', 8)1-7-2) Unicode Text files

Text files are used to process all sort of text-based data, from memos to email content to json and xml documents.

Luckily, this is easier than it may sound. To access files containing non-ASCII Unicode text of the sort introduced earlier in this chapter, we simply pass in an encoding name if the text in the file doesn’t match the default encoding for our platform. In this mode, Python text files automatically encode on writes and decode on reads per the encoding scheme name you provide. In Python 3.X:

>>> S = 'sp\xc4m' # Non-ASCII Unicode text

>>> S

'spÄm'

>>> S[2] # Sequence of characters

'Ä'

# Write/encode UTF-8 text

>>> file = open('unidata.txt', 'w', encoding='utf-8')

>>> file.write(S) # 4 characters written

4

>>> file.close()

# Read/decode UTF-8 text

>>> text = open('unidata.txt', encoding='utf-8').read()

>>> text

'spÄm'

>>> len(text) # 4 chars (code points)

4This automatic encoding and decoding is what you normally want. Because files handle this on transfers, you may process text in memory as a simple string of characters without concern for its Unicode-encoded origins. If needed, though, you can also see what’s truly stored in your file by stepping into binary mode:

>>> raw = open('unidata.txt', 'rb').read() # Read raw encoded bytes

>>> raw

b'sp\xc3\x84m'

>>> len(raw) # Really 5 bytes in UTF-8 5You can also encode and decode manually if you get Unicode data from a source other than a file – parsed from an email message or fetched over a network connection, for example:

>>> text.encode('utf-8') # Manual encode to bytes b'sp\xc3\x84m'

>>> raw.decode('utf-8') # Manual decode to str

'spÄm'This all works more or less the same in Python 2.X, but Unicode strings are coded and display with a leading “u,” byte strings don’t require or show a leading “b,” and Unicode text files must be opened with codecs.open, which accepts an encoding name just like 3.X’s open, and uses the special unicode string to represent content in memory. Binary file mode may seem optional in 2.X since normal files are just byte-based data, but it’s required to avoid changing line ends if present

>>> import codecs

# 2.X: read/decode text

>>> codecs.open('unidata.txt', encoding='utf8').read() u'sp\xc4m'

# 2.X: read raw bytes

>>> open('unidata.txt', 'rb').read()

'sp\xc3\x84m'

# 2.X: raw/undecoded too

>>> open('unidata.txt').read()

'sp\xc3\x84m'1-8) Other Core Types

Beyond the core types we’ve seen so far, there are others that may or may not qualify for membership in the category, depending on how broadly it is defined. Sets, for ex- ample, are a recent addition to the language that are neither mappings nor sequences; rather, they are unordered collections of unique and immutable objects. You create sets by calling the built-in set function or using new set literals and expressions in 3.X and 2.7, and they support the usual mathematical set operations (the choice of new {…} syntax for set literals makes sense, since sets are much like the keys of a valueless dic- tionary):

>>> X = set('spam')

>>> Y = {'h', 'a', 'm'}

>>> X, Y # A tuple of two sets without parentheses

({'m', 'a', 'p', 's'}, {'m', 'a', 'h'})

>>> X & Y # Intersection

{'m', 'a'}

>>> X | Y # Union

{'m', 'h', 'a', 'p', 's'}

>>> X - Y # Difference

{'p', 's'}

>>> X > Y # Superset False

>>> {n ** 2 for n in [1, 2, 3, 4]} # Set comprehensions in 3.X and 2.7

{16, 1, 4, 9}In addition, Python recently grew a few new numeric types: decimal numbers, which are fixed-precision floating-point numbers, and fraction numbers, which are rational numbers with both a numerator and a denominator. Both can be used to work around the limitations and inherent inaccuracies of floating-point math:

>>> 1 / 3

0.3333333333333333

>>> (2/3) + (1/2)

1.1666666666666665

>>> import decimal

>>> d = decimal.Decimal('3.141')

>>> d + 1

Decimal('4.141')

# Floating-point (add a .0 in Python 2.X)

# Decimals: fixed precision

>>> decimal.getcontext().prec = 2

>>> decimal.Decimal('1.00') / decimal.Decimal('3.00') Decimal('0.33')

>>> from fractions import Fraction >>> f = Fraction(2, 3)

>>> f + 1

Fraction(5, 3)

>>> f + Fraction(1, 2) Fraction(7, 6)1-8-1) How to break your code’s flexibility

The type object, returned by the type built-in function, is an object that gives the type of another object; its result differs slightly in 3.X, because types have merged with classes completely. Assuming L is still the list of the prior section:

# In Python 2.X:

>>> type(L) # Types: type of L is list type object

<type 'list'>

>>> type(type(L)) # Even types are objects

<type 'type'>

# In Python 3.X:

>>> type(L) # 3.X: types are classes, and vice versa

<class 'list'>

>>> type(type(L))

<class 'type'>Besides allowing you to explore your objects interactively, the type object in its most practical application allows code to check the types of the object it processes. In fact, there are at least three ways to do so in a Python script:

>>> if type(L) == type([]): # Type testing, if you must...

print('yes')

yes

>>> if type(L) == list: # Using the type name

print('yes')

yes

>>> if isinstance(L, list): # Object-oriented tests

print('yes')

yes2) Statements and Syntax

2-1) Introducing Python Statements

| Statement | Role | Example |

|---|---|---|

| Assignment | Creating references | a, b = ‘good’, ‘bad’ |

| Calls and other expressions | Running functions | log.write(“spam, ham”) |

| print calls | Printing objects | print(‘The Killer’, joke) |

| if/elif/else | Selecting actions | if “python” in text: print(text) |

| for/else | Iteration | for x in mylist: print(x) |

| while/else | General loops | while X > Y: print(‘hello’) |

| pass | Empty placeholder | while True: pass |

| break | Loop exit | while True: if exittest(): break |

| continue | Loop continue | while True: if skiptest(): continue |

| def | Functions and methods | def f(a, b, c=1, *d): print(a+b+c+d[0]) |

| return | Functions results | def f(a, b, c=1, *d): return a+b+c+d[0] |

| yield | Generator functions | def gen(n): for i in n: yield i*2 |

| global | Namespaces | x = 'old' def function(): global x,y;x = 'new' |

| nonlocal | Namespaces (3.X) | def outer(): x = 'old' def function(): nonlocal x; x = 'new' |

| import | Module access | import sys |

| from | Attribute access | from sys import stdin |

| class | Building objects | class Subclass(Superclass): staticData = [] def method(self): pass |

| try/except/ finally | Catching exceptions | try: action() except: print('action error') |

| raise | Triggering exceptions | raise EndSearch(location) |

| assert | Debugging checks | assert X > Y, ‘X too small’ |

| with/as | Context managers (3.X, 2.6+) | with open('data') as myfile: process(myfile) |

| del | Deleting references | del data[k] |

2-2) Iterations and Comprehensions

2-2-1) The Iteration Protocol: File Iterators

One of the easiest ways to understand the iteration protocol is to see how it works with the built-in type such as the file. In this chapter, we’ll be using the following input file to demonstrate:

>>> print(open('script2.py').read())

import sys

print(sys.path) x =2

print(x ** 32)

>>> open('script2.py').read()

'import sys\nprint(sys.path)\nx = 2\nprint(x ** 32)\n'Following codes that open file objects have a method called readline, which reads one line of next from a file at a time – each time we call the readline method, we advance to the next line. At the end of the file, an empty string is returned, which we can detect to break out of the loop:

>>> f = open('script2.py')

>>> f.readline()

'import sys\n'

>>> f.readline()

'print(sys.path)\n'

>>> f.readline()

'x = 2\n'

>>> f.readline()

'print(x ** 32)\n'

>>> f.readline()However, file also have a method named next in 3.X (and next in 2.X) that has a nearly identical effect – it returns the next line from a file each time it is called. The only noticeable difference is that next raises a built-in StopIteration exception at end-of-file instead of returning an empty string:

>>> f = open('script2.py') # __next__ loads one line on each call too

>>> f.__next__() # But raises an exception at end-of-file

'import sys\n'

>>> f.__next__() ' # Use f.next() in 2.X, or next(f) in 2.X or 3.X

print(sys.path)\n'

>>> f.__next__()

'x = 2\n'

>>> f.__next__()

'print(x ** 32)\n'

>>> f.__next__()

Traceback (most recent call last):

File "" , line 1, in StopIteration This interface is most of what we call the iteration protocol in Python. Any object with a next method to advance to next result, which raises StopIteration exception at the end of the series of results, is considered an iterator in Python.

The net effect of this magic is that, the best way to read a text file line by line today is not read it at all – instead, allow the for loop to automatically call next to advance to the next line on each iteration.

>>> for line in open('script2.py'):

... print(line.upper(), end='')

...

IMPORT SYS

PRINT(SYS.PATH) X =2

PRINT(X ** 32)Notice that the print use end=” here to suppress adding a ‘\n’, because line strings already have one. This is considered the best way to read text file line by line today, for three reasons: it’s the simplest to code, might be the quickest to run, and is the best in terms of memory usage. The older, original way to achieve the same effect with a for loop is to call the file readlines method to load the file’s content into memory as a list of line strings:

>>> for line in open('script2.py').readlines():

... print(line.upper(), end='')

...

IMPORT SYS

PRINT(SYS.PATH) X =2

PRINT(X ** 32)This readlines technique still works but is not considered the best practice today and performs poorly in terms of memory usage. In fact, because this version really does load the entire file into memory all at once, it will not even work for files too big to fit into the memory space available on your computer.

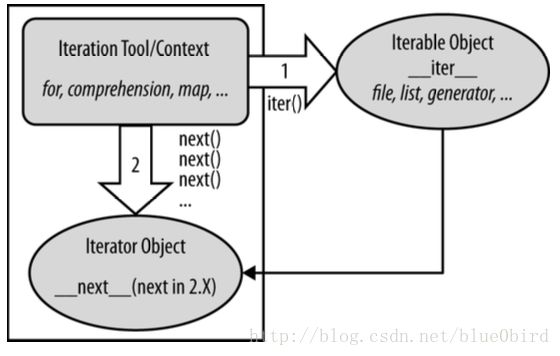

2-2-2) The full iteration protocol

It’s really based on two objects, used in two distinct steps by iteration tools:

- The iterable object you request iteration for, whose iter is run by iter

- The iteration object returned by the iterable that actually produces values during the iteration, whose next is run by next and raises StopIteration when finished producting results

In actual code, the protocol’s first step becomes obvious if we look at how for loops internally process built-in sequence types such as lists:

>>> L = [1, 2, 3]

>>> I = iter(L) # Obtain an iterator object from an iterable

>>> I.__next__() # Call iterator's next to advance to next item

1

>>> I.__next__()

2

>>> I.__next__()

3

...error next omiited ...

StopIteration2-2-3) List Comprehensions: A First Detailed Look

We use range to change a list as we step across it:

>>> L = [1, 2, 3, 4, 5]

>>> for i in range(len(L)):

L[i] += 10

>>> L

[11, 12, 13, 14, 15]This works, but it may not be the optimal “best practice” approach in Python. Today, the list comprehension expression makes many such prior coding patterns obsolete.

>>> L= [x+10 for x in L]

>>> L

[21, 22, 23, 24, 25]2-2-4) List Comprehensions Basics

To run the expression, Python executes an iteration across L inside the interpreter, assigning x to each item in turn, and collects the results of running the items through the expression on the left side.

Technically speaking, list comprehensions are never really required because we can always build up a list of expression results manually with for loops that append results as we go:

>>> res = []

>>> for x in L:

... res.append(x + 10)

...

>>> res

[31, 32, 33, 34, 35]In fact, this is exactly what the list comprehension does internally.

However, list comprehensions are more concise to write, and because this pattern of building up a list is so common in Python work, they turn out to be very useful in many contexts. Moreover, depending on your Python and code, list comprehensions might much faster than manual for loop statements (often roughly twice as fast) because their iterations are performed at C language speed inside the interpreter, rather than with manual Python code. Especially for larger data sets, there is often a major performance advantage to using this expression.

2-2-4) Using List Comprehensions on Files

Anytime we start thinking about performing an operations on each item in a sequence, we’re in the realm of list comprehensions.

>>> lines = [line.rstrip() for line in lines]

>>> lines

['import sys', 'print(sys.path)', 'x=2', 'print(x ** 32)']we don’t have to open the file ahead of time. If we open it inside the expression, the list comprehension will automatically use the iteration protocol.

>>> lines = [line.rstrip() for line in open('script2.py')]

>>> lines

['import sys', 'print(sys.path)', 'x = 2', 'print(x ** 32)']

>>> [line.upper() for line in open('script2.py')]

['IMPORT SYS\n', 'PRINT(SYS.PATH)\n', 'X = 2\n', 'PRINT(X ** 32)\n']

>>> [line.rstrip().upper() for line in open('script2.py')] ['IMPORT SYS', 'PRINT(SYS.PATH)', 'X = 2', 'PRINT(X ** 32)']

>>> [line.split() for line in open('script2.py')]

[['import', 'sys'], ['print(sys.path)'], ['x', '=', '2'],['print(x', '**', '32)']]

>>> [line.replace(' ', '!') for line in open('script2.py')] ['import!sys\n', 'print(sys.path)\n', 'x!=!2\n', 'print(x!**!32)\n']

>>> [('sys' in line, line[:5]) for line in open('script2.py')] [(True, 'impor'), (True, 'print'), (False, 'x = 2'), (False, 'print')]2-2-5) Extended List Comprehension Syntax

2-2-5-1) Filter clauses: if

As one particularly useful extension, the for loop nested in a comprehension expression can have an associated if clause to filter out of the result items for which the test is not true.

>>> lines = [line.rstrip() for line in open('script2.py') if line[0] == 'p']

lines

['print(sys.path)', 'print(x ** 32)']2-2-5-2) Nested loops: for

List comprehension can become even more complex if we need them to – for instance, they may contain nested loops, coded as a series of for clauses. In fact, their full syntax allows for any number of for clauses, each of which can have an optional associated if clause.

>>> [x + y for x in 'abc' for y in 'lmn']

['al', 'am', 'an', 'bl', 'bm', 'bn', 'cl', 'cm', 'cn']3) The Documentation interlude

3-1) Python Documentation Sources

One of the first questions that bewildered beginners often ask is: how do I find information on all the built-in tools? This section provides hints on the various documentation sources available in Python. It also presents documentation strings (docstrings) and the PyDoc system that make use of them.

Python documentation sources

| Tables | Are |

|---|---|

| comments | In-file documentation |

| The dir function | Lists of attributes available in objects |

| Docstrings: doc | In-file documentation attached to objects |

| PyDoc: thehelpfunction | Interactive help for objects |

| PyDoc: HTML reports | Module documentation in a browser |

| Sphinx third-party tool | Richer documentation for larger projects |

| The standard manual set | Official language and library descriptions |

| Web resources | Online tutorials, examples, and so on |

| Published books | Commercially polished reference texts |

3-2) # Comments

As we’ve learned, hash-mark comments are the most basic way to document your code.

3-3) The dir Function

As we’ve also seen, the built-in dir function is an easy way to grab a list of all the attributes available inside on object. It can be called with no arguments to list variables in the caller’s scope. More usefully, it can also be called on object that has attributes, including imported modules and built-in types, as well as the name of a data type.

>>> import sys

>>> dir(sys)

['__displayhook__', ...more names omitted..., 'winver']Notice that you can list built-in type attributes by passing a type name to dir instead of a literal:

>>> dir(str) == dir('') # Same result, type name or literal True

>>> dir(list) == dir([])

TrueThis works because names like str and list that were once type converter functions are actually names of types in Python today; calling one of these invokes its constructor to generate an instance of that type.

3-4) Docstrings: doc

Besides # comments, Python supports documentation that is automatically attached to objects and retained at runtime for inspection. Syntactically, such comments are coded as strings at the tops of module files and function and class statements, before any other executable code (# comments, including Unix-stye #! lines are OK before them). Python automatically stuffs the text of these strings, known informally as docstrings, into the doc attributes of the corresponding objects.

3-4-1) User-defined docstrings

Its docstrings appear at the beginning of the file and at the start of a function and a class within it. Here, I’ve used triple-quoted block strings for multiline comments in the file and the function, but any sort of string will work; single- or double-quoted one-liners like those in the class are fine, but don’t allow multiple-line text. We haven’t studied the def or class statements in detail yet, so ignore everything about them here except the strings at their tops:

"""

Module documentation Words Go Here

"""

spam = 40

def square(x):

"""

function documentation

can we have your liver then?

"""

return x ** 2

class Employee:

"class documentation"

pass

print(square(4))

print(square.__doc__)The whole point of this documentation protocol is that your comments are retained for inspection in doc attributes after the file is imported.

>>> import docstrings

function documentation

can we have your liver then?

>>> print(docstrings.__doc__)

Module documentation

Words Go Here

>>> print(docstrings.square.__doc__)

function documentation

>>> print(docstrings.Employee.__doc__)

class documentation

3-4-2) Built-in docstrings

As it turns out, built-in modules and objects in Python use similar techniques to attach documentation above and beyond the attribute lists returned by dir. For example, to see an actual human-readable description of a built-in module, import it and print its doc string:

>>> import sys

>>> print(sys.__doc__)

This module provides access to some objects used or maintained by the interpreter and to functions that interact strongly with the interpreter.

Dynamic objects:

argv -- command line arguments; argv[0] is the script pathname if known path -- module search path; path[0] is the script directory, else '' modules -- dictionary of loaded modules

...more text omitted...Functions, classes, and methods within built-in modules have attached descriptions in their doc attributes as well:

>>> print(sys.getrefcount.__doc__) getrefcount(object) -> integer

Return the reference count of object. The count returned is generally one higher than you might expect, because it includes the (temporary) reference as an argument to getrefcount().3-5) PyDoc: The help Function

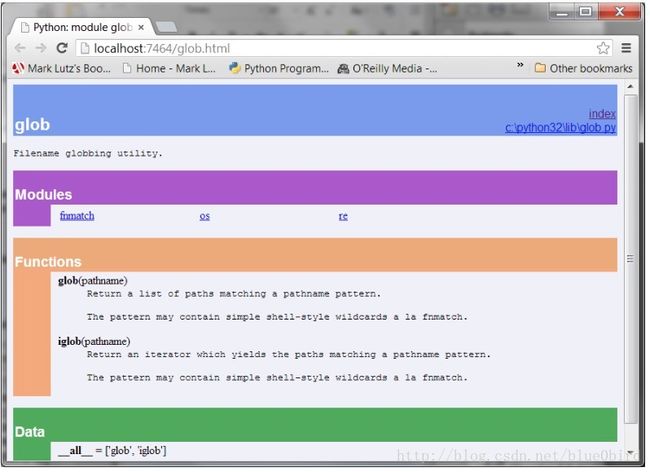

The docstring technique proved to be so useful that Python eventually added a tool that makes docstrings even easier to display. The standard PyDoc tool is Python code that knows how to extract docstrings and associated structural information and format them into nicely arranged reports of various types.

There are a variety of ways to launch PyDoc, including command-line script options that can save the resulting documentation for later viewing. Perhaps the two most prominent PyDoc interfaces are the built-in help function and the PyDoc GUI and web-based HTML report interfaces.

>>> import sys

>>> help(sys.getrefcount)

Help on built-in function getrefcount in module sys:

getrefcount(...)

getrefcount(object) -> integer

Return the reference count of object. The count returned is generally one higher than you might expect, because it includes the (temporary) reference as an argument to getrefcount().Note that you do not have to import sys in order to call help, but you do have to import sys to get help on sys this way; it expects an object reference to be passed in. In Pythons 3.3 and 2.7, you can get help for a module you have not imported by quoting the module’s name as a string – for example, help(‘re’), help(‘email.message’) – but support for this and other modes may differ across Python versions.

For larger objects such as modules and classes, the help display is break down into multiple sections, the preambles of which are shown here. Run this interactively to see the full report:

>>> help(sys)

Help on built-in module sys:

NAME

sys

MODULE REFERENCE

http://docs.python.org/3.3/library/sys

...more omitted...

DESCRIPTION

This module provides access to some objects used or maintained by the interpreter and to functions that interact strongly with the interpreter.

...more omitted...

FUNCTIONS

__displayhook__ = displayhook(...)

displayhook(object) -> None

...more omitted...

DATA

__stderr__ = <_io.TextIOWrapper name='' mode='w' encoding='cp4...

__stdin__ = <_io.TextIOWrapper name='<stdin>' mode='r' encoding='cp437...

__stdout__ = <_io.TextIOWrapper name='' mode='w' encoding='cp4... ...more omitted...

FILE

(built-in)Some of the information in this report is docstrings, and some of if (e.g., function call patterns) is structural information that PyDoc gleans automatically by inspecting objects’ internals, when available.

Besides modules, you can also use help on built-in functions, methods, and types. Usage varies slightly across Python versions, but to get help for a built-in type, try either the type name (e.g., dict for dictionary, str for string, list for list); an actual object of the type (e.g., {}, ”, []); or a method of an actual object or type name (e.g., str.join, ‘s’.join).

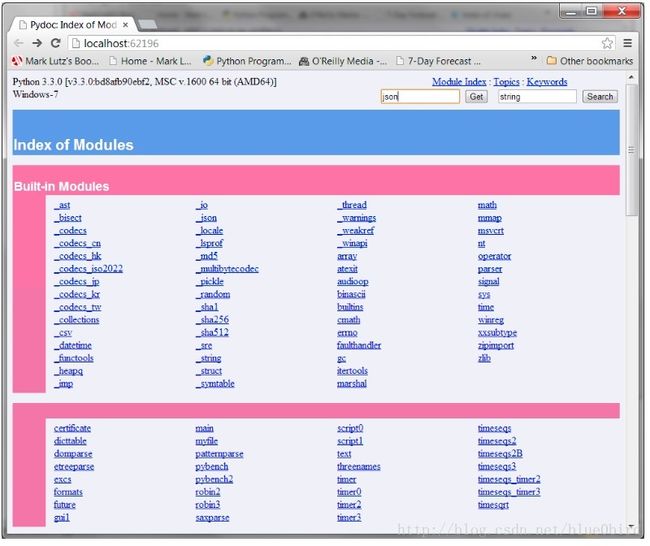

3-6) PyDoc: HTML Reports

The text displays of the help function are adequate in many contexts, especially at the interactive prompt. To readers who’ve grown accustomed to richer presentation mediums, though, they may seem a bit primitive. This section presents the HTML-based flavor of PyDoc, which renders module documentation more graphically for viewing in a web browser. and can even open one automatically for you. The way this is run has changed as of Python 3.3:

- Prior to 3.3, Python ships with a simple GUI desktop client for submitting search requests. This client launches a web browser to view documentation produced by an automatically started local server.

- As of 3.3, the former GUI client is replaced by an all-browser interface scheme, which combines both search and display in a web page that communicates with an automatically started local server.

3-6-1) Python 3.2 and later: PyDoc’s all-browser mode

As of Python 3.3 the original GUI client mode of PyDoc, present in 2.X and earlier 3.X releases, is no longer available. This mode is present through Python 3.2 with the “Module Docs” Start button entry on Windows 7 and earlier, and via the pydoc -g command line. This GUI mode was reportedly deprecated in 3.2, though you had to look closely to notice – it works fine and without warning on 3.2.

In 3.3, though, this mode goes away altogether, and is replaced with a pydoc -b command line, which instead spawns both a locally running documentation server, as well as a web browser that functions as both search engine client and page display.

To launch the newer browser-only mode of PyDoc in Python 3.2 and later, a command line like any of the following suffice: they all use the -m Python command-line argument for convenience to locate PyDoc’s module file on your module import search path.

c:\code> python -m pydoc -b

Server ready at http://localhost:62135/ Server commands: [b]rowser, [q]uit server> q

Server stopped

c:\code> py −3 -m pydoc -b

Server ready at http://localhost:62144/ Server commands: [b]rowser, [q]uit server> q

Server stopped

c:\code> C:\python33\python -m pydoc -b Server ready at http://localhost:62153/ Server commands: [b]rowser, [q]uit server> q

Server stopped

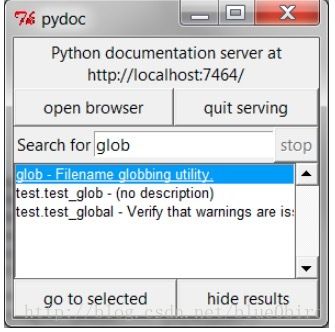

3-6-2) Python 3.2 and earlier: GUI client

c:\code> c:\python32\python -m pydoc -g # Explicit Python path

c:\code> py −3.2 -m pydoc -gOn Pythons 3.2 and 2.7, I had to add “.” to my PYTHONPATH to get PyDoc’s GUI client mode to look in the directory it was started from by command line:

c:\code> set PYTHONPATH=.;%PYTYONPATH%

c:\code> py −3.2 -m pydoc -g3-7) Beyond docstrings: Sphinx

If you’re looking for a way to document your Python system in a more sophisticated way, you may wish to check out Sphinx ((currently at http://sphinx-doc.org))

4) Functions and Generators

4-1) Function Basics

Functions are the alternative to programming by cutting and pasting – rather than having multiple redundant copies of an operation’s code, we can factor it into a single function. In so doing, we reduce our future work radically: if the operation must be changed later, we have only one copy to update in the function, not many scattered throughout the program.

4-2) Coding Functions

Here is a brief introduction to the main concepts behind Python functions:

- def is executable code. Python functions are written with a new statement, the def. Unlike functions in compiled languages such as C, def is an executable state- ment—your function does not exist until Python reaches and runs the def. In fact, it’s legal (and even occasionally useful) to nest def statements inside if statements, while loops, and even other defs. In typical operation, def statements are coded in module files and are naturally run to generate functions when the module file they reside in is first imported.

- def creates an object and assigns it to a name. When Python reaches and runs a def statement, it generates a new function object and assigns it to the function’s name. As with all assignments, the function name becomes a reference to the func- tion object. There’s nothing magic about the name of a function—as you’ll see, the function object can be assigned to other names, stored in a list, and so on. Function objects may also have arbitrary user-defined attributes attached to them to record data.

- lambda creates an object but returns it as a result. Function may also be created with the lambda expression, a feature that allows us to in-line function definitions in places where a def statement won’t work syntactically.

- return sends a result object back to the caller. When a function is called, the caller stops until the function finishes its work and returns control to the caller. Functions that compute a value send it back to the caller with a return statement; the returned value becomes the result of the function call. A return without a value simply returns to the caller (and sends back None, the default result).

- yield sends a result object back to the caller, but remembers where it left off. Functions known as generators may also use the yield statement to send back a value and suspend their state such that they may be resumed later, to produce a series of results over time.

- global declares module-level variables that are to be assigned. By default, all names assigned in a function are local to that function and exist only while the function runs. To assign a name in the enclosing module, functions need to list it in a global statement. More generally, names are always looked up in scopes— places where variables are stored—and assignments bind names to scopes.

- nonlocal declares enclosing function variables that are to be assigned. Simi- larly, the nonlocal statement added in Python 3.X allows a function to assign a name that exists in the scope of a syntactically enclosing def statement. This allows enclosing functions to serve as a place to retain state—information remembered between function calls—without using shared global names.

- Arguments are passed by assignment (object reference). In Python, arguments are passed to functions by assignment (which, as we’ve learned, means by object reference). As you’ll see, in Python’s model the caller and function share objects by references, but there is no name aliasing. Changing an argument name within a function does not also change the corresponding name in the caller, but changing passed-in mutable objects in place can change objects shared by the caller, and serve as a function result.

- Arguments are passed by position, unless you say otherwise. Values you pass in a function call match argument names in a function’s definition from left to right by default. For Flexibility, functions calls can also pass arguments by name with name=value keyword syntax, and unpack arbitrarily many arguments to send with *pargs and **kargs starred-arguments notation. Function definitions use the same two forms to specify argument defaults, and collect arbitrarily many arguments received.

- Arguments, return values, and variables are not declared. As with everything in Python, there are no type constraints on functions. In fact, nothing about a function needs to be declared ahead of time: you can pass in arguments of any type, return any kind of object, and so on. As one consequence, a single function can often be applied to a variety of object types—any objects that sport a compatible interface (methods and expressions) will do, regardless of their specific types.

4-2-1) def Statements

The def statement creates a function object and assigns it to a name. Its general format is as follows:

def name(arg1, arg2,... argN):

statements

def name(arg1, arg2,... argN):

...

return value4-2-2) def Executes at Runtime

if test:

def func(): # Define func this way

...

else:

def func(): # Or else this way

...

...

func() # Call the version selected and built

4-3) Scopes

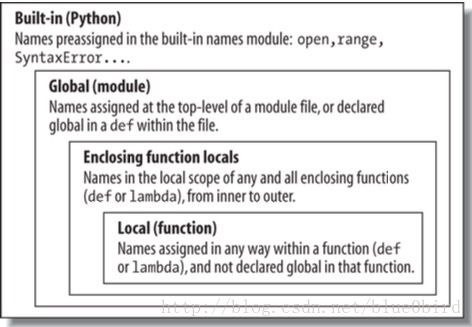

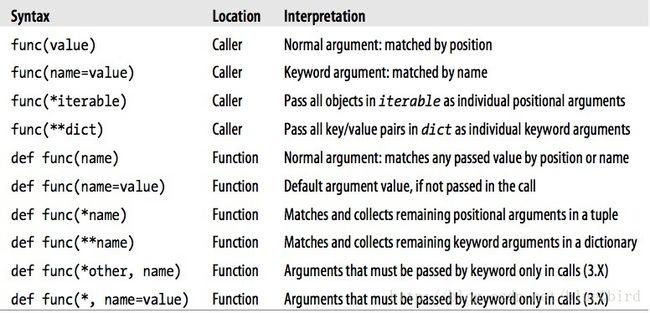

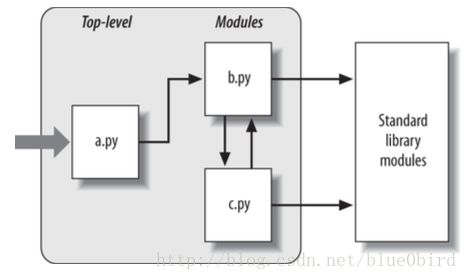

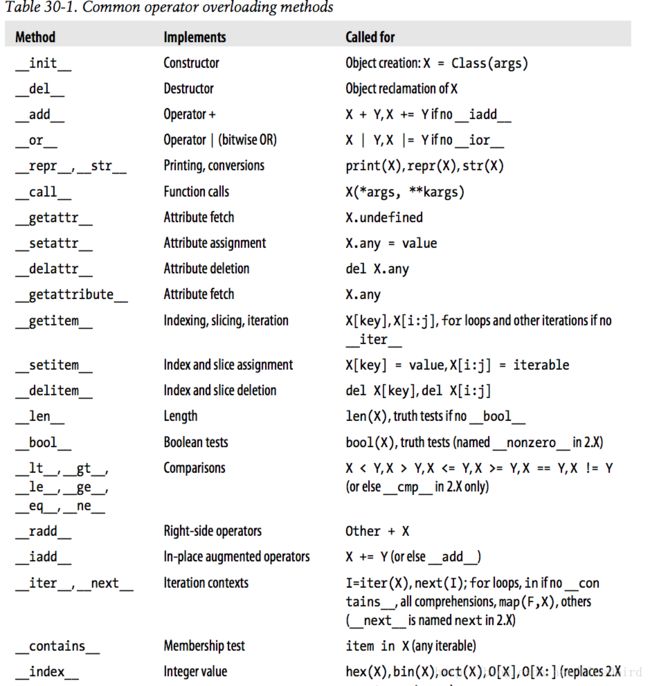

4-3-1) Python Scope Basics

Now that you’re ready to start writing your own functions, we need to get more formal about what names mean in Python. When you use a name in a program, Python creates, changes, or looks up the name in what is known as a namespace – a place where names live. When we talk about the search for a name’s value in relation to code, the term scope refers to a namespace: that is, the location of a name’s assignment in your source code determines the scope of the name’s visibility to your code.

Just about everything related to names, including scope classification, happens at assignment time in Python. As we’ve seen, names in Python spring into existence when they are first assigned values, and they must be assigned before they are used. Because names are not declared ahead of time, Python uses the location of the assignment of a name to associate it with a particular namespace. In other words, the place where you assign a name in your source code determines the namespace it will live in, and hence its scope of visibility.

Besides packaging code for reuse, functions add an extra namespace layer to your pro- grams to minimize the potential for collisions among variables of the same name—by default, all names assigned inside a function are associated with that function’s namespace, and no other. This rule means that:

- Namesassignedinsideadefcanonlybeseenbythecodewithinthatdef.You cannot even refer to such names from outside the function.

- Names assigned inside a def do not clash with variables outside the def, even if the same names are used elsewhere. A name X assigned outside a given def (i.e., in a different def or at the top level of a module file) is a completely different variable from a name X assigned inside that def.

4-3-1-1) Scope Details

Before we started writing functions, all the code we wrote was at the top level of a module (i.e., not nested in a def), so the names we used either lived in the module itself or were built-ins predefined by Python (e.g., open). Technically, the interactive prompt is a module named main that prints results and doesn’t save its code; in all other ways, though, it’s like the top level of a module file.

Functions, though, provide nested namespaces (scopes) that localize the names they use, such that names inside a function won’t clash with those outside it (in a module or another function). Functions define a local scope and modules define a global scope with the following properties:

The enclosing module is a global scope. Each module is a global scope—that is, a namespace in which variables created (assigned) at the top level of the module file live. Global variables become attributes of a module object to the outside world after imports but can also be used as simple variables within the module file itself.

The global scope spans a single file only. Don’t be fooled by the word“global” here—names at the top level of a file are global to code within that single file only. There is really no notion of a single, all-encompassing global file-based scope in Python. Instead, names are partitioned into modules, and you must always import a module explicitly if you want to be able to use the names its file defines. When you hear “global” in Python, think “module.”

Assigned names are local unless declared global or . nonlocal. By default, all the names assigned inside a function definition are put in the local scope (the namespace associated with the function call). If you need to assign a name that lives at the top level of the module enclosing the function, you can do so by declaring it in a global statement inside the function. If you need to assign a name that lives in an enclosing def, as of Python 3.X you can do so by declaring it in a nonlocal statement.

All other names are enclosing function locals, globals, or built-ins. Names not assigned a value in the function definition are assumed to be enclosing scope locals, defined in a physically surrounding def statement; globals that live in the enclosing module’s namespace; or built-ins in the predefined built-ins module Python provides.

Each call to a function creates a new local scope. Every time you call a function, you create a new local scope—that is, a namespace in which the names created inside that function will usually live. You can think of each def statement (and lambda expression) as defining a new local scope, but the local scope actually cor- responds to a function call. Because Python allows functions to call themselves to loop

4-3-1-2) Name Resolution: The LEGB Rule

If the prior section sounds confusing, it really boils down to three simple rules. With a def statement:

- Name assignment create or change local names by default.

- Name reference search at most four scope: local, then enclosing functions (if any), then global, then built-in.

- Names declared in global and nonlocal statement map assigned names to enclosing module and function scopes, respectively.

Python’s name-resolution scheme is sometimes called the LEGB rule, after the scope name:

When you use a unqualified name inside a function, Python searches up to four scope – then local (L) scope, then the local scopes of any enclosing (E) defs and lambdas, then the global (G) scope, and then the built-in (b) scope – and stops at the first place the name is found. If the name is not found during this search, Python report an error.

When you assign a name in function (instead of just referring to it in an expression), Python always creates or changes the name in the local scope, unless it’s declared to be global or nonlocal in that function.

##### 4-3-1-2-1) Other Python scopes: Preview

Though obscure at this point in the book, there are technically three more scopes in Python – temporary loop variables in some comprehensions, exception reference variables in some try handlers, and local scopes in class statements. The first two of these special cases that rarely impact real code, and the third falls under the LEGB umbrella rule.

Comprehension variables—the variable X used to refer to the current iteration item in a comprehension expression such as [X for X in I]. Because they might clash with other names and reflect internal state in generators, in 3.X, such variables are local to the expression itself in all comprehension forms: generator, list, set, and dictionary. In 2.X, they are local to generator expressions and set and dictionary compressions, but not to list comprehensions that map their names to the scope outside the expression. By contrast, for loop statements never localize their variables to the statement block in any Python.

Exception variables—the variable X used to reference the raised exception in a try statement handler clause such as except E as X. Because they might defer garbage collection’s memory recovery, in 3.X, such variables are local to that except block, and in fact are removed when the block is exited (even if you’ve used it earlier in your code!). In 2.X, these variables live on after the try statement.

4-3-2) Scope Example

# Global scope

X = 99 # X and func assigned in module: global

def func(Y): # Y and Z assigned in function: locals

# Local scope

Z = X + Y # X is a global

return Z

func(1) # func in module: result=1004-3-3) The Built-in Scope

The built-in scope is just a built-in module called builtins, but you have to import builtins to query built-ins because the name builtins is not itself built in…

No, I’m serious! The built-in scope is implemented as a standard library module named builtins in 3.X, but that name itself is not placed in the built-in scope, so you have to import it in order to inspect it. Once you do, you can run a dir call to see which names are predefined. In Python 3.3 (see ahead for 2.X usage):

>>> import builtins

>>> dir(builtins)

['ArithmeticError', 'AssertionError', 'AttributeError', 'BaseException', 'BlockingIOError', 'BrokenPipeError', 'BufferError', 'BytesWarning',

...many more names omitted...

'ord', 'pow', 'print', 'property', 'quit', 'range', 'repr', 'reversed', 'round', 'set', 'setattr', 'slice', 'sorted', 'staticmethod', 'str', 'sum', 'super', 'tuple', 'type', 'vars', 'zip']There are really two ways to refer to a built-in function—by taking advantage of the LEGB rule, or by manually importing the builtins module:

>>> zip # The normal way

<class 'zip'>

>>> import builtins # The hard way: for customizations

>>> builtins.zip

<class 'zip'>

>>> zip is builtins.zip # Same object, different lookups

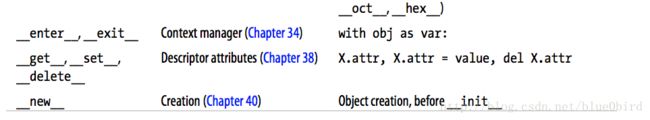

True4-3-4) Scopes and Nested Functions