Spark data skew problem

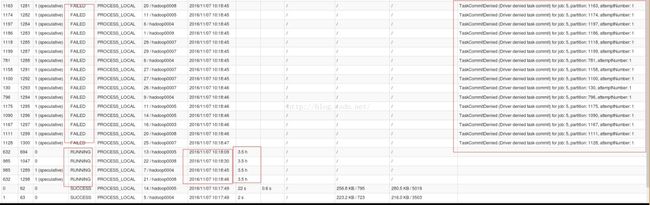

Let's start with two big log excerpts to set the scene:

The driver refuses to commit tasks:

16/11/07 10:31:50 INFO OutputCommitCoordinator: Task was denied committing, stage: 5, partition: 887, attempt: 1

16/11/07 10:33:11 INFO TaskSetManager: Finished task 632.1 in stage 5.0 (TID 1286) in 244369 ms on hadoop0009 (1199/1200)

16/11/07 10:36:35 WARN TaskSetManager: Lost task 632.0 in stage 5.0 (TID 695, hadoop0004): TaskCommitDenied (Driver denied task commit) for job: 5, partition: 632, attemptNumber: 0

The program then simply hangs, apparently dead:

16/11/07 10:31:50 INFO OutputCommitCoordinator: Task was denied committing, stage: 5, partition: 887, attempt: 1

16/11/07 10:33:11 INFO TaskSetManager: Finished task 632.1 in stage 5.0 (TID 1286) in 244369 ms on hadoop0009 (1199/1200)

16/11/07 10:36:35 WARN TaskSetManager: Lost task 632.0 in stage 5.0 (TID 695, hadoop0004): TaskCommitDenied (Driver denied task commit) for job: 5, partition: 632, attemptNumber: 0

16/11/07 10:36:35 INFO OutputCommitCoordinator: Task was denied committing, stage: 5, partition: 632, attempt: 0

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on 172.16.0.3:51318 in memory (size: 2.3 KB, free: 7.0 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0008:34591 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0003:53324 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0005:39567 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0004:55268 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0003:57855 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0009:39399 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0009:53846 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0004:59068 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0005:37489 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_9_piece0 on hadoop0007:60705 in memory (size: 2.3 KB, free: 8.4 GB)

16/11/07 10:38:59 INFO BlockManagerInfo: Removed broadcast_8_piece0 on 172.16.0.3:51318 in memory (size: 2.8 KB, free: 7.0 GB)

At first I kept my attention on the failed tasks and searched Baidu, but found no effective fix. Out of options, I went to the Spark source code:

/**
 * :: DeveloperApi ::
 * Task requested the driver to commit, but was denied.
 */
@DeveloperApi
case class TaskCommitDenied(
    jobID: Int,
    partitionID: Int,
    attemptNumber: Int) extends TaskFailedReason {
  override def toErrorString: String = s"TaskCommitDenied (Driver denied task commit)" +
    s" for job: $jobID, partition: $partitionID, attemptNumber: $attemptNumber"
  /**
   * If a task failed because its attempt to commit was denied, do not count this failure
   * towards failing the stage. This is intended to prevent spurious stage failures in cases
   * where many speculative tasks are launched and denied to commit.
   */
  override def countTowardsTaskFailures: Boolean = false
}

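The source code does not say explicitly what went wrong either. In fact, when you submit a Spark job, a few task failures during execution are perfectly normal. Right, that turned my thinking around. What prompted it was that a few tasks had run times measured in hours. Damn, that is blatant task skew, so I changed the job submission configuration: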

--conf spark.speculation=true \
--conf spark.speculation.interval=100 \
--conf spark.speculation.quantile=0.75 \
--conf spark.speculation.multiplier=1.5 \

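These settings mean that if a task is lagging behind, Spark runs the same task on another node and takes whichever attempt finishes first. But it did not help at all: the same few straggler tasks were still left. Next came code-level optimization. Task skew is mostly caused by data skew, so this was also the remedy of last resort. How to go about it?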
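Before moving on, a rough model of the speculation settings may show why they could not help here. The sketch below is a simplified reading of the documented meaning of `spark.speculation.quantile` and `spark.speculation.multiplier`, not Spark's actual scheduler code; the object and method names are hypothetical.

```scala
// Simplified model of the speculation rule; NOT Spark's real implementation.
object SpeculationSketch {
  /** Returns the indices of running tasks that would be considered for speculation. */
  def speculatable(
      finishedMs: Seq[Long],      // durations of tasks that already finished
      runningMs: Seq[Long],       // elapsed time of tasks still running
      quantile: Double = 0.75,    // fraction of tasks that must finish first
      multiplier: Double = 1.5    // how many times slower than the median
  ): Seq[Int] = {
    val total = finishedMs.size + runningMs.size
    // Speculation only starts once enough of the stage has completed.
    if (finishedMs.isEmpty || finishedMs.size < quantile * total) {
      Seq.empty
    } else {
      val sorted = finishedMs.sorted
      val median = sorted(sorted.size / 2)
      val threshold = multiplier * median
      // A running task is a candidate once it exceeds multiplier * median.
      runningMs.zipWithIndex.collect { case (ms, i) if ms > threshold => i }
    }
  }
}
```

A task stuck on a skewed partition will be flagged by this rule, but the speculative copy has to process the same oversized partition, so it is just as slow as the original attempt.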

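The monitoring UI showed that the operation in the last stage never finished, which narrowed things down to roughly the right piece of code: there is a sort, then a groupByKey followed by a left join, and then a step that compares the contents of the two sides of the left join. I used countByKey to print what came out of the groupByKey and saw what I was looking for. The data was indeed skewed: for some keys it reached 10000*1000000 in size, so the processing could never finish.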
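The key-count check can be sketched roughly as follows. The helpers take a `scala.collection.Map[K, Long]`, which is the type `countByKey()` returns on a pair RDD; the object name, the helper names, and the idea of a "skew ratio" are assumptions added for illustration, not part of the original job.

```scala
// Hypothetical helpers for inspecting per-key record counts.
object SkewCheck {
  /** Returns the n keys with the most records, heaviest first. */
  def heaviestKeys[K](counts: scala.collection.Map[K, Long], n: Int): Seq[(K, Long)] =
    counts.toSeq.sortBy { case (_, c) => -c }.take(n)

  /** Heaviest key's count divided by the mean count; far above 1 suggests skew. */
  def skewRatio[K](counts: scala.collection.Map[K, Long]): Double =
    if (counts.isEmpty) 0.0
    else counts.values.max.toDouble / (counts.values.sum.toDouble / counts.size)
}
```

With a pair RDD named `pairs` (hypothetical), this would be called as `SkewCheck.heaviestKeys(pairs.countByKey(), 10)`. Note that `countByKey()` brings the whole key-to-count map back to the driver, so it only suits data sets with a manageable number of distinct keys.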

The final fix, naturally, was to change the code and shorten the lists. Problem solved.
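The post does not say how the lists were shortened, so the following is only one possible approach: cap the number of values kept per key while the lists are being built. The object name, the function names, and the `max` parameter are hypothetical, and a cap is only acceptable when the later comparison does not need every value.

```scala
// One possible way to keep per-key lists short; an assumption, not the author's code.
object CappedLists {
  /** Adds one value to a key's list unless the list already holds max values. */
  def add[V](max: Int)(acc: Vector[V], v: V): Vector[V] =
    if (acc.size < max) acc :+ v else acc

  /** Merges two partial lists for the same key, keeping at most max values. */
  def merge[V](max: Int)(a: Vector[V], b: Vector[V]): Vector[V] =
    (a ++ b).take(max)

  /** Local stand-in for a per-key aggregation, using plain Scala collections. */
  def capPerKey[K, V](records: Seq[(K, V)], max: Int): Map[K, Vector[V]] =
    records.foldLeft(Map.empty[K, Vector[V]]) { case (m, (k, v)) =>
      m.updated(k, add[V](max)(m.getOrElse(k, Vector.empty[V]), v))
    }
}
```

`add` and `merge` have the shapes that `aggregateByKey` expects for its per-partition and cross-partition functions, so on a pair RDD they could replace a `groupByKey` followed by truncation, and the oversized lists would never be materialized in the first place.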

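To summarize:

1. Monitoring matters, because it can tell you directly where the problem is.

2. A problem that Baidu cannot solve is, for the most part, a problem of your own making.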
