Docker Containers and Virtualization: Kubernetes Explained, k8s Deployment via Binaries

Kubernetes Explained: Deploying k8s from Binaries

11. Deploying a highly available Kubernetes cluster from binaries

1. Environment setup (all machines)

(1) hosts file

echo "192.168.11.151 kube-node1" >>/etc/hosts

echo "192.168.11.152 kube-node2" >>/etc/hosts

echo "192.168.11.153 kube-node3" >>/etc/hosts

(2) Add the k8s and docker accounts and parameters

useradd -m k8s

sh -c 'echo 111111 | passwd k8s --stdin'

gpasswd -a k8s wheel

useradd -m docker

gpasswd -a k8s docker

mkdir -p /etc/docker/

cat > /etc/docker/daemon.json <

Modify the sudo configuration to allow passwordless sudo:

visudo

%wheel ALL=(ALL) NOPASSWD: ALL

(3) Passwordless SSH login to the other nodes (run on node 1)

ssh-keygen -t rsa

ssh-copy-id root@kube-node2

ssh-copy-id root@kube-node1

ssh-copy-id root@kube-node3

ssh-copy-id k8s@kube-node1

ssh-copy-id k8s@kube-node2

ssh-copy-id k8s@kube-node3

(4) Add the executable directory /opt/k8s/bin to the PATH variable

mkdir -p /opt/k8s/bin/

sh -c "echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>/root/.bashrc"

echo 'PATH=/opt/k8s/bin:$PATH:$HOME/bin:$JAVA_HOME/bin' >>~/.bashrc

(5) Install dependency packages

cd /etc/yum.repos.d/

rm -rf Centos-7.repo

rm -rf epel-7.repo

rm -rf docker*

mkdir bak

mv CentOS-* bak/

wget http://mirrors.aliyun.com/repo/Centos-7.repo

wget http://mirrors.aliyun.com/repo/epel-7.repo

wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y epel-release

yum install -y conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

(6) Disable the firewall, SELinux and swap

systemctl stop firewalld

systemctl disable firewalld

iptables -F && sudo iptables -X && sudo iptables -F -t nat && sudo iptables -X -t nat

iptables -P FORWARD ACCEPT

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
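The `sed` expression above comments out every `/etc/fstab` line that contains ` swap `. A minimal sketch for trying it on a scratch copy before touching the real file; `comment_swap` is a hypothetical helper name and the fstab entries are made up for illustration.

```shell
#!/usr/bin/env bash
# comment_swap: hypothetical helper wrapping the guide's sed expression.
# It prefixes every line containing " swap " with '#', editing the file in place.
comment_swap() {
  sed -i '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

# Scratch fstab with made-up entries (not taken from a real host).
tmp_fstab=$(mktemp)
cat > "$tmp_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs   defaults 0 0
/dev/mapper/centos-swap swap  swap  defaults 0 0
EOF

comment_swap "$tmp_fstab"
cat "$tmp_fstab"
```

The expression is not idempotent: running it a second time prepends another `#` to the already commented line, which is harmless but untidy.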

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

(7) Disable dnsmasq

service dnsmasq stop

systemctl disable dnsmasq

(8) Set system parameters

cat > kubernetes.conf <

(9) Load kernel modules

modprobe br_netfilter

modprobe ip_vs

(10) Set the system time zone

timedatectl set-timezone Asia/Shanghai

timedatectl set-local-rtc 0

systemctl restart rsyslog

systemctl restart crond

(11) Create directories

mkdir -p /opt/k8s/bin

chown -R k8s /opt/k8s

mkdir -p /etc/kubernetes/cert

chown -R k8s /etc/kubernetes

mkdir -p /etc/etcd/cert

chown -R k8s /etc/etcd/cert

mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd

(12) Cluster environment variables

cat > environment.sh <

(13) Distribute the cluster environment variable script

source environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp environment.sh k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

2. Create the CA certificate and keys

(1)安装cfssl 工具集

mkdir -p /opt/k8s/cert && chown -R k8s /opt/k8s && cd /opt/k8s

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

mv cfssl_linux-amd64 /opt/k8s/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

mv cfssljson_linux-amd64 /opt/k8s/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /opt/k8s/bin/cfssl-certinfo

chmod +x /opt/k8s/bin/*

export PATH=/opt/k8s/bin:$PATH

(2) Create the root certificate (CA)

cat > ca-config.json < ca-csr.json <

(3) Distribute the certificates

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert && chown -R k8s /etc/kubernetes"

scp ca*.pem ca-config.json k8s@${node_ip}:/etc/kubernetes/cert

done

3. Deploy the kubectl command-line tool

(1) Download and distribute the kubectl binary

wget https://dl.k8s.io/v1.10.4/kubernetes-client-linux-amd64.tar.gz

tar -xzvf kubernetes-client-linux-amd64.tar.gz

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kubernetes/client/bin/kubectl root@${node_ip}:/opt/k8s/bin/

ssh root@${node_ip} "chmod +x /opt/k8s/bin/*"

done

(2) Create the admin certificate and private key

cat > admin-csr.json <

(3) Create the kubeconfig file

source /opt/k8s/bin/environment.sh

# Set cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubectl.kubeconfig

# Set client authentication parameters

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=kubectl.kubeconfig

# Set context parameters

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin \

--kubeconfig=kubectl.kubeconfig

# Set the default context

kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig k8s@${node_ip}:~/.kube/config

ssh root@${node_ip} "mkdir -p ~/.kube"

scp kubectl.kubeconfig root@${node_ip}:~/.kube/config

done

4. Deploy the etcd cluster

(1) Download and distribute

wget https://github.com/coreos/etcd/releases/download/v3.3.7/etcd-v3.3.7-linux-amd64.tar.gz

tar -xvf etcd-v3.3.7-linux-amd64.tar.gz

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp etcd-v3.3.7-linux-amd64/etcd* k8s@${node_ip}:/opt/k8s/bin

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

(2) Create the etcd certificate and private key

cat > etcd-csr.json <>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/etcd/cert && chown -R k8s /etc/etcd/cert"

scp etcd*.pem k8s@${node_ip}:/etc/etcd/cert/

done

(3) Create the etcd service template file

mkdir -p /var/lib/etcd

cat > etcd.service.template <

(4) Create and distribute the service files

source /opt/k8s/bin/environment.sh

for (( i=0; i < 3; i++ ))

do

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" etcd.service.template > etcd-${NODE_IPS[i]}.service

done

ls *.service
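The loop above fills the `##NODE_NAME##` and `##NODE_IP##` placeholders by pairing `NODE_NAMES[i]` with `NODE_IPS[i]`. A self-contained sketch of the same idea; because the contents of environment.sh are not shown in this guide, the two arrays below are assumptions built from the /etc/hosts entries in step 1, and the two-line template is a toy, not the real etcd unit.

```shell
#!/usr/bin/env bash
# Assumed stand-ins for the arrays environment.sh is expected to define
# (values taken from the /etc/hosts entries in step 1).
NODE_NAMES=(kube-node1 kube-node2 kube-node3)
NODE_IPS=(192.168.11.151 192.168.11.152 192.168.11.153)

workdir=$(mktemp -d)

# Toy template using the same placeholder syntax as etcd.service.template.
cat > "$workdir/demo.service.template" <<'EOF'
--name=##NODE_NAME##
--listen-client-urls=https://##NODE_IP##:2379
EOF

# Render one file per node; the same index i selects the name and the IP.
for (( i=0; i < ${#NODE_IPS[@]}; i++ )); do
  sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" \
      -e "s/##NODE_IP##/${NODE_IPS[i]}/" \
      "$workdir/demo.service.template" > "$workdir/demo-${NODE_IPS[i]}.service"
done

ls "$workdir"
```

Taking the loop bound from `${#NODE_IPS[@]}` instead of the hard-coded `3` keeps the loop correct if a node is added. Note that `s/.../.../` without the `g` flag replaces only the first placeholder on each line.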

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/etcd && chown -R k8s /var/lib/etcd"

scp etcd-${node_ip}.service root@${node_ip}:/etc/systemd/system/etcd.service

done

(5) Start the etcd service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd &"

done

(6) Check the startup result

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status etcd|grep Active"

done

(7) Verify the service status

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ETCDCTL_API=3 /opt/k8s/bin/etcdctl \

--endpoints=https://${node_ip}:2379 \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem endpoint health;

done

5. Deploy the flannel network

(1) Download and distribute

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

mkdir flannel

tar -xzvf flannel-v0.10.0-linux-amd64.tar.gz -C flannel

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flannel/{flanneld,mk-docker-opts.sh} k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

(2) Create the flannel certificate and private key

cat > flanneld-csr.json <>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/flanneld/cert && chown -R k8s /etc/flanneld"

scp flanneld*.pem k8s@${node_ip}:/etc/flanneld/cert

done

(3) Write the cluster Pod network configuration into etcd (run only once)

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

set ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 24, "Backend": {"Type": "vxlan"}}'

(4) Create the flanneld service file

source /opt/k8s/bin/environment.sh

export IFACE=ens33

cat > flanneld.service << EOF

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/opt/k8s/bin/flanneld \\

-etcd-cafile=/etc/kubernetes/cert/ca.pem \\

-etcd-certfile=/etc/flanneld/cert/flanneld.pem \\

-etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \\

-etcd-endpoints=${ETCD_ENDPOINTS} \\

-etcd-prefix=${FLANNEL_ETCD_PREFIX} \\

-iface=${IFACE}

ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF
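The unit file above is written through an unquoted heredoc (`<< EOF`), so the shell expands `${ETCD_ENDPOINTS}` and the other variables at generation time and turns each `\\` into the single `\` that systemd uses for line continuation. The docker unit later in this guide uses a quoted delimiter (`<<"EOF"`) instead, which keeps `$DOCKER_NETWORK_OPTIONS` literal so that systemd expands it from the EnvironmentFile. A small sketch of the difference; the variable values are hypothetical placeholders, not the ones from environment.sh.

```shell
#!/usr/bin/env bash
# Hypothetical placeholder values; the real ones come from environment.sh.
ETCD_ENDPOINTS="https://192.168.11.151:2379"
IFACE=ens33

unquoted=$(mktemp)
quoted=$(mktemp)

# Unquoted delimiter: ${VAR} is expanded and "\\" becomes a single "\".
cat > "$unquoted" << EOF
ExecStart=/opt/k8s/bin/flanneld \\
  -etcd-endpoints=${ETCD_ENDPOINTS} \\
  -iface=${IFACE}
EOF

# Quoted delimiter: the body is copied verbatim, so systemd sees the variable name.
cat > "$quoted" << "EOF"
ExecStart=/opt/k8s/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF

cat "$unquoted" "$quoted"
```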

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp flanneld.service root@${node_ip}:/etc/systemd/system/

done

(5) Start the service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable flanneld && systemctl restart flanneld"

done

(6) Check and verify

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status flanneld|grep Active"

done

# View the Pod network configuration used by flanneld

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/config

# List the allocated Pod subnets (/24):

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

ls ${FLANNEL_ETCD_PREFIX}/subnets

# Show the node IP and flannel interface address for one Pod subnet:

source /opt/k8s/bin/environment.sh

etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--ca-file=/etc/kubernetes/cert/ca.pem \

--cert-file=/etc/flanneld/cert/flanneld.pem \

--key-file=/etc/flanneld/cert/flanneld-key.pem \

get ${FLANNEL_ETCD_PREFIX}/subnets/172.30.81.0-24
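Each lease that flanneld registers in etcd is stored under `${FLANNEL_ETCD_PREFIX}/subnets/`, with the CIDR's `/` written as `-` (so `172.30.81.0-24` above stands for `172.30.81.0/24`). A sketch of two hypothetical helpers that convert between the key name and CIDR notation, for example when scripting the `get` call for each subnet returned by `ls`; the prefix in the demo call is made up.

```shell
#!/usr/bin/env bash
# subnet_key_to_cidr: hypothetical helper,
#   ".../subnets/172.30.81.0-24" -> "172.30.81.0/24"
subnet_key_to_cidr() {
  local key=${1##*/}                      # keep only the last path component
  printf '%s\n' "${key%-*}/${key##*-}"    # "<net>-<len>" -> "<net>/<len>"
}

# cidr_to_subnet_key: hypothetical helper, "172.30.81.0/24" -> "172.30.81.0-24"
cidr_to_subnet_key() {
  printf '%s\n' "${1/\//-}"               # replace the first "/" with "-"
}

subnet_key_to_cidr /hypothetical-prefix/subnets/172.30.81.0-24
cidr_to_subnet_key 172.30.81.0/24
```

For example, the last argument of the command above could be written as `get ${FLANNEL_ETCD_PREFIX}/subnets/$(cidr_to_subnet_key 172.30.81.0/24)`.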

# Verify that the nodes can reach each other over the Pod network: first show each node's flannel.1 address

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1|grep -w inet"

done
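The next step pings hard-coded flannel addresses (172.30.6.0, 172.30.35.0, 172.30.88.0), which will differ on every cluster. A sketch of pulling the addresses out of the `ip addr show flannel.1` output instead; `inet_addrs` is a hypothetical helper, and the sample input only mimics the format of `ip` output rather than being captured from this cluster.

```shell
#!/usr/bin/env bash
# inet_addrs: hypothetical helper that prints the bare IPv4 address from each
# "inet a.b.c.d/len ..." line read on stdin ("inet6" lines are ignored).
inet_addrs() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1] }'
}

# Sample input mimicking "ip addr show flannel.1 | grep -w inet" on three nodes.
sample='    inet 172.30.6.0/32 scope global flannel.1
    inet 172.30.35.0/32 scope global flannel.1
    inet 172.30.88.0/32 scope global flannel.1'
printf '%s\n' "$sample" | inet_addrs

# On the cluster (sketch, not run here): collect the addresses first, then ping.
#   ips=$(for node_ip in ${NODE_IPS[@]}; do
#           ssh ${node_ip} "/usr/sbin/ip addr show flannel.1"
#         done | inet_addrs)
#   for node_ip in ${NODE_IPS[@]}; do
#     for ip in ${ips}; do ssh ${node_ip} "ping -c 1 ${ip}"; done
#   done
```

Collecting the list into a variable before the ping loop avoids feeding it to `ssh` through a pipe, where `ssh` would consume the remaining lines from stdin.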

# From each node, ping every flannel interface IP to make sure it is reachable:

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "ping -c 1 172.30.6.0"

ssh ${node_ip} "ping -c 1 172.30.35.0"

ssh ${node_ip} "ping -c 1 172.30.88.0"

done

6. Deploy the master nodes

(1) Download and distribute the binaries

wget https://dl.k8s.io/v1.10.4/kubernetes-server-linux-amd64.tar.gz

tar -xzvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes

tar -xzvf kubernetes-src.tar.gz

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp server/bin/* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

(2) Deploy haproxy and keepalived for Kubernetes

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "yum install -y keepalived haproxy"

done

# Configuration file

cat > haproxy.cfg <>> ${node_ip}"

scp haproxy.cfg root@${node_ip}:/etc/haproxy

done

# Start the service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl restart haproxy && systemctl enable haproxy"

done

# Check the haproxy service status

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status haproxy|grep Active"

done

# Check that haproxy is listening on port 8443:

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "netstat -lnpt|grep haproxy"

done

# Configure and distribute the keepalived configuration files

Master configuration file:

source /opt/k8s/bin/environment.sh

cat > keepalived-master.conf < keepalived-backup.conf <>> ${node_ip}"

ssh root@${node_ip} "systemctl restart keepalived && systemctl enable keepalived"

done

# Check

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status keepalived|grep Active"

done

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh ${node_ip} "/usr/sbin/ip addr show ${VIP_IF}"

ssh ${node_ip} "ping -c 1 ${MASTER_VIP}"

done

# View the haproxy status page

http://192.168.11.159:10080/status

Username: admin

Password: 123456

(3) Deploy the kube-apiserver component

# Create the kubernetes certificate and private key

source /opt/k8s/bin/environment.sh

cat > kubernetes-csr.json <>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/kubernetes/cert/ && sudo chown -R k8s /etc/kubernetes/cert/"

scp kubernetes*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

# Create the encryption configuration file

source /opt/k8s/bin/environment.sh

cat > encryption-config.yaml <>> ${node_ip}"

scp encryption-config.yaml root@${node_ip}:/etc/kubernetes/

done

# Create the kube-apiserver service file

source /opt/k8s/bin/environment.sh

cat > kube-apiserver.service.template < kube-apiserver-${NODE_IPS[i]}.service

done

ls kube-apiserver*.service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

scp kube-apiserver-${node_ip}.service root@${node_ip}:/etc/systemd/system/kube-apiserver.service

done

# Start kube-apiserver

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-apiserver && systemctl restart kube-apiserver"

done

# Check

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl status kube-apiserver |grep 'Active:'"

done

# Print the data kube-apiserver has written to etcd

source /opt/k8s/bin/environment.sh

ETCDCTL_API=3 etcdctl \

--endpoints=${ETCD_ENDPOINTS} \

--cacert=/etc/kubernetes/cert/ca.pem \

--cert=/etc/etcd/cert/etcd.pem \

--key=/etc/etcd/cert/etcd-key.pem \

get /registry/ --prefix --keys-only
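`--keys-only` lists every key under `/registry/`, which quickly gets long. A sketch that groups the keys by the path segment right after `/registry/` (roughly the resource type) and counts them; `count_registry_keys` is a hypothetical helper, and the sample keys are illustrative rather than output captured from this cluster. Depending on the etcdctl version, keys may be separated by blank lines, which the filter skips.

```shell
#!/usr/bin/env bash
# count_registry_keys: hypothetical helper. Reads etcd keys on stdin and prints
# "<segment> <count>" for the segment after /registry/, sorted by segment name.
count_registry_keys() {
  awk -F/ '$2 == "registry" && NF > 2 { n[$3]++ }
           END { for (r in n) print r, n[r] }' | sort
}

# Illustrative keys in the shape returned by "get /registry/ --prefix --keys-only".
sample_keys='/registry/namespaces/default

/registry/namespaces/kube-system

/registry/services/specs/default/kubernetes

/registry/apiregistration.k8s.io/apiservices/v1.'
printf '%s\n' "$sample_keys" | count_registry_keys
```

The `sort` is needed because awk does not guarantee any iteration order for `for (r in n)`.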

# Check cluster information

kubectl cluster-info

kubectl get all --all-namespaces

kubectl get componentstatuses

# Check the ports kube-apiserver listens on

netstat -lnpt|grep kube

# Grant the kubernetes certificate permission to access the kubelet API

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

(4) Deploy a highly available kube-controller-manager cluster

# Create the kube-controller-manager certificate and private key

cat > kube-controller-manager-csr.json <>> ${node_ip}"

scp kube-controller-manager*.pem k8s@${node_ip}:/etc/kubernetes/cert/

done

# Create and distribute the kubeconfig file

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-controller-manager.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

# Create and distribute the kube-controller-manager systemd unit file

source /opt/k8s/bin/environment.sh

cat > kube-controller-manager.service <>> ${node_ip}"

scp kube-controller-manager.service root@${node_ip}:/etc/systemd/system/

done

# Start the kube-controller-manager service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

done

# Check

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-controller-manager|grep Active"

done

# View the exported metrics

netstat -lnpt|grep kube-controll

curl -s --cacert /etc/kubernetes/cert/ca.pem https://127.0.0.1:10252/metrics |head

# View the current leader

kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

(5) Deploy a highly available kube-scheduler cluster

# Create the kube-scheduler certificate and private key

cat > kube-scheduler-csr.json <

# Create and distribute the kubeconfig file

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp kube-scheduler.kubeconfig k8s@${node_ip}:/etc/kubernetes/

done

# Create and distribute the kube-scheduler systemd unit file

cat > kube-scheduler.service <>> ${node_ip}"

scp kube-scheduler.service root@${node_ip}:/etc/systemd/system/

done

# Start the service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-scheduler && systemctl restart kube-scheduler"

done

# Check

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-scheduler|grep Active"

done

netstat -lnpt|grep kube-sche

curl -s https://127.0.0.1:10251/metrics |head

# View the current leader

kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

7. Deploy the worker nodes

(1) Deploy docker

# Download and distribute

wget https://download.docker.com/linux/static/stable/x86_64/docker-18.03.1-ce.tgz

tar -xvf docker-18.03.1-ce.tgz

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker/docker* k8s@${node_ip}:/opt/k8s/bin/

ssh k8s@${node_ip} "chmod +x /opt/k8s/bin/*"

done

# Create and distribute the systemd unit file

cat > docker.service <<"EOF"

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.io

[Service]

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/opt/k8s/bin/dockerd --log-level=error $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

scp docker.service root@${node_ip}:/etc/systemd/system/

done

# Configure and distribute the docker configuration file

cat > docker-daemon.json <>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /etc/docker/"

scp docker-daemon.json root@${node_ip}:/etc/docker/daemon.json

done

# Start the docker service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "systemctl stop firewalld && systemctl disable firewalld"

ssh root@${node_ip} "/usr/sbin/iptables -F && /usr/sbin/iptables -X && /usr/sbin/iptables -F -t nat && /usr/sbin/iptables -X -t nat"

ssh root@${node_ip} "/usr/sbin/iptables -P FORWARD ACCEPT"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable docker && systemctl restart docker"

ssh root@${node_ip} 'for intf in /sys/devices/virtual/net/docker0/brif/*; do echo 1 > $intf/hairpin_mode; done'

ssh root@${node_ip} "sudo sysctl -p /etc/sysctl.d/kubernetes.conf"

done

# Check the service status

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status docker|grep Active"

done

# Check the docker0 bridge

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "/usr/sbin/ip addr show flannel.1 && /usr/sbin/ip addr show docker0"

done

(2) Deploy kubelet

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

# Create a token

export BOOTSTRAP_TOKEN=$(kubeadm token create \

--description kubelet-bootstrap-token \

--groups system:bootstrappers:${node_name} \

--kubeconfig ~/.kube/config)

# Set cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set client authentication parameters

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kubelet-bootstrap-${node_name}.kubeconfig

done

# List the tokens kubeadm created for each node:

kubeadm token list --kubeconfig ~/.kube/config

# Secrets associated with each token

kubectl get secrets -n kube-system
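Bootstrap tokens printed by `kubeadm token create` have the form `<token-id>.<token-secret>`, 6 and 16 characters from `[a-z0-9]`, and each one is backed by a Secret named `bootstrap-token-<token-id>` in `kube-system`, which is what the `kubectl get secrets` listing above shows. A sketch of a hypothetical helper that validates a token and prints the name of its Secret; the token in the demo is a dummy value.

```shell
#!/usr/bin/env bash
# token_secret_name: hypothetical helper. Checks the bootstrap token format
# and prints the name of the Secret that backs it.
token_secret_name() {
  local token=$1
  if [[ ! $token =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "not a bootstrap token: ${token}" >&2
    return 1
  fi
  printf 'bootstrap-token-%s\n' "${token%%.*}"   # token ID = part before the dot
}

token_secret_name abcdef.0123456789abcdef   # dummy token, not a real credential
```

On the cluster this could be used as, for example, `kubectl get secret -n kube-system "$(token_secret_name "${BOOTSTRAP_TOKEN}")"`.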

Distribute the bootstrap kubeconfig files to all worker nodes

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kubelet-bootstrap-${node_name}.kubeconfig k8s@${node_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig

done

# Create and distribute the kubelet parameter configuration file

source /opt/k8s/bin/environment.sh

cat > kubelet.config.json.template <>> ${node_ip}"

sed -e "s/##NODE_IP##/${node_ip}/" kubelet.config.json.template > kubelet.config-${node_ip}.json

scp kubelet.config-${node_ip}.json root@${node_ip}:/etc/kubernetes/kubelet.config.json

done

# Create and distribute the kubelet systemd unit file

cat > kubelet.service.template <>> ${node_name}"

sed -e "s/##NODE_NAME##/${node_name}/" kubelet.service.template > kubelet-${node_name}.service

scp kubelet-${node_name}.service root@${node_name}:/etc/systemd/system/kubelet.service

done

Bootstrap token authentication and granting permissions

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

# Start the kubelet service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kubelet"

ssh root@${node_ip} "/usr/sbin/swapoff -a"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kubelet && systemctl restart kubelet"

done

# Automatically approve CSR requests

cat > csr-crb.yaml <

# Create token authentication and authorization:

kubectl create sa kubelet-api-test

kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test

SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')

TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')

echo ${TOKEN}

curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://192.168.11.151:10250/metrics|head

source /opt/k8s/bin/environment.sh

# Use the admin certificate (highest privileges) created when deploying the kubectl command-line tool

curl -sSL --cacert /etc/kubernetes/cert/ca.pem --cert ./admin.pem --key ./admin-key.pem ${KUBE_APISERVER}/api/v1/nodes/kube-node1/proxy/configz | jq \

'.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"'

# Web page

http://192.168.11.151:4194/containers/

(3) Deploy kube-proxy

# Create the kube-proxy certificate

cat > kube-proxy-csr.json <

# Create and distribute the kubeconfig file

source /opt/k8s/bin/environment.sh

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

source /opt/k8s/bin/environment.sh

for node_name in ${NODE_NAMES[@]}

do

echo ">>> ${node_name}"

scp kube-proxy.kubeconfig k8s@${node_name}:/etc/kubernetes/

done

# Create the kube-proxy configuration file

cat >kube-proxy.config.yaml.template <>> ${NODE_NAMES[i]}"

sed -e "s/##NODE_NAME##/${NODE_NAMES[i]}/" -e "s/##NODE_IP##/${NODE_IPS[i]}/" kube-proxy.config.yaml.template > kube-proxy-${NODE_NAMES[i]}.config.yaml

scp kube-proxy-${NODE_NAMES[i]}.config.yaml root@${NODE_NAMES[i]}:/etc/kubernetes/kube-proxy.config.yaml

done

# Create and distribute the kube-proxy systemd unit file

source /opt/k8s/bin/environment.sh

cat > kube-proxy.service <>> ${node_name}"

scp kube-proxy.service root@${node_name}:/etc/systemd/system/

done

# Start the kube-proxy service

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "mkdir -p /var/lib/kube-proxy"

ssh root@${node_ip} "mkdir -p /var/log/kubernetes && chown -R k8s /var/log/kubernetes"

ssh root@${node_ip} "systemctl daemon-reload && systemctl enable kube-proxy && systemctl restart kube-proxy"

done

# Check the startup result

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh k8s@${node_ip} "systemctl status kube-proxy|grep Active"

done

# Check the IPVS forwarding rules

source /opt/k8s/bin/environment.sh

for node_ip in ${NODE_IPS[@]}

do

echo ">>> ${node_ip}"

ssh root@${node_ip} "/usr/sbin/ipvsadm -ln"

done

8. Verify the cluster

kubectl get nodes
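`kubectl get nodes` should list every worker as `Ready`. When scripting this check, a sketch like the following prints the nodes whose STATUS column is anything other than exactly `Ready`; `not_ready_nodes` is a hypothetical helper, and the sample rows are made up in the column layout NAME STATUS ROLES AGE VERSION.

```shell
#!/usr/bin/env bash
# not_ready_nodes: hypothetical helper. Expects "kubectl get nodes --no-headers"
# rows on stdin and prints the NAME of every node whose STATUS is not "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Made-up sample rows (NAME STATUS ROLES AGE VERSION).
sample='kube-node1   Ready      <none>   5m   v1.10.4
kube-node2   NotReady   <none>   5m   v1.10.4
kube-node3   Ready      <none>   5m   v1.10.4'
printf '%s\n' "$sample" | not_ready_nodes

# On the cluster (sketch, not run here): wait until every node is Ready.
#   until [ -z "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do sleep 5; done
```

A cordoned node shows `Ready,SchedulingDisabled` and is therefore also reported, which is usually what a post-install check wants.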

9. Deploy the coredns add-on

(1) Unpack

mkdir -p /opt/k8s/kubernetes/

tar xf kubernetes-src.tar.gz -C /opt/k8s/kubernetes/

cd /opt/k8s/kubernetes/cluster/addons/dns

cp coredns.yaml.base coredns.yaml

(2) Modify the configuration

vim coredns.yaml

Line 61: kubernetes cluster.local. in-addr.arpa ip6.arpa {

Line 153: clusterIP: 10.254.0.2
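Instead of editing lines 61 and 153 by hand in vim, the placeholders can be substituted with sed. This sketch assumes that coredns.yaml.base in this Kubernetes release marks those spots with `__PILLAR__DNS__DOMAIN__` and `__PILLAR__DNS__SERVER__`; confirm with `grep -n __PILLAR__ coredns.yaml.base` before relying on it. The demo runs on a two-line stand-in file, and `render_coredns` is a hypothetical helper. The IP used here should match the cluster DNS address configured for kubelet.

```shell
#!/usr/bin/env bash
# render_coredns: hypothetical helper.
#   $1 = template (e.g. coredns.yaml.base)   $2 = output file
#   $3 = cluster domain                       $4 = DNS Service clusterIP
render_coredns() {
  sed -e "s/__PILLAR__DNS__DOMAIN__/$3/g" \
      -e "s/__PILLAR__DNS__SERVER__/$4/g" "$1" > "$2"
}

# Two-line stand-in for the real template (placeholder names are assumed).
workdir=$(mktemp -d)
cat > "$workdir/coredns.yaml.base" <<'EOF'
        kubernetes __PILLAR__DNS__DOMAIN__ in-addr.arpa ip6.arpa {
  clusterIP: __PILLAR__DNS__SERVER__
EOF

render_coredns "$workdir/coredns.yaml.base" "$workdir/coredns.yaml" cluster.local. 10.254.0.2
cat "$workdir/coredns.yaml"
```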

(3) Create coredns

kubectl create -f coredns.yaml

(4) Check that coredns is working

kubectl get all -n kube-system

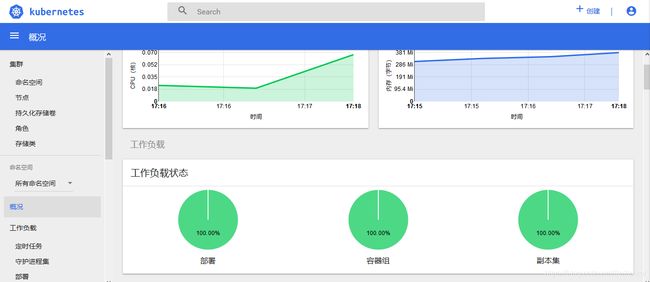

10. Deploy the dashboard

(1) Unpack

mkdir -p /opt/k8s/kubernetes/cluster/addons/dashboard

tar xf kubernetes-src.tar.gz -C /opt/k8s/kubernetes/

cd /opt/k8s/kubernetes/cluster/addons/dashboard

(2) Modify the yaml files and create the resources

sed -i 's/k8s.gcr.io/siriuszg/' dashboard-controller.yaml

vim dashboard-service.yaml

At line 10, add: type: NodePort

kubectl create -f .

(3) View the assigned NodePort

kubectl get deployment kubernetes-dashboard -n kube-system

kubectl --namespace kube-system get pods -o wide

kubectl get services kubernetes-dashboard -n kube-system

(4) View the command-line flags supported by the dashboard

kubectl exec --namespace kube-system -it kubernetes-dashboard-65f7b4f486-ggv52 -- /dashboard --help

(5) Dashboard access URL

kubectl cluster-info

(6) Create a login token

kubectl create sa dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}')

DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')

echo ${DASHBOARD_LOGIN_TOKEN}

(7) Create a kubeconfig file that uses the token

source /opt/k8s/bin/environment.sh

# Set cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/cert/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=dashboard.kubeconfig

# Set client authentication parameters, using the token created above

kubectl config set-credentials dashboard_user \

--token=${DASHBOARD_LOGIN_TOKEN} \

--kubeconfig=dashboard.kubeconfig

# Set context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=dashboard_user \

--kubeconfig=dashboard.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=dashboard.kubeconfig

(8) Create a certificate (for importing into the browser)

Files needed: admin-key.pem, admin.pem and ca.pem

openssl pkcs12 -export -out admin.pfx -inkey admin-key.pem -in admin.pem -certfile ca.pem

The export password can be left empty.
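To check the export before importing it into a browser, the bundle can be read back with the same empty password. This sketch generates a throwaway self-signed key pair in a temporary directory as a stand-in for admin.pem, admin-key.pem and ca.pem, so it runs anywhere OpenSSL is installed; with the real files, only the last two commands are needed.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)

# Throwaway self-signed certificate standing in for admin.pem / admin-key.pem;
# it is copied to ca.pem only so that -certfile has something to include.
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=admin" \
  -keyout "$workdir/admin-key.pem" -out "$workdir/admin.pem" 2>/dev/null
cp "$workdir/admin.pem" "$workdir/ca.pem"

# Same export as in the guide, with the empty export password given explicitly.
openssl pkcs12 -export -out "$workdir/admin.pfx" \
  -inkey "$workdir/admin-key.pem" -in "$workdir/admin.pem" \
  -certfile "$workdir/ca.pem" -passout pass:

# Read the bundle back with the empty password and print the certificate subject.
openssl pkcs12 -in "$workdir/admin.pfx" -nokeys -passin pass: \
  | openssl x509 -noout -subject
```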

11. Deploy the heapster add-on

Heapster is a collector: it aggregates the cAdvisor data from every node and then exports it to a third-party backend (such as InfluxDB).

(1) Download and unpack

wget https://github.com/kubernetes/heapster/archive/v1.5.3.tar.gz -O heapster-1.5.3.tar.gz

tar -xzvf heapster-1.5.3.tar.gz

(2) Modify the configuration and create the resources

cd heapster-1.5.3/deploy/kube-config/influxdb

vim grafana.yaml

Line 16: image: wanghkkk/heapster-grafana-amd64-v4.4.3:v4.4.3 (can be replaced with k8sstart/heapster-grafana-amd64-v4.4.3)

Line 67: type: NodePort

vim heapster.yaml

Line 23: image: fishchen/heapster-amd64:v1.5.3

Line 27: - --source=kubernetes:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250

vim influxdb.yaml

Line 16: image: fishchen/heapster-influxdb-amd64:v1.3.3

kubectl create -f .

cd ../rbac/

vim heapster-rbac.yaml

Append the following lines (marked with >) to the end of the file; the --- separates the new object from the existing ClusterRoleBinding:

> ---
> kind: ClusterRoleBinding
> apiVersion: rbac.authorization.k8s.io/v1beta1
> metadata:
>   name: heapster-kubelet-api
> roleRef:
>   apiGroup: rbac.authorization.k8s.io
>   kind: ClusterRole
>   name: system:kubelet-api-admin
> subjects:
> - kind: ServiceAccount
>   name: heapster
>   namespace: kube-system

kubectl create -f heapster-rbac.yaml

(3) Check the result

kubectl get pods -n kube-system | grep -E 'heapster|monitoring'

(4) Access grafana

https://192.168.11.151:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy/dashboard/db/cluster?orgId=1