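Deploying a Kubernetes Cluster on Ubuntu, with Troubleshooting

Five parts in total:

- deploying the master node;

- deploying worker nodes and joining them to the master;

- deploying the management tool (dashboard);

- troubleshooting;

- common commands

Master node: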

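1 . Disable the firewall

This mainly prevents traffic between the master and the nodes, and between the master and clients, from being blocked.

# disable the firewall

sudo ufw disable

2 . Disable swap and reload the configuration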

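Kubernetes scheduling relies on computing machine capacity, so swap is disabled to reduce calculation error. In addition, kubelet reports an error at startup if swap is still enabled.

sudo swapoff -a

sudo sysctl -p

sudo sysctl --system

Then edit fstab so that swap is not re-enabled automatically after a reboot, which would prevent the machine from rejoining the cluster:

vim /etc/fstab

Comment out the line that mounts the swap partition, then save and exit.

Note: SELinux is disabled by default on Ubuntu, so there is no need to disable it separately.

swapoff -a on its own is only temporary and stops applying after a reboot, which is why the fstab change is needed.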
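The manual fstab edit can also be scripted. The following is a minimal sketch, not part of the original procedure: the function name disable_swap_in_fstab is hypothetical, and it assumes swap entries have "swap" as a whitespace-delimited field, as in a standard fstab. It keeps a .bak copy of the file it edits.

```shell
#!/bin/bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# The path is a parameter so the function can be tried on a copy first.
disable_swap_in_fstab() {
    local fstab="$1"
    # Skip lines that are already comments; prefix "#" to lines with a "swap" field.
    # sed keeps the original as "$fstab.bak".
    sed -i.bak -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}

# Usage (as root), after testing on a copy:
#   disable_swap_in_fstab /etc/fstab
```

Trying it on a copy such as /tmp/fstab.test first makes it easy to confirm that only the swap line changed.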

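3 . Set the hostname

The hostname is what the cluster displays, so it makes machines easy to tell apart.

sudo hostnamectl set-hostname <hostname>

4 . Install kubeadm, kubectl, and kubelet

apt-get update

# add the aliyun repository

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

apt-get update

Once the package index is refreshed, the packages can be installed directly. The default is the latest version; install a specific version instead if you need one (for example, if you only have the full image set for 1.15.0, install the matching 1.15.0 client).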

# default install latest version

apt-get install -y kubelet kubeadm kubectl

NOTICE:

# list available version

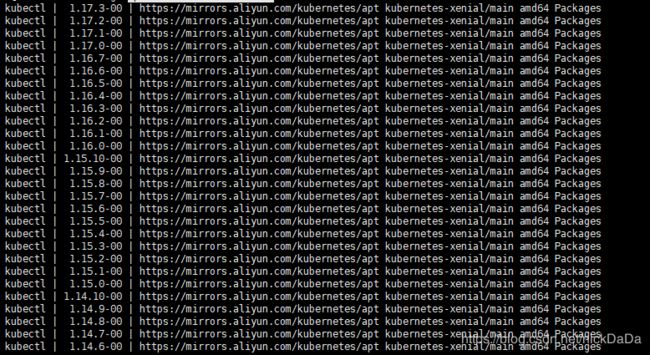

apt-cache madison kubectl

apt-cache madison kubeadm

apt-cache madison kubelet

# spec version to install

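apt-get install -y kubelet=<version> kubeadm=<version> kubectl=<version>

5 . Install docker

For installation steps, see: https://blog.csdn.net/nickDaDa/article/details/92816938

Strongly recommended: change docker's default storage location (see the link above for how) to avoid running out of space later. Kubernetes relies on docker to create containers, so a large number of pods can exhaust the disk and put the whole cluster at risk of going down. Changing docker's storage location after the problem has appeared loses the existing images and containers, and on the master node that may mean redeploying the cluster.

To let a non-root user run docker commands, follow the two steps below; skip them if you are already root.

sudo groupadd docker

sudo usermod -aG docker $USER

Register docker as a system service.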

# add docker into system service

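systemctl enable docker

6 . Pull the images

Save the following as download.sh, upload it to the master/node machine, and run sudo chmod ugo+x ./download.sh to make it executable.

Then run ./download.sh.

Note: the image versions must match the kubectl client. If unsure, run kubectl version and check the client section. The kubectl here is 1.15, so the 1.15 images are used.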

#!/bin/bash

images=(kube-proxy:v1.15.0 kube-scheduler:v1.15.0 kube-controller-manager:v1.15.0 kube-apiserver:v1.15.0 etcd:3.3.10 coredns:1.3.1 pause:3.1 )

for imageName in ${images[@]} ; do

docker pull registry.aliyuncs.com/google_containers/$imageName

docker tag registry.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.aliyuncs.com/google_containers/$imageName

done

If the download fails, the images (version 1.15.0) can be fetched from my network drive:

Link: https://pan.baidu.com/s/1yNVKz3G4kaJ6XzMydLQULg

Extraction code: nlym

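7 . Initialize the master node

Initialization uses the images downloaded in the previous step.

To have the apiserver listen on the default network interface:

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16

Or to specify the address the apiserver listens on (see troubleShooting 9):

kubeadm init --kubernetes-version=v1.15.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.1.1 --apiserver-bind-port=6443

After it returns successfully, run the following (the same commands are printed in the output). Skipping this step may cause certificate-trust errors when the flannel pod is created in the next step.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

At this point the node status is NotReady; describing the node shows that this is because the network configuration is not yet complete.

8 . After the master is initialized, create the network-layer pod

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the file cannot be downloaded, copy the manifest below and create it from that.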

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: amd64

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: arm64

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: arm

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: ppc64le

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: s390x

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

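name: kube-flannel-cfg

The file is long, but its structure is easy to see: role creation, role binding, a security policy, and DaemonSet sections. Only the DaemonSet sections need attention. They cover the amd64, arm64, arm, ppc64le, and s390x CPU architectures; if the architectures of the cluster machines are known, the DaemonSets for the others can be deleted.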
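To decide which DaemonSets are needed, it helps to know which architecture name each machine maps to. The sketch below is an illustration only: the function name k8s_arch is hypothetical, and the mapping from uname -m names to the suffixes used in the manifest is an assumption covering common Linux machine names.

```shell
#!/bin/bash
# Hypothetical helper: map a `uname -m` machine name to the architecture
# suffix used by the flannel DaemonSets (kube-flannel-ds-<arch>).
k8s_arch() {
    case "$1" in
        x86_64)        echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        armv6l|armv7l) echo arm ;;
        ppc64le)       echo ppc64le ;;
        s390x)         echo s390x ;;
        *)             echo "unsupported: $1" >&2; return 1 ;;
    esac
}

# Usage on a cluster machine:
#   k8s_arch "$(uname -m)"
```

On a running cluster, kubectl get nodes -L beta.kubernetes.io/arch should show the same label the DaemonSets select on.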

9 . Register kubelet on the master as a system service. The service only becomes Active after kubeadm init.

systemctl enable kubelet

Node:

1 . 关闭防火墙

// 关闭防火墙

sudo ufw disable2 . 关闭swap并重新加载配置

sudo swapoff -a

sudo sysctl -p

sudo sysctl --system3 . 设置机器名称,用于在集群中显示,以便分辨机器

sudo hostnamectl set-hostname 主机名4 . 安装kubeadm、kubectl、kubelet

apt-get update# add aliyun resource

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg| apt-key add -cat </etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

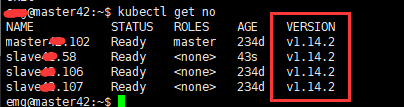

EOF apt-get update在安装kubelet、kubeadm、kubectl之前,最好先确认master上其它node的version,

安装对应版本的肯定不会错,如果使用默认命令安装,则会安装最新版本,如果不一致可能导致加入失败。

查询已有哪些版本可以安装:

apt-cache madison kubectl指定版本安装命令:

apt-get install kubelet=1.14.2-00

apt-get install kubeadm=1.14.2-00

apt-get install kubectl=1.14.2-00

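To install the latest version:

# default is latest version

apt-get install -y kubelet kubeadm kubectl

5 . Install docker

For installation steps, see: https://blog.csdn.net/nickDaDa/article/details/92816938

To let a non-root user run docker commands, run the following; skip this if you are already root.

sudo groupadd docker

sudo usermod -aG docker $USER

# add docker into system service

systemctl enable docker

6 . Pull the images

Save the following as download.sh, upload it to the master/node machine, and run sudo chmod ugo+x ./download.sh to make it executable.

Then run ./download.sh.

Note: the image versions must match the kubectl client. If unsure, run kubectl version and check the client section.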

#!/bin/bash

images=(kube-proxy:v1.15.0 kube-scheduler:v1.15.0 kube-controller-manager:v1.15.0 kube-apiserver:v1.15.0 etcd:3.3.10 coredns:1.3.1 pause:3.1 )

for imageName in ${images[@]} ; do

docker pull registry.aliyuncs.com/google_containers/$imageName

docker tag registry.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.aliyuncs.com/google_containers/$imageName

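done

7 . Join the node to the cluster

The exact command is printed when the master is created successfully.

kubeadm join 116.xxx.xx.xx:6443 --token wc52ln.zxwjd0qvb5nivh1y \
--discovery-token-ca-cert-hash sha256:c87435f9d3f44ffd1ff013a4c8bd865f146370f518d98095c7690a744bb62d30

If that output is no longer available, the token can be listed on the master:

kubeadm token list

If the token has expired (by default it expires after 24 hours), create a new one on the master:

kubeadm token create

and then run kubeadm token list again.

To get the hash, run on the master:

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

This returns the hash value; combine the two parts into the join command.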
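Putting the token and the hash together by hand is easy to get wrong, so here is a small sketch of the assembly step. The function name build_join_command and the sample values in the usage comment are hypothetical; the flags are the ones already used in the join command in this section.

```shell
#!/bin/bash
# Hypothetical helper: assemble a kubeadm join command from its three parts.
#   $1  apiserver endpoint as host:port
#   $2  bootstrap token (from `kubeadm token list` or `kubeadm token create`)
#   $3  CA certificate hash, with or without the "sha256:" prefix
build_join_command() {
    local endpoint="$1" token="$2" ca_hash="$3"
    ca_hash="${ca_hash#sha256:}"   # normalise: strip the prefix if it is present
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$ca_hash"
}

# Usage with placeholder values:
#   build_join_command 192.168.1.1:6443 abcdef.0123456789abcdef <hash from the openssl command>
```

Alternatively, kubeadm token create --print-join-command, run on the master, creates a new token and prints a complete join command, which avoids the manual assembly.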

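8 . Register kubelet as a system service

systemctl enable kubelet

Dashboard installation:

The dashboard is an application that runs on top of Kubernetes, so the cluster must be deployed before it can be installed.

1 . Dashboard manifest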

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

rules:

# Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.

- apiGroups: [""]

resources: ["secrets"]

verbs: ["create"]

# Allow Dashboard to create 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["create"]

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics from heapster.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:"]

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard-minimal

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

nodeSelector:

nodeType: master

containers:

- name: kubernetes-dashboard

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

env:

- name: ACCEPT_LANGUAGE

value: english

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --token-ttl=43200

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

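The --token-ttl=43200 argument sets the session timeout. ACCEPT_LANGUAGE sets the language; it can be changed to chinese or omitted, in which case the system language is followed. Because the image comes from the aliyun registry, pulling it should not be a problem (if it really cannot be pulled, pull another version manually and change the image name in the yaml). Deployment.spec.template.spec.nodeSelector in the manifest requires the machine to carry the label nodeType=master, because this deployment is meant to run on the master node. For pods to be scheduled onto the master, the master must first be made schedulable:

# label master

kubectl label no masterNode nodeType=master

# allow master schedule

kubectl taint node k8s-master node-role.kubernetes.io/master-

Optimization: in the manifest above the service type is NodePort, which exposes the service on the node at a randomly assigned port. As a result, the port changes if the service is deleted and recreated (NodePort assigns a random port at or above 30000, from the range 30000-32767 by default; specifying one manually adds unnecessary overhead for Kubernetes). The service section can therefore be changed to: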

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: LoadBalancer
  ports:
    - port: 443
      targetPort: 8443
  externalIPs:
    - <IP of an available machine in the cluster>
  selector:
    k8s-app: kubernetes-dashboard

Before using a machine's IP, check that port 443 is not already in use on it:

sudo netstat -anp | grep 443

The dashboard is then reachable at a fixed IP and port. If the system is stable, this step can be skipped.

2 . Create the role

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

3 . View the token held by the newly created user (admin-user)

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

4 . Access

Because the certificate is auto-generated, Firefox is recommended: enter https://ip:port to open the dashboard. With Chrome, add --ignore-certificate-errors to the properties of chrome.exe; otherwise the page cannot be used.

troubleShooting:

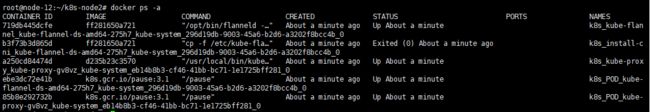

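1 . A node stays NotReady after joining

This usually happens because the pods that should start on the node cannot start. The approach: first check whether the docker containers on the node machine are healthy, then check the node status from the master, then describe the node's pods from the master.

docker ps -a

Run this on the node machine to check whether the docker containers started normally; the containers shown in the figure are the ones that normally start. If they look fine, go to the next step.

kubectl describe no <node name>

Run this to look for anything abnormal. If that does not resolve it, go to the next step.

kubectl get po --namespace kube-system -o wide | grep nodeName

kubectl describe po -n kube-system <problem pod found in the previous step>

Run these to check the status of the pods on the failing node. The usual cause is a failed image pull. If the image cannot be pulled, find a machine that already has it and run

docker save img -o ./xxx.tar

to pack the image into a tar file. The file name does not matter; the image name is restored when it is loaded back. Run

scp ./xxx.tar user@ip:/path

to copy the tar file to the failing node, and there run

docker load < ./xxx.tar

to import the image.

Alternatively, if the images are all fine, check whether the environment docker depends on is healthy. In one recent case the /run directory on the node was full, so no more containers could run; cleaning it up manually fixed that. Afterwards, run an image on the node machine as a test; if the container runs successfully, the fix is done.

Once the problem is solved, run on the node

kubeadm reset

or

kubeadm reset && systemctl stop kubelet && systemctl stop docker && rm -rf /var/lib/cni/ && rm -rf /var/lib/kubelet/* && rm -rf /etc/cni/ && ifconfig cni0 down && ifconfig flannel.1 down && ifconfig docker0 down && ip link delete cni0 && ip link delete flannel.1 && systemctl start docker

to restore the node; delete the configuration files if needed, then join again.

Note: kubeadm reset does not delete configuration files, folders, or images. Its own output says: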

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

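Please, check the contents of the $HOME/.kube/config file.

2 . The master node is NotReady

kubectl describe no masterName

kubectl get po -A -o wide | grep masterName

Check the status of the node and its pods. In most cases the flannel image is missing or a configuration file already exists; follow the messages to fix it.

3 . A node that was deleted from the master fails to rejoin with the error "cni0" already has an IP address different from , a network creation error.

The network configuration was not cleared when the node was last deleted, so the network cannot be created when the node joins again. Clear it and rejoin.

Tested and working: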

kubeadm reset

apt install ipvsadm

ipvsadm --clear

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

kubeadm join xxx.xxx.xx.xx:6443 --token tieqto.41ip1n2v018nku1n \
--discovery-token-ca-cert-hash sha256:912af6962629722e3e4cce88db150789d096747c99641b308c6f30759c7fa1ee

Other methods found online:

kubeadm reset

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

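systemctl start docker

4 . Error from server: error dialing backend: dial tcp 10.x.xx.xx:10250: i/o timeout

Running kubectl logs xxx or kubectl exec -it xxx on the master cannot reach the node and keeps timing out. Pinging the IP address in the error shows it is unreachable: the master defaults to the address of the node's eth0 interface, but that inet addr is not reachable, possibly because this private address was never added to the switch.

/var/lib/kubelet/config.yaml specifies the peer port 10250 and can be inspected.

Fix: on the node machine, use ifconfig to find an interface address that is reachable, for example 192.168.x.x, and run kubelet --address 192.168.x.x. Once that succeeds, things generally work. (Reference: https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet/)

Explanation: thinking in object-oriented terms, a command run on the master is actually carried out by the kubelet on the node. It binds to 0.0.0.0 by default, which ends up selecting the private address of eth0; when that address is unusable, the command cannot run. Switching to the private address of a usable interface, so that the two machines can reach each other, fixes it.

5 . The master and the nodes are on different network segments and cannot communicate.

By default flannel uses the machine's default network interface. If the default interfaces of the master and the nodes are on different segments, they cannot reach each other.

The interface has to be specified manually. The following uses amd64 as an example; other architectures work the same way (https://github.com/coreos/flannel/blob/master/Documentation/configuration.md):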

Option 1: specify the interface:

containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.11.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=eth2 # add here

Option 2: specify the network segment:

containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.11.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface-regex=192\.168\.1\.* # change here

If the same-named interface on the master and on every node is on the same segment and can be pinged, use option 1. If the reachable interfaces have different, irregular names, use option 2.
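It is worth checking what a pattern like the one in option 2 actually matches before deploying it. The sketch below uses grep -E as a stand-in for flannel's own matcher, which is an assumption; for simple patterns like these the two should agree. The function names and the stricter pattern are hypothetical illustrations, not part of the flannel documentation.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the address ($2) matches the extended regex ($1).
matches() {
    printf '%s\n' "$2" | grep -Eq -- "$1"
}

# Print "match" or "no match" for a pattern/address pair.
check() {
    if matches "$1" "$2"; then echo "match"; else echo "no match"; fi
}

# The pattern from option 2: "\.*" means "zero or more literal dots",
# so nothing forces the third octet to end after the 1.
loose='192\.168\.1\.*'
# A stricter alternative (assumption): anchored, with a full final octet.
strict='^192\.168\.1\.[0-9]+$'

# Usage:
#   check "$loose"  192.168.10.20
#   check "$strict" 192.168.10.20
```

With the loose pattern an address such as 192.168.10.20 also matches, so on machines with several 192.168.x.x interfaces the anchored form is the safer choice.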

6 . Change the node IP (https://networkinferno.net/trouble-with-the-kubernetes-node-ip)

sudo vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

========================

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/default/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --cloud-provider=vsphere --cloud-config=/etc/vsphereconf/vsphere.conf --node-ip <your IP address>

Adding the --node-ip option to ExecStart sets the node IP (how this machine is identified within the cluster).

After saving, reload the systemd configuration and restart the kubelet service.

sudo systemctl daemon-reload && sudo systemctl restart kubelet

Note: on a node that has not yet joined successfully, starting kubelet (systemctl start kubelet.service / systemctl restart kubelet.service) fails with a missing-configuration-file error:

failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file "/var/lib/kubelet/config.yaml", error: open /var/lib/kubelet/config.yaml: no such file or directory

These configuration files are generated automatically once the join completes.

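7 . A service such as kubelet or kubeadm fails to start or restart

# view kubelet logs

journalctl --system | grep kubelet

journalctl --system -f | grep kubelet

Read the logs to find the cause of the failure.

8 . When a node joins, kubelet keeps reporting errors such as an unhealthy service or a service that has not started

kubelet is started automatically during the join, and its configuration files are generated then as well. An error here usually means the required containers cannot be created because something is wrong with docker, for example a full disk. Only once a container can be created successfully on the node is the problem considered fixed.

9 . All nodes go down

The dashboard showed every node as down. The kubelet log on any node machine showed that the master's API could not be reached, and ping revealed a very high packet-loss rate. Use private network addresses for communication wherever possible.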

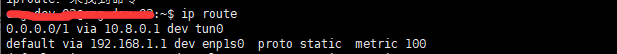

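kubeadm init uses the default network interface, which can be found by running ip route.

The interface on the line containing via is the default one.

If the default interface is a public one, kubeadm init --help shows the following parameters:

--apiserver-advertise-address string The IP address the API Server will advertise it's listening on. If not set the default network interface will be used.

--apiserver-bind-port int32 Port for the API Server to bind to. (default 6443)

Passing the private address to these sets the IP and port the apiserver listens on. Nodes then join using the private IP address, which avoids nodes having to join the cluster over the public address.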
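Reading the default interface out of the ip route output can be scripted. This is a minimal sketch with a hypothetical function name, default_iface; it assumes the usual "default via <gateway> dev <interface> ..." line format and reads the routing table from standard input so it can be tried on saved output.

```shell
#!/bin/bash
# Hypothetical helper: print the interface named on the first "default" route
# found in `ip route` output supplied on stdin.
default_iface() {
    awk '$1 == "default" {
        # The interface name is the field that follows "dev".
        for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }
    }'
}

# Usage on a real machine:
#   ip route | default_iface
```

If the interface it reports carries a public address, that is the case where --apiserver-advertise-address should be given the private address instead.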

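10 . A node does not rejoin the cluster automatically after a reboot

A machine had to be rebooted to attach a disk or add memory, and afterwards the node stayed NotReady. Describing the node from the master showed that no heartbeats were being sent, and docker ps -a on the node showed that the required containers had not started. Normally, once kubelet starts after a reboot it brings the related containers up by itself, so the inference was that kubelet had failed to start. The kubelet log pinned it down: swap was on. It had only been disabled temporarily when the cluster was deployed, and the reboot turned it back on. After disabling it manually, the node rejoined the cluster automatically.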
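A quick check for this condition after a reboot is to look at /proc/swaps. The sketch below is an illustration with hypothetical function names; it assumes the Linux /proc/swaps layout, where the first line is a header and every further line is an active swap device. The file path is a parameter so the logic can be tried on a sample file.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the /proc/swaps-style file lists an active swap device.
swap_is_active() {
    local swaps_file="${1:-/proc/swaps}"
    # Line 1 is the column header; any non-empty line after it is a device.
    awk 'NR > 1 && NF > 0 { n++ } END { exit (n > 0 ? 0 : 1) }' "$swaps_file"
}

# Print a one-line verdict for the given file (defaults to /proc/swaps).
report_swap() {
    if swap_is_active "$1"; then echo "swap is on"; else echo "swap is off"; fi
}

# Usage on a node:
#   report_swap
```

If it reports that swap is on, sudo swapoff -a fixes the running system and the fstab entry still needs to be commented out.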

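11 . The master node reports DiskPressure

Because dockerd's data-root was not changed, all images, containers, mounts, and logs are written under /var/lib/docker/. kubelet talks to docker, and its own default path is /var/lib/kubelet. But "/" is often not on the largest disk. The approach: first look for large files under the root directory "/", then work out which process produces them and why it writes so much. Usually the container's output directory was not mounted under /data. If large amounts of data really must be generated, change docker's data-root (see https://blog.csdn.net/nickDaDa/article/details/92816938 for how) or change kubelet's working directory (start it with --root-dir string Directory path for managing kubelet files (volume mounts,etc). (default "/var/lib/kubelet")).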
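The "look for large files under /" step can be sketched as follows. The function name largest_dirs is hypothetical, and the sketch assumes a du that supports -x, -k, and -d (GNU coreutils and BSD du both do). It lists the biggest immediate subdirectories of a directory, staying on one filesystem.

```shell
#!/bin/bash
# Hypothetical helper: list a directory and its largest immediate subdirectories
# by disk usage in KiB, largest first.
#   $1  directory to inspect
#   $2  number of subdirectories to show (default 10)
largest_dirs() {
    local dir="$1" count="${2:-10}"
    # -x: stay on this filesystem, -k: report KiB, -d 1: one level deep.
    # The first output line is the total for the directory itself.
    du -x -k -d 1 "$dir" 2>/dev/null | sort -rn | head -n "$((count + 1))"
}

# Usage (as root, to avoid permission gaps):
#   largest_dirs / 10
#   largest_dirs /var/lib/docker 5
```

Repeating the call on the largest entry narrows things down level by level until the directory that is actually growing shows up.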

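12 . Creating a pod keeps failing with Failed create pod sandbox: rpc error: code = Unknown desc

This has come up a few times. It is usually a problem with the docker service that prevents the node from creating pods. Check whether /lib/systemd/system/docker.service looks right, for example whether someone has changed the startup parameters.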

Common commands:

# show which node each pod is running on

kubectl get po -o wide

# list the nodes in the cluster

kubectl get nodes

# create a token (expires after 24 hours)

kubeadm token create

# create a token that never expires

kubeadm token create --ttl 0

# list tokens

kubeadm token list

# get the sha256 hash of the CA certificate

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

# run on the node: join the cluster

kubeadm join 192.168.x.xxx:6443 --token q6y34p.5xg6gaxwucl22ff5 --discovery-token-ca-cert-hash sha256:22367e3046478dd8ba0df256ac2af83e156b45731f2f769a6aea4671bad1d5cc

# remove a node

kubectl drain ubuntu-pub02 --delete-local-data --force --ignore-daemonsets

kubectl delete node ubuntu-pub02