Scraping Proxy IP Addresses with Python

As we all know, a website can easily ban you while you are scraping it, and that is when we need proxy IPs.

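The code below mimics a browser visiting the page by sending browser-style request headers.

The custom function getHtml() downloads one page of the proxy list and returns it parsed into an lxml element tree.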

```python
import requests
from lxml import etree

_headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Encoding': 'gzip, deflate, sdch',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Cache-Control': 'max-age=0',
    'Connection': 'keep-alive',
    'Host': 'www.xicidaili.com',
    'If-None-Match': 'W/"b077743016dc54409ebe6b86ba7a869b"',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.75 Safari/537.36',
}

_cookies = None


def getHtml(page):
    url = "https://www.xicidaili.com/nn/" + str(page)  # page is the page number
    result = requests.get(url, headers=_headers).text
    html = etree.HTML(result)  # parse the HTML text into an lxml element tree
    return html
```
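`getHtml()` as written sets no timeout and never checks the HTTP status, so a stalled connection can block forever (requests does not time out unless a timeout is given) and an error response, for example a 403 or 503 from a ban, gets parsed as if it were data. The sketch below is one hedged alternative, not the author's code: the name `get_html`, the `session` parameter (any object with a requests-style `get()`, in practice a `requests.Session()`, which also reuses connections across pages) and the `timeout=10` default are illustrative assumptions.

```python
from lxml import etree

BASE_URL = "https://www.xicidaili.com/nn/"  # listing URL used in the article


def get_html(session, page, headers=None, timeout=10):
    """Fetch one listing page and return the parsed lxml root element.

    Hypothetical helper: `session` is assumed to behave like requests.Session,
    i.e. session.get(url, headers=..., timeout=...) returns a response object
    with .text and .raise_for_status().
    """
    url = BASE_URL + str(page)
    resp = session.get(url, headers=headers, timeout=timeout)
    resp.raise_for_status()  # turn 4xx/5xx responses into exceptions
    return etree.HTML(resp.text)
```

In the real script you would create `session = requests.Session()` once and call `get_html(session, j, headers=_headers)` for each page.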

Next, scrape the data.

Use XPath to extract the fields we want:

```python
ips = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[2]/text()')    # IP address
duans = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[3]/text()')  # port
types = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[6]/text()')   # type
```
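These three XPath calls return three independent lists that are later matched up by index. If any cell is empty, `text()` yields nothing for it, so that list comes out shorter and every later row is paired with the wrong port or type. A hedged alternative is to select each row first and read its cells relative to that row. The sample table below is hypothetical markup that only mimics the layout the XPath assumes (a header row without a `class` attribute, data rows with `class="odd"` or `class=""`, IP in column 2, port in column 3, type in column 6); `parse_rows` and `first_text` are illustrative names.

```python
from lxml import etree

# Hypothetical markup mimicking the assumed table layout; the second data row has an empty port cell.
SAMPLE_HTML = """
<table id="ip_list">
  <tr><th></th><th>IP</th><th>Port</th><th>Location</th><th>Anonymity</th><th>Type</th></tr>
  <tr class="odd"><td></td><td>192.0.2.1</td><td>8080</td><td>A</td><td>high</td><td>HTTP</td></tr>
  <tr class=""><td></td><td>198.51.100.7</td><td></td><td>B</td><td>high</td><td>HTTPS</td></tr>
  <tr class="odd"><td></td><td>203.0.113.5</td><td>3128</td><td>C</td><td>high</td><td>HTTPS</td></tr>
</table>
"""

# Same row filter as the article: the header row has no class attribute, so it is not matched.
ROWS = '//tr[@class="odd"]|//tr[@class=""]'


def first_text(row, col):
    """Return the stripped text of the col-th <td> in this row, or None if the cell is empty."""
    texts = row.xpath(f'./td[{col}]/text()')
    return texts[0].strip() if texts else None


def parse_rows(html):
    """Extract (type, port, ip) per row so the three fields can never drift apart."""
    result = []
    for row in html.xpath(ROWS):  # lxml returns union results in document order
        ip, port, ptype = first_text(row, 2), first_text(row, 3), first_text(row, 6)
        if ip and port and ptype:  # skip rows with a missing field
            result.append((ptype, port, ip))
    return result
```

On the sample, the column-wise XPath returns three IPs but only two ports, while `parse_rows` returns two complete, correctly paired tuples and drops the incomplete row.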

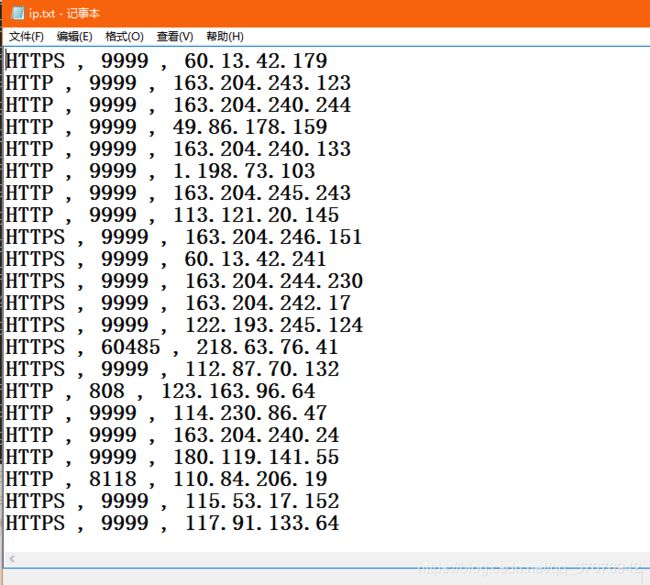

I save the results to a txt file.

Here is the complete code:

#coding:utf-8

import requests

from lxml import etree

from urllib import request

_headers = {

'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding':'gzip, deflate, sdch',

'Accept-Language':'zh-CN,zh;q=0.8',

'Cache-Control':'max-age=0',

'Connection':'keep-alive',

'Host':'www.xicidaili.com',

'If-None-Match':'W/"b077743016dc54409ebe6b86ba7a869b"',

'Upgrade-Insecure-Requests':'1',

'User-Agent':'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.75 Safari/537.36',

}

_cookies = None

def getHtml(page):

url = "https://www.xicidaili.com/nn/"+str(page)

result = requests.get(url,headers=_headers).text

html = etree.HTML(result)

return html

file = open('ip.txt', 'w', encoding='utf-8')

for j in range(1,10):

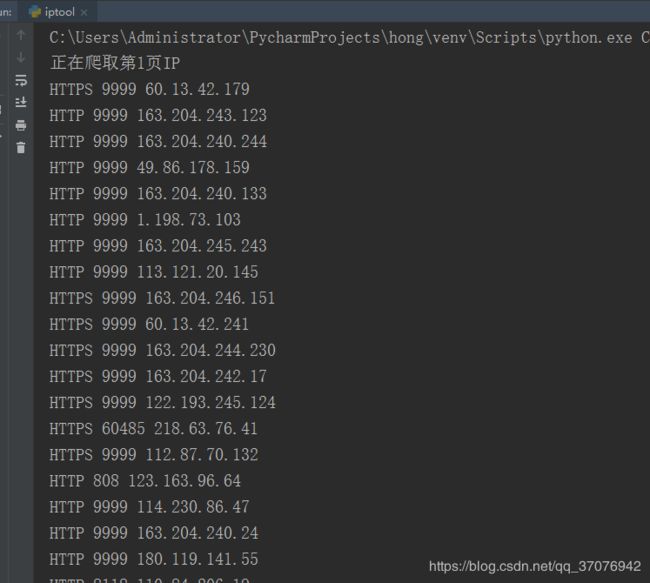

print("正在爬取第"+str(j)+"页IP")

try:

for html in getHtml(j):

ips = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[2]/text()')

duans = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[3]/text()')

types = html.xpath('(//tr[@class="odd"]|//tr[@class=""])/td[6]/text()')

for i in range(0,len(ips)):

file.write(types[i])

file.write(' , ')

file.write(duans[i])

file.write(' , ')

file.write(ips[i])

file.write('\n')

print(types[i],duans[i],ips[i])

except:

pass

file.close()
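The script writes one `type , port , ip` line per proxy. To actually send requests through these proxies, each line can be turned into the `proxies` dict that `requests` accepts. This is a minimal sketch under two stated assumptions: the type column (HTTP or HTTPS) names the URL scheme the proxy should be used for, and the proxy itself is reached over plain `http://`. `load_proxies` is a hypothetical helper name.

```python
def load_proxies(lines):
    """Turn "TYPE , PORT , IP" lines into requests-style proxy dicts.

    Assumption: TYPE ("HTTP"/"HTTPS") selects the URL scheme the proxy is used
    for, and every proxy is contacted over plain http://.
    """
    proxies = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        parts = [p.strip() for p in line.split(',')]
        if len(parts) != 3:
            continue  # skip malformed lines
        ptype, port, ip = parts
        proxies.append({ptype.lower(): f"http://{ip}:{port}"})
    return proxies
```

To use it, open `ip.txt` with `encoding='utf-8'`, pass the file object to `load_proxies()`, and hand one entry to `requests.get(url, proxies=entry, timeout=5)`. Since requests picks a proxy by the target URL's scheme, an entry with only an `"https"` key applies to `https://` URLs only.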