- Densenet模型花卉图像分类

深度学习乐园

分类数据挖掘人工智能

项目源码获取方式见文章末尾!600多个深度学习项目资料,快来加入社群一起学习吧。《------往期经典推荐------》项目名称1.【基于CNN-RNN的影像报告生成】2.【卫星图像道路检测DeepLabV3Plus模型】3.【GAN模型实现二次元头像生成】4.【CNN模型实现mnist手写数字识别】5.【fasterRCNN模型实现飞机类目标检测】6.【CNN-LSTM住宅用电量预测】7.【VG

- 基于AFM注意因子分解机的推荐算法

深度学习乐园

深度学习实战项目深度学习科研项目推荐算法算法机器学习

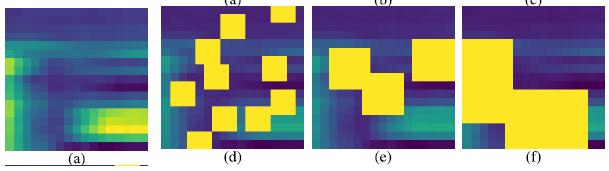

关于深度实战社区我们是一个深度学习领域的独立工作室。团队成员有:中科大硕士、纽约大学硕士、浙江大学硕士、华东理工博士等,曾在腾讯、百度、德勤等担任算法工程师/产品经理。全网20多万+粉丝,拥有2篇国家级人工智能发明专利。社区特色:深度实战算法创新获取全部完整项目数据集、代码、视频教程,请进入官网:zzgcz.com。竞赛/论文/毕设项目辅导答疑,v:zzgcz_com1.项目简介项目A033基于A

- 【Python】Pygame从零开始学习

宅男很神经

python开发语言

模块一:Pygame入门与核心基础本模块将引导您完成Pygame的安装,并深入理解Pygame应用程序的基石——游戏循环、事件处理、Surface与Rect对象、显示控制以及颜色管理。第一章:Pygame概览与环境搭建1.1什么是Pygame?Pygame是一组专为编写视频游戏而设计的Python模块。它构建在优秀的SDL(SimpleDirectMediaLayer)库之上,允许您使用Pytho

- 【教程】使用Visual Studio debug exe和dll

yunquantong

visualstudioide

如何Debugexe和dll在实际项目中,我们经常需要对可执行文件(exe)和动态链接库(dll)进行调试。本文详细总结如何通过远程和本地调试exe,以及如何调试dll,包括常规与资源路径调试。一、Debugexe1.远程调试exe(使用VSRemoteTools)适用场景:程序必须在服务器上运行。步骤:在目标服务器上部署对应版本的VisualStudioRemoteDebugger(如msvsm

- 深度学习实战:基于嵌入模型的AI应用开发

AIGC应用创新大全

AI人工智能与大数据应用开发MCP&Agent云算力网络人工智能深度学习ai

深度学习实战:基于嵌入模型的AI应用开发关键词:嵌入模型(EmbeddingModel)、深度学习、向量空间、语义表示、AI应用开发、相似性搜索、迁移学习摘要:本文将带你从0到1掌握基于嵌入模型的AI应用开发全流程。我们会用“翻译机”“数字身份证”等生活比喻拆解嵌入模型的核心原理,结合Python代码实战(BERT/CLIP模型)演示如何将文本、图像转化为可计算的语义向量,并通过“智能客服问答”“

- Fiddler中文版抓包工具在跨域与OAuth调试中的深度应用

2501_91600747

httpudphttpswebsocket网络安全网络协议tcp/ip

跨域和OAuth授权流程一直是Web和移动开发中最容易踩坑的领域。复杂的CORS配置、重定向中的Token传递、授权码流程的跳转,以及多域名环境下的Cookie共享,常常让开发者陷入调试困境。此时,一款能够精准捕获、修改、重放请求的抓包工具显得至关重要,而Fiddler抓包工具正是解决此类难题的核心武器。Fiddler中文网(https://telerik.com.cn/)为跨域和OAuth接入场

- Fiddler抓包工具在多端调试中的实战应用:结合Postman与Charles构建调试工作流

2501_91600747

httpudphttpswebsocket网络安全网络协议tcp/ip

在如今前后端分离、接口驱动开发逐渐成为主流的背景下,开发者越来越依赖于各类调试工具,以应对复杂的网络请求管理、多设备调试和跨团队协作等问题。而在诸多网络分析工具中,Fiddler抓包工具以其功能全面、扩展灵活、支持HTTPS抓包和断点调试等特性,在开发者圈中拥有稳定的口碑。本文将从一个更贴近日常开发流程的角度,探讨如何在多端调试、接口测试、数据模拟等环节中,灵活运用Fiddler,并与Postma

- 基于OpenCv的图片倾斜校正系统详细设计与具体代码实现

AI大模型应用之禅

人工智能数学基础计算科学神经计算深度学习神经网络大数据人工智能大型语言模型AIAGILLMJavaPython架构设计AgentRPA

基于OpenCv的图片倾斜校正系统详细设计与具体代码实现1.背景介绍1.1图像处理的重要性在当今数字时代,图像处理技术在各个领域都扮演着重要角色。无论是在计算机视觉、模式识别、医学影像、遥感探测还是多媒体处理等领域,图像处理都是不可或缺的核心技术。通过对图像进行预处理、增强、分割、特征提取等操作,可以从图像中获取有价值的信息,为后续的分析和决策提供支持。1.2图像倾斜问题及其影响在实际应用中,由于

- 高通手机跑AI系列之——姿态识别

伊利丹~怒风

Qualcomm智能手机人工智能AI编程pythonarm

环境准备手机测试手机型号:RedmiK60Pro处理器:第二代骁龙8移动--8gen2运行内存:8.0GB,LPDDR5X-8400,67.0GB/s摄像头:前置16MP+后置50MP+8MP+2MPAI算力:NPU48TopsINT8&&GPU1536ALUx2x680MHz=2.089TFLOPS提示:任意手机均可以,性能越好的手机速度越快软件APP:AidLux2.0系统环境:Ubuntu2

- 高通手机跑AI系列之——实时头发识别

伊利丹~怒风

Qualcomm智能手机AI编程pythonarm人工智能

环境准备手机测试手机型号:RedmiK60Pro处理器:第二代骁龙8移动--8gen2运行内存:8.0GB,LPDDR5X-8400,67.0GB/s摄像头:前置16MP+后置50MP+8MP+2MPAI算力:NPU48TopsINT8&&GPU1536ALUx2x680MHz=2.089TFLOPS提示:任意手机均可以,性能越好的手机速度越快软件APP:AidLux2.0系统环境:Ubuntu2

- 高通手机跑AI系列之——手部姿势跟踪

伊利丹~怒风

Qualcomm智能手机AI编程pythonarm人工智能

环境准备手机测试手机型号:RedmiK60Pro处理器:第二代骁龙8移动--8gen2运行内存:8.0GB,LPDDR5X-8400,67.0GB/s摄像头:前置16MP+后置50MP+8MP+2MPAI算力:NPU48TopsINT8&&GPU1536ALUx2x680MHz=2.089TFLOPS提示:任意手机均可以,性能越好的手机运行速度越快软件APP:AidLux2.0系统环境:Ubunt

- 《AI颠覆编码:GPT-4在编译器层面的奇幻漂流》的深度技术解析

踢足球的,程序猿

人工智能pythonc语言

一、传统编译器的黄昏:LLVM面临的AI降维打击1.1经典优化器的性能天花板//LLVM循环优化Pass传统实现(LoopUnroll.cpp)voidLoopUnrollPass::runOnLoop(Loop*L){unsignedTripCount=SE->getSmallConstantTripCount(L);if(!TripCount||TripCount>UnrollThreshol

- 卷积神经网络(Convolutional Neural Network, CNN)

不想秃头的程序

神经网络语音识别人工智能深度学习网络卷积神经网络

卷积神经网络(ConvolutionalNeuralNetwork,CNN)是一种专门用于处理图像、视频等网格数据的深度学习模型。它通过卷积层自动提取数据的特征,并利用空间共享权重和池化层减少参数量和计算复杂度,成为计算机视觉领域的核心技术。以下是CNN的详细介绍:一、核心思想CNN的核心目标是从图像中自动学习层次化特征,并通过空间共享权重和平移不变性减少参数量和计算成本。其关键组件包括:卷积层(

- ResNet(Residual Network)

不想秃头的程序

神经网络语音识别人工智能深度学习网络残差网络神经网络

ResNet(ResidualNetwork)是深度学习中一种经典的卷积神经网络(CNN)架构,由微软研究院的KaimingHe等人在2015年提出。它通过引入残差连接(SkipConnection)解决了深度神经网络中的梯度消失问题,使得网络可以训练极深的模型(如上百层),并在图像分类、目标检测、语义分割等任务中取得了突破性成果。以下是ResNet的详细介绍:一、核心思想ResNet的核心创新是

- P25:LSTM实现糖尿病探索与预测

?Agony

lstm人工智能rnn

本文为365天深度学习训练营中的学习记录博客原作者:K同学啊一、相关技术1.LSTM基本概念LSTM(长短期记忆网络)是RNN(循环神经网络)的一种变体,它通过引入特殊的结构来解决传统RNN中的梯度消失和梯度爆炸问题,特别适合处理序列数据。结构组成:遗忘门:决定丢弃哪些信息,通过sigmoid函数输出0-1之间的值,表示保留或遗忘的程度。输入门:决定更新哪些信息,同样通过sigmoid函数控制更新

- Python训练营打卡——DAY16(2025.5.5)

cosine2025

Python训练营打卡python开发语言机器学习

目录一、NumPy数组基础笔记1.理解数组的维度(Dimensions)2.NumPy数组与深度学习Tensor的关系3.一维数组(1DArray)4.二维数组(2DArray)5.数组的创建5.1数组的简单创建5.2数组的随机化创建5.3数组的遍历5.4数组的运算6.数组的索引6.1一维数组索引6.2二维数组索引6.3三维数组索引二、SHAP值的深入理解三、总结1.NumPy数组基础总结2.SH

- 代码随想录算法训练营第52天 | 101.孤岛的总面积 、102.沉没孤岛、103.水流问题、104.建造最大岛屿

Amor_Fati_Yu

算法java数据结构

101.孤岛的总面积importjava.util.*;publicclassMain{privatestaticintcount=0;privatestaticfinalint[][]dir={{0,1},{1,0},{-1,0},{0,-1}};//四个方向privatestaticvoidbfs(int[][]grid,intx,inty){Queueque=newLinkedList=gr

- 【问题解决】pnpm : 无法将“pnpm”项识别为 cmdlet、函数、脚本文件或可运行程序的名称。

aPurpleBerry

问题解决前端

今天配置完poetry环境变量之后pnpm不能用了具体报错pnpm:无法将“pnpm”项识别为cmdlet、函数、脚本文件或可运行程序的名称。请检查名称的拼写,如果包括路径,请确保路径正确,然后再试一次。所在位置行:1字符:1+pnpmrundev+~~~~+CategoryInfo:ObjectNotFound:(pnpm:String)[],CommandNotFoundException+F

- 【机器学习&深度学习】反向传播机制

目录一、一句话定义二、类比理解三、为什重要?四、用生活例子解释:神经网络=烹饪机器人4.1第一步:尝一口(前向传播)4.2第二步:倒着推原因(反向传播)五、换成人工智能流程说一遍六、图示类比:找山顶(最优参数)七、总结一句人话八、PyTorch代码示例:亲眼看到每一层的梯度九、梯度=损失函数对参数的偏导数十、类比总结反向传播(Backpropagation)是神经网络中训练过程的核心机制,它就像“

- 人脸识别算法赋能园区无人超市安防升级

智驱力人工智能

算法人工智能边缘计算人脸识别智慧园区智慧工地智慧煤矿

人脸识别算法赋能园区无人超市安防升级正文在园区无人超市的运营管理中,传统安防手段依赖人工巡检或基础监控设备,存在响应滞后、误报率高、环境适应性差等问题。本文从技术背景、实现路径、功能优势及应用场景四个维度,阐述如何通过人脸识别检测、人员入侵算法及疲劳检测算法的协同应用,构建高效、精准的智能安防体系。一、技术背景:视觉分析算法的核心支撑人脸识别算法基于深度学习的卷积神经网络(CNN)模型,通过提取面

- 安卓开发 手动构建 .so

XCZHONGS

android

手动构建.so(兼容废弃ABI)下载旧版NDK(推荐r16b)地址:https://developer.android.com/ndk/downloads/older_releases下载NDKr16b(最后支持armeabi、mips、mips64的版本)使用ndk-build手动构建(不使用Gradle)在源文件目录下执行D:\ideal\androidstudio\sdk\ndk\16.1.

- Python编程:使用Opencv进行图像处理

【参考】https://github.com/opencv/opencv/tree/4.x/samples/pythonPython使用OpenCV进行图像处理OpenCV(OpenSourceComputerVisionLibrary)是一个开源的计算机视觉和机器学习软件库。下面将从基础到高阶介绍如何使用Python中的OpenCV进行图像处理。一、安装首先需要安装OpenCV库:pipinst

- Linux命令行操作基础

EnigmaCoder

Linuxlinux运维服务器

目录前言目录结构✍️语法格式操作技巧Tab补全光标操作基础命令登录和电源管理命令⚙️login⚙️last⚙️exit⚙️shutdown⚙️halt⚙️reboot文件命令⚙️浏览目录类命令pwdcdls⚙️浏览文件类命令catmorelessheadtail⚙️目录操作类命令mkdirrmdir⚙️文件操作类命令mvrmtouchfindgziptar⚙️cp前言大家好!我是EnigmaCod

- webpack和vite区别

PromptOnce

webpack前端node.js

一、Webpack1.概述Webpack是一个模块打包工具,它会递归地构建依赖关系图,并将所有模块打包成一个或多个bundle(包)。2.特点配置灵活:Webpack提供了高度可定制的配置文件,可以根据项目需求进行各种优化。生态系统丰富:Webpack拥有庞大的插件和加载器生态系统,可以处理各种资源类型(JavaScript、CSS、图片等)。支持代码拆分:通过代码拆分和懒加载,Webpack可以

- webpack和vite对比解析(AI)

秉承初心

AI创造webpack前端node.js

以下是Webpack和Vite的对比解析,从核心机制、性能、配置扩展性、适用场景等维度进行详细说明:⚙️一、核心机制差异构建模式Webpack:采用打包器模式,启动时需遍历整个模块依赖图,将所有资源打包成Bundle,再启动开发服务器。Vite:基于ESModules原生支持,开发环境跳过打包,按需编译(浏览器请求时实时编译)。生产环境才用Rollup打包。依赖处理Webpack:冷启动时需全量打

- 【Python深度学习】零基础掌握Pytorch Pooling layers nn.MaxPool方法

Mr数据杨

Python深度学习python深度学习pytorch

在深度学习的世界中,MaxPooling是一种关键的操作,用于降低数据的维度并保留重要特征。这就像是从一堆照片中挑选出最能代表某个场景的那张。PyTorch提供了多种MaxPooling层,包括nn.MaxPool1d、nn.MaxPool2d和nn.MaxPool3d,它们分别适用于不同维度的数据处理。如果处理的是声音信号(一维数据),就会用到nn.MaxPool1d。而处理图像(二维数据)时,

- C# 中 EventWaitHandle 实现多进程状态同步的深度解析

Leon@Lee

c#开发语言

在现代软件开发中,多进程应用场景日益普遍。无论是分布式系统、微服务架构,还是传统的客户端-服务器模型,进程间的状态同步都是一个关键挑战。C#提供了多种同步原语,其中EventWaitHandle是一个强大的工具,特别适合处理跨进程的同步需求。本文将深入探讨EventWaitHandle的工作原理、使用场景及最佳实践。一、EventWaitHandle基础原理EventWaitHandle是.NET

- 【lua】Linux上安装lua和luarocks包管理工具

果壳~

lualinux开发语言

目录安装lua安装luarocksluarocks其他命令安装lua首先打开lua官网https://lua.org点击download就可以看到安装脚本新建一个目录将压缩包下载到这个目录里curl-L-R-Ohttps://www.lua.org/ftp/lua-5.4.8.tar.gztarzxflua-5.4.8.tar.gzcdlua-5.4.8makealltest#最后还得加上make

- HDMIheb.dll hpgtg311.dll HPCommon.dll HQTTS.0409.409.dll HpuFunction.dll hpzpe4v3.DLL Hardware

a***0738

microsoftvisualstudiowindows

在使用电脑系统时经常会出现丢失找不到某些文件的情况,由于很多常用软件都是采用MicrosoftVisualStudio编写的,所以这类软件的运行需要依赖微软VisualC++运行库,比如像QQ、迅雷、Adobe软件等等,如果没有安装VC++运行库或者安装的版本不完整,就可能会导致这些软件启动时报错,提示缺少库文件。如果我们遇到关于文件在系统使用过程中提示缺少找不到的情况,如果文件是属于运行库文件的

- CSS实标题现同心圆的缩放

做一个暴躁的开发

css3html

CSS实标题现同心圆的缩放最近学习了css动画效果,记录一下同心圆的缩放问题问题描述我先设置了两个div,外圈是class=“one”,内圈是class=“two”,代码如下:分别设置他们的div,给他们边框,并且设置成圆形.one{width:500px;height:500px;border:20pxsolidlightcoral;border-radius:50%;overflow:hidd

- PHP,安卓,UI,java,linux视频教程合集

cocos2d-x小菜

javaUIPHPandroidlinux

╔-----------------------------------╗┆

- 各表中的列名必须唯一。在表 'dbo.XXX' 中多次指定了列名 'XXX'。

bozch

.net.net mvc

在.net mvc5中,在执行某一操作的时候,出现了如下错误:

各表中的列名必须唯一。在表 'dbo.XXX' 中多次指定了列名 'XXX'。

经查询当前的操作与错误内容无关,经过对错误信息的排查发现,事故出现在数据库迁移上。

回想过去: 在迁移之前已经对数据库进行了添加字段操作,再次进行迁移插入XXX字段的时候,就会提示如上错误。

&

- Java 对象大小的计算

e200702084

java

Java对象的大小

如何计算一个对象的大小呢?

- Mybatis Spring

171815164

mybatis

ApplicationContext ac = new ClassPathXmlApplicationContext("applicationContext.xml");

CustomerService userService = (CustomerService) ac.getBean("customerService");

Customer cust

- JVM 不稳定参数

g21121

jvm

-XX 参数被称为不稳定参数,之所以这么叫是因为此类参数的设置很容易引起JVM 性能上的差异,使JVM 存在极大的不稳定性。当然这是在非合理设置的前提下,如果此类参数设置合理讲大大提高JVM 的性能及稳定性。 可以说“不稳定参数”

- 用户自动登录网站

永夜-极光

用户

1.目标:实现用户登录后,再次登录就自动登录,无需用户名和密码

2.思路:将用户的信息保存为cookie

每次用户访问网站,通过filter拦截所有请求,在filter中读取所有的cookie,如果找到了保存登录信息的cookie,那么在cookie中读取登录信息,然后直接

- centos7 安装后失去win7的引导记录

程序员是怎么炼成的

操作系统

1.使用root身份(必须)打开 /boot/grub2/grub.cfg 2.找到 ### BEGIN /etc/grub.d/30_os-prober ### 在后面添加 menuentry "Windows 7 (loader) (on /dev/sda1)" {

- Oracle 10g 官方中文安装帮助文档以及Oracle官方中文教程文档下载

aijuans

oracle

Oracle 10g 官方中文安装帮助文档下载:http://download.csdn.net/tag/Oracle%E4%B8%AD%E6%96%87API%EF%BC%8COracle%E4%B8%AD%E6%96%87%E6%96%87%E6%A1%A3%EF%BC%8Coracle%E5%AD%A6%E4%B9%A0%E6%96%87%E6%A1%A3 Oracle 10g 官方中文教程

- JavaEE开源快速开发平台G4Studio_V3.2发布了

無為子

AOPoraclemysqljavaeeG4Studio

我非常高兴地宣布,今天我们最新的JavaEE开源快速开发平台G4Studio_V3.2版本已经正式发布。大家可以通过如下地址下载。

访问G4Studio网站

http://www.g4it.org

G4Studio_V3.2版本变更日志

功能新增

(1).新增了系统右下角滑出提示窗口功能。

(2).新增了文件资源的Zip压缩和解压缩

- Oracle常用的单行函数应用技巧总结

百合不是茶

日期函数转换函数(核心)数字函数通用函数(核心)字符函数

单行函数; 字符函数,数字函数,日期函数,转换函数(核心),通用函数(核心)

一:字符函数:

.UPPER(字符串) 将字符串转为大写

.LOWER (字符串) 将字符串转为小写

.INITCAP(字符串) 将首字母大写

.LENGTH (字符串) 字符串的长度

.REPLACE(字符串,'A','_') 将字符串字符A转换成_

- Mockito异常测试实例

bijian1013

java单元测试mockito

Mockito异常测试实例:

package com.bijian.study;

import static org.mockito.Mockito.mock;

import static org.mockito.Mockito.when;

import org.junit.Assert;

import org.junit.Test;

import org.mockito.

- GA与量子恒道统计

Bill_chen

JavaScript浏览器百度Google防火墙

前一阵子,统计**网址时,Google Analytics(GA) 和量子恒道统计(也称量子统计),数据有较大的偏差,仔细找相关资料研究了下,总结如下:

为何GA和量子网站统计(量子统计前身为雅虎统计)结果不同?

首先:没有一种网站统计工具能保证百分之百的准确出现该问题可能有以下几个原因:(1)不同的统计分析系统的算法机制不同;(2)统计代码放置的位置和前后

- 【Linux命令三】Top命令

bit1129

linux命令

Linux的Top命令类似于Windows的任务管理器,可以查看当前系统的运行情况,包括CPU、内存的使用情况等。如下是一个Top命令的执行结果:

top - 21:22:04 up 1 day, 23:49, 1 user, load average: 1.10, 1.66, 1.99

Tasks: 202 total, 4 running, 198 sl

- spring四种依赖注入方式

白糖_

spring

平常的java开发中,程序员在某个类中需要依赖其它类的方法,则通常是new一个依赖类再调用类实例的方法,这种开发存在的问题是new的类实例不好统一管理,spring提出了依赖注入的思想,即依赖类不由程序员实例化,而是通过spring容器帮我们new指定实例并且将实例注入到需要该对象的类中。依赖注入的另一种说法是“控制反转”,通俗的理解是:平常我们new一个实例,这个实例的控制权是我

- angular.injector

boyitech

AngularJSAngularJS API

angular.injector

描述: 创建一个injector对象, 调用injector对象的方法可以获得angular的service, 或者用来做依赖注入. 使用方法: angular.injector(modules, [strictDi]) 参数详解: Param Type Details mod

- java-同步访问一个数组Integer[10],生产者不断地往数组放入整数1000,数组满时等待;消费者不断地将数组里面的数置零,数组空时等待

bylijinnan

Integer

public class PC {

/**

* 题目:生产者-消费者。

* 同步访问一个数组Integer[10],生产者不断地往数组放入整数1000,数组满时等待;消费者不断地将数组里面的数置零,数组空时等待。

*/

private static final Integer[] val=new Integer[10];

private static

- 使用Struts2.2.1配置

Chen.H

apachespringWebxmlstruts

Struts2.2.1 需要如下 jar包: commons-fileupload-1.2.1.jar commons-io-1.3.2.jar commons-logging-1.0.4.jar freemarker-2.3.16.jar javassist-3.7.ga.jar ognl-3.0.jar spring.jar

struts2-core-2.2.1.jar struts2-sp

- [职业与教育]青春之歌

comsci

教育

每个人都有自己的青春之歌............但是我要说的却不是青春...

大家如果在自己的职业生涯没有给自己以后创业留一点点机会,仅仅凭学历和人脉关系,是难以在竞争激烈的市场中生存下去的....

&nbs

- oracle连接(join)中使用using关键字

daizj

JOINoraclesqlusing

在oracle连接(join)中使用using关键字

34. View the Exhibit and examine the structure of the ORDERS and ORDER_ITEMS tables.

Evaluate the following SQL statement:

SELECT oi.order_id, product_id, order_date

FRO

- NIO示例

daysinsun

nio

NIO服务端代码:

public class NIOServer {

private Selector selector;

public void startServer(int port) throws IOException {

ServerSocketChannel serverChannel = ServerSocketChannel.open(

- C语言学习homework1

dcj3sjt126com

chomework

0、 课堂练习做完

1、使用sizeof计算出你所知道的所有的类型占用的空间。

int x;

sizeof(x);

sizeof(int);

# include <stdio.h>

int main(void)

{

int x1;

char x2;

double x3;

float x4;

printf(&quo

- select in order by , mysql排序

dcj3sjt126com

mysql

If i select like this:

SELECT id FROM users WHERE id IN(3,4,8,1);

This by default will select users in this order

1,3,4,8,

I would like to select them in the same order that i put IN() values so:

- 页面校验-新建项目

fanxiaolong

页面校验

$(document).ready(

function() {

var flag = true;

$('#changeform').submit(function() {

var projectScValNull = true;

var s ="";

var parent_id = $("#parent_id").v

- Ehcache(02)——ehcache.xml简介

234390216

ehcacheehcache.xml简介

ehcache.xml简介

ehcache.xml文件是用来定义Ehcache的配置信息的,更准确的来说它是定义CacheManager的配置信息的。根据之前我们在《Ehcache简介》一文中对CacheManager的介绍我们知道一切Ehcache的应用都是从CacheManager开始的。在不指定配置信

- junit 4.11中三个新功能

jackyrong

java

junit 4.11中两个新增的功能,首先是注解中可以参数化,比如

import static org.junit.Assert.assertEquals;

import java.util.Arrays;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.junit.runn

- 国外程序员爱用苹果Mac电脑的10大理由

php教程分享

windowsPHPunixMicrosoftperl

Mac 在国外很受欢迎,尤其是在 设计/web开发/IT 人员圈子里。普通用户喜欢 Mac 可以理解,毕竟 Mac 设计美观,简单好用,没有病毒。那么为什么专业人士也对 Mac 情有独钟呢?从个人使用经验来看我想有下面几个原因:

1、Mac OS X 是基于 Unix 的

这一点太重要了,尤其是对开发人员,至少对于我来说很重要,这意味着Unix 下一堆好用的工具都可以随手捡到。如果你是个 wi

- 位运算、异或的实际应用

wenjinglian

位运算

一. 位操作基础,用一张表描述位操作符的应用规则并详细解释。

二. 常用位操作小技巧,有判断奇偶、交换两数、变换符号、求绝对值。

三. 位操作与空间压缩,针对筛素数进行空间压缩。

&n

- weblogic部署项目出现的一些问题(持续补充中……)

Everyday都不同

weblogic部署失败

好吧,weblogic的问题确实……

问题一:

org.springframework.beans.factory.BeanDefinitionStoreException: Failed to read candidate component class: URL [zip:E:/weblogic/user_projects/domains/base_domain/serve

- tomcat7性能调优(01)

toknowme

tomcat7

Tomcat优化: 1、最大连接数最大线程等设置

<Connector port="8082" protocol="HTTP/1.1"

useBodyEncodingForURI="t

- PO VO DAO DTO BO TO概念与区别

xp9802

javaDAO设计模式bean领域模型

O/R Mapping 是 Object Relational Mapping(对象关系映射)的缩写。通俗点讲,就是将对象与关系数据库绑定,用对象来表示关系数据。在O/R Mapping的世界里,有两个基本的也是重要的东东需要了解,即VO,PO。

它们的关系应该是相互独立的,一个VO可以只是PO的部分,也可以是多个PO构成,同样也可以等同于一个PO(指的是他们的属性)。这样,PO独立出来,数据持