Building a High-Availability Hadoop Cluster on 4 Virtual Machines: Step by Step

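Contents

I. Cluster installation

1. Choosing software versions

2. Machine configuration

1) Role assignment across the 4 machines

2) Edit hosts

3) Passwordless SSH login

3. Software installation

1) Install the JDK

2) Install ZooKeeper

3) Install Hadoop

4) Summary

II. Starting the cluster

1. Start ZooKeeper

2. Start Hadoop

1) Start the JournalNode processes and initialize the NameNode

2) Start the file system

3) Start the YARN cluster

4) Start the MapReduce job history server

III. Verifying the cluster

IV. One-click start script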

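I. Cluster installation

1. Choosing software versions

Picking compatible versions of Hadoop, ZooKeeper, the JDK, and the operating system saves a lot of effort and makes it easier to add components such as Hive, Storm, or Spark later. In particular, do not install a 32-bit Hadoop build on a 64-bit system (or a 64-bit build on a 32-bit system) and then go hunting for a matching libhadoop.so.1.0.0 to swap in: that leads to a string of baffling, deeply hidden problems. These are the software and system versions I used:

System: 64-bit Red Hat 6.5. Link: https://pan.baidu.com/s/12Mr6RHzaYac4F-xaAzm59Q extraction code: op9z

Software: JDK 1.7, Hadoop 2.6, ZooKeeper 3.4. Link: https://pan.baidu.com/s/13phkiYe2zlw9NhaEvbDRzQ extraction code: t59h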
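One way to catch a 32/64-bit mismatch early is to compare the word size of the OS with the ELF class of the native library. The helper below is only a sketch: the function name `elf_bits` and the library path in the usage comment are my own assumptions, not part of the original setup. It reads byte 5 of the ELF header, where 1 means 32-bit and 2 means 64-bit.

```shell
# elf_bits FILE: print 32 or 64 depending on the ELF class of FILE.
# (hypothetical helper; runs in a subshell so its variables do not leak)
elf_bits() (
  file=$1
  # The first four bytes of an ELF file are 0x7f 'E' 'L' 'F'.
  magic=$(od -An -c -N4 "$file" | tr -d ' \n')
  [ "$magic" = "177ELF" ] || { echo "not an ELF file: $file" >&2; exit 1; }
  # The fifth byte (EI_CLASS) is 1 for 32-bit and 2 for 64-bit objects.
  class=$(od -An -t u1 -j4 -N1 "$file" | tr -d ' \n')
  case $class in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo "unknown ELF class: $class" >&2; exit 1 ;;
  esac
)

# Usage (the library path is illustrative and may differ in your layout):
#   getconf LONG_BIT                                         # word size of the OS
#   elf_bits "$HADOOP_HOME/lib/native/libhadoop.so.1.0.0"    # word size of the library
```

If the two numbers differ, the native library will probably fail to load on that machine.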

2. Machine configuration

1) Role assignment across the 4 machines

| Hostname | redhat01 | redhat02 | redhat03 | redhat04 |
| --- | --- | --- | --- | --- |
| IP | 192.168.202.121 | 192.168.202.122 | 192.168.202.123 | 192.168.202.124 |
| ZooKeeper | zookeeper | zookeeper | zookeeper | zookeeper (observer) |
| DataNode | datanode | datanode | datanode | datanode |
| NameNode / ResourceManager | namenode | namenode | resourcemanager | resourcemanager |

2) Edit hosts

Add the following entries to /etc/hosts on all 4 machines (editing this file requires root):

vim /etc/hosts

192.168.202.121 redhat01

192.168.202.122 redhat02

192.168.202.123 redhat03

192.168.202.124 redhat04

3) Passwordless SSH login

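(I recommend creating the same regular user on all 4 machines and running everything below as that user.) Run the following on each of the 4 machines:

ssh-keygen -t rsa

Press Enter at every prompt. Then, on redhat01, run:

touch ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

Paste the contents of ~/.ssh/id_rsa.pub from all 4 machines into the new authorized_keys file, then distribute that file to the other 3 machines:

scp ~/.ssh/authorized_keys redhat02:~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys redhat03:~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys redhat04:~/.ssh/authorized_keys

After that, ssh from every machine to all 4 machines (including itself). The first connection to each host asks you to type yes to accept the host key; later connections do not. For example, on redhat01:

ssh redhat01

# type yes when prompted

ssh redhat02

# type yes when prompted

ssh redhat03

# type yes when prompted

ssh redhat04

# type yes when prompted

3. Software installation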

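1) Install the JDK

Unpack the JDK, then set the environment variables.

Be sure to put them in ~/.bashrc; then you do not have to edit the JAVA_HOME entry in hadoop-env.sh. Otherwise startup fails with an error saying JAVA_HOME does not exist. The reason is that the Hadoop start scripts launch the daemons over ssh in a non-interactive, non-login shell, which does not read ~/.bash_profile but does read ~/.bashrc. For the details, search for "difference between bashrc and profile".

vim ~/.bashrc

# append at the end

export JAVA_HOME=/home/hadoop/bigdata/jdk1.7.0_80

export PATH=.:$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

2) Install ZooKeeper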

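Unpack ZooKeeper and set up the environment:

vim ~/.bash_profile

## append at the end

export ZOOKEEPER_HOME=/home/hadoop/bigdata/zookeeper-3.4.11

PATH=$PATH:$HOME/bin:$ZOOKEEPER_HOME/bin

export PATH

Go to the ZooKeeper installation directory; its conf directory holds the ZooKeeper configuration files.

cp zoo_sample.cfg zoo.cfg

Copy the following configuration into zoo.cfg: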

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/home/hadoop/bigdata/zookeeper-3.4.11/data

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

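## add by user

# Add the lines below by hand.

# server.id=hostname:peer communication port:election port

# Note: each node is given an id here, and the ids are written into this configuration file.

# An id is any unique number from 1 to 255; keep track of which id belongs to which node.

# The observer suffix marks that machine as an observer.

server.1=redhat01:2888:3888

server.2=redhat02:2888:3888

server.3=redhat03:2888:3888

server.4=redhat04:2888:3888:observer

The three ZooKeeper roles:

leader: accepts and can process all read and write requests; every write request in the cluster is processed by the leader.

follower: accepts all read and write requests, but serves reads itself and forwards writes to the leader.

observer: the only difference from a follower is that it can neither vote nor be elected.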

Note the line dataDir=/home/hadoop/bigdata/zookeeper-3.4.11/data: change it to your own directory and create that directory by hand. Inside it, also create a file named myid. Its content differs on every machine and must match the number after "server." in the entries at the end of zoo.cfg:

# on redhat01

echo 1 >/home/hadoop/bigdata/zookeeper-3.4.11/data/myid

# on redhat02

echo 2 >/home/hadoop/bigdata/zookeeper-3.4.11/data/myid

# on redhat03

echo 3 >/home/hadoop/bigdata/zookeeper-3.4.11/data/myid

# on redhat04

echo 4 >/home/hadoop/bigdata/zookeeper-3.4.11/data/myid

3) Install Hadoop

Unpack Hadoop and set up the environment:

vim ~/.bash_profile

## append at the end

export ZOOKEEPER_HOME=/home/hadoop/bigdata/zookeeper-3.4.11

export HADOOP_HOME=/home/hadoop/bigdata/hadoop-2.6.0

PATH=$PATH:$HOME/bin:$ZOOKEEPER_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export PATH

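Edit the configuration files under etc/hadoop in the Hadoop directory.

core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns/</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/bigdata/hadoop-2.6.0/data/hadoopdata</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>redhat01:2181,redhat02:2181,redhat03:2181,redhat04:2181</value>
  </property>
  <property>
    <name>ipc.client.connect.max.retries</name>
    <value>50</value>
    <description>Indicates the number of retries a client will make to establish a server connection.</description>
  </property>
  <property>
    <name>ipc.client.connect.retry.interval</name>
    <value>10000</value>
    <description>Indicates the number of milliseconds a client will wait for before retrying to establish a server connection.</description>
  </property>
</configuration>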
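After editing a file like this it is worth reading a value back. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` asks Hadoop itself. Before that, a rough line-based lookup can do the job. The sketch below is not a real XML parser: it assumes each `<name>` and each `<value>` element sits on a single line, and `get_prop` is a hypothetical helper name.

```shell
# get_prop FILE KEY: print the <value> that follows <name>KEY</name> in FILE.
# Line-based sketch; assumes every <name> and <value> fits on one line.
get_prop() {
  awk -v key="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n)      # drop everything up to the opening tag
      sub(/<\/name>.*/, "", n)    # drop the closing tag and the rest
      found = (n == key)
    }
    found && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v)
      sub(/<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$1"
}

# Usage:
#   get_prop "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS
```

An empty result means the key was not found, which usually points to a typo in the property name.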

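hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.nameservices</name>
    <value>ns</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.ns</name>
    <value>nn01,nn02</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.nn01</name>
    <value>redhat01:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.nn01</name>
    <value>redhat01:50070</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.nn02</name>
    <value>redhat02:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.nn02</name>
    <value>redhat02:50070</value>
  </property>
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://redhat01:8485;redhat02:8485;redhat03:8485/ns</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/home/hadoop/bigdata/hadoop-2.6.0/data/journaldata</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.ns</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
      sshfence
      shell(/bin/true)
    </value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
</configuration>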

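mapred-site.xml

Note: this file does not exist in a fresh installation. Copy mapred-site.xml.template and name the copy mapred-site.xml.

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>redhat01:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>redhat01:19888</value>
  </property>
</configuration>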

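yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>jyarn</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>redhat03</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>redhat04</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>redhat01:2181,redhat02:2181,redhat03:2181,redhat04:2181</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>86400</value>
  </property>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
</configuration>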

Finally, edit the slaves file to list the nodes that run a DataNode:

vim slaves

redhat01

redhat02

redhat03

redhat04

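4) Summary

All of this has to be configured on all 4 machines. It is tedious, but take care, because mistakes here easily cause problems later. You can also finish the configuration on one server and copy it straight to the other machines:

scp -r /home/hadoop/bigdata/hadoop-2.6.0 redhat02:/home/hadoop/bigdata/hadoop-2.6.0

Once passwordless login is set up, copying with scp is very convenient.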
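The copy step can be repeated for every node with a small loop. The following is a minimal sketch, assuming the same directory layout on all machines; `sync_to_nodes` and the `DRY_RUN` switch are hypothetical names introduced here for illustration.

```shell
# sync_to_nodes DIR NODE...: copy DIR to the same parent directory on each NODE.
# Set DRY_RUN=1 to print the scp commands instead of running them.
sync_to_nodes() (
  dir=$1
  shift
  parent=$(dirname "$dir")
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $dir $node:$parent/"
    else
      # Copying into the parent avoids nesting DIR inside an existing DIR.
      scp -r "$dir" "$node:$parent/" || exit 1
    fi
  done
)

# Usage:
#   DRY_RUN=1 sync_to_nodes /home/hadoop/bigdata/hadoop-2.6.0 redhat02 redhat03 redhat04
#   sync_to_nodes /home/hadoop/bigdata/hadoop-2.6.0 redhat02 redhat03 redhat04
```

The sketch targets the parent directory rather than the full path because `scp -r` to a path that already exists as a directory places the copy inside it.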

Once all the preparation is done, reload the environment variables:

# run on every machine

source ~/.bash_profile

II. Starting the cluster

1、启动zookeeper

在四台服务器上分别执行

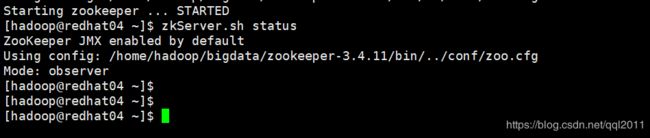

zkserver.sh start启动后可以查看节点状态,如redhat04确实处于observer模式

输入jps后可以看到zookeeper进程
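Checking the role of all four nodes by hand gets repetitive, so here is a small sketch that collects them in one pass. It relies on `zkServer.sh status` printing a line of the form `Mode: follower`; the helper names `zk_mode` and `zk_roles` and the `ZK_BIN` variable are assumptions of this example, and the path is the one used earlier in this article.

```shell
# Directory holding zkServer.sh on every node (taken from the setup above).
ZK_BIN=${ZK_BIN:-/home/hadoop/bigdata/zookeeper-3.4.11/bin}

# zk_mode: extract the role from `zkServer.sh status` output read on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# zk_roles NODE...: print "node role" for each node, or "node unknown"
# when the node cannot be reached or reports no mode.
zk_roles() (
  for node in "$@"; do
    role=$(ssh "$node" "$ZK_BIN/zkServer.sh status" 2>/dev/null | zk_mode)
    echo "$node ${role:-unknown}"
  done
)

# Usage:
#   zk_roles redhat01 redhat02 redhat03 redhat04
```

With the configuration above you would expect exactly one leader, two followers, and redhat04 reported as observer.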

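2. Start Hadoop

1) Start the JournalNode processes and initialize the NameNode

Start the JournalNode process on the 3 JournalNode hosts, redhat01, redhat02, and redhat03:

hadoop-daemon.sh start journalnode

Format the file system on redhat01 (hadoop namenode still works in Hadoop 2.6 but prints a deprecation warning; hdfs namenode is the current form):

hdfs namenode -format

Start the NameNode process on redhat01:

hadoop-daemon.sh start namenode

Sync the NameNode metadata on redhat02:

hdfs namenode -bootstrapStandby

Format the ZKFC state in ZooKeeper on redhat01 or redhat02:

hdfs zkfc -formatZK

2) Start the file system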

先停掉所有进程,之后通过start-dfs.sh 一起启动

#在redhat01、redhat02、redhat03上分别执行,停掉journalnode进程

hadoop-daemon.sh stop journalnode

#在redhat01上执行,停掉namenode进程

hadoop-daemon.sh stop namenode

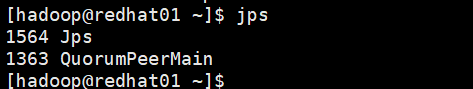

#在每个机器上执行jps,可看到所有进程都没停掉了。只有个jps进程和zookeeper进程

jps

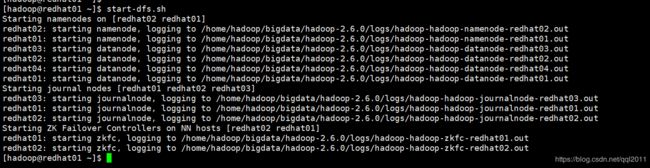

在redhat01上执行start-dfs.sh

启动结果如下

通过http://ip:50070访问web界面 ip是redhat01、redhat02的静态ip
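Besides the web UI, the HA state of each NameNode can be read from the command line with `hdfs haadmin -getServiceState`. The loop below is a small sketch around that command; `nn_states` is a hypothetical helper, and nn01 and nn02 are the NameNode ids from hdfs-site.xml above.

```shell
# nn_states ID...: print "id state" for each NameNode id,
# or "id unknown" when the query fails (for example, NameNode not running).
nn_states() (
  for id in "$@"; do
    state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null) || state=unknown
    echo "$id $state"
  done
)

# Usage:
#   nn_states nn01 nn02
```

A healthy cluster should report one id as active and the other as standby.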

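3) Start the YARN cluster

Run start-yarn.sh on redhat03.

Start the ResourceManager process on redhat04:

yarn-daemon.sh start resourcemanager

View the YARN web UI at http://ip:8088, where ip is the static IP of redhat03 or redhat04.

Because the ResourceManager on redhat03 is active and the one on redhat04 is standby, opening the YARN web UI with redhat04's IP redirects to redhat03 by hostname. My local computer has no hosts entry for redhat03, so the browser cannot resolve the host; this is not a cluster error.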

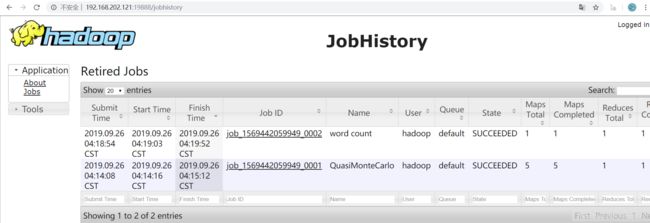
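To find out which ResourceManager is currently active without going through the browser redirect, `yarn rmadmin -getServiceState` can be queried for each id. This is a sketch only; `active_rm` is a hypothetical helper, and rm1 and rm2 are the ids from yarn-site.xml above.

```shell
# active_rm ID...: print the first ResourceManager id whose state is "active".
# Returns non-zero when none of the ids reports active.
active_rm() (
  for id in "$@"; do
    state=$(yarn rmadmin -getServiceState "$id" 2>/dev/null) || continue
    if [ "$state" = "active" ]; then
      echo "$id"
      exit 0
    fi
  done
  exit 1
)

# Usage:
#   active_rm rm1 rm2
```

Knowing the active id tells you which host (redhat03 for rm1, redhat04 for rm2) to open on port 8088.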

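4) Start the MapReduce job history server

Run on redhat01:

mr-jobhistory-daemon.sh start historyserver

Open the job history web UI at http://ip:19888, where ip is redhat01's static IP.

III. Verifying the cluster

The processes on the four machines are as follows: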

[hadoop@redhat01 ~]$ jps

2443 JobHistoryServer

2286 NodeManager

2071 DFSZKFailoverController

1950 JournalNode

1787 DataNode

2516 Jps

1363 QuorumPeerMain

1688 NameNode

[hadoop@redhat02 ~]$ jps

1667 DFSZKFailoverController

1249 QuorumPeerMain

1873 NodeManager

1417 NameNode

1559 JournalNode

2058 Jps

1484 DataNode

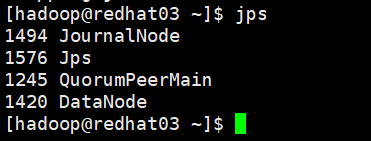

[hadoop@redhat03 ~]$ jps

1494 JournalNode

1624 ResourceManager

2067 Jps

1245 QuorumPeerMain

1725 NodeManager

1420 DataNode

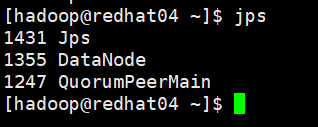

[hadoop@redhat04 ~]$ jps

1701 Jps

1476 NodeManager

1355 DataNode

1247 QuorumPeerMain

1622 ResourceManager
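Comparing jps listings by eye is error-prone, so a small filter can report what is missing on a node. This is a sketch; `missing_daemons` is a hypothetical helper, and the expected names in the usage comment are taken from the redhat01 listing above.

```shell
# missing_daemons NAME...: read `jps` output on stdin and print every
# expected process NAME that does not appear in it.
missing_daemons() (
  running=$(awk '{print $2}')    # second column of jps output is the class name
  for name in "$@"; do
    echo "$running" | grep -qx "$name" || echo "$name"
  done
)

# Usage (on redhat01):
#   jps | missing_daemons NameNode DataNode JournalNode \
#       DFSZKFailoverController QuorumPeerMain NodeManager JobHistoryServer
```

No output means every expected daemon is running on that machine.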

Check the cluster status:

[hadoop@redhat01 ~]$ hdfs dfsadmin -report

Configured Capacity: 50189762560 (46.74 GB)

Present Capacity: 21985267712 (20.48 GB)

DFS Remaining: 21984055296 (20.47 GB)

DFS Used: 1212416 (1.16 MB)

DFS Used%: 0.01%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Live datanodes (4):

Name: 192.168.202.124:50010 (redhat04)

Hostname: redhat04

Decommission Status : Normal

Configured Capacity: 12547440640 (11.69 GB)

DFS Used: 544768 (532 KB)

......

......(omitted)

[hadoop@redhat01 ~]$

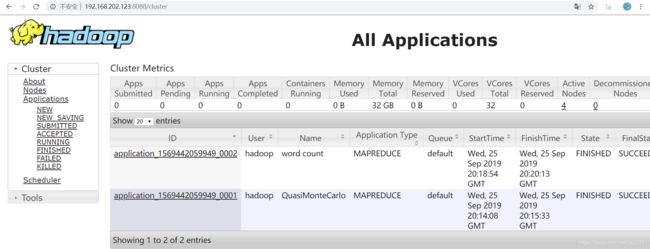

Run the wordcount program that ships with Hadoop:

# create the input directory

hadoop fs -mkdir -p /user/hadoop/input

# upload LICENSE.txt from the Hadoop installation directory

hadoop fs -put /home/hadoop/bigdata/hadoop-2.6.0/LICENSE.txt /user/hadoop/input/

# the bundled wordcount program lives under share/hadoop/mapreduce/ in the Hadoop installation directory

hadoop jar /home/hadoop/bigdata/hadoop-2.6.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar wordcount /user/hadoop/input /user/hadoop/output

After the job succeeds, the results are in /user/hadoop/output on the cluster.
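To look at the result, the output files can be streamed through a sort. The sketch below assumes the usual wordcount output format of one `word<TAB>count` pair per line; `top_words` is a hypothetical helper name.

```shell
# top_words [N]: read "word<TAB>count" lines on stdin and print the N most
# frequent words (default 10), ties broken alphabetically.
top_words() {
  sort -t "$(printf '\t')" -k2,2nr -k1,1 | head -n "${1:-10}"
}

# Usage:
#   hadoop fs -cat '/user/hadoop/output/part-r-*' | top_words 5
```

Note that the job refuses to run if the output directory already exists, so remove it with `hadoop fs -rm -r /user/hadoop/output` before running wordcount again.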

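IV. One-click start script

Starting everything by hand each time is tedious, so I wrote one-click start and stop scripts for Hadoop. They are meant for the second and later starts; the first-time initialization still has to be done step by step as above: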

#!/bin/bash
# One-click start for an already-initialized cluster; run on redhat01.

zp_home_dir='/home/hadoop/bigdata/zookeeper-3.4.11/bin'
hd_home_dir='/home/hadoop/bigdata/hadoop-2.6.0/sbin'
nodeArr=(redhat01 redhat02 redhat03 redhat04)

# ZooKeeper must be up first: HDFS and YARN HA both depend on it.
echo '---- start zookeeper ----'
for node in "${nodeArr[@]}"; do
    echo "$node -> starting zookeeper"
    ssh "$node" "${zp_home_dir}/zkServer.sh start"
done
sleep 3s

echo '---- start hdfs ----'
"${hd_home_dir}/start-dfs.sh"
sleep 3s

echo '----redhat03 start yarn ----'
ssh redhat03 "${hd_home_dir}/start-yarn.sh"
sleep 3s

# start-yarn.sh only starts the local ResourceManager, so start the second one by hand.
echo '----redhat04 start resourcemanager ----'
ssh redhat04 "${hd_home_dir}/yarn-daemon.sh start resourcemanager"

echo '----redhat01 start mapreduce jobhistory server ----'
"${hd_home_dir}/mr-jobhistory-daemon.sh" start historyserver

Stop script:

#!/bin/bash
# One-click stop; run on redhat01. Services stop in the reverse of the start order.

zp_home_dir='/home/hadoop/bigdata/zookeeper-3.4.11/bin'
hd_home_dir='/home/hadoop/bigdata/hadoop-2.6.0/sbin'
nodeArr=(redhat01 redhat02 redhat03 redhat04)

echo '----redhat01 stop mapreduce jobhistory server ----'
"${hd_home_dir}/mr-jobhistory-daemon.sh" stop historyserver
sleep 3s

echo '----redhat04 stop resourcemanager ----'
ssh redhat04 "${hd_home_dir}/yarn-daemon.sh stop resourcemanager"
sleep 3s

echo '----redhat03 stop yarn ----'
ssh redhat03 "${hd_home_dir}/stop-yarn.sh"
sleep 3s

echo '---- stop hdfs ----'
"${hd_home_dir}/stop-dfs.sh"

# ZooKeeper goes down last, after everything that depends on it.
echo '---- stop zookeeper ----'
for node in "${nodeArr[@]}"; do
    echo "$node -> stopping zookeeper"
    ssh "$node" "${zp_home_dir}/zkServer.sh stop"
done
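The fixed `sleep 3s` pauses in these scripts are a guess about how long each service needs. A retry helper is one possible alternative. The sketch below is not part of the original scripts; `wait_until` is a hypothetical name, and the usage line assumes the status command exits non-zero until the service is up.

```shell
# wait_until TIMEOUT CMD...: run CMD once per second until it succeeds or
# TIMEOUT seconds have passed. Returns 0 on success, 1 on timeout.
wait_until() (
  timeout=$1
  shift
  elapsed=0
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      exit 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
)

# Usage (instead of `sleep 3s` after starting ZooKeeper):
#   wait_until 30 ssh redhat01 "/home/hadoop/bigdata/zookeeper-3.4.11/bin/zkServer.sh status" \
#       || echo 'zookeeper on redhat01 did not come up in time'
```

Waiting on an actual check makes the script slower on a cold start but avoids launching HDFS before ZooKeeper is ready.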