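Integrating Kafka with Spring Boot to Send and Receive Messages

This article shows how to integrate Kafka with Spring Boot in order to send and receive messages.

Versions used: Spring Boot 2.0.6, Kafka (spring-kafka) 2.1.10.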

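Environment setup: installing and deploying Kafka with Docker

1. Pull the images

- Kafka relies on ZooKeeper for coordination, so pull the ZooKeeper image first:

    docker pull wurstmeister/zookeeper

- Then pull the Kafka image:

    docker pull wurstmeister/kafka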

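2. Start the ZooKeeper and Kafka containers

- Start a container from the ZooKeeper image:

    docker run -d --name zookeeper -p 2181:2181 -t wurstmeister/zookeeper

- Start a container from the Kafka image:

    docker run -d --name kafka -p 9092:9092 -e KAFKA_BROKER_ID=0 -e KAFKA_ZOOKEEPER_CONNECT=10.0.75.1:2181 -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.0.75.1:9092 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 -t wurstmeister/kafka

The environment variables have the following meaning:

- -e KAFKA_BROKER_ID=0: in a Kafka cluster, every broker has a BROKER_ID that distinguishes it from the others.

- -e KAFKA_ZOOKEEPER_CONNECT=10.0.75.1:2181: the ZooKeeper address that manages Kafka. Use the IP of the host or virtual machine that runs ZooKeeper (here it is the same machine). A path can be appended to keep Kafka's data under its own ZooKeeper node, for example 192.168.155.56:2181/kafka.

- -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://10.0.75.1:9092: the address and port that Kafka registers with ZooKeeper and that clients use to connect (again the IP of the same machine).

- -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092: the address and port the Kafka broker listens on.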
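
The two listener variables share the same PROTOCOL://host:port shape but serve different purposes: one is the address the broker binds to, the other is the address handed out to clients. As a rough illustration only, the sketch below splits such a string into its parts in plain Java. The class name ListenerAddress and its methods are hypothetical helpers written for this article, not part of Kafka.

```java
/** Sketch: split a listener string such as PLAINTEXT://10.0.75.1:9092 into its parts. */
final class ListenerAddress {
    final String protocol;
    final String host;
    final int port;

    private ListenerAddress(String protocol, String host, int port) {
        this.protocol = protocol;
        this.host = host;
        this.port = port;
    }

    /** Parses "PROTOCOL://host:port"; throws IllegalArgumentException on any other shape. */
    static ListenerAddress parse(String listener) {
        int schemeEnd = listener.indexOf("://");
        int portStart = listener.lastIndexOf(':');
        if (schemeEnd <= 0 || portStart < schemeEnd + 3) {
            throw new IllegalArgumentException("Expected PROTOCOL://host:port but got: " + listener);
        }
        String protocol = listener.substring(0, schemeEnd);
        String host = listener.substring(schemeEnd + 3, portStart);
        int port = Integer.parseInt(listener.substring(portStart + 1));
        return new ListenerAddress(protocol, host, port);
    }

    /** True for 0.0.0.0, which means "bind to every interface" and is not a routable client address. */
    boolean isWildcard() {
        return "0.0.0.0".equals(host);
    }

    public static void main(String[] args) {
        ListenerAddress bind = parse("PLAINTEXT://0.0.0.0:9092");
        ListenerAddress advertised = parse("PLAINTEXT://10.0.75.1:9092");
        System.out.println("bind address is wildcard: " + bind.isWildcard());
        System.out.println("clients connect to " + advertised.host + ":" + advertised.port);
    }
}
```

The wildcard address is fine for KAFKA_LISTENERS, but the advertised listener has to be an address that clients can actually reach, which is why the command uses the machine's IP there.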

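3. Test whether the deployment succeeded

- Open a shell inside the Kafka container:

    docker exec -it kafka /bin/bash

- Change to the bin directory that contains the Kafka scripts:

    cd /opt/kafka_x.xx-x.x.x/bin

- Run the Kafka console producer to send a message: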

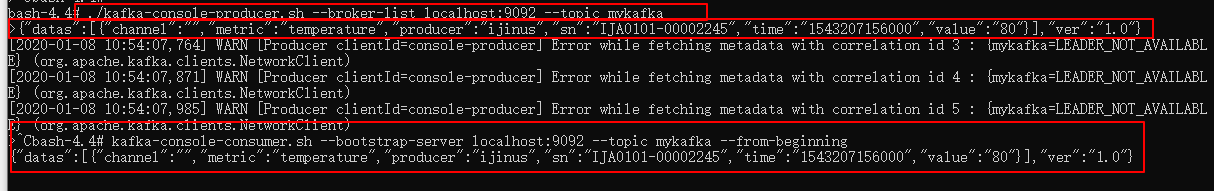

    ./kafka-console-producer.sh --broker-list localhost:9092 --topic mykafka

At the producer prompt, type a message and press Enter, for example:

    {"datas":[{"channel":"","metric":"temperature","producer":"ijinus","sn":"IJA0101-00002245","time":"1543207156000","value":"80"}],"ver":"1.0"}

4. Run the Kafka console consumer to receive the message:

    ./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic mykafka --from-beginning

At this point Kafka has been deployed in containers and started successfully.
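
Before moving on to the Spring Boot side, it can help to confirm from the development machine that the published ports are reachable. The following is a minimal sketch using only the JDK. The class name PortCheck and the 2-second timeout are assumptions made for illustration, and a successful TCP connection only shows that the port is open, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Sketch: check whether a TCP port accepts connections. */
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass the Docker host IP as the first argument, e.g. 10.0.75.1
        String host = args.length > 0 ? args[0] : "localhost";
        System.out.println("ZooKeeper 2181 reachable: " + isReachable(host, 2181, 2000));
        System.out.println("Kafka 9092 reachable: " + isReachable(host, 9092, 2000));
    }
}
```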

Integrating Kafka with Spring Boot

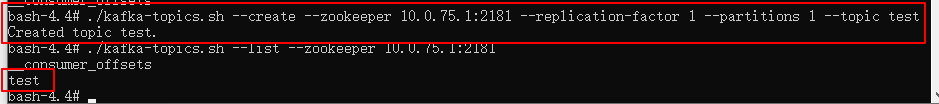

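Create a Kafka topic. Kafka ships with a command-line utility named kafka-topics.sh for creating topics on the server. Open a new terminal inside the Kafka container and create a topic named test.

First change to the /opt/kafka_x.xx-x.x.x/bin directory, then run:

    ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test

This command creates a topic that is registered in ZooKeeper (at the given ZooKeeper address) with a replication factor of 1, 1 partition, and the name test.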
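
The values passed to the create command are not arbitrary: a topic name may only contain ASCII letters, digits, '.', '_' and '-' (at most 249 characters), and the replication factor cannot exceed the number of brokers, which is why 1 is used with the single broker started earlier. The sketch below checks these rules in plain Java. TopicSpec is a hypothetical helper written for this article, not a Kafka class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Sketch: sanity-check the arguments of a topic creation request. */
final class TopicSpec {
    private static final Pattern LEGAL_NAME = Pattern.compile("[a-zA-Z0-9._-]+");
    private static final int MAX_NAME_LENGTH = 249;

    final String name;
    final int partitions;
    final int replicationFactor;

    TopicSpec(String name, int partitions, int replicationFactor) {
        this.name = name;
        this.partitions = partitions;
        this.replicationFactor = replicationFactor;
    }

    /** Returns a list of problems; an empty list means the spec looks acceptable. */
    List<String> problems(int brokerCount) {
        List<String> problems = new ArrayList<>();
        if (name == null || name.equals(".") || name.equals("..")
                || name.length() > MAX_NAME_LENGTH || !LEGAL_NAME.matcher(name).matches()) {
            problems.add("illegal topic name: " + name);
        }
        if (partitions < 1) {
            problems.add("partitions must be at least 1");
        }
        if (replicationFactor < 1) {
            problems.add("replication factor must be at least 1");
        } else if (replicationFactor > brokerCount) {
            problems.add("replication factor " + replicationFactor
                    + " exceeds broker count " + brokerCount);
        }
        return problems;
    }

    public static void main(String[] args) {
        // Same values as the kafka-topics.sh command, checked against one broker
        System.out.println(new TopicSpec("test", 1, 1).problems(1));
        // A replication factor of 3 cannot be satisfied by a single broker
        System.out.println(new TopicSpec("test", 1, 3).problems(1));
    }
}
```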

1. Add the dependencies:

    <parent>
        <artifactId>spring-boot-demo-base</artifactId>
        <groupId>spring-boot-demo-base</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>

    <modelVersion>4.0.0</modelVersion>

    <artifactId>spring-boot-demo-kafka</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <packaging>jar</packaging>

    <dependencies>
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
    </dependencies>

2. application.yml

    server:
      port: 8080
      servlet:
        context-path: /kafka
    spring:
      kafka:
        bootstrap-servers: 10.0.75.1:9092
        # Configure only the producer if the application only sends messages
        producer:
          retries: 0
          batch-size: 16384
          buffer-memory: 33554432
          key-serializer: org.apache.kafka.common.serialization.StringSerializer
          value-serializer: org.apache.kafka.common.serialization.StringSerializer
        # Consumer configuration
        consumer:
          group-id: test-consumer
          # Commit offsets manually
          enable-auto-commit: false
          auto-offset-reset: latest
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          properties:
            session.timeout.ms: 60000
        listener:
          log-container-config: false
          concurrency: 5
          # Commit offsets manually
          ack-mode: manual_immediate
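
The producer and consumer entries in this file end up as ordinary Kafka client properties, while the listener entries configure Spring's listener container rather than the Kafka client. As a rough guide to that mapping, the sketch below writes out the equivalent client property keys by hand. KafkaClientProps is a hypothetical class for illustration, and the serializers are given as class-name strings, which the Kafka client accepts.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: the Kafka client property keys behind the spring.kafka.* entries. */
final class KafkaClientProps {

    /** Keys corresponding to spring.kafka.bootstrap-servers and spring.kafka.producer.* */
    static Map<String, Object> producer() {
        Map<String, Object> props = new LinkedHashMap<>();
        props.put("bootstrap.servers", "10.0.75.1:9092");
        props.put("retries", 0);
        props.put("batch.size", 16384);
        props.put("buffer.memory", 33554432L);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        return props;
    }

    /** Keys corresponding to spring.kafka.bootstrap-servers and spring.kafka.consumer.* */
    static Map<String, Object> consumer() {
        Map<String, Object> props = new LinkedHashMap<>();
        props.put("bootstrap.servers", "10.0.75.1:9092");
        props.put("group.id", "test-consumer");
        props.put("enable.auto.commit", false);
        props.put("auto.offset.reset", "latest");
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("session.timeout.ms", 60000);
        return props;
    }

    public static void main(String[] args) {
        producer().forEach((key, value) -> System.out.println("producer " + key + " = " + value));
        consumer().forEach((key, value) -> System.out.println("consumer " + key + " = " + value));
    }
}
```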

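3. The KafkaConfig.java configuration class

    import lombok.AllArgsConstructor;
    import org.springframework.boot.autoconfigure.kafka.KafkaProperties;
    import org.springframework.boot.context.properties.EnableConfigurationProperties;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.kafka.annotation.EnableKafka;
    import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
    import org.springframework.kafka.core.ConsumerFactory;
    import org.springframework.kafka.core.DefaultKafkaConsumerFactory;
    import org.springframework.kafka.core.DefaultKafkaProducerFactory;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.core.ProducerFactory;
    import org.springframework.kafka.listener.AbstractMessageListenerContainer;

    /**
     * @Author: zhihao
     * @Date: 8/1/2020 8:31 PM
     * @Description: Kafka configuration class
     * @Versions 1.0
     **/
    @Configuration
    @EnableConfigurationProperties({KafkaProperties.class})
    @EnableKafka
    @AllArgsConstructor
    public class KafkaConfig {

        private final KafkaProperties kafkaProperties;

        @Bean
        public KafkaTemplate<String, String> kafkaTemplate() {
            return new KafkaTemplate<>(producerFactory());
        }

        @Bean
        public ProducerFactory<String, String> producerFactory() {
            return new DefaultKafkaProducerFactory<>(kafkaProperties.buildProducerProperties());
        }

        //------------------------------ Consumer configuration below -----------------

        // Batch listener factory: listeners using it receive a list of records per poll
        @Bean
        public ConcurrentKafkaListenerContainerFactory<String, String> kafkaListenerContainerFactory() {
            ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
            factory.setConsumerFactory(consumerFactory());
            factory.setConcurrency(3);
            factory.setBatchListener(true);
            factory.getContainerProperties().setPollTimeout(3000);
            return factory;
        }

        @Bean
        public ConsumerFactory<String, String> consumerFactory() {
            return new DefaultKafkaConsumerFactory<>(kafkaProperties.buildConsumerProperties());
        }

        // Single-record factory with manual, immediate offset commits
        @Bean("ackContainerFactory")
        public ConcurrentKafkaListenerContainerFactory<String, String> ackContainerFactory() {
            ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
            factory.setConsumerFactory(consumerFactory());
            factory.getContainerProperties().setAckMode(AbstractMessageListenerContainer.AckMode.MANUAL_IMMEDIATE);
            factory.setConcurrency(3);
            return factory;
        }
    }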
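
Both factories set a concurrency of 3, while the test topic created earlier has a single partition. Within one consumer group a partition is consumed by only one consumer at a time, so the extra listener threads stay idle until the topic has more partitions. The sketch below illustrates the effect with a simplified round-robin distribution. It is an assumption-laden model, not Kafka's actual assignment strategy, and ConcurrencySketch is a hypothetical class.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: how many consumer threads get work for a given partition count. */
final class ConcurrencySketch {

    /** Distributes partitions 0..partitions-1 over the consumers in round-robin order. */
    static List<List<Integer>> assign(int partitions, int consumers) {
        if (consumers < 1) {
            throw new IllegalArgumentException("at least one consumer is required");
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int c = 0; c < consumers; c++) {
            assignment.add(new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            assignment.get(p % consumers).add(p);
        }
        return assignment;
    }

    /** Counts consumers that end up without any partition. */
    static long idleConsumers(int partitions, int consumers) {
        return assign(partitions, consumers).stream().filter(List::isEmpty).count();
    }

    public static void main(String[] args) {
        System.out.println("1 partition, concurrency 3: " + assign(1, 3)
                + ", idle consumers: " + idleConsumers(1, 3));
        System.out.println("3 partitions, concurrency 3: " + assign(3, 3)
                + ", idle consumers: " + idleConsumers(3, 3));
    }
}
```

If parallel consumption is the goal, creating the topic with at least as many partitions as the configured concurrency is the usual remedy.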

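4. Write a consumer, KafkaMessageHandler, that listens for messages

    import lombok.extern.slf4j.Slf4j;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.kafka.support.Acknowledgment;
    import org.springframework.stereotype.Component;

    /**
     * @Author: zhihao
     * @Date: 8/1/2020 9:19 PM
     * @Description: Message consumer
     * @Versions 1.0
     **/
    @Component
    @Slf4j
    public class KafkaMessageHandler {

        /***
         * Receives a message and commits the offset manually.
         *
         * @param record the consumed record
         * @param acknowledgment handle used to confirm receipt
         * @author: zhihao
         * @date: 8/1/2020
         */
        // ackContainerFactory delivers single records and uses MANUAL_IMMEDIATE,
        // which a ConsumerRecord plus Acknowledgment signature requires
        @KafkaListener(topics = "test", containerFactory = "ackContainerFactory")
        public void handlerMessage(ConsumerRecord<String, String> record, Acknowledgment acknowledgment) {
            try {
                // Consume the message
                String value = record.value();
                System.out.println("Manual ack <<message received, consuming>>> " + value);
            } catch (Exception e) {
                log.error("Manual ack <<consumption error>>> " + e.getMessage());
            } finally {
                // Always confirm receipt by committing the offset manually
                acknowledgment.acknowledge();
            }
        }

    //    /***
    //     * Receives a message with automatic commits; requires enable-auto-commit: true
    //     *
    //     * @param message the message
    //     * @author: zhihao
    //     * @date: 8/1/2020
    //     */
    //    @KafkaListener(topics = "test", groupId = "test-consumer")
    //    public void handlerMessage(String message) {
    //        System.out.println("Received auto-acknowledged message " + message);
    //    }
    }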
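
Because the handler acknowledges in a finally block, the offset is committed even when processing throws, so a failed message is logged but not consumed again. Whether that is acceptable depends on the application. The sketch below models the two options in plain Java so the difference in control flow is easy to see. Ack is a hypothetical stand-in for Spring Kafka's Acknowledgment, and AckFlowSketch is not part of the project.

```java
import java.util.function.Consumer;

/** Sketch: "acknowledge always" versus "acknowledge only on success". */
final class AckFlowSketch {

    /** Hypothetical stand-in for Spring Kafka's Acknowledgment. */
    interface Ack {
        void acknowledge();
    }

    /** Same flow as the handler: the offset is committed whether or not processing failed. */
    static void ackAlways(String value, Consumer<String> processor, Ack ack) {
        try {
            processor.accept(value);
        } catch (RuntimeException e) {
            System.err.println("processing failed: " + e.getMessage());
        } finally {
            ack.acknowledge();
        }
    }

    /** Alternative flow: commit only after successful processing; returns false on failure. */
    static boolean ackOnSuccess(String value, Consumer<String> processor, Ack ack) {
        try {
            processor.accept(value);
            ack.acknowledge();
            return true;
        } catch (RuntimeException e) {
            System.err.println("processing failed, offset not committed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        Consumer<String> failing = value -> {
            throw new IllegalStateException("cannot handle " + value);
        };
        ackAlways("m1", failing, () -> System.out.println("m1 acknowledged despite the failure"));
        boolean committed = ackOnSuccess("m2", failing, () -> System.out.println("m2 acknowledged"));
        System.out.println("m2 committed: " + committed);
    }
}
```

Note that skipping the acknowledgment does not by itself trigger redelivery. An application that needs retries would typically combine it with an error handler or an explicit seek.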

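5. Write a test class, KafkaSendMessage, that sends messages:

    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.boot.test.context.SpringBootTest;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.kafka.support.SendResult;
    import org.springframework.test.context.junit4.SpringRunner;
    import org.springframework.util.concurrent.ListenableFuture;
    import org.springframework.util.concurrent.ListenableFutureCallback;

    /**
     * @Author: zhihao
     * @Date: 8/1/2020 9:36 PM
     * @Description: Tests sending messages
     * @Versions 1.0
     **/
    @SpringBootTest(classes = ApplicationKafka.class)
    @RunWith(value = SpringRunner.class)
    public class KafkaSendMessage {

        @Autowired
        private KafkaTemplate<String, String> kafkaTemplate;

        /***
         * Sends a message without checking the result.
         *
         * @param message the message
         * @author: zhihao
         * @date: 8/1/2020
         */
        public void testSend(String message) {
            // Send the message to the test topic
            kafkaTemplate.send("test", message);
        }

        /***
         * Sends a message and reports whether the send succeeded or failed.
         *
         * @param message the message
         * @author: zhihao
         * @date: 8/1/2020
         */
        public void send(String message) {
            // Send the message to the test topic
            ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send("test", message);
            future.addCallback(new ListenableFutureCallback<SendResult<String, String>>() {
                @Override
                public void onFailure(Throwable throwable) {
                    // printf takes %-style specifiers, not SLF4J-style {} placeholders
                    System.out.printf("Message: %s failed to send, cause: %s%n", message, throwable.getMessage());
                }

                @Override
                public void onSuccess(SendResult<String, String> result) {
                    System.out.printf("Message sent: %s, offset=[%d]%n", message, result.getRecordMetadata().offset());
                }
            });
        }

        @Test
        public void test() {
            this.testSend("This is a simple send test");
            this.send("This is a send test that reports the result");
            // Flush so the asynchronous sends complete before the test ends
            kafkaTemplate.flush();
        }
    }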
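
The send callbacks print their result through System.out.printf. One detail worth keeping in mind is that printf and String.format use %-style specifiers such as %s and %d, whereas {} placeholders belong to SLF4J loggers and are printed literally by printf. A minimal sketch of the difference follows. SendLogFormat is a hypothetical helper, not part of the project.

```java
/** Sketch: formatting send results with %-style specifiers. */
final class SendLogFormat {

    /** Builds the success line from the message text and the offset reported by the broker. */
    static String success(String message, long offset) {
        return String.format("Message sent: %s, offset=[%d]", message, offset);
    }

    /** Builds the failure line from the message text and the cause of the failure. */
    static String failure(String message, Throwable cause) {
        return String.format("Message: %s failed to send, cause: %s", message, cause.getMessage());
    }

    public static void main(String[] args) {
        System.out.println(success("hello", 42L));
        System.out.println(failure("hello", new IllegalStateException("broker unavailable")));
        // {} is not a format specifier, so the arguments are ignored and the braces stay as they are
        System.out.println(String.format("Message sent: {}, offset=[{}]", "hello", 42L));
    }
}
```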

Further reading:

编程狮 documentation:

Git-Kafka automatic message commit tutorial

Compatibility between Spring Boot and Spring-Kafka versions: https://spring.io/projects/spring-kafka

Spring-Kafka official documentation: https://docs.spring.io/spring-kafka/docs/2.2.0.RELEASE/reference/html/

Kafka official documentation: http://kafka.apache.org/

Project code: