Building a Highly Available Hadoop Cluster

Cluster Resources and Role Planning

| node1 | node2 | node3 | node4 | node5 |
|---|---|---|---|---|
| | | zookeeper | zookeeper | zookeeper |
| nn1 | nn2 | | | |
| datanode | datanode | datanode | datanode | datanode |
| | | journal | journal | journal |
| | | | rm1 | rm2 |
| nodemanager | nodemanager | nodemanager | nodemanager | nodemanager |
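The plan runs three ZooKeeper servers and three JournalNodes. Both services only make progress while a strict majority of their members is alive, which is why these ensembles are usually sized with an odd number of nodes. The short Python sketch below works out the quorum size and the number of member failures an ensemble can tolerate. Python is an assumption of this sketch, and the helper names are hypothetical. The host lists come from the zoo.cfg server lines and the qjournal URI used later in this guide.

```python
"""Quorum arithmetic for the ZooKeeper and JournalNode ensembles (illustrative sketch)."""

# Ensemble members as configured later in this guide (zoo.cfg and the qjournal URI).
ENSEMBLES = {
    "zookeeper": ["node3", "node4", "node5"],
    "journalnode": ["node3", "node4", "node5"],
}


def quorum_size(members: int) -> int:
    """Smallest strict majority of an ensemble with `members` nodes."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members may be down while a strict majority is still alive."""
    return members - quorum_size(members)


for role, hosts in ENSEMBLES.items():
    n = len(hosts)
    print(f"{role}: {n} members, quorum {quorum_size(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")

# Compare ensemble sizes: an even size adds no fault tolerance over the odd size below it.
for n in range(1, 6):
    print(n, "members ->", tolerated_failures(n), "tolerated failure(s)")
```

With three members each ensemble survives one node failure. A fourth member would still tolerate only one failure, while a fifth would tolerate two.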

I. Install ZooKeeper

- ZooKeeper installation directory: /home/hadoop/zookeeper-3.4.13/
- ZooKeeper dataDir: /home/hadoop/zookeeper-data/data
- ZooKeeper dataLogDir: /home/hadoop/zookeeper-data/dataLog
- Reference zoo.cfg configuration:

```properties
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/home/hadoop/zookeeper-data/data
dataLogDir=/home/hadoop/zookeeper-data/dataLog
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
server.1=node3:2888:3888
server.2=node4:2888:3888
server.3=node5:2888:3888
```

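- On node3, node4 and node5, create the myid file in dataDir. Its content must be the N from that host's server.N line in zoo.cfg, so run only the echo line for the current node:

```bash
mkdir -p /home/hadoop/zookeeper-data/data /home/hadoop/zookeeper-data/dataLog
cd /home/hadoop/zookeeper-data/data
echo 1 > myid   # on node3
echo 2 > myid   # on node4
echo 3 > myid   # on node5
```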
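The myid value on each host is simply the N of that host's `server.N=host:2888:3888` line in zoo.cfg. The Python sketch below derives that mapping directly from the config text, which makes mismatches easy to spot when nodes are added or renamed. The helper name `myid_by_host` is hypothetical and not part of ZooKeeper.

```python
"""Derive each host's myid from the server.N lines of zoo.cfg (illustrative sketch)."""
import re

# The ensemble lines from the zoo.cfg in this guide.
ZOO_CFG = """\
clientPort=2181
server.1=node3:2888:3888
server.2=node4:2888:3888
server.3=node5:2888:3888
"""

# server.<id>=<host>:<quorum port>:<leader-election port>
SERVER_LINE = re.compile(r"^\s*server\.(\d+)\s*=\s*([^:\s]+):(\d+):(\d+)")


def myid_by_host(cfg_text: str) -> dict:
    """Return {host: id}, where id is what that host must write into dataDir/myid."""
    ids = {}
    for line in cfg_text.splitlines():
        match = SERVER_LINE.match(line)
        if match:
            ids[match.group(2)] = int(match.group(1))
    return ids


for host, myid in myid_by_host(ZOO_CFG).items():
    print(f"{host}: echo {myid} > /home/hadoop/zookeeper-data/data/myid")
```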

- Set the ZooKeeper environment variables:

```bash
export ZOOKEEPER_HOME=/home/hadoop/zookeeper-3.4.13/
export PATH=$PATH:$ZOOKEEPER_HOME/bin
```

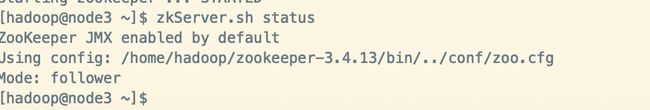

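- Start ZooKeeper on each of node3, node4 and node5: `zkServer.sh start`
- Check its status: `zkServer.sh status`. Once all three servers are up, one should report `Mode: leader` and the other two `Mode: follower`.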
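A healthy three-node ensemble has exactly one leader and two followers. A `Mode: standalone` result usually means the server.N lines or myid were not picked up. The Python sketch below checks the `Mode:` line of `zkServer.sh status` output collected from each node. The sample outputs are hypothetical, not captured from this cluster, and the helper names are illustrative only.

```python
"""Check that a ZooKeeper ensemble has elected exactly one leader (illustrative sketch)."""
from typing import Dict, Optional


def zk_mode(status_output: str) -> Optional[str]:
    """Extract the value of the 'Mode:' line printed by `zkServer.sh status`."""
    for line in status_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None  # not running, or the output format was not recognized


def ensemble_ok(outputs: Dict[str, str]) -> bool:
    """True if exactly one server is leader and all the others are followers."""
    modes = [zk_mode(text) for text in outputs.values()]
    return modes.count("leader") == 1 and modes.count("follower") == len(modes) - 1


# Hypothetical sample outputs, one per ensemble member.
HEADER = ("ZooKeeper JMX enabled by default\n"
          "Using config: /home/hadoop/zookeeper-3.4.13/bin/../conf/zoo.cfg\n")
SAMPLE = {
    "node3": HEADER + "Mode: follower\n",
    "node4": HEADER + "Mode: leader\n",
    "node5": HEADER + "Mode: follower\n",
}
print("ensemble healthy:", ensemble_ok(SAMPLE))
```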

II. Install Hadoop

1. Edit hadoop-env.sh

The main change is to point JAVA_HOME at your own JDK installation:

```bash
export JAVA_HOME=/usr/local/java
```

2. Edit core-site.xml

```xml
<configuration>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>node3:2181,node4:2181,node5:2181</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/hadoop-data/temp</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://leo</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.root.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.logfile.size</name>
    <value>10000000</value>
    <description>The max size of each log file</description>
  </property>
  <property>
    <name>hadoop.logfile.count</name>
    <value>10</value>
    <description>The max number of log files</description>
  </property>
  <property>
    <name>ipc.client.connect.max.retries</name>
    <value>100</value>
  </property>
  <property>
    <name>ipc.client.connect.retry.interval</name>
    <value>10000</value>
  </property>
</configuration>
```
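A quick way to catch typos in these *-site.xml files before starting any daemon is to parse them. A lost `</` in a closing tag makes the file fail to parse. The Python sketch below loads a Hadoop-style `<configuration>` document into a dict and checks that, in this HA setup, `fs.defaultFS` names a logical nameservice (`hdfs://leo`) rather than a single `host:port`. The helper names are hypothetical, and the inline XML is a trimmed copy of the properties above.

```python
"""Parse a Hadoop *-site.xml and check the HA-related core-site settings (illustrative sketch)."""
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def load_hadoop_conf(xml_text: str) -> dict:
    """Return {name: value} for every <property>; a later duplicate replaces an earlier one.

    ET.fromstring raises xml.etree.ElementTree.ParseError on malformed XML,
    for example a closing tag that lost its "</".
    """
    conf = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            conf[name] = (prop.findtext("value") or "").strip()
    return conf


def nameservice(conf: dict) -> str:
    """With HDFS HA, fs.defaultFS should be hdfs://<nameservice> with no port."""
    uri = urlparse(conf["fs.defaultFS"])
    if uri.scheme != "hdfs" or uri.port is not None:
        raise ValueError(f"expected hdfs://<nameservice>, got {conf['fs.defaultFS']}")
    return uri.hostname


CORE_SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://leo</value></property>
  <property><name>ha.zookeeper.quorum</name><value>node3:2181,node4:2181,node5:2181</value></property>
</configuration>"""

conf = load_hadoop_conf(CORE_SITE)
print("nameservice:", nameservice(conf))
print("ZooKeeper quorum:", conf["ha.zookeeper.quorum"].split(","))
```

The nameservice printed here must match `dfs.nameservices` in hdfs-site.xml.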

3. Edit hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>leo</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.leo</name>
    <value>nn1,nn2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.leo.nn1</name>
    <value>node1:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.leo.nn2</name>
    <value>node2:8020</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.leo.nn1</name>
    <value>node1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.leo.nn2</name>
    <value>node2:50070</value>
  </property>
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://node3:8485;node4:8485;node5:8485/leo</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.leo</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/root/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/home/hadoop/hadoop-data/journal</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hadoop/hadoop-data/data-node-data</value>
  </property>
  <property>
    <name>dfs.heartbeat.interval</name>
    <value>10</value>
  </property>
  <property>
    <name>dfs.qjournal.start-segment.timeout.ms</name>
    <value>60000</value>
  </property>
  <property>
    <name>dfs.qjournal.select-input-streams.timeout.ms</name>
    <value>60000</value>
  </property>
  <property>
    <name>dfs.qjournal.write-txns.timeout.ms</name>
    <value>60000</value>
  </property>
  <property>
    <name>dfs.datanode.max.transfer.threads</name>
    <value>163</value>
  </property>
  <property>
    <name>dfs.balance.bandwidthPerSec</name>
    <value>10485760</value>
    <description>Specifies the maximum bandwidth that each datanode can utilize for the balancing purpose in terms of the number of bytes per second.</description>
  </property>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
</configuration>
```
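The HA keys in hdfs-site.xml are tied together by name. Each id in `dfs.ha.namenodes.leo` needs its own `dfs.namenode.rpc-address.leo.<id>` and `dfs.namenode.http-address.leo.<id>`, and the nameservice needs a failover proxy provider. The Python sketch below cross-checks those relationships. It is a hypothetical helper, not a Hadoop tool. It assumes Hadoop 2.x, where HDFS HA supports exactly two NameNodes per nameservice.

```python
"""Cross-check the HDFS HA keys in hdfs-site.xml (illustrative sketch)."""
import xml.etree.ElementTree as ET


def load_hadoop_conf(xml_text: str) -> dict:
    """Return {name: value} for every <property> element."""
    conf = {}
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            conf[name] = (prop.findtext("value") or "").strip()
    return conf


def split_ids(value: str) -> list:
    """Split a comma-separated id list, ignoring blanks."""
    return [item.strip() for item in value.split(",") if item.strip()]


def check_hdfs_ha(conf: dict) -> list:
    """Return a list of problems; an empty list means the HA keys are consistent."""
    problems = []
    for ns in split_ids(conf.get("dfs.nameservices", "")):
        nn_ids = split_ids(conf.get(f"dfs.ha.namenodes.{ns}", ""))
        # Hadoop 2.x HA supports exactly two NameNodes per nameservice.
        if len(nn_ids) != 2:
            problems.append(f"{ns}: expected 2 NameNode ids, got {nn_ids}")
        for nn in nn_ids:
            for kind in ("rpc-address", "http-address"):
                key = f"dfs.namenode.{kind}.{ns}.{nn}"
                if key not in conf:
                    problems.append(f"missing {key}")
        if f"dfs.client.failover.proxy.provider.{ns}" not in conf:
            problems.append(f"missing dfs.client.failover.proxy.provider.{ns}")
    if not conf.get("dfs.namenode.shared.edits.dir", "").startswith("qjournal://"):
        problems.append("dfs.namenode.shared.edits.dir is not a qjournal:// URI")
    return problems


HDFS_SITE = """<configuration>
  <property><name>dfs.nameservices</name><value>leo</value></property>
  <property><name>dfs.ha.namenodes.leo</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.leo.nn1</name><value>node1:8020</value></property>
  <property><name>dfs.namenode.rpc-address.leo.nn2</name><value>node2:8020</value></property>
  <property><name>dfs.namenode.http-address.leo.nn1</name><value>node1:50070</value></property>
  <property><name>dfs.namenode.http-address.leo.nn2</name><value>node2:50070</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://node3:8485;node4:8485;node5:8485/leo</value></property>
  <property><name>dfs.client.failover.proxy.provider.leo</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
</configuration>"""

print("problems:", check_hdfs_ha(load_hadoop_conf(HDFS_SITE)))
```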

4. Edit mapred-site.xml (in Hadoop 2.x, create it by copying mapred-site.xml.template if it does not exist yet)

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapred.child.java.opts</name>
    <value>-Xmx1024m</value>
  </property>
  <property>
    <name>mapreduce.tasktracker.reduce.tasks.maximum</name>
    <value>5</value>
  </property>
  <property>
    <name>mapreduce.tasktracker.map.tasks.maximum</name>
    <value>5</value>
  </property>
</configuration>
```

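5. Edit yarn-site.xml

The spark_shuffle auxiliary service configured here is Spark's external shuffle service. Its jar (spark-<version>-yarn-shuffle.jar, shipped with Spark) must be on the classpath of every NodeManager, or the NodeManagers will fail to start.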

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle,spark_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.nodemanager.log-dirs</name>
    <value>/home/hadoop/log/yarn</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.log.retain-seconds</name>
    <value>259200</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>6</value>
  </property>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>10240</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>51200</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-vcores</name>
    <value>30</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>cluster1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>node4</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>node5</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>node4:8088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>node5:8088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>node3:2181,node4:2181,node5:2181</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.spark_shuffle.class</name>
    <value>org.apache.spark.network.yarn.YarnShuffleService</value>
  </property>
</configuration>
```
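yarn-site.xml ties its keys together by name in the same way. Every id in `yarn.resourcemanager.ha.rm-ids` needs a `yarn.resourcemanager.hostname.<id>`, and every auxiliary service needs a `.class` entry. A property defined twice is easy to miss, because Hadoop silently keeps the later (non-final) definition. The Python sketch below checks these relationships. The helper names are hypothetical. The `.class` check is deliberately stricter than Hadoop itself: it expects an explicit entry for every service, as this guide's file provides.

```python
"""Cross-check ResourceManager HA and aux-service keys in yarn-site.xml (illustrative sketch)."""
import xml.etree.ElementTree as ET
from collections import Counter


def _properties(xml_text: str):
    """Yield (name, value) pairs in document order."""
    for prop in ET.fromstring(xml_text).iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            yield name, (prop.findtext("value") or "").strip()


def load_hadoop_conf(xml_text: str) -> dict:
    """{name: value}; as in Hadoop, a later duplicate of a non-final property wins."""
    return dict(_properties(xml_text))


def duplicate_names(xml_text: str) -> list:
    """Property names that are defined more than once."""
    counts = Counter(name for name, _ in _properties(xml_text))
    return sorted(name for name, n in counts.items() if n > 1)


def split_ids(value: str) -> list:
    return [item.strip() for item in value.split(",") if item.strip()]


def check_yarn(conf: dict) -> list:
    """Return a list of problems; an empty list means the checked keys are consistent."""
    problems = []
    if conf.get("yarn.resourcemanager.ha.enabled", "false").lower() == "true":
        rm_ids = split_ids(conf.get("yarn.resourcemanager.ha.rm-ids", ""))
        if len(rm_ids) < 2:
            problems.append(f"expected at least 2 rm-ids, got {rm_ids}")
        for rm in rm_ids:
            if f"yarn.resourcemanager.hostname.{rm}" not in conf:
                problems.append(f"missing yarn.resourcemanager.hostname.{rm}")
        if "yarn.resourcemanager.zk-address" not in conf:
            problems.append("missing yarn.resourcemanager.zk-address")
    for svc in split_ids(conf.get("yarn.nodemanager.aux-services", "")):
        if f"yarn.nodemanager.aux-services.{svc}.class" not in conf:
            problems.append(f"missing yarn.nodemanager.aux-services.{svc}.class")
    return problems


YARN_SITE = """<configuration>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle,spark_shuffle</value></property>
  <property><name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name><value>org.apache.hadoop.mapred.ShuffleHandler</value></property>
  <property><name>yarn.nodemanager.aux-services.spark_shuffle.class</name><value>org.apache.spark.network.yarn.YarnShuffleService</value></property>
  <property><name>yarn.resourcemanager.ha.enabled</name><value>true</value></property>
  <property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property>
  <property><name>yarn.resourcemanager.hostname.rm1</name><value>node4</value></property>
  <property><name>yarn.resourcemanager.hostname.rm2</name><value>node5</value></property>
  <property><name>yarn.resourcemanager.zk-address</name><value>node3:2181,node4:2181,node5:2181</value></property>
</configuration>"""

print("duplicates:", duplicate_names(YARN_SITE))
print("problems:", check_yarn(load_hadoop_conf(YARN_SITE)))
```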

6. Edit the slaves file, listing every host that runs a DataNode and a NodeManager, one per line:

```text
node1
node2
node3
node4
node5
```

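7. Distribute Hadoop to the remaining nodes: copy the configured /home/hadoop/hadoop-2.7.4 directory to the same path on node2 through node5 (for example with scp -r).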

8. Add the Hadoop environment variables

```bash
export HADOOP_HOME=/home/hadoop/hadoop-2.7.4
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```

9. Start the ZooKeeper cluster

On each of node3, node4 and node5, run: `zkServer.sh start`

10. Start the JournalNodes

On each of node3, node4 and node5, run: `hadoop-daemon.sh start journalnode`

Verify with the jps command: each of the three nodes should now list a JournalNode process.
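`jps` prints one `<pid> <main class>` line per Java process, and ZooKeeper shows up as `QuorumPeerMain`. The Python sketch below reports which of the daemons expected on node3 to node5 after steps 9 and 10 are missing from that output. The expected set and the helper names are assumptions of this sketch. The `jps` call runs only when the command is actually on the PATH.

```python
"""Check `jps` output for the daemons expected after step 10 (illustrative sketch)."""
import shutil
import subprocess

# After steps 9 and 10, node3-node5 should run ZooKeeper (listed by jps as
# QuorumPeerMain) and a JournalNode.
EXPECTED = frozenset({"QuorumPeerMain", "JournalNode"})


def jps_names(jps_output: str) -> set:
    """Collect the main-class names from lines of the form '<pid> <name>'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected: frozenset = EXPECTED) -> set:
    """Expected daemon names that do not appear in the jps output."""
    return set(expected) - jps_names(jps_output)


if __name__ == "__main__" and shutil.which("jps"):
    # Run this locally on node3, node4 or node5.
    output = subprocess.run(["jps"], capture_output=True, text=True).stdout
    print("missing:", sorted(missing_daemons(output)) or "none")
```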

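11. Format the NameNode

On node1, run: `hdfs namenode -format` (the JournalNodes from step 10 must be running, because formatting also initializes the shared edits directory on them)

Then start the NameNode on node1: `hadoop-daemon.sh start namenode`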

12. On node2, copy the NameNode metadata from node1

`hdfs namenode -bootstrapStandby`

Then start the NameNode on node2: `hadoop-daemon.sh start namenode`

13. Initialize the ZKFC state in ZooKeeper

On node1, run: `hdfs zkfc -formatZK`

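14. Start HDFS

On node1, run: `start-dfs.sh`. This starts the remaining HDFS daemons, including the DataNodes on all hosts in slaves and the ZKFCs next to each NameNode.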

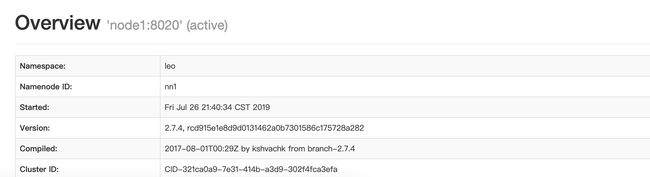

15. Check the NameNode states

```console
[hadoop@node1 hadoop]$ hdfs haadmin -getServiceState nn1
active
[hadoop@node1 hadoop]$ hdfs haadmin -getServiceState nn2
standby
```

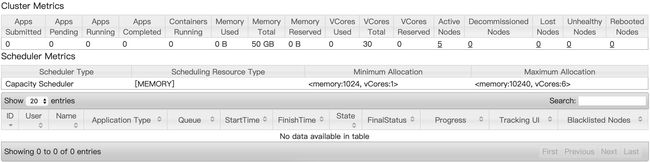

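16. Start YARN

On node1, run `start-yarn.sh`, which starts a NodeManager on every host listed in slaves.

The ResourceManagers run on node4 and node5 (rm1 and rm2 in yarn-site.xml), so start one on each of those nodes: `yarn-daemon.sh start resourcemanager`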

17. Check the YARN ResourceManager states

```console
[hadoop@node1 hadoop]$ yarn rmadmin -getServiceState rm1
active
[hadoop@node1 hadoop]$ yarn rmadmin -getServiceState rm2
standby
```
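Both HA pairs are healthy only when exactly one member reports `active` and the other reports `standby`. Two `standby` results usually mean the ZKFC or the RM leader election is not working. The Python sketch below wraps the two `-getServiceState` commands shown in steps 15 and 17 and applies that rule. The wrapper names are hypothetical. The commands run only when `hdfs` and `yarn` are on the PATH of a configured cluster node.

```python
"""Confirm that each HA pair has exactly one active member (illustrative sketch)."""
import shutil
import subprocess
from typing import Dict, List


def service_state(admin_cmd: List[str], service_id: str) -> str:
    """Run '<admin_cmd> -getServiceState <id>' and return its stdout, e.g. 'active'."""
    result = subprocess.run(admin_cmd + ["-getServiceState", service_id],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()


def exactly_one_active(states: Dict[str, str]) -> bool:
    """True if one member is active and every other member is standby."""
    values = list(states.values())
    return values.count("active") == 1 and values.count("standby") == len(values) - 1


if __name__ == "__main__" and shutil.which("hdfs") and shutil.which("yarn"):
    hdfs = {nn: service_state(["hdfs", "haadmin"], nn) for nn in ("nn1", "nn2")}
    yarn = {rm: service_state(["yarn", "rmadmin"], rm) for rm in ("rm1", "rm2")}
    print("HDFS", hdfs, "OK" if exactly_one_active(hdfs) else "CHECK")
    print("YARN", yarn, "OK" if exactly_one_active(yarn) else "CHECK")
```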