大数据之----IDE开发环境部署idea + hadoop + meavn + scala环境

软件版本:

jdk-8u172-windows-x64

ideaIU-2017.3

hadoop-2.8.1

apache-maven-3.3.9-bin

idea软件安装

安装软件 汉化 破解注册 参考下面

http://www.jb51.net/softjc/549047.html

jdk安装

完成安装 配置环境变量

JAVA_HOME=D:\Program Files\Java\jdk1.8.0_172

CLASSPATH =.;%JAVA_HOME%\lib;%JAVA_HOME%\lib\tools.jar;

Path=%JAVA_HOME%\bin;%JAVA_HOME%\jre\bin;

maven安装

解压编辑conf/settings.xml

创建文件夹 D:\software\apache-maven-3.3.9\repository

hadoop安装

hadoop编译好的压缩包 解压部署1. windows 解压hadoop2.8.1

2. 设置环境变量

HADOOP_HOME=E:\BIGDATA\hadoop-2.8.1

PATH=%HADOOP_HOME/bin;xxxxxxx

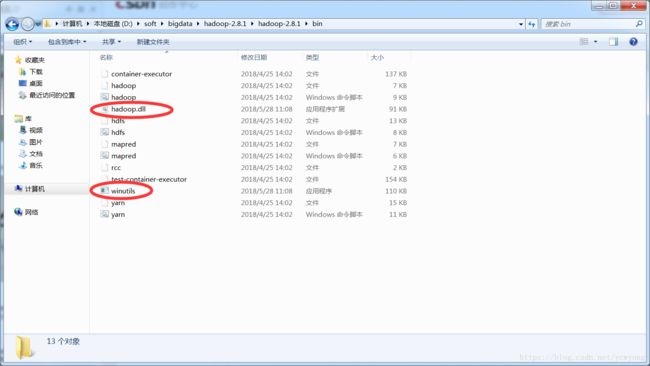

3.winutils.exe hadoop.dll 放到bin文件夹

4.重启笔记本 生效

新建project 配置maven

pom.xml文件配置

类似这样的依赖公共模块等配置查询

maven官网,找对应hadoop的版本,找到配置文件复制到这里

org.apache.hadoop hadoop-common 2.8.1

安装scala

设---- plugins-----> 搜索scala 在线安装

代码案例运行一个java操作hdfs案例

package com.ruozedata.hadoop;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import java.net.URI;

public class HDFS {

public static void main(String[] args) {

try {

//1.定义配置文件 fs.defaultFS

Configuration conf = new Configuration();

// xxx x1 = new xxx()

// x1 对象 万物皆对象 属性 静态存在 方法 动态 () 传参或不传参 返回结果或无结果 if for/while void

conf.set("fs.defaultFS", "hdfs://172.16.28.133:9008/");

conf.set("dfs.client.use.datanode.hostname","true"); //hdfs 在云服务器上

/**

* hdfs-site.xml 需要重启hdfs

dfs.permissions

false

*

*

*/

//2.文件系统

FileSystem fs = FileSystem.get(conf);

//3.创建文件夹

// boolean mkdirs = fs.mkdirs(new Path("/ruoze/data"));

// System.out.println(mkdirs);

//4.上传

fs.copyFromLocalFile(new Path("E:\\hdfs.txt"),new Path("/ruoze/data"));

fs.

//5.下载

//fs.copyToLocalFile(new Path("/ruoze/data/hdfs.log"),new Path("C:\\"));

//下载

/**

* ERROR: java.io.IOException: HADOOP_HOME or hadoop.home.dir are not set.

https://wiki.apache.org/hadoop/WindowsProblems

* 1. windows 解压hadoop2.8.1

* 2. 设置环境变量

* HADOOP_HOME=E:\BIGDATA\hadoop-2.8.1

* PATH=%HADOOP_HOME/bin;xxxxxxx

* 3.winutils.exe 放到bin文件夹

* 4.重启笔记本 生效

*/

}catch (Exception ex){

System.out.println(ex.toString());

}

}

}打包样例测试WordCount代码

clean 清理更新代码

package打包 生产jar包

下面生产jar的位置

将生成jar包上传到服务器上

上传jar 位置 /home/hadoop/d3-1.0-SNAPSHOT.jar com.ruozedata.hadoop.WordCount com.ruozedata.hadoop包名称 WordCount 类名称 /wordcount/input ---统计路径 /wordcount/output7 --输出路径

提交jar测试

[hadoop@hadoop01 ~]$ hadoop fs -ls /wordcount/input

Found 1 items

-rw-r--r-- 3 hadoop supergroup 10847 2018-05-10 09:21 /wordcount/input/install.log.syslog[hadoop@hadoop01 ~]$ hadoop jar /home/hadoop/d3-1.0-SNAPSHOT.jar com.ruozedata.hadoop.WordCount /wordcount/input /wordcount/output7

18/05/10 17:02:22 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

18/05/10 17:02:23 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

18/05/10 17:02:24 INFO input.FileInputFormat: Total input files to process : 1

18/05/10 17:02:24 INFO mapreduce.JobSubmitter: number of splits:1

18/05/10 17:02:24 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1525766740899_0002

18/05/10 17:02:25 INFO impl.YarnClientImpl: Submitted application application_1525766740899_0002

18/05/10 17:02:25 INFO mapreduce.Job: The url to track the job: http://hadoop01:8088/proxy/application_1525766740899_0002/

18/05/10 17:02:25 INFO mapreduce.Job: Running job: job_1525766740899_0002

18/05/10 17:02:35 INFO mapreduce.Job: Job job_1525766740899_0002 running in uber mode : false

18/05/10 17:02:35 INFO mapreduce.Job: map 0% reduce 0%

18/05/10 17:02:42 INFO mapreduce.Job: map 100% reduce 0%

18/05/10 17:02:50 INFO mapreduce.Job: map 100% reduce 100%

18/05/10 17:02:50 INFO mapreduce.Job: Job job_1525766740899_0002 completed successfully

18/05/10 17:02:50 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=4737

FILE: Number of bytes written=281597

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=10967

HDFS: Number of bytes written=3683

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=4959

Total time spent by all reduces in occupied slots (ms)=4831

Total time spent by all map tasks (ms)=4959

Total time spent by all reduce tasks (ms)=4831

Total vcore-milliseconds taken by all map tasks=4959

Total vcore-milliseconds taken by all reduce tasks=4831

Total megabyte-milliseconds taken by all map tasks=5078016

Total megabyte-milliseconds taken by all reduce tasks=4946944

Map-Reduce Framework

Map input records=134

Map output records=1236

Map output bytes=15656

Map output materialized bytes=4737

Input split bytes=120

Combine input records=1236

Combine output records=266

Reduce input groups=266

Reduce shuffle bytes=4737

Reduce input records=266

Reduce output records=266

Spilled Records=532

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=193

CPU time spent (ms)=2570

Physical memory (bytes) snapshot=430907392

Virtual memory (bytes) snapshot=4199063552

Total committed heap usage (bytes)=349700096

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=10847

File Output Format Counters

Bytes Written=3683[hadoop@hadoop01 ~]$ hadoop fs -ls /wordcount/output7

Found 2 items

-rw-r--r-- 3 hadoop supergroup 0 2018-05-10 17:02 /wordcount/output7/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 3683 2018-05-10 17:02 /wordcount/output7/part-r-00000

[hadoop@hadoop01 ~]$ hadoop fs -cat /wordcount/output7/part-r-00000

'haldaemon' 4

'mail' 2

'postfix' 2

(seqno=2) 1

/etc/group: 36

/etc/gshadow: 36

14:36:27 5

14:36:28 5

14:36:30 4

14:36:31 14

14:36:32 10

14:38:06 4

14:40:58 9

14:41:49 3

14:42:09 3

14:43:07 6

14:43:47 4

14:43:56 4

14:43:57 13

14:44:07 4

14:44:20 6

14:44:21 4

14:44:44 4

14:44:45 6

14:46:15 1

14:47:26 4

。。。。。。。。。。。。

GID=29 1

UID=16, 1

UID=170, 1

UID=173, 1

UID=27, 1

UID=29, 1

UID=32, 1

UID=38, 1

UID=42, 1

UID=48, 1

UID=497, 1

UID=498, 1

UID=499, 1

UID=65534, 1

name=dialout 1

name=dialout, 2

name=floppy 1

name=floppy, 2

name=fuse 1

name=fuse, 2

name=gdm 1

name=gdm, 3

name=haldaemon 1

name=haldaemon, 3

name=mysql 1

name=mysql, 3

name=nfsnobody 1

name=nfsnobody, 3

name=ntp 1

name=ntp, 3

name=oprofile 1

name=oprofile, 3

name=postdrop 1

name=postdrop, 2

name=postfix 1

name=postfix, 3

name=pulse 1

name=pulse, 3

name=pulse-access 1

name=pulse-access, 2

name=rpc 1

name=rpc, 3

name=rpcuser, 2

name=rtkit 1

name=rtkit, 3

name=saslauth 1

name=saslauth, 3

name=slocate 1

name=slocate, 2

name=sshd 1

name=sshd, 3

name=stapdev 1

name=stapdev, 2

name=stapsys 1

name=stapsys, 2

name=stapusr 1

name=stapusr, 2

name=tape 1

name=tape, 2

name=tcpdump 1

name=tcpdump, 3

name=utempter 1

name=utempter, 2

name=utmp 1

name=utmp, 2

name=vcsa 1

name=vcsa, 3

name=wbpriv 1

name=wbpriv, 2

new 56

notice 1

policyload 1

received 1

shadow 2

shell=/bin/bash 1

shell=/sbin/nologin 18

to 76

user: 19

useradd[1870]: 1

useradd[1906]: 1

useradd[1920]: 1

useradd[1942]: 1

useradd[1965]: 1

useradd[2861]: 1

useradd[5046]: 3

useradd[5149]: 1

useradd[5186]: 1

useradd[5201]: 1

useradd[5218]: 3

useradd[5703]: 1

useradd[5731]: 2

useradd[5747]: 1

useradd[5765]: 1

useradd[5820]: 1

useradd[6020]: 1

useradd[6987]: 1

useradd[7030]: 1

[hadoop@hadoop01 ~]$ 错误1:

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

java.net.ConnectException: Call From chaoren-PC/192.168.1.119 to 172.16.28.133:9000 failed on connection exception: java.net.ConnectException: Connection refused: no further information; For more details see:

java.lang.IllegalArgumentException: java.net.UnknownHostException:

172.16.28.133:9000

错误2:

java.lang.IllegalArgumentException: java.net.UnknownHostException:

主机hosts配置的主机名没有生效

解决方法:代码里面直接使用IP地址连接

conf.set("fs.defaultFS", "hdfs://172.16.28.133:9008/");9000端口网络限制了,idea死活过不来,只能改服务器端口改成9008就好了,网络环境限制问题