IM Source Code Analysis (TeamTalk): DbProxyServer

The IM source code analysis (TeamTalk) series:

1. IM source code analysis (TeamTalk): LoginServer

2. IM source code analysis (TeamTalk): RouteServer

3. IM source code analysis (TeamTalk): DbProxyServer

DbProxyServer

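- DbProxyServer overview

- Flow description

- Configuration file

- Flow

- How the correct handler is found via function pointers

- How does the database connection pool guarantee consistency and ordering of reads and writes?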

DbProxyServer overview

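DbProxyServer is the database proxy module: it is the component that talks to Redis and MySQL, and all persistent storage goes through it. Its job sounds simple, but the implementation is somewhat involved.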

Flow description

Configuration file

```ini
ListenIP=0.0.0.0
ListenPort=10600
ThreadNum=48 # double the number of CPU core
MsfsSite=127.0.0.1

#configure for mysql
DBInstances=teamtalk_master,teamtalk_slave

#teamtalk_master
teamtalk_master_host=127.0.0.1
teamtalk_master_port=3306
teamtalk_master_dbname=teamtalk
teamtalk_master_username=test
teamtalk_master_password=12345
teamtalk_master_maxconncnt=16

#teamtalk_slave
teamtalk_slave_host=127.0.0.1
teamtalk_slave_port=3306
teamtalk_slave_dbname=teamtalk
teamtalk_slave_username=test
teamtalk_slave_password=12345
teamtalk_slave_maxconncnt=16

#configure for unread
CacheInstances=unread,group_set,token,sync,group_member

# Redis for the unread-message counters
unread_host=127.0.0.1
unread_port=6379
unread_db=1
unread_maxconncnt=16

# Redis for group settings
group_set_host=127.0.0.1
group_set_port=6379
group_set_db=1
group_set_maxconncnt=16

# sync control
sync_host=127.0.0.1
sync_port=6379
sync_db=2
sync_maxconncnt=1

#deviceToken redis
token_host=127.0.0.1
token_port=6379
token_db=1
token_maxconncnt=16

#GroupMember
group_member_host=127.0.0.1
group_member_port=6379
group_member_db=1
group_member_maxconncnt=48

# AES key
aesKey=12345678901234567890123456789012
```
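The file follows one convention throughout: a list key (`DBInstances`, `CacheInstances`) names the instances, and each instance then has its own `<name>_host`, `<name>_port`, ... keys. The sketch below shows that expansion in plain standard C++. It is not TeamTalk's `CConfigFileReader`; `ParseConfig`, `LoadInstances` and `InstanceConfig` are hypothetical names, only three of the per-instance keys are read for brevity, and treating `#` as a comment anywhere on a line is an assumption.

```cpp
#include <cassert>
#include <cstdlib>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical per-instance settings (not a TeamTalk type).
struct InstanceConfig {
    std::string name;
    std::string host;
    int port;
    int max_conn_cnt;
};

// Strips leading and trailing whitespace.
static std::string Trim(const std::string& s) {
    const char* ws = " \t\r\n";
    std::string::size_type b = s.find_first_not_of(ws);
    if (b == std::string::npos) return "";
    std::string::size_type e = s.find_last_not_of(ws);
    return s.substr(b, e - b + 1);
}

// Parses "key=value" lines. Assumption: '#' starts a comment anywhere on a line.
std::map<std::string, std::string> ParseConfig(const std::string& text) {
    std::map<std::string, std::string> kv;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        line = line.substr(0, line.find('#'));  // npos keeps the whole line
        std::string::size_type eq = line.find('=');
        if (eq == std::string::npos) continue;  // blank or comment-only line
        kv[Trim(line.substr(0, eq))] = Trim(line.substr(eq + 1));
    }
    return kv;
}

// Expands a list such as "teamtalk_master,teamtalk_slave" into per-instance
// settings read from "<name>_host", "<name>_port" and "<name>_maxconncnt".
// Returns false if the list key or any per-instance key is missing.
bool LoadInstances(const std::map<std::string, std::string>& kv,
                   const std::string& list_key,
                   std::vector<InstanceConfig>& out) {
    auto list = kv.find(list_key);
    if (list == kv.end()) return false;
    std::istringstream names(list->second);
    std::string name;
    while (std::getline(names, name, ',')) {
        name = Trim(name);
        auto host = kv.find(name + "_host");
        auto port = kv.find(name + "_port");
        auto maxc = kv.find(name + "_maxconncnt");
        if (host == kv.end() || port == kv.end() || maxc == kv.end()) return false;
        InstanceConfig cfg;
        cfg.name = name;
        cfg.host = host->second;
        cfg.port = std::atoi(port->second.c_str());
        cfg.max_conn_cnt = std::atoi(maxc->second.c_str());
        out.push_back(cfg);
    }
    return true;
}
```

Adding a new pool then only means appending a name to the list key and writing its block of keys; the loading code does not change.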

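A few points to note:

- `ThreadNum=48 # double the number of CPU core`: ThreadNum is the number of worker threads in the thread pool.

- `DBInstances=teamtalk_master,teamtalk_slave`: the MySQL master/slave configuration.

- `CacheInstances=unread,group_set,token,sync,group_member`: this hot data (unread counters, group settings, device tokens, sync control, group members) is kept in Redis.

- `_maxconncnt`: pay attention to this suffix as well; it is the maximum number of connections in a connection pool.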

For example, CacheManager::Init reads the Redis settings like this:

```cpp
int CacheManager::Init()
{
    CConfigFileReader config_file("dbproxyserver.conf");

    char* cache_instances = config_file.GetConfigName("CacheInstances");
    if (!cache_instances) {
        log("not configure CacheIntance");
        return 1;
    }

    char host[64];
    char port[64];
    char db[64];
    char maxconncnt[64];
    // Split "unread,group_set,token,sync,group_member" into instance names
    CStrExplode instances_name(cache_instances, ',');
    for (uint32_t i = 0; i < instances_name.GetItemCnt(); i++) {
        char* pool_name = instances_name.GetItem(i);
        // Build the per-instance keys, e.g. "unread_host", "unread_port", ...
        snprintf(host, 64, "%s_host", pool_name);
        snprintf(port, 64, "%s_port", pool_name);
        snprintf(db, 64, "%s_db", pool_name);
        snprintf(maxconncnt, 64, "%s_maxconncnt", pool_name);

        char* cache_host = config_file.GetConfigName(host);
        char* str_cache_port = config_file.GetConfigName(port);
        char* str_cache_db = config_file.GetConfigName(db);
        char* str_max_conn_cnt = config_file.GetConfigName(maxconncnt);
        if (!cache_host || !str_cache_port || !str_cache_db || !str_max_conn_cnt) {
            log("not configure cache instance: %s", pool_name);
            return 2;
        }

        // One pool per instance, holding at most <name>_maxconncnt connections
        CachePool* pCachePool = new CachePool(pool_name, cache_host, atoi(str_cache_port),
            atoi(str_cache_db), atoi(str_max_conn_cnt));
        if (pCachePool->Init()) {
            log("Init cache pool failed");
            return 3;
        }

        // Pools are looked up later by instance name
        m_cache_pool_map.insert(make_pair(pool_name, pCachePool));
    }

    return 0;
}
```

The key line is

```cpp
CachePool* pCachePool = new CachePool(pool_name, cache_host, atoi(str_cache_port), atoi(str_cache_db), atoi(str_max_conn_cnt));
```

which creates a cache pool whose maximum number of connections is `maxconncnt`.
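To make the role of `maxconncnt` concrete, here is a minimal bounded pool in standard C++. It is a sketch, not TeamTalk's CachePool: the `BoundedPool` class, the `FakeConn` stand-in and the policy of blocking until a connection is returned are assumptions chosen for illustration, and the real pool may behave differently at the limit. Connections are created lazily up to the maximum; once that many are checked out, further callers wait.

```cpp
#include <cassert>
#include <condition_variable>
#include <list>
#include <memory>
#include <mutex>

// Hypothetical stand-in for a Redis or MySQL connection.
struct FakeConn {
    int id;
};

class BoundedPool {
public:
    explicit BoundedPool(int max_conn_cnt) : max_conn_cnt_(max_conn_cnt) {}

    // Returns an idle connection, creates one if still under the limit,
    // otherwise blocks until another thread releases one.
    FakeConn* Acquire() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] {
            return !free_.empty() || created_ < max_conn_cnt_;
        });
        if (!free_.empty()) {
            FakeConn* c = free_.front();
            free_.pop_front();
            return c;
        }
        all_.push_back(std::unique_ptr<FakeConn>(new FakeConn{created_}));
        ++created_;
        return all_.back().get();
    }

    // Puts a connection back and wakes one waiting thread.
    void Release(FakeConn* c) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            free_.push_back(c);
        }
        cond_.notify_one();
    }

    int Created() {
        std::lock_guard<std::mutex> lock(mutex_);
        return created_;
    }

private:
    const int max_conn_cnt_;
    int created_ = 0;
    std::list<FakeConn*> free_;                  // idle connections
    std::list<std::unique_ptr<FakeConn>> all_;   // owns every connection
    std::mutex mutex_;
    std::condition_variable cond_;
};
```

With this policy, a `maxconncnt` smaller than the number of worker threads makes some workers wait for a connection instead of opening more, which is the trade-off the setting controls.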

Flow

- Create the Redis cache connection pools

```cpp
CacheManager* pCacheManager = CacheManager::getInstance();
if (!pCacheManager) {
    log("CacheManager init failed");
    return -1;
}
```

- Create the MySQL database connection pools

```cpp
CDBManager* pDBManager = CDBManager::getInstance();
if (!pDBManager) {
    log("DBManager init failed");
    return -1;
}
```

- Create the thread pool (`thread_num` is the ThreadNum setting)

```cpp
init_proxy_conn(thread_num);
```

- Listen on the configured address and port (ListenIP/ListenPort)

- When a message arrives from MsgServer, a CProxyTask is created and handed to the thread pool:

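```cpp
void CProxyConn::HandlePduBuf(uchar_t* pdu_buf, uint32_t pdu_len)
{
    CImPdu* pPdu = CImPdu::ReadPdu(pdu_buf, pdu_len);
    if (!pPdu) {
        return;   // incomplete or invalid packet
    }
    if (pPdu->GetCommandId() == IM::BaseDefine::CID_OTHER_HEARTBEAT) {
        delete pPdu;   // heartbeats need no processing; free the PDU
        return;
    }

    // Look up the handler registered for this command ID
    pdu_handler_t handler = s_handler_map->GetHandler(pPdu->GetCommandId());
    if (handler) {
        // pPdu is handed to the task, which runs on a worker thread
        CTask* pTask = new CProxyTask(m_uuid, handler, pPdu);
        g_thread_pool.AddTask(pTask);
    } else {
        log("no handler for packet type: %d", pPdu->GetCommandId());
        delete pPdu;   // nobody takes ownership, so free it here
    }
}
```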
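The hand-off from the network thread to the workers is a classic producer/consumer thread pool. The standard-C++ sketch below is only an illustration: `Task`, `ThreadPool` and `AddTask` mirror the roles of CTask, g_thread_pool and AddTask, but they are not TeamTalk's code, and this sketch uses a single shared queue without claiming that TeamTalk's pool distributes tasks the same way.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Hypothetical task interface, playing the role of CTask.
class Task {
public:
    virtual ~Task() {}
    virtual void run() = 0;
};

class ThreadPool {
public:
    explicit ThreadPool(int thread_num) {
        for (int i = 0; i < thread_num; ++i)
            workers_.emplace_back([this] { WorkerLoop(); });
    }

    // Stops accepting work, lets workers drain the queue, then joins them.
    ~ThreadPool() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        cond_.notify_all();
        for (auto& t : workers_) t.join();
    }

    // Called by the network thread; the pool takes ownership of the task.
    void AddTask(Task* task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(task);
        }
        cond_.notify_one();
    }

private:
    void WorkerLoop() {
        for (;;) {
            Task* task = nullptr;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                cond_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
                if (tasks_.empty()) return;   // stopping and nothing left to do
                task = tasks_.front();
                tasks_.pop();
            }
            task->run();   // executed outside the lock
            delete task;
        }
    }

    std::vector<std::thread> workers_;
    std::queue<Task*> tasks_;
    std::mutex mutex_;
    std::condition_variable cond_;
    bool stopping_ = false;
};
```

Because any idle worker may pick up the next task, two requests from the same connection can run concurrently here, which is why ordering needs separate thought.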

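- Worker threads execute each task by calling its `run()` method (CProxyTask::run, shown below). Replies travel back through CProxyConn, which is declared as follows: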

```cpp
class CProxyConn : public CImConn {
public:
    CProxyConn();
    virtual ~CProxyConn();

    virtual void Close();
    virtual void OnConnect(net_handle_t handle);
    virtual void OnRead();
    virtual void OnClose();
    virtual void OnTimer(uint64_t curr_tick);

    void HandlePduBuf(uchar_t* pdu_buf, uint32_t pdu_len);

    static void AddResponsePdu(uint32_t conn_uuid, CImPdu* pPdu); // called by worker threads
    static void SendResponsePduList();                            // called by the main thread

private:
    // Requests are processed and replies are sent on different threads, and a
    // socket handle may be reused in between, so each connection is identified
    // by an ever-increasing uuid instead of its handle
    static uint32_t s_uuid_alloctor;
    uint32_t m_uuid;

    static CLock s_list_lock;
    static list<ResponsePdu_t*> s_response_pdu_list; // replies for the main thread to send
};
```
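The comment about the ever-increasing uuid deserves a closer look: a worker may finish a request after the original socket has closed and its handle has been reused by a new connection, so replies are addressed by uuid rather than by handle. The sketch below models this with a worker-side `AddResponse` and a main-thread `SendResponses` that silently drops replies for unknown uuids. All names here (`ConnRegistry`, `Open`, `Close`, `AddResponse`, `SendResponses`) are hypothetical; only the idea comes from the CProxyConn comments.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <map>
#include <mutex>
#include <string>
#include <utility>
#include <vector>

class ConnRegistry {
public:
    // Main thread: every new connection gets a fresh uuid, even on a reused handle.
    uint32_t Open(int handle) {
        uint32_t uuid = ++uuid_allocator_;
        handle_by_uuid_[uuid] = handle;
        return uuid;
    }

    // Main thread: forget the connection; later replies to this uuid are dropped.
    void Close(uint32_t uuid) { handle_by_uuid_.erase(uuid); }

    // Worker threads: queue a reply (the role of AddResponsePdu).
    void AddResponse(uint32_t uuid, const std::string& reply) {
        std::lock_guard<std::mutex> lock(list_lock_);
        responses_.push_back(std::make_pair(uuid, reply));
    }

    // Main thread: drain the queue (the role of SendResponsePduList).
    // Returns the (handle, reply) pairs that would be written to sockets.
    std::vector<std::pair<int, std::string>> SendResponses() {
        std::list<std::pair<uint32_t, std::string>> pending;
        {
            std::lock_guard<std::mutex> lock(list_lock_);
            pending.swap(responses_);   // hold the lock only for the swap
        }
        std::vector<std::pair<int, std::string>> sent;
        for (const auto& r : pending) {
            auto it = handle_by_uuid_.find(r.first);
            if (it != handle_by_uuid_.end())   // stale uuid: connection is gone
                sent.push_back(std::make_pair(it->second, r.second));
        }
        return sent;
    }

private:
    uint32_t uuid_allocator_ = 0;
    std::map<uint32_t, int> handle_by_uuid_;   // touched only by the main thread
    std::mutex list_lock_;
    std::list<std::pair<uint32_t, std::string>> responses_;
};
```

Usage: a late reply for a closed connection is discarded instead of reaching the new connection that now owns the same handle.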

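- The real handler is invoked through a function pointer. This part has a catch, which is covered later in the section on how the correct handler is found.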

```cpp
// Function pointer type, defined in the header
typedef void (*pdu_handler_t)(CImPdu* pPdu, uint32_t conn_uuid);

...

// Defined in the .cpp file
void CProxyTask::run()
{
    if (!m_pPdu) {
        // tell CProxyConn to close connection with m_conn_uuid
        CProxyConn::AddResponsePdu(m_conn_uuid, NULL);
    } else {
        if (m_pdu_handler) {
            m_pdu_handler(m_pPdu, m_conn_uuid);
        }
    }
}
```
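CProxyTask binds three things: the connection uuid, a handler function pointer and the PDU, with a null PDU meaning "close this connection". The reduced sketch below keeps that shape without the TeamTalk classes; `Packet`, `handler_t`, `HandlerTask` and `g_close_requests` are hypothetical stand-ins.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical packet type standing in for CImPdu.
struct Packet {
    uint32_t command_id;
    std::string body;
};

// Same shape as pdu_handler_t: a plain function pointer.
typedef void (*handler_t)(Packet* pkt, uint32_t conn_uuid);

// Hypothetical sink for "please close this connection" requests.
std::vector<uint32_t> g_close_requests;

class HandlerTask {
public:
    HandlerTask(uint32_t conn_uuid, handler_t handler, Packet* pkt)
        : conn_uuid_(conn_uuid), handler_(handler), pkt_(pkt) {}
    ~HandlerTask() { delete pkt_; }   // the task owns the packet
    HandlerTask(const HandlerTask&) = delete;
    HandlerTask& operator=(const HandlerTask&) = delete;

    void run() {
        if (!pkt_) {
            g_close_requests.push_back(conn_uuid_);   // null packet means close
        } else if (handler_) {
            handler_(pkt_, conn_uuid_);               // dispatch via the pointer
        }
    }

private:
    uint32_t conn_uuid_;
    handler_t handler_;
    Packet* pkt_;
};
```

A plain function pointer keeps the task small and trivially copyable to store; the handler needs no object state because everything it uses arrives as arguments.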

Taking group creation as an example, the concrete handler is:

```cpp
/**
 * Create a group
 *
 * @param pPdu      pointer to the received packet
 * @param conn_uuid uuid of the connection the packet arrived on
 */
void createGroup(CImPdu* pPdu, uint32_t conn_uuid)
{
    IM::Group::IMGroupCreateReq msg;
    IM::Group::IMGroupCreateRsp msgResp;
    if(msg.ParseFromArray(pPdu->GetBodyData(), pPdu->GetBodyLength()))
    {
        CImPdu* pPduRes = new CImPdu;
        uint32_t nUserId = msg.user_id();
        string strGroupName = msg.group_name();
        IM::BaseDefine::GroupType nGroupType = msg.group_type();
        if(IM::BaseDefine::GroupType_IsValid(nGroupType))
        {
            string strGroupAvatar = msg.group_avatar();
            // A set also removes duplicate member IDs
            set<uint32_t> setMember;
            uint32_t nMemberCnt = msg.member_id_list_size();
            for(uint32_t i=0; i<nMemberCnt; ++i)
            {
                uint32_t nMemberId = msg.member_id_list(i);   // renamed so it no longer shadows nUserId
                setMember.insert(nMemberId);
            }
            log("createGroup.%u create %s, userCnt=%u", nUserId, strGroupName.c_str(), (uint32_t)setMember.size());
            uint32_t nGroupId = CGroupModel::getInstance()->createGroup(nUserId, strGroupName, strGroupAvatar, nGroupType, setMember);
            ... // some code omitted
            msgResp.set_attach_data(msg.attach_data());
            pPduRes->SetPBMsg(&msgResp);
            // Echo the request's sequence number so the reply can be matched to it
            pPduRes->SetSeqNum(pPdu->GetSeqNum());
            pPduRes->SetServiceId(IM::BaseDefine::SID_GROUP);
            pPduRes->SetCommandId(IM::BaseDefine::CID_GROUP_CREATE_RESPONSE);
            // Reply with the processing result
            CProxyConn::AddResponsePdu(conn_uuid, pPduRes);
        }
```

- Call the creation interface of the group module (CGroupModel)

```cpp
// Database handling interface
uint32_t nGroupId = CGroupModel::getInstance()->createGroup(nUserId, strGroupName, strGroupAvatar, nGroupType, setMember);
```

- CGroupModel::createGroup in turn calls the database interface, e.g. insertNewGroup:

```cpp
bool CGroupModel::insertNewGroup(uint32_t nUserId, const string& strGroupName, const string& strGroupAvatar, uint32_t nGroupType, uint32_t nMemberCnt, uint32_t& nGroupId)
{
    bool bRet = false;
    nGroupId = INVALID_VALUE;
    CDBManager* pDBManager = CDBManager::getInstance();
    // Writes go to the master instance
    CDBConn* pDBConn = pDBManager->GetDBConn("teamtalk_master");
    if (pDBConn)
    {
        string strSql = "insert into IMGroup(`name`, `avatar`, `creator`, `type`,`userCnt`, `status`, `version`, `lastChated`, `updated`, `created`) "\
            "values(?, ?, ?, ?, ?, ?, ?, ?, ?, ?)";
        CPrepareStatement* pStmt = new CPrepareStatement();
        if (pStmt->Init(pDBConn->GetMysql(), strSql))
        {
            uint32_t nCreated = (uint32_t)time(NULL);
            uint32_t index = 0;
            uint32_t nStatus = 0;
            uint32_t nVersion = 1;
            uint32_t nLastChat = 0;
            pStmt->SetParam(index++, strGroupName);
            pStmt->SetParam(index++, strGroupAvatar);
            ...
```

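- A connection is taken from the database connection pool created in step 2 (or from the Redis cache pool), and the data is then written to the database.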

```cpp
CDBManager* pDBManager = CDBManager::getInstance();
CDBConn* pDBConn = pDBManager->GetDBConn("teamtalk_master");
```
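Whatever the exact release call is, a connection borrowed from a pool has to be returned once the work is done, on every exit path; otherwise the pool gradually runs out. A scoped guard makes the return automatic. The sketch below uses a hypothetical `NamedPools` class keyed by instance name (mirroring names such as "teamtalk_master") and a hypothetical `ScopedConn` guard; it is single-threaded for brevity and is not TeamTalk's API.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical connection type that remembers which pool it came from.
struct Conn {
    std::string pool_name;
};

// Hypothetical pools keyed by instance name (single-threaded sketch).
class NamedPools {
public:
    NamedPools() = default;
    NamedPools(const NamedPools&) = delete;
    NamedPools& operator=(const NamedPools&) = delete;
    ~NamedPools() {   // assumes every connection has been returned
        for (auto& kv : idle_)
            for (Conn* c : kv.second) delete c;
    }
    void AddPool(const std::string& name, int size) {
        for (int i = 0; i < size; ++i) idle_[name].push_back(new Conn{name});
    }
    // Returns nullptr if the pool is unknown or exhausted.
    Conn* Get(const std::string& name) {
        auto it = idle_.find(name);
        if (it == idle_.end() || it->second.empty()) return nullptr;
        Conn* c = it->second.back();
        it->second.pop_back();
        return c;
    }
    void Release(Conn* c) { idle_[c->pool_name].push_back(c); }
    std::size_t Idle(const std::string& name) { return idle_[name].size(); }

private:
    std::map<std::string, std::vector<Conn*>> idle_;
};

// Returns the connection to its pool when the guard goes out of scope.
class ScopedConn {
public:
    ScopedConn(NamedPools& pools, const std::string& name)
        : pools_(pools), conn_(pools.Get(name)) {}
    ~ScopedConn() { if (conn_) pools_.Release(conn_); }
    ScopedConn(const ScopedConn&) = delete;
    ScopedConn& operator=(const ScopedConn&) = delete;
    Conn* get() const { return conn_; }

private:
    NamedPools& pools_;
    Conn* conn_;
};
```

With the guard in place, an early `return` in the middle of a handler can no longer leak a pooled connection.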

- Reply with the processing result

```cpp
// Queue the reply; the main thread sends it later
CProxyConn::AddResponsePdu(conn_uuid, pPduRes);
```

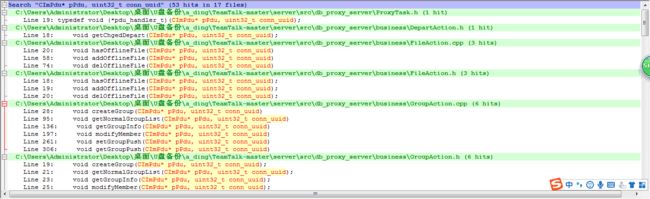

How the correct handler is found via function pointers

How is the right handler found for each request? The key is the handler map:

```cpp
/**
 * Initialization: registers the handler function for each command ID
 */
void CHandlerMap::Init()
{
    // Login validate
    m_handler_map.insert(make_pair(uint32_t(CID_OTHER_VALIDATE_REQ), DB_PROXY::doLogin));
    m_handler_map.insert(make_pair(uint32_t(CID_LOGIN_REQ_PUSH_SHIELD), DB_PROXY::doPushShield));
    m_handler_map.insert(make_pair(uint32_t(CID_LOGIN_REQ_QUERY_PUSH_SHIELD), DB_PROXY::doQueryPushShield));

    // recent session
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_RECENT_CONTACT_SESSION_REQUEST), DB_PROXY::getRecentSession));
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_REMOVE_SESSION_REQ), DB_PROXY::deleteRecentSession));

    // users
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_USER_INFO_REQUEST), DB_PROXY::getUserInfo));
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_ALL_USER_REQUEST), DB_PROXY::getChangedUser));
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_DEPARTMENT_REQUEST), DB_PROXY::getChgedDepart));
    m_handler_map.insert(make_pair(uint32_t(CID_BUDDY_LIST_CHANGE_SIGN_INFO_REQUEST), DB_PROXY::changeUserSignInfo));

    // message content
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_DATA), DB_PROXY::sendMessage));
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_LIST_REQUEST), DB_PROXY::getMessage));
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_UNREAD_CNT_REQUEST), DB_PROXY::getUnreadMsgCounter));
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_READ_ACK), DB_PROXY::clearUnreadMsgCounter));
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_GET_BY_MSG_ID_REQ), DB_PROXY::getMessageById));
    m_handler_map.insert(make_pair(uint32_t(CID_MSG_GET_LATEST_MSG_ID_REQ), DB_PROXY::getLatestMsgId));

    // device token
    m_handler_map.insert(make_pair(uint32_t(CID_LOGIN_REQ_DEVICETOKEN), DB_PROXY::setDevicesToken));
    m_handler_map.insert(make_pair(uint32_t(CID_OTHER_GET_DEVICE_TOKEN_REQ), DB_PROXY::getDevicesToken));

    // push settings
    m_handler_map.insert(make_pair(uint32_t(CID_GROUP_SHIELD_GROUP_REQUEST), DB_PROXY::setGroupPush));
    m_handler_map.insert(make_pair(uint32_t(CID_OTHER_GET_SHIELD_REQ), DB_PROXY::getGroupPush));

    // group
    m_handler_map.insert(make_pair(uint32_t(CID_GROUP_NORMAL_LIST_REQUEST), DB_PROXY::getNormalGroupList));
    m_handler_map.insert(make_pair(uint32_t(CID_GROUP_INFO_REQUEST), DB_PROXY::getGroupInfo));
    m_handler_map.insert(make_pair(uint32_t(CID_GROUP_CREATE_REQUEST), DB_PROXY::createGroup));
    m_handler_map.insert(make_pair(uint32_t(CID_GROUP_CHANGE_MEMBER_REQUEST), DB_PROXY::modifyMember));

    // file
    m_handler_map.insert(make_pair(uint32_t(CID_FILE_HAS_OFFLINE_REQ), DB_PROXY::hasOfflineFile));
    m_handler_map.insert(make_pair(uint32_t(CID_FILE_ADD_OFFLINE_REQ), DB_PROXY::addOfflineFile));
    m_handler_map.insert(make_pair(uint32_t(CID_FILE_DEL_OFFLINE_REQ), DB_PROXY::delOfflineFile));
}
```

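At initialization, the address of each handler is bound to its command ID. When a packet of a given type arrives, GetHandler looks up its command ID and the matching handler is called directly through the function pointer; if no entry exists, HandlePduBuf logs "no handler for packet type" instead of dispatching.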
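The same register-then-look-up pattern fits in a few lines of standard C++. In this sketch the command IDs, the handler bodies and the `HandlerMap` class are hypothetical placeholders rather than TeamTalk's values; an unknown ID yields `nullptr`, which corresponds to the "no handler" branch in HandlePduBuf.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <utility>

typedef void (*handler_t)(const std::string& body, uint32_t conn_uuid);

// Hypothetical command IDs, for illustration only.
enum : uint32_t { CMD_LOGIN = 1, CMD_CREATE_GROUP = 2 };

std::string g_last_call;   // records which handler ran (for the example)

void doLogin(const std::string& body, uint32_t) { g_last_call = "login:" + body; }
void createGroup(const std::string& body, uint32_t) { g_last_call = "group:" + body; }

class HandlerMap {
public:
    // Bind each command ID to the address of its handler once, at startup.
    void Init() {
        handlers_.insert(std::make_pair(uint32_t(CMD_LOGIN), &doLogin));
        handlers_.insert(std::make_pair(uint32_t(CMD_CREATE_GROUP), &createGroup));
    }

    // O(log n) lookup; nullptr means "no handler registered".
    handler_t GetHandler(uint32_t command_id) const {
        auto it = handlers_.find(command_id);
        return it == handlers_.end() ? nullptr : it->second;
    }

private:
    std::map<uint32_t, handler_t> handlers_;
};
```

Since the map is filled once before any worker starts and only read afterwards, concurrent lookups from several threads need no locking.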

How does the database connection pool guarantee consistency and ordering of reads and writes?

To be completed.