What Is NUMA

Non-Uniform Memory Access (NUMA) refers to multiprocessor systems whose memory is divided into multiple memory nodes. The access time of a memory node depends on the relative locations of the accessing CPU and the accessed node. (This contrasts with a symmetric multiprocessor system, where the access time for all of the memory is the same for all CPUs.) Normally, each CPU on a NUMA system has a local memory node whose contents can be accessed faster than the memory in the node local to another CPU or the memory on a bus shared by all CPUs.

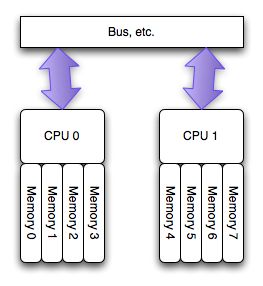

The NUMA architecture:

In NUMA architecture, each processor has a ‘local’ bank of memory, to which it has much closer (lower latency) access. The whole system may still operate as one unit, and all memory is basically accessible from everywhere, but at a potentially higher latency and lower performance.

Fundamentally, some memory locations (‘local’ ones) are faster, that is, cost less to access, than other locations (‘remote’ ones attached to other processors).

How Does Linux Handle NUMA?

Linux manages memory in zones. A NUMA node can have multiple zones since it may be able to serve multiple DMA areas. How Linux has arranged memory can be determined by looking at /proc/zoneinfo. The NUMA node association of the zones allows the kernel to make decisions involving the memory latency relative to cores.
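To see which zones belong to which node, the zone headers in /proc/zoneinfo can be grouped by node number. The following is a minimal sketch, not a definitive tool: the function name `zones_by_node` is hypothetical, and the `SAMPLE` text is a made-up excerpt that only imitates the `Node N, zone NAME` header lines, with invented page counts.

```python
import re
from collections import defaultdict

# Zone sections in /proc/zoneinfo start with a header such as "Node 0, zone   Normal".
ZONE_HEADER = re.compile(r"^Node\s+(\d+),\s+zone\s+(\S+)")


def zones_by_node(zoneinfo_text):
    """Map each NUMA node number to the list of zone names listed under it."""
    zones = defaultdict(list)
    for line in zoneinfo_text.splitlines():
        match = ZONE_HEADER.match(line)
        if match:
            zones[int(match.group(1))].append(match.group(2))
    return dict(zones)


# Made-up excerpt for illustration only; the numbers are not from a real machine.
SAMPLE = """\
Node 0, zone      DMA
  pages free     3840
Node 0, zone    DMA32
  pages free     120000
Node 0, zone   Normal
  pages free     150000
Node 1, zone   Normal
  pages free     43000
"""

for node, zones in sorted(zones_by_node(SAMPLE).items()):
    print(f"node {node}: {', '.join(zones)}")
```

On a Linux machine, the same function can be given the real file contents, for example `zones_by_node(open("/proc/zoneinfo").read())`.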

On boot-up, Linux will detect the organization of memory via the ACPI (Advanced Configuration and Power Interface) tables provided by the firmware and then create zones that map to the NUMA nodes and DMA areas as needed. Memory allocation then occurs from the zones.

NUMA Information:

There are numerous ways to view information about the NUMA characteristics of the system and of the processes currently running on it. The hardware NUMA configuration of a system can be viewed with numactl --hardware. Its output includes a dump of the SLIT (System Locality Information Table), which shows the relative cost of accesses between nodes in a NUMA system. The example below shows a NUMA system with two nodes. The distance for a local access is 10. A remote access costs twice as much on this system (20).

    $ numactl --hardware
    available: 2 nodes (0-1)
    node 0 cpus: 0 2 4 6 8 10 12 14 16 18 20 22 24 26 28 30
    node 0 size: 131026 MB
    node 0 free: 588 MB
    node 1 cpus: 1 3 5 7 9 11 13 15 17 19 21 23 25 27 29 31
    node 1 size: 131072 MB
    node 1 free: 169 MB
    node distances:
    node   0   1
      0:  10  20
      1:  20  10
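The distance table can be turned into a small lookup structure to reason about relative access cost. The sketch below assumes the output layout shown in the example above; `parse_node_distances` and `relative_cost` are hypothetical helper names, and `SAMPLE` is a shortened copy of that example output.

```python
def parse_node_distances(numactl_output):
    """Return {from_node: {to_node: distance}} parsed from `numactl --hardware` text."""
    lines = [ln.strip() for ln in numactl_output.splitlines() if ln.strip()]
    start = lines.index("node distances:")
    # Header row looks like "node 0 1": drop the leading word, keep the node ids.
    columns = [int(tok) for tok in lines[start + 1].split()[1:]]
    distances = {}
    for row in lines[start + 2 : start + 2 + len(columns)]:
        label, *values = row.split()
        distances[int(label.rstrip(":"))] = dict(zip(columns, map(int, values)))
    return distances


def relative_cost(distances, cpu_node, mem_node):
    """Cost of reaching mem_node from cpu_node, relative to a local access."""
    return distances[cpu_node][mem_node] / distances[cpu_node][cpu_node]


# Shortened copy of the example output above.
SAMPLE = """\
available: 2 nodes (0-1)
node distances:
node   0   1
  0:  10  20
  1:  20  10
"""

table = parse_node_distances(SAMPLE)
print(relative_cost(table, 0, 1))  # remote access relative to local, per the SLIT
```

For the two-node example, the remote-to-local ratio is 20 / 10, matching the "twice as much" figure in the text.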

numastat is another tool; it shows how many allocations were satisfied from the local node. Of particular interest is the numa_miss counter, which indicates that the system assigned memory from a different node in order to avoid reclaim. These allocations are also counted in other_node. The remainder of the other_node count consists of intentional off-node allocations. The amount of off-node memory can be used as a guide to how effectively memory was assigned to the processes running on the system.

    $ numastat
                          node0         node1
    numa_hit        13273229839    4595119371
    numa_miss        2104327350    6833844068
    numa_foreign     6833844068    2104327350
    interleave_hit        52991         52864
    local_node      13273229554    4595091108
    other_node       2104327635    6833872331
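The counters above can be combined into two rough indicators: the share of allocations on a node that were misses, and the number of intentional off-node allocations (other_node minus numa_miss, following the description above). This is only a sketch using the sample figures; the `summarize` helper and the `miss_ratio` metric are illustrative choices, not something numastat reports itself.

```python
# Counter values copied from the numastat sample above.
COUNTERS = {
    "node0": {
        "numa_hit": 13273229839,
        "numa_miss": 2104327350,
        "numa_foreign": 6833844068,
        "local_node": 13273229554,
        "other_node": 2104327635,
    },
    "node1": {
        "numa_hit": 4595119371,
        "numa_miss": 6833844068,
        "numa_foreign": 2104327350,
        "local_node": 4595091108,
        "other_node": 6833872331,
    },
}


def summarize(c):
    """Derive rough indicators from one node's counters (illustrative only)."""
    total = c["numa_hit"] + c["numa_miss"]
    return {
        # Share of allocations served by this node that were misses.
        "miss_ratio": c["numa_miss"] / total,
        # other_node includes the misses; the rest was intentionally off-node.
        "intentional_off_node": c["other_node"] - c["numa_miss"],
    }


for node, counters in COUNTERS.items():
    s = summarize(counters)
    print(f"{node}: miss ratio {s['miss_ratio']:.1%}, "
          f"intentional off-node {s['intentional_off_node']}")
```

With these figures, roughly 14% of the allocations served by node0 and roughly 60% of those served by node1 were misses, which suggests memory was often not placed on the preferred node on this system.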

from: https://access.redhat.com/solutions/700683