- RabbitMQ工作模式

霸都阿甘

RabbitMQrabbitmqjava分布式

一、工作模式介绍RabbbitMQ提供了6种工作模式:简单模式、workqueues、Publish/Subscribe发布与订阅模式、Routing路由模式、Topics主题模式、RPC远程调用模式(不太符合MQ)1.1简单模式P:生产者,也就是要发送消息的程序C:消费者,消息的接收者,会一直等待消息到来Queue:消息队列,图中红色部分。类似一个邮箱,可以缓存消息;生产者向其中投递消息,消费者

- python使用kafka原理详解_Python操作Kafka原理及使用详解

形象顧問Aking

Python操作Kafka原理及使用详解一、什么是KafkaKafka是一个分布式流处理系统,流处理系统使它可以像消息队列一样publish或者subscribe消息,分布式提供了容错性,并发处理消息的机制二、Kafka的基本概念kafka运行在集群上,集群包含一个或多个服务器。kafka把消息存在topic中,每一条消息包含键值(key),值(value)和时间戳(timestamp)。kafk

- vue3中测试:单元测试、组件测试、端到端测试

皓月当空hy

vue.js

1、单元测试:单元测试通常适用于独立的业务逻辑、组件、类、模块或函数,不涉及UI渲染、网络请求或其他环境问题。describe('increment',()=>{//测试用例})toBe():用于严格相等比较(===),适用于原始类型或检查引用类型是否指向同一个对象。toEqual():用于深度比较,检查两个对象或数组的内容是否相等(即使它们不是同一个对象)。例如:test('increments

- SOME/IP协议的建链过程

HL_LOVE_C

汽车电子SOME/IP汽车车载车载系统c++tcp/ip网络协议

在SOME/IP协议中,建立服务通信链路的过程主要涉及服务发现机制,通常需要以下三次交互:服务提供者广播服务可用性(OfferService)服务提供者启动后,周期性地通过OfferService消息向网络广播其提供的服务实例信息(如ServiceID、InstanceID、通信协议和端口等)。作用:通知潜在消费者该服务的存在及访问方式。服务消费者发送订阅请求(SubscribeEventgrou

- idea集成maven导入spring框架失败

言什

mavenspring

在命令行中输入mvnhelp:system显示错误这时,可以在命令行中再键入mvnhelp:describe-Dplugin=help-e-X查看具体的错误信息。如果镜像配置没有错误,可能是在添加镜像出现复制粘贴多或者少东西。可以通过键入mvnhelp:describe-Dplugin=help-e-X查看具体报错地方,修改过来,在idea中重新runmaven即可。

- PubSubJS的基本使用

SarinaDu

reactreactpubsub

前言日常积累,欢迎指正参考PubSubJS-GitHubPubSubJS-npm使用说明首先说明我当前使用的pubsub版本为1.6.0什么是pubsub?PubSubJSisatopic-basedpublish/subscribelibrarywritteninJavaScript.即一个利用JavaScript进行发布/订阅的库使用React+TypeScript发布importPubsub

- 强大的ETL利器—DataFlow3.0

lixiang2114

数据分析etlflumesqoop数据库数据仓库

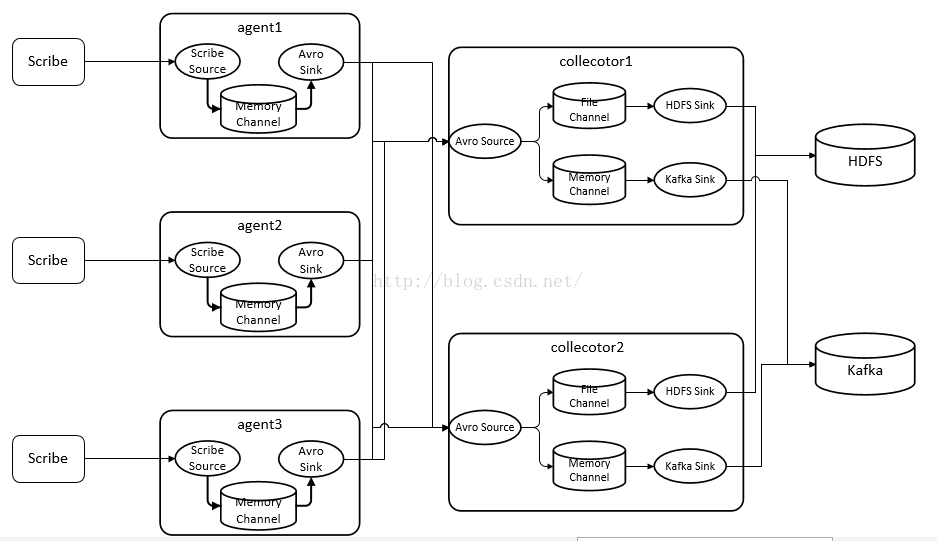

产品开发背景DataFlow是基于应用数据流程的一套分布式ETL系统服务组件,其前身是LogCollector2.0日志系统框架,自LogCollector3.0版本开始正式更名为DataFlow3.0。目前常用的ETL工具Flume、LogStash、Kettle、Sqoop等也可以完成数据的采集、传输、转换和存储;但这些工具都不具备事务一致性。比如Flume工具仅能应用到通信质量无障碍的局域网

- RTSP协议全解析

江同学_

音视频

RTSP(RealTimeStreamingProtocol)协议全解析一、协议概述定位:应用层协议,用于控制流媒体服务器(播放、暂停、录制),媒体传输由RTP/RTCP实现。特点:基于文本(类似HTTP),支持TCP/UDP(默认端口554)。无状态协议,通过Session头维护会话状态。核心命令:方法用途OPTIONS查询服务器支持的方法DESCRIBE获取媒体描述(SDP格式)SETUP建立

- Pod被OOM Killed与探针失败排查

完颜振江

OOMKilledOOMLinux

一、紧急信息收集(5分钟内完成)Pod状态快照#获取Pod最后状态(ExitCode=137表示OOM)kubectlgetpoditem-api-597d7778c5-nhzs5-nprod-owidekubectldescribepoditem-api-597d7778c5-nhzs5-nprod|grep-E'Status:|ExitCode:|LastState:|Reason:'#查看节

- python数据分析一周速成2.数据计算

噼里啪啦噼酷啪Q

python数据分析CDA

python数据分析一周速成2.数据计算一、按列聚合计算(常用函数,五星推荐describe一键多维展示)importnumpyasnpimportpandasaspdd=np.array([[1,12,13,15,16],[23,28,24,215,26],[370,39,355,325,3],[47,49,45,42,482],[571,519,5,52,57],[61,69,

- 大数据-257 离线数仓 - 数据质量监控 监控方法 Griffin架构

m0_74823705

面试学习路线阿里巴巴大数据架构

点一下关注吧!!!非常感谢!!持续更新!!!Java篇开始了!目前开始更新MyBatis,一起深入浅出!目前已经更新到了:Hadoop(已更完)HDFS(已更完)MapReduce(已更完)Hive(已更完)Flume(已更完)Sqoop(已更完)Zookeeper(已更完)HBase(已更完)Redis(已更完)Kafka(已更完)Spark(已更完)Flink(已更完)ClickHouse(已

- Vue+Jest 单元测试

arron4210

前端vue单元测试vue

新到一个公司,要求单元测试覆盖率达50%以上,我们都是后补的单测,其实单测的意义是根据需求提前写好,驱动开发,代替手动测试。然鹅这只是理想。。。这里总结一下各种遇到的单测场景挂载组件,调用elementui,mock函数```javascriptdescribe('页面验证',()=>{constwrapper=getVue({component:onlineFixedPrice,callback

- Python中的 redis keyspace 通知_python 操作redis psubscribe(‘__keyspace@0__ ‘)

2301_82243733

程序员python学习面试

最后Python崛起并且风靡,因为优点多、应用领域广、被大牛们认可。学习Python门槛很低,但它的晋级路线很多,通过它你能进入机器学习、数据挖掘、大数据,CS等更加高级的领域。Python可以做网络应用,可以做科学计算,数据分析,可以做网络爬虫,可以做机器学习、自然语言处理、可以写游戏、可以做桌面应用…Python可以做的很多,你需要学好基础,再选择明确的方向。这里给大家分享一份全套的Pytho

- 容器docker k8s相关的问题汇总及排错

weixin_43806846

dockerkubernetes容器

1.明确问题2.排查方向2.1、docker方面dockerlogs-f容器IDdocker的网络配置问题。2.2、k8s方面node组件问题pod的问题(方式kubectldescribepopod的名称-n命名空间&&kubectllogs-fpod的名称-n命名空间)调度的问题(污点、节点选择器与标签不匹配、存储卷的问题)service问题(访问不了,ingress的问题、service标签

- k8s rook-ceph MountDevice failed for volume pvc An operation with the given Volume ID already exists

时空无限

Kuberneteskubernetesceph

https://github.com/rook/rook/issues/4896环境kubeadm搭建的k8s集群,rook-ceph部署的ceph存储,monpod所在宿主机和挂载客户端机器pod所在机器不在一个二层网络里。故障pod挂载不上pvc,describepod信息如下MountDevicefailedforvolumepvcAnoperationwiththegivenVolumeI

- 29道WebDriverIO面试八股文(答案、分析和深入提问)整理

守护海洋的猫

virtualenv面试javascript前端职场和发展

1.如何在WebDriverIO中截取屏幕截图?回答在WebDriverIO中截取屏幕截图非常简单。你可以使用browser.saveScreenshot方法来截取当前浏览器窗口的屏幕截图,并将其保存到指定的文件路径。以下是一个基本的使用示例:基本示例describe('截取屏幕截图示例',()=>{it('应该截取当前屏幕',async()=>{//打开网页awaitbrowser.url('h

- KubeEdge 1.20.0 版本发布

laowangsen

数据库

KubeEdge1.20.0现已发布。新版本针对大规模、离线等边缘场景对边缘节点和应用的管理、运维等能力进行了增强,同时新增了多语言Mapper-Framework的支持。新增特性:支持批量节点操作多语言Mapper-Framework支持边缘keadmctl新增podslogs/exec/describe和Devicesget/edit/describe能力解耦边缘应用与节点组,支持使用Node

- pandas(02 pandas基本功能和描述性统计)

twilight ember

pandaspython开发语言

前面内容:pandas(01入门)目录一、PythonPandas基本功能1.1Series基本功能1.2DataFrame基本功能二、PythonPandas描述性统计2.1常用函数*2.2汇总数据(describe)*一、PythonPandas基本功能到目前为止,我们已经学习了三种Pandas数据结构以及如何创建它们。我们将主要关注DataFrame对象,因为它在实时数据处理中非常重要,并讨

- Oracle数据库

岚苼

oracle数据库

文章目录1.表的创建(1)创建表的语法举例1:创建出版社表。举例2:创建图书表(2)使用DESCRIBE(describe)显示图书表的结构(3)通过子查询创建表举例(4)设置列的默认值DEFAULT(default)举例(5)删除已创建的表解析CASCADECONSTRAINTS(cascadeconstraints)2.表的操作(1)表的重命名RENAMETO(2)清空表TRUNCATE(tr

- 数据仓库与数据挖掘记录 三

匆匆整棹还

数据挖掘

数据仓库的数据存储和处理数据的ETL过程数据ETL是用来实现异构数据源的数据集成,即完成数据的抓取/抽取、清洗、转换.加载与索引等数据调和工作,如图2.2所示。1)数据提取(Extract)从多个数据源中获取原始数据(如数据库、日志文件、API、云存储等)。数据源可能是结构化(如MySQL)、半结构化(如JSON)、非结构化(如文本)。关键技术:SQL查询、Web爬虫、日志采集工具(如Flume)

- java获取hive表所有字段,Hive Sql从表中动态获取空列计数

拾亿年

java获取hive表所有字段

我正在使用datastaxspark集成和sparkSQLthrift服务器,它为我提供了一个HiveSQL接口来查询Cassandra中的表.我的数据库中的表是动态创建的,我想要做的是仅根据表名在表的每列中获取空值的计数.我可以使用describedatabase.table获取列名,但在hiveSQL中,如何在另一个为所有列计数null的select查询中使用其输出.更新1:使用Dudu的解决

- Chromium Design Document学习及翻译之Multi-process Architecture

lail3344

browserchromium

ChromiumDesignDocument学习及翻译之Multi-processArchitecturehttp://www.chromium.org/developers/design-documents/multi-process-architectureMulti-processArchitectureThisdocumentdescribesChromium'shigh-levelarc

- ROS2: Qos机制

扛着相机的翻译官

ROS网络

ROS2:Qos机制Qos机制是ros2区别与ros1增加的重要内容,用来弥补ros1通讯不稳定的问题。按照我的理解,Qos机制通过参数的配置,相当于将通讯机制调整在介于TCP和UDP模式之间。根据使用场景,配置相应的Qos参数,可以侧重于数据通讯实时性或者数据通讯质量。兼容性ReliabilityQosPolicies:PublisherSubscriber兼容BesteffortBesteff

- 【大数据技术】搭建完全分布式高可用大数据集群(Flume)

Want595

Python大数据采集与分析大数据分布式flume

搭建完全分布式高可用大数据集群(Flume)apache-flume-1.11.0-bin.tar.gz注:请在阅读本篇文章前,将以上资源下载下来。写在前面本文主要介绍搭建完全分布式高可用集群Flume的详细步骤。注意:统一约定将软件安装包存放于虚拟机的/software目录下,软件安装至/opt目录下。安装Flume用finalshell将压缩包上传到虚拟机master的/software目录下

- 大数据技术Kafka详解 ③ | Kafka集群操作与API操作

dvlinker

C/C++实战专栏C/C++软件开发从入门到实战大数据kfaka分布式发布与订阅系统kfaka集群生产者消费者API操作

目录1、Kafka集群操作1.1、创建topic1.2、查看主题命令1.3、生产者生产1.4、消费者消费数据1.5、运行describetopics命令1.6、增加topic分区数1.7、增加配置1.8、删除配置1.9、删除topic2、Kafka的JavaAPI操作2.1、生产者代码2.2、消费者代2.2.1、自动提交offset2.2.2、手动提交offset2.2.3、消费完每个分区之后手动

- 计算机毕业设计hadoop+spark+hive新能源汽车数据分析可视化大屏 汽车推荐系统 新能源汽车推荐系统 汽车爬虫 汽车大数据 机器学习 大数据毕业设计 深度学习 知识图谱 人工智能

qq+593186283

hadoop大数据人工智能

(1)设计目的本次设计一个基于Hive的新能源汽车数据仓管理系统。企业管理员登录系统后可以在汽车保养时,根据这些汽车内置传感器传回的数据分析其故障原因,以便维修人员更加及时准确处理相关的故障问题。或者对这些数据分析之后向车主进行预警提示车主注意保养汽车,以提高汽车行驶的安全系数。(2)设计要求利用Flume进行分布式的日志数据采集,Kafka实现高吞吐量的数据传输,DateX进行数据清洗、转换和整

- python消费kafka数据nginx日志实时_基于nginx+flume+kafka+mongodb实现埋点数据采集

weixin_39534208

名词解释埋点其实就是用于记录用户在页面的一些操作行为。例如,用户访问页面(PV,PageViews)、访问页面用户数量(UV,UserViews)、页面停留、按钮点击、文件下载等,这些都属于用户的操作行为。开发背景我司之前在处理埋点数据采集时,模式很简单,当用户操作页面控件时,前端监听到操作事件,并根据上下文环境,将事件相关的数据通过接口调用发送至埋点数据采集服务(简称ets服务),ets服务对数

- Python入门(10)--面向对象进阶

ᅟᅠ 一进制

Python入门python开发语言

Python面向对象进阶1.继承与多态1.1继承基础classAnimal:def__init__(self,name,age):self.name=nameself.age=agedefspeak(self):passdefdescribe(self):returnf"{self.name}is{self.age}yearsold"classDog(Animal):def__init__(sel

- 查看kafka的topic列表

荒烟蔓草

kafka

1.查看topic列表.\kafka-topics.bat--zookeeper127.0.0.1:2181--list2.查看指定topic.\kafka-topics.bat--zookeeper127.0.0.1:2181--describe--topictest_msg3.修改topic的分区数(分区数只能增加不能减少).\kafka-topics.bat--zookeeper127.0.

- | ERROR: [2] bootstrap checks failed. You must address the points described in the following [2] lin

讓丄帝愛伱

环境elasticsearch大数据bigdata

elasticsearch启动报错:|ERROR:[2]bootstrapchecksfailed.Youmustaddressthepointsdescribedinthefollowing[2]linesbeforestartingElasticsearch.jvm1|bootstrapcheckfailure[1]of[2]:maxfiledescriptors[4096]forelasti

- java类加载顺序

3213213333332132

java

package com.demo;

/**

* @Description 类加载顺序

* @author FuJianyong

* 2015-2-6上午11:21:37

*/

public class ClassLoaderSequence {

String s1 = "成员属性";

static String s2 = "

- Hibernate与mybitas的比较

BlueSkator

sqlHibernate框架ibatisorm

第一章 Hibernate与MyBatis

Hibernate 是当前最流行的O/R mapping框架,它出身于sf.net,现在已经成为Jboss的一部分。 Mybatis 是另外一种优秀的O/R mapping框架。目前属于apache的一个子项目。

MyBatis 参考资料官网:http:

- php多维数组排序以及实际工作中的应用

dcj3sjt126com

PHPusortuasort

自定义排序函数返回false或负数意味着第一个参数应该排在第二个参数的前面, 正数或true反之, 0相等usort不保存键名uasort 键名会保存下来uksort 排序是对键名进行的

<!doctype html>

<html lang="en">

<head>

<meta charset="utf-8&q

- DOM改变字体大小

周华华

前端

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml&q

- c3p0的配置

g21121

c3p0

c3p0是一个开源的JDBC连接池,它实现了数据源和JNDI绑定,支持JDBC3规范和JDBC2的标准扩展。c3p0的下载地址是:http://sourceforge.net/projects/c3p0/这里可以下载到c3p0最新版本。

以在spring中配置dataSource为例:

<!-- spring加载资源文件 -->

<bean name="prope

- Java获取工程路径的几种方法

510888780

java

第一种:

File f = new File(this.getClass().getResource("/").getPath());

System.out.println(f);

结果:

C:\Documents%20and%20Settings\Administrator\workspace\projectName\bin

获取当前类的所在工程路径;

如果不加“

- 在类Unix系统下实现SSH免密码登录服务器

Harry642

免密ssh

1.客户机

(1)执行ssh-keygen -t rsa -C "

[email protected]"生成公钥,xxx为自定义大email地址

(2)执行scp ~/.ssh/id_rsa.pub root@xxxxxxxxx:/tmp将公钥拷贝到服务器上,xxx为服务器地址

(3)执行cat

- Java新手入门的30个基本概念一

aijuans

javajava 入门新手

在我们学习Java的过程中,掌握其中的基本概念对我们的学习无论是J2SE,J2EE,J2ME都是很重要的,J2SE是Java的基础,所以有必要对其中的基本概念做以归纳,以便大家在以后的学习过程中更好的理解java的精髓,在此我总结了30条基本的概念。 Java概述: 目前Java主要应用于中间件的开发(middleware)---处理客户机于服务器之间的通信技术,早期的实践证明,Java不适合

- Memcached for windows 简单介绍

antlove

javaWebwindowscachememcached

1. 安装memcached server

a. 下载memcached-1.2.6-win32-bin.zip

b. 解压缩,dos 窗口切换到 memcached.exe所在目录,运行memcached.exe -d install

c.启动memcached Server,直接在dos窗口键入 net start "memcached Server&quo

- 数据库对象的视图和索引

百合不是茶

索引oeacle数据库视图

视图

视图是从一个表或视图导出的表,也可以是从多个表或视图导出的表。视图是一个虚表,数据库不对视图所对应的数据进行实际存储,只存储视图的定义,对视图的数据进行操作时,只能将字段定义为视图,不能将具体的数据定义为视图

为什么oracle需要视图;

&

- Mockito(一) --入门篇

bijian1013

持续集成mockito单元测试

Mockito是一个针对Java的mocking框架,它与EasyMock和jMock很相似,但是通过在执行后校验什么已经被调用,它消除了对期望 行为(expectations)的需要。其它的mocking库需要你在执行前记录期望行为(expectations),而这导致了丑陋的初始化代码。

&nb

- 精通Oracle10编程SQL(5)SQL函数

bijian1013

oracle数据库plsql

/*

* SQL函数

*/

--数字函数

--ABS(n):返回数字n的绝对值

declare

v_abs number(6,2);

begin

v_abs:=abs(&no);

dbms_output.put_line('绝对值:'||v_abs);

end;

--ACOS(n):返回数字n的反余弦值,输入值的范围是-1~1,输出值的单位为弧度

- 【Log4j一】Log4j总体介绍

bit1129

log4j

Log4j组件:Logger、Appender、Layout

Log4j核心包含三个组件:logger、appender和layout。这三个组件协作提供日志功能:

日志的输出目标

日志的输出格式

日志的输出级别(是否抑制日志的输出)

logger继承特性

A logger is said to be an ancestor of anothe

- Java IO笔记

白糖_

java

public static void main(String[] args) throws IOException {

//输入流

InputStream in = Test.class.getResourceAsStream("/test");

InputStreamReader isr = new InputStreamReader(in);

Bu

- Docker 监控

ronin47

docker监控

目前项目内部署了docker,于是涉及到关于监控的事情,参考一些经典实例以及一些自己的想法,总结一下思路。 1、关于监控的内容 监控宿主机本身

监控宿主机本身还是比较简单的,同其他服务器监控类似,对cpu、network、io、disk等做通用的检查,这里不再细说。

额外的,因为是docker的

- java-顺时针打印图形

bylijinnan

java

一个画图程序 要求打印出:

1.int i=5;

2.1 2 3 4 5

3.16 17 18 19 6

4.15 24 25 20 7

5.14 23 22 21 8

6.13 12 11 10 9

7.

8.int i=6

9.1 2 3 4 5 6

10.20 21 22 23 24 7

11.19

- 关于iReport汉化版强制使用英文的配置方法

Kai_Ge

iReport汉化英文版

对于那些具有强迫症的工程师来说,软件汉化固然好用,但是汉化不完整却极为头疼,本方法针对iReport汉化不完整的情况,强制使用英文版,方法如下:

在 iReport 安装路径下的 etc/ireport.conf 里增加红色部分启动参数,即可变为英文版。

# ${HOME} will be replaced by user home directory accordin

- [并行计算]论宇宙的可计算性

comsci

并行计算

现在我们知道,一个涡旋系统具有并行计算能力.按照自然运动理论,这个系统也同时具有存储能力,同时具备计算和存储能力的系统,在某种条件下一般都会产生意识......

那么,这种概念让我们推论出一个结论

&nb

- 用OpenGL实现无限循环的coverflow

dai_lm

androidcoverflow

网上找了很久,都是用Gallery实现的,效果不是很满意,结果发现这个用OpenGL实现的,稍微修改了一下源码,实现了无限循环功能

源码地址:

https://github.com/jackfengji/glcoverflow

public class CoverFlowOpenGL extends GLSurfaceView implements

GLSurfaceV

- JAVA数据计算的几个解决方案1

datamachine

javaHibernate计算

老大丢过来的软件跑了10天,摸到点门道,正好跟以前攒的私房有关联,整理存档。

-----------------------------华丽的分割线-------------------------------------

数据计算层是指介于数据存储和应用程序之间,负责计算数据存储层的数据,并将计算结果返回应用程序的层次。J

&nbs

- 简单的用户授权系统,利用给user表添加一个字段标识管理员的方式

dcj3sjt126com

yii

怎么创建一个简单的(非 RBAC)用户授权系统

通过查看论坛,我发现这是一个常见的问题,所以我决定写这篇文章。

本文只包括授权系统.假设你已经知道怎么创建身份验证系统(登录)。 数据库

首先在 user 表创建一个新的字段(integer 类型),字段名 'accessLevel',它定义了用户的访问权限 扩展 CWebUser 类

在配置文件(一般为 protecte

- 未选之路

dcj3sjt126com

诗

作者:罗伯特*费罗斯特

黄色的树林里分出两条路,

可惜我不能同时去涉足,

我在那路口久久伫立,

我向着一条路极目望去,

直到它消失在丛林深处.

但我却选了另外一条路,

它荒草萋萋,十分幽寂;

显得更诱人,更美丽,

虽然在这两条小路上,

都很少留下旅人的足迹.

那天清晨落叶满地,

两条路都未见脚印痕迹.

呵,留下一条路等改日再

- Java处理15位身份证变18位

蕃薯耀

18位身份证变15位15位身份证变18位身份证转换

15位身份证变18位,18位身份证变15位

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀 201

- SpringMVC4零配置--应用上下文配置【AppConfig】

hanqunfeng

springmvc4

从spring3.0开始,Spring将JavaConfig整合到核心模块,普通的POJO只需要标注@Configuration注解,就可以成为spring配置类,并通过在方法上标注@Bean注解的方式注入bean。

Xml配置和Java类配置对比如下:

applicationContext-AppConfig.xml

<!-- 激活自动代理功能 参看:

- Android中webview跟JAVASCRIPT中的交互

jackyrong

JavaScripthtmlandroid脚本

在android的应用程序中,可以直接调用webview中的javascript代码,而webview中的javascript代码,也可以去调用ANDROID应用程序(也就是JAVA部分的代码).下面举例说明之:

1 JAVASCRIPT脚本调用android程序

要在webview中,调用addJavascriptInterface(OBJ,int

- 8个最佳Web开发资源推荐

lampcy

编程Web程序员

Web开发对程序员来说是一项较为复杂的工作,程序员需要快速地满足用户需求。如今很多的在线资源可以给程序员提供帮助,比如指导手册、在线课程和一些参考资料,而且这些资源基本都是免费和适合初学者的。无论你是需要选择一门新的编程语言,或是了解最新的标准,还是需要从其他地方找到一些灵感,我们这里为你整理了一些很好的Web开发资源,帮助你更成功地进行Web开发。

这里列出10个最佳Web开发资源,它们都是受

- 架构师之面试------jdk的hashMap实现

nannan408

HashMap

1.前言。

如题。

2.详述。

(1)hashMap算法就是数组链表。数组存放的元素是键值对。jdk通过移位算法(其实也就是简单的加乘算法),如下代码来生成数组下标(生成后indexFor一下就成下标了)。

static int hash(int h)

{

h ^= (h >>> 20) ^ (h >>>

- html禁止清除input文本输入缓存

Rainbow702

html缓存input输入框change

多数浏览器默认会缓存input的值,只有使用ctl+F5强制刷新的才可以清除缓存记录。

如果不想让浏览器缓存input的值,有2种方法:

方法一: 在不想使用缓存的input中添加 autocomplete="off";

<input type="text" autocomplete="off" n

- POJO和JavaBean的区别和联系

tjmljw

POJOjava beans

POJO 和JavaBean是我们常见的两个关键字,一般容易混淆,POJO全称是Plain Ordinary Java Object / Pure Old Java Object,中文可以翻译成:普通Java类,具有一部分getter/setter方法的那种类就可以称作POJO,但是JavaBean则比 POJO复杂很多, Java Bean 是可复用的组件,对 Java Bean 并没有严格的规

- java中单例的五种写法

liuxiaoling

java单例

/**

* 单例模式的五种写法:

* 1、懒汉

* 2、恶汉

* 3、静态内部类

* 4、枚举

* 5、双重校验锁

*/

/**

* 五、 双重校验锁,在当前的内存模型中无效

*/

class LockSingleton

{

private volatile static LockSingleton singleton;

pri