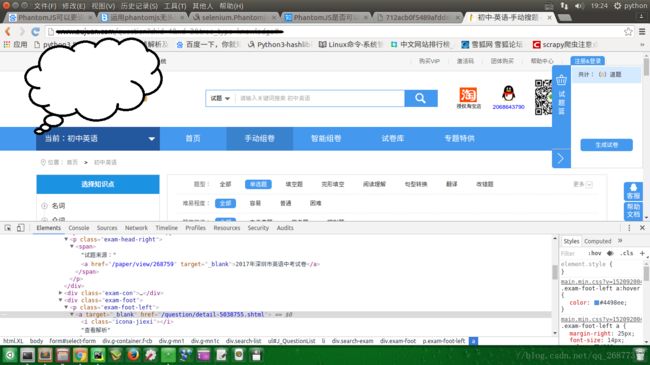

Using Selenium to scrape a test-paper website

```python
from selenium import webdriver
# Modify the request headers (User-Agent) so the crawler is not recognised as a bot.
# PhantomJS and DesiredCapabilities are Selenium 3.x APIs (PhantomJS support was
# deprecated in Selenium 3.8 and later removed), so this script needs selenium<4.
from selenium.webdriver.common.desired_capabilities import DesiredCapabilities
import pymongo
import json
import time
import re


class Zujuan(object):

    def __init__(self):
        # Fields to keep from each question object in the JSON response
        self.fields = ["question_id", "question_type", "question_channel_type", "question_status", "chid", "xd",
                       "parent_id", "is_objective", "difficult_index", "master_level", "exam_type", "evaluated",
                       "region_ids", "grade_id", "question_source", "mode", "kid_num", "paperid", "save_num",
                       "oldqid", "paper", "list", "extra_file", "question_text", "options", "knowledge",
                       "category", "is_collect", "done", "myanswer", "score", "sort", "sort2", "is_use"]

        self.client = pymongo.MongoClient("localhost", 27017)
        # Get the database "zujuan"
        self.db = self.client.zujuan
        # Get the collection "yingyu7" (kept in self.stu)
        self.stu = self.db.yingyu7

        # Time for the ultimate weapon: a PhantomJS browser (executable found on PATH)
        # with a spoofed mobile User-Agent.
        dcap = dict(DesiredCapabilities.PHANTOMJS)
        dcap["phantomjs.page.settings.userAgent"] = (
            "Mozilla/5.0 (Linux; Android 5.1.1; Nexus 6 Build/LYZ28E) AppleWebKit/537.36 "
            "(KHTML, like Gecko) Chrome/48.0.2564.23 Mobile Safari/537.36")
        driver = webdriver.PhantomJS(desired_capabilities=dcap)
        # driver = webdriver.PhantomJS()
        # driver = webdriver.Chrome(executable_path='/home/python/Desktop/chromdriver/chromedriver')

        driver.get("http://www.zujuan.com/question?chid=4&xd=2&tree_type=knowledge")
        time.sleep(2)
        # driver.save_screenshot("page.png")

        # Ordinary keys can be sent directly with send_keys, e.g. to press Enter:
        # ActionChains(driver).send_keys(Keys.ENTER).perform()

        driver.get("<redacted URL>")  # the author masked this URL to avoid disputes
        time.sleep(0.5)

        # Open a second window via JavaScript and switch to it
        window_1 = driver.current_window_handle
        driver.execute_script('window.open();')
        # print(driver.page_source)
        for handle in driver.window_handles:
            if handle != window_1:
                driver.switch_to.window(handle)

        self.run(driver)
        driver.quit()

    def run(self, driver):
        for i in range(1, 3782):
            # The base URL was masked by the author; the loop index i is appended to it
            driver.get("<redacted URL>" + str(i) + "&_=1521543426225")
            # driver.save_screenshot("page2.png")
            data = driver.page_source
            # print(data)
            # The browser wraps the JSON response in HTML; cut out the {"data": ...} object.
            # (The regex in the original post was truncated; this is a reconstruction.)
            match = re.match(r'.*?({"data".*})', data, re.S)
            if match is None:
                continue
            data = json.loads(match.group(1))
            # print(data)
            self.crawl(data)

    def crawl(self, data):
        try:
            result = data["data"][0]["questions"]
        except (KeyError, IndexError, TypeError):
            return
        print(result)
        for question in result:
            # Keep only the whitelisted fields
            doc = {field: question[field] for field in self.fields if field in question}
            # print(doc)
            # Add the document, or replace the one with the same question_id (upsert).
            # Collection.update() was removed in PyMongo 4; replace_one(..., upsert=True) is the equivalent.
            self.stu.replace_one({'question_id': doc['question_id']}, doc, upsert=True)


def main():
    zujuan = Zujuan()  # __init__ opens the browser and calls run() itself
    # zujuan.run()


if __name__ == '__main__':
    main()
```
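The regex step in `run()` is the fragile part, since the post's original pattern was cut off. Below is a minimal sketch of that step as a standalone function, assuming the browser wraps the raw JSON in HTML (e.g. a `<pre>` element) and HTML-escapes its text; `extract_json` is a hypothetical helper name, and the sample page is made up.

```python
import html
import json
import re


def extract_json(page_source):
    """Pull the {"data": ...} object out of a browser-rendered JSON page.

    Assumes the JSON text sits inside the HTML and was HTML-escaped
    (&lt; &gt; &amp;); returns None if no such object is found.
    """
    # Greedy capture from the first '{"data"' up to the last '}' in the page
    match = re.search(r'({"data".*})', page_source, re.S)
    if match is None:
        return None
    # Undo the HTML escaping before handing the text to the JSON parser
    return json.loads(html.unescape(match.group(1)))


# Made-up sample page, shaped like what a headless browser returns for a JSON URL
sample = ('<html><head></head><body><pre style="word-wrap: break-word;">'
          '{"data": [{"questions": [{"question_id": 1, "question_text": "a &lt;b&gt;"}]}]}'
          '</pre></body></html>')
parsed = extract_json(sample)
print(parsed["data"][0]["questions"][0]["question_text"])  # a <b>
```

Returning `None` instead of raising lets the page loop simply skip pages that came back as an error page rather than JSON.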
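What `crawl()` does to each question can be tried without MongoDB. This is an in-memory sketch, assuming a dict keyed by `question_id` is a fair stand-in for `replace_one({'question_id': ...}, doc, upsert=True)`; `pick_fields`, `upsert` and the shortened `FIELDS` list are hypothetical names for illustration only.

```python
FIELDS = ["question_id", "question_type", "question_text", "options"]  # shortened example list


def pick_fields(question, fields):
    """Keep only the whitelisted keys that are present in one question object."""
    return {field: question[field] for field in fields if field in question}


def upsert(store, doc):
    """In-memory stand-in for replace_one({'question_id': ...}, doc, upsert=True)."""
    store[doc["question_id"]] = doc


store = {}
page = [
    {"question_id": 7, "question_type": 1, "question_text": "Q7", "views": 99},
    {"question_id": 8, "question_text": "Q8"},
]
for q in page:
    upsert(store, pick_fields(q, FIELDS))

# Re-crawling the same question replaces the whole stored document instead of adding a duplicate
upsert(store, pick_fields({"question_id": 7, "question_text": "Q7 (edited)"}, FIELDS))
print(len(store), store[7])
```

Note that a replace drops fields missing from the new document (`question_type` above). If merging into the existing document is wanted instead, `update_one(filter, {"$set": doc}, upsert=True)` is the usual alternative.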
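The `&_=1521543426225` suffix in `run()` looks like a millisecond timestamp used as a cache-buster (jQuery adds `_=` this way when caching is disabled), copied once and reused for every page. A minimal sketch of generating a fresh value per request, assuming that reading is right; `BASE_URL` is a hypothetical stand-in because the real URL was masked, and `page_urls` is a hypothetical helper.

```python
import time
from urllib.parse import parse_qs, urlsplit

# Hypothetical stand-in: the real base URL was masked by the author.
BASE_URL = "https://example.com/api/questions?page="


def page_urls(first, last, base=BASE_URL):
    """Yield one URL per page index (inclusive), each with a fresh millisecond "_" value."""
    for i in range(first, last + 1):
        yield f"{base}{i}&_={int(time.time() * 1000)}"


for url in page_urls(1, 3):
    print(url)
```

`range(1, 3782)` in the script corresponds to `page_urls(1, 3781)` here, since the helper treats `last` as inclusive.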