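1.6 Scraping Maoyan (猫眼) and storing the data in a database (Top 100 board, Most Anticipated board, films showing at a specified cinema, music chart)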

1. Top 100 board

```python
import pymysql
import requests
from bs4 import BeautifulSoup

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"
}
TIMEOUT = 15  # seconds
RETRIES = 3


def get_html(url):
    """Return the page HTML, retrying a few times; None if every attempt fails."""
    for _ in range(RETRIES):
        try:
            resp = requests.get(url, headers=HEADERS, timeout=TIMEOUT)
        except requests.RequestException as exc:
            print("Request failed:", exc)
            continue
        if resp.status_code == 200:
            resp.encoding = "utf-8"
            return resp.text
        print("Error! Retrying:", url)
    return None


def top(url, conn, cur):
    html = get_html(url)
    if html is None:
        return
    soup = BeautifulSoup(html, "html.parser")
    info_divs = soup.find_all("div", attrs={"class": "movie-item-info"})
    score_divs = soup.find_all("div", attrs={"class": "movie-item-number score-num"})

    # Collect title, stars, release date and score for each movie
    rows = []
    for info, score in zip(info_divs, score_divs):
        p = info.find_all("p")  # returns a list: title, stars, release date
        parts = score.find_all("i")  # score is split in two <i> tags, e.g. "9." and "5"
        rows.append((p[0].get_text(strip=True), p[1].get_text(strip=True),
                     p[2].get_text(strip=True), parts[0].get_text() + parts[1].get_text()))
    print([row[0] for row in rows])

    # Store in the database; columns: title, stars, release date, score
    sql = "INSERT INTO Top100(`片名`,`主演`,`上映时间`,`评分`) VALUES (%s, %s, %s, %s)"
    for row in rows:
        try:
            cur.execute(sql, row)  # parameters are escaped by pymysql
            conn.commit()
        except pymysql.MySQLError:
            conn.rollback()


if __name__ == '__main__':
    # Connect to the database
    conn = pymysql.connect(host="localhost",  # 127.0.0.1 = this machine
                           user="root", password="123456",
                           database="maoYan",
                           port=3306,  # must be an int
                           charset="utf8mb4")
    print("Database connected!")
    cur = conn.cursor()  # cursor for executing SQL statements
    try:
        # Fetch the Top 100 board: 10 pages of 10 movies, offset = 0, 10, ..., 90
        for page in range(10):
            top("https://maoyan.com/board/4?offset=" + str(page * 10), conn, cur)
    finally:
        conn.close()
```

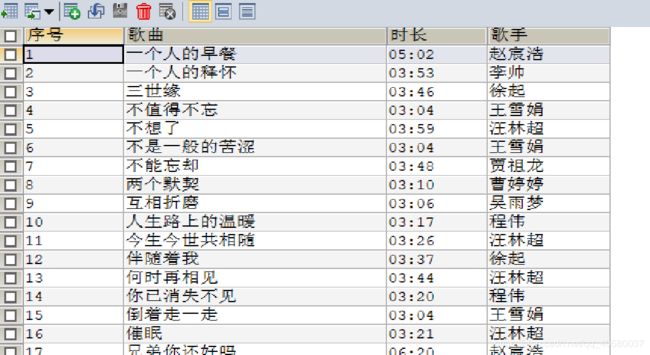

(部分)结果展示:
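If you pass scraped values to the driver as query parameters, a title that contains a quote cannot break the statement, and the query is closed to SQL injection. Pasting the values into the SQL text with Python's `%` operator gives neither guarantee. The sketch below uses the standard-library `sqlite3` module as a stand-in for MySQL, so it runs without a server. With `pymysql` the idea is the same, but the placeholder is `%s` rather than `?`. The table mirrors the Top100 columns, and the sample row is made up, not scraped data.

```python
import sqlite3

# Hypothetical sample row (not scraped data); the title contains a single quote.
SAMPLE = ("It's a Test", "Actor A", "2000-01-01", "9.0")


def make_db():
    """Create an in-memory stand-in for the Top100 table (sqlite3, not MySQL)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Top100(`片名` TEXT, `主演` TEXT, `上映时间` TEXT, `评分` TEXT)")
    return conn


def insert_formatted(conn, row):
    """String formatting: the quote in the title ends the SQL literal early."""
    sql = "INSERT INTO Top100 VALUES('%s','%s','%s','%s')" % row
    conn.execute(sql)


def insert_parameterized(conn, rows):
    """Placeholders: values are sent separately from the SQL text."""
    sql = "INSERT INTO Top100(`片名`,`主演`,`上映时间`,`评分`) VALUES (?, ?, ?, ?)"
    with conn:  # commits on success, rolls back if an exception escapes
        conn.executemany(sql, rows)


if __name__ == "__main__":
    conn = make_db()
    try:
        insert_formatted(conn, SAMPLE)
    except sqlite3.OperationalError as exc:
        print("formatted insert failed:", exc)
    insert_parameterized(conn, [SAMPLE])
    print(conn.execute("SELECT `片名` FROM Top100").fetchall())
```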

2. Most Anticipated board

```python
import pymysql
import requests
from bs4 import BeautifulSoup

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/"
                  "537.36 (KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"
}
TIMEOUT = 15  # seconds
RETRIES = 3


def get_html(url):
    """Return the page HTML, retrying a few times; None if every attempt fails."""
    for _ in range(RETRIES):
        try:
            resp = requests.get(url, headers=HEADERS, timeout=TIMEOUT)
        except requests.RequestException as exc:
            print("Request failed:", exc)
            continue
        if resp.status_code == 200:
            resp.encoding = "utf-8"
            return resp.text
        print("Error! Retrying:", url)
    return None


def expect(url, conn, cur):
    html = get_html(url)
    if html is None:
        return
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for div in soup.find_all("div", attrs={"class": "movie-item-info"}):
        p = div.find_all("p")  # returns a list
        if len(p) >= 3:  # title, stars, release date
            stars, release = p[1].get_text(strip=True), p[2].get_text(strip=True)
        else:  # no cast listed: title, release date
            stars, release = "None", p[1].get_text(strip=True)
        rows.append((p[0].get_text(strip=True), stars, release))
    # The want-to-see counts are in div.movie-item-number.wish; they are not stored here.

    # Store in the database; columns: title, stars, release date
    sql = "INSERT INTO expect(`片名`,`主演`,`上映时间`) VALUES (%s, %s, %s)"
    for row in rows:
        try:
            cur.execute(sql, row)
            conn.commit()
        except pymysql.MySQLError:
            conn.rollback()


if __name__ == '__main__':
    # Connect to the database
    conn = pymysql.connect(host="localhost",  # 127.0.0.1 = this machine
                           user="root", password="123456",
                           database="maoYan",
                           port=3306,  # must be an int
                           charset="utf8mb4")
    print("Database connected!")
    cur = conn.cursor()  # cursor for executing SQL statements
    try:
        # Fetch the board page by page: offset = 0, 10, ..., 50
        for page in range(6):
            expect("https://maoyan.com/board/6?offset=" + str(page * 10), conn, cur)
    finally:
        conn.close()
```
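On the Most Anticipated board, some entries have no cast line. Their `<p>` tags are then [title, release date] instead of [title, stars, release date]. The sketch below writes that mapping as a pure function, so you can check it without requesting the site. The name `split_item` is hypothetical. Storing the string "None" for a missing cast follows the value the scraper writes to the table.

```python
def split_item(texts):
    """Map the <p> texts of one board entry to (title, stars, release).

    Assumed layouts: [title, stars, release] normally, or [title, release]
    when no cast is listed; the missing cast becomes the string "None".
    """
    if len(texts) >= 3:
        title, stars, release = texts[0], texts[1], texts[2]
    elif len(texts) == 2:
        title, stars, release = texts[0], "None", texts[1]
    else:
        raise ValueError(f"unexpected entry with {len(texts)} field(s): {texts!r}")
    return title.strip(), stars.strip(), release.strip()


if __name__ == "__main__":
    # Hypothetical inputs, not scraped data
    print(split_item(["Film A", "主演:X,Y", "上映时间:2020-01-01"]))
    print(split_item(["Film B", "上映时间:2021-05-01"]))
```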

3. Films showing at a specified cinema

```python
import pymysql
import requests
from bs4 import BeautifulSoup

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"
}
TIMEOUT = 15  # seconds
RETRIES = 3


def get_html(url):
    """Return the page HTML, retrying a few times; None if every attempt fails."""
    for _ in range(RETRIES):
        try:
            resp = requests.get(url, headers=HEADERS, timeout=TIMEOUT)
        except requests.RequestException as exc:
            print("Request failed:", exc)
            continue
        if resp.status_code == 200:
            resp.encoding = "utf-8"
            return resp.text
        print("Error! Retrying:", url)
    return None


def cinemas(url, conn, cur):
    html = get_html(url)
    if html is None:
        return
    soup = BeautifulSoup(html, "html.parser")
    info_divs = soup.find_all("div", class_="movie-info")
    desc_divs = soup.find_all("div", class_="movie-desc")
    date_divs = soup.find_all("div", class_="show-date")

    rows = []
    for info, desc, show in zip(info_divs, desc_divs, date_divs):
        title = info.h3.get_text(strip=True)
        score = info.span.get_text(strip=True)  # rating, or want-to-see count
        spans = desc.find_all("span")  # label/value pairs: duration, genre, stars
        length = spans[0].get_text() + spans[1].get_text()
        genre = spans[2].get_text() + spans[3].a.get_text()
        # Some films list no cast
        stars = spans[4].get_text() + spans[5].get_text() if len(spans) >= 6 else "None"
        # Show date: two or three <span> pieces joined together
        date = "".join(s.get_text() for s in show.find_all("span")[:3])
        rows.append((title, score, length, genre, stars, date))

    # Columns: title, score/want-to-see count, duration, genre, stars, show date
    sql = ("INSERT INTO cinema(`片名`,`评分/想看人数`,`时长`,`类型`,`主演`,`观影时间`) "
           "VALUES (%s, %s, %s, %s, %s, %s)")
    for row in rows:
        try:
            cur.execute(sql, row)
            conn.commit()
        except pymysql.MySQLError:
            conn.rollback()


if __name__ == '__main__':
    # Connect to the database
    conn = pymysql.connect(host="localhost",  # 127.0.0.1 = this machine
                           user="root", password="123456",
                           database="maoYan",
                           port=3306,  # must be an int
                           charset="utf8mb4")
    print("Database connected!")
    cur = conn.cursor()  # cursor for executing SQL statements
    try:
        # Fetch the page of one specific cinema
        cinemas("https://maoyan.com/cinema/1065?poi=52804", conn, cur)
    finally:
        conn.close()
```
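If a request keeps failing, retrying by calling the scraping function again has no upper bound. It can recurse until Python's recursion limit is reached. The sketch below caps the number of attempts and waits a little longer before each retry. `fetch_with_retry` is a hypothetical helper, not part of `requests`. `fetch` stands for any zero-argument callable that returns the page text on success and `None` otherwise.

```python
import time
from typing import Callable, Optional


def fetch_with_retry(fetch: Callable[[], Optional[str]],
                     attempts: int = 3,
                     delay: float = 1.0) -> Optional[str]:
    """Call fetch() up to `attempts` times and return the first non-None result.

    Sleeps delay, 2*delay, ... seconds between attempts (linear backoff).
    Returns None if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        result = fetch()
        if result is not None:
            return result
        if attempt < attempts:
            time.sleep(delay * attempt)
    return None


if __name__ == "__main__":
    # Simulated source that fails twice and then succeeds (no network needed)
    outcomes = iter([None, None, "<html>...</html>"])
    print(fetch_with_retry(lambda: next(outcomes), attempts=3, delay=0.1))
```

To use it with `requests`, `fetch` could be a small function that calls `requests.get(url, headers=..., timeout=15)`. It would return `resp.text` when `resp.status_code == 200`, and `None` otherwise.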

4. Music chart

```python
import pymysql
import requests
from bs4 import BeautifulSoup

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_2) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/79.0.3945.88 Safari/537.36"
}
TIMEOUT = 15  # seconds
RETRIES = 3


def get_html(url):
    """Return the page HTML, retrying a few times; None if every attempt fails."""
    for _ in range(RETRIES):
        try:
            resp = requests.get(url, headers=HEADERS, timeout=TIMEOUT)
        except requests.RequestException as exc:
            print("Request failed:", exc)
            continue
        if resp.status_code == 200:
            resp.encoding = "utf-8"
            return resp.text
        print("Error! Retrying:", url)
    return None


def musics(url, conn, cur):
    html = get_html(url)
    if html is None:
        return
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for div in soup.find_all("div", class_="music-box"):
        cells = div.find_all("td")
        # Each song takes 5 <td> cells; keep the first four: rank, song, duration, singer.
        # Most pages hold 10 songs but the last page holds 9, so use as many as exist.
        for start in range(0, len(cells) - 3, 5):
            rows.append(tuple(c.get_text(strip=True) for c in cells[start:start + 4]))
    print([row[1] for row in rows])

    # Columns: rank, song, duration, singer
    sql = "INSERT INTO music(`序号`,`歌曲`,`时长`,`歌手`) VALUES (%s, %s, %s, %s)"
    for row in rows:
        try:
            cur.execute(sql, row)
            conn.commit()
        except pymysql.MySQLError:
            conn.rollback()


if __name__ == '__main__':
    # Connect to the database
    conn = pymysql.connect(host="localhost",  # 127.0.0.1 = this machine
                           user="root", password="123456",
                           database="maoYan",
                           port=3306,  # must be an int
                           charset="utf8mb4")
    print("Database connected!")
    cur = conn.cursor()  # cursor for executing SQL statements
    try:
        # Fetch the chart page by page: offset = 0, 10, ..., 130
        for page in range(14):
            musics("https://maoyan.com/news?showTab=5&offset=" + str(page * 10), conn, cur)
    finally:
        conn.close()
```
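The music table is read as one flat list of `<td>` cells, five per song. Only the first four (rank, song, duration, singer) are kept, and the last page has 9 songs instead of 10. The sketch below shows that grouping on plain strings, so it needs no network. `cells_to_rows` and its parameters are hypothetical names. It handles any number of complete rows rather than special-casing 9 versus 10.

```python
def cells_to_rows(cells, width=5, keep=4):
    """Group a flat list of <td> texts into per-song tuples.

    Assumes each song spans `width` consecutive cells and that the first `keep`
    are rank, song, duration and singer. An incomplete trailing group is dropped.
    """
    rows = []
    for start in range(0, len(cells), width):
        row = cells[start:start + keep]
        if len(row) < keep:
            break
        rows.append(tuple(row))
    return rows


if __name__ == "__main__":
    # Hypothetical cell texts: 9 songs x 5 cells, like the last page of the chart
    cells = [f"cell{i}" for i in range(45)]
    rows = cells_to_rows(cells)
    print(len(rows), rows[0])
```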