Deploying redis-sentinel and redis-cluster with docker (single-host edition)

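The goal of this article is not to introduce redis or its high-availability options. Instead, it walks through a simple installation of each option so that readers can get a feel for them in a real cluster environment. People interested in redis often run into the same problem: they do not have enough virtual machines, so setting up the various cluster topologies on their own is a struggle, and using the official installation packages is tedious. This article therefore uses docker-compose to bring redis up quickly. The only prerequisite is basic familiarity with docker and docker-compose.

Without further ado, let's get started. Everything below was done on a single virtual machine of mine (192.168.174.129); when following along, replace the IPs in the configuration with your own.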

I. A single redis instance

Create /home/ubuntu/redis/standalone/docker-compose.yml with the following content:

version: '2'
services:
  redis:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6379:6379

$: cd /home/ubuntu/redis/standalone/

$: docker-compose up -d

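That's it: a redis instance is up and running, it really is that easy. You can now point your redis client at port 6379 on 192.168.174.129 and start using redis. For a quick check, run the following command, again from the /home/ubuntu/redis/standalone directory:

$: docker-compose exec redis redis-cli

This opens the redis-cli prompt, where you can try out any redis command.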
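For a scripted smoke test instead of an interactive session, something like the following could be used. It is only a sketch: it assumes a redis-cli binary is installed on the host, and the `REDIS_CLI` variable and the `wait_for_redis` function are names made up for this example. It relies on the standard PING command, which a healthy instance answers with PONG.

```shell
# Hypothetical helper: poll a redis instance until it answers PING.
# REDIS_CLI is an assumption of this sketch and can point at any redis-cli.
REDIS_CLI=${REDIS_CLI:-redis-cli}

wait_for_redis() {
  host=$1
  port=$2
  tries=${3:-10}          # number of attempts, one second apart
  while [ "$tries" -gt 0 ]; do
    if [ "$($REDIS_CLI -h "$host" -p "$port" ping 2>/dev/null)" = "PONG" ]; then
      echo "redis at $host:$port is ready"
      return 0
    fi
    tries=$(( tries - 1 ))
    sleep 1
  done
  echo "redis at $host:$port did not answer" >&2
  return 1
}

# Usage (with the address used in this article):
#   wait_for_redis 192.168.174.129 6379
```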

II. redis with one master and several slaves (read/write splitting)

Create /home/ubuntu/redis/repl/docker-compose.yml with the following content:

version: '2'
services:
  master:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6380:6379
  slave-1:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6381:6379
    command: ["redis-server","--slaveof","192.168.174.129","6380"]
  slave-2:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6382:6379
    command: ["redis-server","--slaveof","192.168.174.129","6380"]
  slave-3:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6383:6379
    command: ["redis-server","--slaveof","192.168.174.129","6382"]

Note: slave-3 is a slave of slave-2, while slave-2 and slave-1 are slaves of master. The example is arranged this way to show a nested, tree-shaped replication structure.
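To confirm that the tree-shaped topology actually formed, the INFO replication output of each instance can be inspected. The sketch below assumes redis-cli is available on the host; `REDIS_CLI` and `show_replication` are illustrative names, not part of redis.

```shell
# REDIS_CLI is an assumption of this sketch; override it to use another client binary.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# Print role, upstream master and slave count for each given port.
show_replication() {
  host=$1
  shift
  for port in "$@"; do
    echo "== $host:$port"
    # redis-cli output uses CRLF line endings, so strip the \r first
    $REDIS_CLI -h "$host" -p "$port" info replication |
      tr -d '\r' |
      grep -E '^(role|master_host|master_port|master_link_status|connected_slaves):'
  done
}

# Usage:
#   show_replication 192.168.174.129 6380 6381 6382 6383
```

If replication is working, 6380 should report role:master with two connected slaves, and 6382 should report role:slave while itself having one connected slave (slave-3).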

III. redis-sentinel

Create /home/ubuntu/redis/sentinel/docker-compose.yml with the following content:

version: '2'
services:
  repl-0:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6380:6379
    networks:
      - sentinel
    command: ["redis-server","--replica-announce-ip","192.168.174.129","--replica-announce-port","6380"]
  repl-1:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6381:6379
    networks:
      - sentinel
    command: ["redis-server","--slaveof","repl-0","6379","--replica-announce-ip","192.168.174.129","--replica-announce-port","6381"]
  repl-2:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6382:6379
    networks:
      - sentinel
    command: ["redis-server","--slaveof","repl-0","6379","--replica-announce-ip","192.168.174.129","--replica-announce-port","6382"]
  repl-3:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    ports:
      - 6383:6379
    networks:
      - sentinel
    command: ["redis-server","--slaveof","repl-2","6379","--replica-announce-ip","192.168.174.129","--replica-announce-port","6383"]
  sentinel-1:
    build: ./build
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    networks:
      - sentinel
    ports:
      - 26380:26379
    command: ["redis-server","/data/sentinel.conf","--sentinel","announce-ip","192.168.174.129","--sentinel","announce-port","26380"]
  sentinel-2:
    build: ./build
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    networks:
      - sentinel
    ports:
      - 26381:26379
    command: ["redis-server","/data/sentinel.conf","--sentinel","announce-ip","192.168.174.129","--sentinel","announce-port","26381"]
  sentinel-3:
    build: ./build
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
    networks:
      - sentinel
    ports:
      - 26382:26379
    command: ["redis-server","/data/sentinel.conf","--sentinel","announce-ip","192.168.174.129","--sentinel","announce-port","26382"]
networks:
  sentinel:
    driver: bridge

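Note: compared with a non-docker setup, we added the announce-ip and announce-port options. By default, the master and slaves, as well as the sentinel instances, advertise the IPs that docker assigned to them inside the container network. Those addresses are only meaningful inside that network, so connections can fail when the master is switched or when the sentinels talk to each other. Make sure to add these options.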

Also create a Dockerfile and a sentinel.conf in the /home/ubuntu/redis/sentinel/build directory.

Dockerfile:

FROM redis:5.0-rc-alpine
COPY ./sentinel.conf /data/sentinel.conf
EXPOSE 26379

sentinel.conf:

sentinel monitor mymaster 192.168.174.129 6380 2
sentinel down-after-milliseconds mymaster 10000
sentinel failover-timeout mymaster 180000
sentinel parallel-syncs mymaster 1
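Once the stack has been started with docker-compose up -d from /home/ubuntu/redis/sentinel, each sentinel can be asked which master it currently follows; SENTINEL get-master-addr-by-name is the standard command for that. The following is a sketch that assumes redis-cli is installed on the host; `REDIS_CLI` and `current_master` are names invented for this example.

```shell
# REDIS_CLI is an assumption of this sketch; override it to use another client binary.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# Ask every listed sentinel for the address of the master it monitors as "$name".
current_master() {
  host=$1
  name=$2
  shift 2
  for port in "$@"; do
    # the reply is two lines (ip, port); join them into one
    addr=$($REDIS_CLI -h "$host" -p "$port" sentinel get-master-addr-by-name "$name" | tr -d '\r' | tr '\n' ' ')
    echo "sentinel $port -> ${addr% }"
  done
}

# Usage:
#   current_master 192.168.174.129 mymaster 26380 26381 26382
# To watch a failover, stop the master from the sentinel directory:
#   docker-compose stop repl-0
# wait longer than down-after-milliseconds (10 s here), then run current_master again.
```

Before a failover all three sentinels should answer with 192.168.174.129 6380, the address given in sentinel.conf; afterwards they should agree on the announced address of one of the slaves.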

IV. redis-cluster

Create /home/ubuntu/redis/cluster/docker-compose.yml with the following content:

version: '2'
services:
  cluster-a1:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6371.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]
  cluster-a2:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6372.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]
  cluster-b1:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6381.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]
  cluster-b2:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6382.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]
  cluster-c1:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6391.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]
  cluster-c2:
    image: redis:5.0-rc-alpine
    restart: always
    volumes:
      - "/etc/timezone:/etc/timezone:ro"
      - "/etc/localtime:/etc/localtime:ro"
      - "./conf.d/cluster-6392.conf:/data/redis.conf"
    network_mode: host
    command: ["redis-server","/data/redis.conf"]

Also create cluster-6371.conf, cluster-6372.conf, cluster-6381.conf, cluster-6382.conf, cluster-6391.conf and cluster-6392.conf in the /home/ubuntu/redis/cluster/conf.d directory. The six files differ only in the port on the first line, which matches the number in the file name. For example, here is cluster-6371.conf:

port 6371
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes.conf"
pidfile /var/run/redis.pid
logfile "cluster.log"
dbfilename dump-cluster.rdb
appendfilename "appendonly-cluster.aof"

Next, start all the redis instances by running the following in the /home/ubuntu/redis/cluster directory:

$: docker-compose up -d

-- check the status of the containers

$: docker-compose ps
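Because the six files in conf.d differ only in their port, they could be generated with a small loop instead of being written by hand, before the containers are started. This is a sketch that assumes it is run from /home/ubuntu/redis/cluster; `CONF_DIR` is a variable name made up for this example.

```shell
# Generate the six cluster config files; only the port differs between them.
# CONF_DIR is an assumption of this sketch (defaults to ./conf.d).
CONF_DIR=${CONF_DIR:-./conf.d}
mkdir -p "$CONF_DIR"

for port in 6371 6372 6381 6382 6391 6392; do
  cat > "$CONF_DIR/cluster-$port.conf" <<EOF
port $port
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes.conf"
pidfile /var/run/redis.pid
logfile "cluster.log"
dbfilename dump-cluster.rdb
appendfilename "appendonly-cluster.aof"
EOF
done

# List what was written
ls "$CONF_DIR"
```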

(1) Join all instances to the cluster

$: docker-compose exec cluster-c1 redis-cli -p 6381 -h 192.168.174.129

This opens the redis client prompt:

192.168.174.129:6381> cluster meet 192.168.174.129 6371

192.168.174.129:6381> cluster meet 192.168.174.129 6372

192.168.174.129:6381> cluster meet 192.168.174.129 6381

192.168.174.129:6381> cluster meet 192.168.174.129 6382

192.168.174.129:6381> cluster meet 192.168.174.129 6391

192.168.174.129:6381> cluster meet 192.168.174.129 6392

Check the result:

192.168.174.129:6381> cluster nodes

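Note 1: the first column of the cluster nodes output is the node_id, which is needed in step (3). A node_id looks like 16a7e8a557ba01615c5b15e967c769f983913b85; each instance generates its own, so the values in your environment will differ from the ones in this article.

Note 2: at this point all six nodes are masters; no master/slave relationships have been assigned yet. See step (3) for that.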
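The cluster meet calls from step (1) can also be issued non-interactively in a loop. The sketch below assumes redis-cli is installed on the host (as mentioned at the end of this section, the copy inside any of the containers works just as well); `REDIS_CLI` and `meet_all` are hypothetical names.

```shell
# REDIS_CLI is an assumption of this sketch; override it to use another client binary.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# Introduce every listed port to the seed node with CLUSTER MEET.
meet_all() {
  host=$1
  seed_port=$2
  shift 2
  for port in "$@"; do
    [ "$port" = "$seed_port" ] && continue   # the seed does not need to meet itself
    $REDIS_CLI -h "$host" -p "$seed_port" cluster meet "$host" "$port"
  done
}

# Usage:
#   meet_all 192.168.174.129 6381 6371 6372 6381 6382 6391 6392
```

Only one node has to be told about the others; the nodes then exchange what they know among themselves, which is why step (1) runs every cluster meet from the same prompt.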

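(2) Assign the hash slots

All six configured nodes have now joined the cluster, but it cannot be used yet because the 16384 slots have not been assigned to any node. To assign them, use redis-cli to connect to the nodes on ports 6371, 6381 and 6391 and run the following commands, one per node: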

$: docker-compose exec cluster-c1 redis-cli -p 6371 -h 192.168.174.129 cluster addslots {0..5000}

$: docker-compose exec cluster-c1 redis-cli -p 6381 -h 192.168.174.129 cluster addslots {5001..10000}

$: docker-compose exec cluster-c1 redis-cli -p 6391 -h 192.168.174.129 cluster addslots {10001..16383}

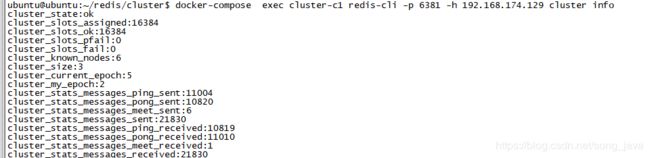

-- check the current state of the cluster

$: docker-compose exec cluster-c1 redis-cli -p 6381 -h 192.168.174.129 cluster info
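A note on the addslots commands above: {0..5000} is bash brace expansion, so it is the local shell, not redis-cli, that turns it into the list 0 1 2 ... 5000 before the command runs. The ranges used there are slightly uneven, which is fine for testing. If an even split is preferred, the boundaries can be computed as in the sketch below; `slot_range` and `TOTAL_SLOTS` are names made up for this example, and this is just one of several reasonable ways to divide the slots.

```shell
TOTAL_SLOTS=16384

# Print "start end" for master number idx (0-based) out of count masters.
slot_range() {
  idx=$1
  count=$2
  start=$(( idx * TOTAL_SLOTS / count ))
  end=$(( (idx + 1) * TOTAL_SLOTS / count - 1 ))
  echo "$start $end"
}

i=0
for port in 6371 6381 6391; do
  echo "$port: $(slot_range "$i" 3)"
  i=$(( i + 1 ))
done
# prints:
#   6371: 0 5460
#   6381: 5461 10921
#   6391: 10922 16383
```

The three ranges cover all 16384 slots without gaps or overlap, which is the condition the cluster needs before cluster info reports cluster_state:ok.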

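(3) Set up the master/slave relationships

With the steps above, the 16384 slots have been assigned to the redis instances on ports 6371, 6381 and 6391, so those three instances must be masters. Assign at least one slave to each master with the command cluster replicate [master_node_id]. The node IDs below come from my environment; use the IDs that cluster nodes shows for the corresponding masters in yours.

-- make the instance on 6372 a slave of 6371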

$: docker-compose exec cluster-c1 redis-cli -p 6372 -h 192.168.174.129 cluster replicate 16a7e8a557ba01615c5b15e967c769f983913b85

-- make the instance on 6382 a slave of 6381

$: docker-compose exec cluster-c1 redis-cli -p 6382 -h 192.168.174.129 cluster replicate 3921bc89204a900360de770fe42f931787431e7c

-- make the instance on 6392 a slave of 6391

$: docker-compose exec cluster-c1 redis-cli -p 6392 -h 192.168.174.129 cluster replicate fad1e9b5115c876dbbaf3ba4c500ddfca0508065

-- check the current master/slave relationships between the nodes

$: docker-compose exec cluster-c1 redis-cli -p 6382 -h 192.168.174.129 cluster nodes
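Copying node IDs by hand is error-prone, so the ID of a master could instead be looked up by its port in the cluster nodes output. The sketch below assumes the redis 5 line format, in which the second field looks like ip:port@cport; `node_id_for_port` is a hypothetical helper name.

```shell
# Read `cluster nodes` output on stdin and print the node_id listening on the given port.
node_id_for_port() {
  awk -v port="$1" '{
    split($2, addr, /[:@]/)      # field 2 looks like ip:port@cport
    if (addr[2] == port) print $1
  }'
}

# Usage (assumes redis-cli on the host):
#   master_id=$(redis-cli -h 192.168.174.129 -p 6371 cluster nodes | node_id_for_port 6371)
#   redis-cli -h 192.168.174.129 -p 6372 cluster replicate "$master_id"
```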

(4) Connect to the cluster with redis-cli

$: docker-compose exec cluster-c1 redis-cli -p 6382 -h 192.168.174.129 -c

This opens the redis-cli console:

192.168.174.129:6382> get k1


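You will see the request being redirected to the instance on 6391. You can also try stopping one of the masters and watch whether its slave is automatically promoted to master.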
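Why 6391? A cluster maps every key to slot CRC16(key) mod 16384 (the XMODEM variant of CRC16), and the slot decides which master owns the key. The sketch below reimplements that calculation in POSIX shell so it can be tried without a cluster. The function names `keyslot` and `slot_owner` are made up for this example, the port ranges are the ones assigned in step (2), and hash tags (braces inside key names) are deliberately ignored, so it is only valid for keys without braces.

```shell
# CRC16/XMODEM (polynomial 0x1021, initial value 0) of the key, modulo 16384.
keyslot() {
  crc=0
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    j=0
    while [ "$j" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      j=$(( j + 1 ))
    done
  done
  echo $(( crc % 16384 ))
}

# Map a slot to the master port, using the ranges assigned in step (2).
slot_owner() {
  if [ "$1" -le 5000 ]; then echo 6371
  elif [ "$1" -le 10000 ]; then echo 6381
  else echo 6391
  fi
}

slot=$(keyslot k1)
echo "k1 -> slot $slot -> port $(slot_owner "$slot")"
# prints: k1 -> slot 12706 -> port 6391
```

Slot 12706 lies in the range 10001..16383 that was given to 6391, which matches the redirect. Running cluster keyslot k1 in redis-cli is a quick way to cross-check the number.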

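There are many more redis-cluster features that can be tested on this cluster, so go ahead and try them out.

Note: attentive readers will have noticed that every redis-cli call above goes through "docker-compose exec cluster-c1 redis-cli". Any other redis instance works just as well, for example "docker-compose exec cluster-a2 redis-cli"; the container is only used for the redis-cli binary that ships with it. If redis-cli is already installed locally, you can use it directly.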

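V. Summary

There are plenty of excellent articles online that cover redis master/slave replication, sentinel high availability and redis-cluster in great detail, so I will not repeat them here and will only share my own, not fully mature, understanding. Master/slave replication enables read/write splitting, but there is no built-in mechanism to elect a new master when the master goes down, so redis can no longer accept writes. That is what sentinel addresses: when the master fails, sentinel promotes one of the slaves to replace it. Sentinel therefore improves availability, but it does not solve horizontal scaling: when the master runs out of memory, there is no ready-made way to expand other than adding memory to the machine. That is why redis-cluster was introduced. Cluster uses hash slots so that each master is responsible for only part of the slots, and each master can have one or more slaves as data backups; if a master fails, one of its slaves takes over.

Neither sentinel nor cluster makes read/write splitting easy out of the box. Slaves mainly act as backups and, by default, do not take read load off the master; in cluster mode, for instance, a slave redirects reads to its master unless the client has sent READONLY on that connection. In addition, because redis replication is asynchronous, strong consistency is not guaranteed.

Here are some good posts for reference: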

https://my.oschina.net/zhangxufeng/blog/905611

https://dbaplus.cn/news-158-2182-1.html

redis image: https://hub.docker.com/_/redis

Official site: https://redis.io/

Chinese-language documentation: http://www.redis.cn/

For installing docker, see https://blog.csdn.net/song_java/article/details/88061162