Caused by: java.io.IOException: Lease timeout of 0 seconds expired.

2017-07-09 10:33:07.040 [pool-2-thread-9] ERROR com.bonree.browser.util.GenerateParquet - can not write PageHeader(type:DICTIONARY_PAGE, uncompressed_page_size:34, compressed_page_size:34, dictionary_page_header:DictionaryPageHeader(num_values:2, encoding:PLAIN_DICTIONARY))

java.io.IOException: can not write PageHeader(type:DICTIONARY_PAGE, uncompressed_page_size:34, compressed_page_size:34, dictionary_page_header:DictionaryPageHeader(num_values:2, encoding:PLAIN_DICTIONARY))

at org.apache.parquet.format.Util.write(Util.java:224) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at org.apache.parquet.format.Util.writePageHeader(Util.java:61) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at org.apache.parquet.format.converter.ParquetMetadataConverter.writeDictionaryPageHeader(ParquetMetadataConverter.java:732) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.ParquetFileWriter.writeDictionaryPage(ParquetFileWriter.java:238) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.ColumnChunkPageWriteStore$ColumnChunkPageWriter.writeToFileWriter(ColumnChunkPageWriteStore.java:179) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.ColumnChunkPageWriteStore.flushToFileWriter(ColumnChunkPageWriteStore.java:238) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.InternalParquetRecordWriter.flushRowGroupToStore(InternalParquetRecordWriter.java:155) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.InternalParquetRecordWriter.close(InternalParquetRecordWriter.java:113) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at org.apache.parquet.hadoop.ParquetWriter.close(ParquetWriter.java:267) ~[parquet-hadoop-1.7.0.jar:1.7.0]

at com.bonree.browser.util.GenerateParquet.closeAjaxWriter(GenerateParquet.java:334) ~[classes/:na]

at com.bonree.browser.business.ConsumeFileBusiness.close(ConsumeFileBusiness.java:280) [classes/:na]

at com.bonree.browser.business.ConsumeFileBusiness.run(ConsumeFileBusiness.java:148) [classes/:na]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471) [na:1.7.0_79]

at java.util.concurrent.FutureTask.run(FutureTask.java:262) [na:1.7.0_79]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [na:1.7.0_79]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [na:1.7.0_79]

at java.lang.Thread.run(Thread.java:745) [na:1.7.0_79]

Caused by: parquet.org.apache.thrift.transport.TTransportException: java.io.IOException: Lease timeout of 0 seconds expired.

at parquet.org.apache.thrift.transport.TIOStreamTransport.write(TIOStreamTransport.java:147) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at parquet.org.apache.thrift.transport.TTransport.write(TTransport.java:105) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at parquet.org.apache.thrift.protocol.TCompactProtocol.writeByteDirect(TCompactProtocol.java:424) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at parquet.org.apache.thrift.protocol.TCompactProtocol.writeByteDirect(TCompactProtocol.java:431) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at parquet.org.apache.thrift.protocol.TCompactProtocol.writeFieldBeginInternal(TCompactProtocol.java:194) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at parquet.org.apache.thrift.protocol.TCompactProtocol.writeFieldBegin(TCompactProtocol.java:176) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at org.apache.parquet.format.InterningProtocol.writeFieldBegin(InterningProtocol.java:74) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at org.apache.parquet.format.PageHeader.write(PageHeader.java:918) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

at org.apache.parquet.format.Util.write(Util.java:222) ~[parquet-format-2.3.0-incubating.jar:2.3.0-incubating]

... 16 common frames omitted

Caused by: java.io.IOException: Lease timeout of 0 seconds expired.

at org.apache.hadoop.hdfs.DFSOutputStream.abort(DFSOutputStream.java:2063) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.DFSClient.closeAllFilesBeingWritten(DFSClient.java:871) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.DFSClient.renewLease(DFSClient.java:825) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.LeaseRenewer.renew(LeaseRenewer.java:417) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.LeaseRenewer.run(LeaseRenewer.java:442) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.LeaseRenewer.access$700(LeaseRenewer.java:71) ~[hadoop-hdfs-2.5.2.jar:na]

at org.apache.hadoop.hdfs.LeaseRenewer$1.run(LeaseRenewer.java:298) ~[hadoop-hdfs-2.5.2.jar:na]

... 1 common frames omitted
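The trace above nests three exceptions, and the one that matters sits at the bottom of the chain. As a minimal sketch (the class and method names are my own, and RuntimeException merely stands in for Thrift's TTransportException so the example needs only the JDK), application code could walk the cause chain to recognize a lease timeout:

```java
import java.io.IOException;

class RootCauseDemo {
    /** Follows getCause() links until the innermost exception is reached. */
    static Throwable rootCause(Throwable t) {
        Throwable current = t;
        // Stop on a null cause, or on a self-referencing cause, to avoid looping forever.
        while (current.getCause() != null && current.getCause() != current) {
            current = current.getCause();
        }
        return current;
    }

    /** True when the innermost message looks like the HDFS lease-expiry error. */
    static boolean isLeaseTimeout(Throwable t) {
        String msg = rootCause(t).getMessage();
        return msg != null && msg.contains("Lease timeout");
    }

    public static void main(String[] args) {
        // Rebuild the three-level chain from the trace with plain JDK exceptions.
        IOException lease = new IOException("Lease timeout of 0 seconds expired.");
        RuntimeException transport = new RuntimeException(lease); // stand-in for TTransportException
        IOException top = new IOException("can not write PageHeader", transport);

        System.out.println(rootCause(top).getMessage());
        System.out.println(isLeaseTimeout(top));
    }
}
```

Matching on the message text is fragile, so treat this as a diagnostic aid rather than something to build control flow on.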

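Taken literally, the message says that the lease on a file operation has expired. In practice it means the file was deleted while a data stream was still operating on it. I have run into this before; the usual cause is several MapReduce tasks working on the same file, with one task deleting the file as soon as it finishes.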

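This time, however, the exception occurred while uploading a file to HDFS, and the upload failed. A quick search turned up reports that the error is related to the dfs.datanode.max.xcievers parameter hitting its limit. This parameter caps the number of requests a datanode handles concurrently; the default is 256, and our cluster had it set to 2048. So I scanned the logs on every datanode and, sure enough, found this IOException: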

java.io.IOException: xceiverCount 2049 exceeds the limit of concurrent xcievers 2048
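Scanning every datanode log by hand gets tedious. Here is a small sketch, assuming the message format is exactly the one quoted above, that pulls the current count and the configured limit out of a log line. The class and method names are hypothetical and not part of Hadoop.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class XceiverLogScanner {
    // Matches the message text quoted above; "xcievers" is spelled as in the log.
    private static final Pattern LIMIT_EXCEEDED = Pattern.compile(
        "xceiverCount (\\d+) exceeds the limit of concurrent xcievers (\\d+)");

    /** Returns {count, limit} if the line reports an exceeded xceiver limit. */
    static Optional<int[]> parse(String line) {
        Matcher m = LIMIT_EXCEEDED.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new int[] {
            Integer.parseInt(m.group(1)), Integer.parseInt(m.group(2))});
    }

    public static void main(String[] args) {
        String line = "java.io.IOException: xceiverCount 2049 exceeds the limit"
            + " of concurrent xcievers 2048";
        parse(line).ifPresent(r ->
            System.out.println("count=" + r[0] + " limit=" + r[1]));
    }
}
```

Feeding each line of a datanode log through parse() and counting the hits gives a quick picture of which nodes are saturated.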

Searching the source code for xcievers turns up two classes, DataXceiver and DataXceiverServer. DataXceiver is a thread started by DataXceiverServer to handle incoming and outgoing data streams. Its run() method contains the following check:

public void run() {

…

int curXceiverCount = datanode.getXceiverCount();

if (curXceiverCount > dataXceiverServer.maxXceiverCount) {

throw new IOException("xceiverCount " + curXceiverCount

+ " exceeds the limit of concurrent xcievers "

+ dataXceiverServer.maxXceiverCount);

}

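Back to the stack trace: once the xceiver count exceeds the limit, the datanode throws an IOException. On the DFSClient side this shows up as the file being written no longer responding, which ends in the lease-timeout exception shown at the top.

Solution:

Raise the xceiver limit (dfs.datanode.max.xcievers) further, to 8192, and restart the cluster for the change to take effect.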

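dfs.datanode.max.xcievers (default value: 256):

For a datanode, dfs.datanode.max.xcievers plays much the same role as the file-handle limit on Linux: once the number of connections on the datanode exceeds the configured value, the datanode refuses further connections.

This parameter is usually set very high, on the order of 40,000 or more.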

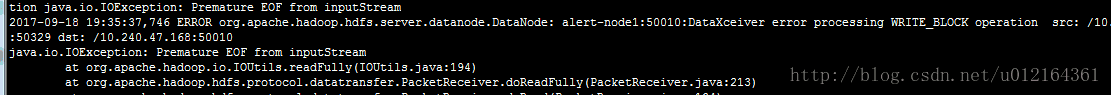

Hadoop log error: java.io.IOException: Premature EOF from inputStream

2015-03-17 11:24:25,467 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: d135.hadoop:50010:DataXceiver error processing WRITE_BLOCK operation src: /192.168.1.118:64599 dst: /192.168.1.135:50010

java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.HashMap.createEntry(HashMap.java:897)

at java.util.HashMap.addEntry(HashMap.java:884)

at java.util.HashMap.put(HashMap.java:505)

at java.util.HashSet.add(HashSet.java:217)

2015-03-17 10:06:30,822 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: d135.hadoop:50010:DataXceiver error processing WRITE_BLOCK operation src: /192.168.1.135:36440 dst: /192.168.1.135:50010

java.io.IOException: Premature EOF from inputStream

at org.apache.hadoop.io.IOUtils.readFully(IOUtils.java:194)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doReadFully(PacketReceiver.java:213)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.doRead(PacketReceiver.java:134)

at org.apache.hadoop.hdfs.protocol.datatransfer.PacketReceiver.receiveNextPacket(PacketReceiver.java:109)

at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receivePacket(BlockReceiver.java:446)

at org.apache.hadoop.hdfs.server.datanode.BlockReceiver.receiveBlock(BlockReceiver.java:702)

at org.apache.hadoop.hdfs.server.datanode.DataXceiver.writeBlock(DataXceiver.java:739)

at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.opWriteBlock(Receiver.java:124)

at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.processOp(Receiver.java:71)

at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:232)

at java.lang.Thread.run(Thread.java:745)

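Cause

The lease on the file operation expired, which in practice means the file was deleted while a data stream was still operating on it. This usually happens when several MapReduce tasks work on the same file and one of them deletes it on completion. The error is related to the dfs.datanode.max.transfer.threads parameter reaching its limit. This parameter caps the number of requests a datanode handles concurrently; its default value is 4096 and its valid range is [1, 8192].

Fix:

Edit the Hadoop configuration file hdfs-site.xml on every datanode:

Add the dfs.datanode.max.transfer.threads property and set it to 8192.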

Property name: dfs.datanode.max.transfer.threads, value: 8192

(dfs.datanode.max.xcievers and dfs.datanode.max.transfer.threads refer to the same parameter; the former is the name used in Hadoop 1.0.)
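Since the two names refer to one setting, a tool that inspects configuration may want to accept either. The following is only an illustrative sketch over a plain Map. It is not how Hadoop itself resolves deprecated keys, the helper name effectiveLimit is made up, and the 4096 default is the one quoted in the text above.

```java
import java.util.HashMap;
import java.util.Map;

class TransferThreadsConfig {
    static final String CURRENT_KEY = "dfs.datanode.max.transfer.threads";
    static final String LEGACY_KEY = "dfs.datanode.max.xcievers";
    static final int DEFAULT_VALUE = 4096; // default quoted in the text above

    /** Prefers the current name, falls back to the legacy one, then to the default. */
    static int effectiveLimit(Map<String, String> props) {
        String raw = props.get(CURRENT_KEY);
        if (raw == null) {
            raw = props.get(LEGACY_KEY);
        }
        return raw == null ? DEFAULT_VALUE : Integer.parseInt(raw.trim());
    }

    public static void main(String[] args) {
        Map<String, String> props = new HashMap<>();
        props.put(LEGACY_KEY, "2048");
        System.out.println(effectiveLimit(props)); // legacy name only
        props.put(CURRENT_KEY, "8192");
        System.out.println(effectiveLimit(props)); // current name wins
    }
}
```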

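What happens if dfs.datanode.max.xcievers is set too low?

In short, this parameter is the number of threads on a datanode that handle file operations. If there are many files to process and the value is too low, some of them cannot be served and the following exception is reported: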

ERROR org.apache.hadoop.dfs.DataNode: DatanodeRegistration(10.10.10.53:50010,storageID=DS-1570581820-10.10.10.53-50010-1224117842339,infoPort=50075, ipcPort=50020):DataXceiver: java.io.IOException: xceiverCount 258 exceeds the limit of concurrent xcievers 256

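As a mental model, think of every file operation here as bound to a socket, so operating on a file means operating on that socket; going one step further, each one can be thought of as a thread. This parameter sets how many such threads are allowed.

For example: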

public DFSInputStream open(String src) throws IOException

public FSDataOutputStream create(Path f) throws IOException

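In DFS, each open() or create() call like these translates on the server side (the datanode) into a new thread (socket) created to handle it.

Here is how the threading mechanism works:

The datanode keeps these threads in a dedicated thread group, and a daemon thread, DataXceiverServer, watches the size of that group.

It checks whether the thread count has reached the limit and throws an exception once the limit is exceeded: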

// Created when the datanode starts: the thread group for xceiver threads

this.threadGroup = new ThreadGroup("dataXceiverServer");

this.dataXceiverServer = new Daemon(threadGroup,

new DataXceiverServer(ss, conf, this));

this.threadGroup.setDaemon(true); // auto destroy when empty

// The xceiver count is monitored and an error is raised past the limit

/** Number of concurrent xceivers per node. */

int getXceiverCount() {

return threadGroup == null ? 0 : threadGroup.activeCount();

}

if (curXceiverCount > dataXceiverServer.maxXceiverCount) {

throw new IOException("xceiverCount " + curXceiverCount

+ " exceeds the limit of concurrent xcievers "

+ dataXceiverServer.maxXceiverCount);

}
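To see the mechanism in isolation, here is a JDK-only sketch that mimics it: worker threads live in one ThreadGroup, activeCount() serves as the xceiver count, and a check throws once the count exceeds the limit. XceiverLimitDemo, startWorker and checkLimit are names I made up for illustration; this is a simplification, not the Hadoop implementation.

```java
import java.io.IOException;
import java.util.concurrent.CountDownLatch;

class XceiverLimitDemo {
    private final ThreadGroup threadGroup = new ThreadGroup("dataXceiverServer");
    private final int maxXceiverCount;

    XceiverLimitDemo(int maxXceiverCount) {
        this.maxXceiverCount = maxXceiverCount;
    }

    /** Estimate of the live worker threads in the group. */
    int getXceiverCount() {
        return threadGroup.activeCount();
    }

    /** Starts a worker in the group that blocks until the latch is released. */
    Thread startWorker(CountDownLatch release) {
        Thread t = new Thread(threadGroup, () -> {
            try {
                release.await(); // simulate a long-running transfer
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        t.setDaemon(true);
        t.start();
        return t;
    }

    /** Same comparison as the excerpt: fail once the count exceeds the limit. */
    void checkLimit() throws IOException {
        int cur = getXceiverCount();
        if (cur > maxXceiverCount) {
            throw new IOException("xceiverCount " + cur
                + " exceeds the limit of concurrent xcievers " + maxXceiverCount);
        }
    }

    public static void main(String[] args) {
        XceiverLimitDemo demo = new XceiverLimitDemo(2);
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < 3; i++) {
            demo.startWorker(release); // three workers against a limit of two
        }
        try {
            demo.checkLimit();
        } catch (IOException e) {
            System.out.println(e.getMessage());
        } finally {
            release.countDown(); // let the workers finish
        }
    }
}
```

Note that the comparison is strictly greater-than, so a count equal to the limit still passes. That matches the log lines quoted earlier, where 2049 trips a limit of 2048.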

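Why does this mechanism exist?

Because too many such threads would exhaust system memory. Each thread takes roughly 1 MB, so a datanode with 60 GB of memory could hold at most about 60,000 threads, and that is only the ideal case.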
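The arithmetic behind that figure is a single division. A tiny sketch, assuming binary units (1 MiB per thread, 60 GiB of memory) and ignoring everything else that competes for memory:

```java
class ThreadMemoryEstimate {
    static final long MIB = 1024L * 1024L;
    static final long GIB = 1024L * MIB;

    /** Upper bound on thread count if all memory went to per-thread overhead. */
    static long maxThreads(long memoryBytes, long bytesPerThread) {
        if (bytesPerThread <= 0) {
            throw new IllegalArgumentException("bytesPerThread must be positive");
        }
        return memoryBytes / bytesPerThread;
    }

    public static void main(String[] args) {
        // 60 GiB / 1 MiB = 61440, i.e. roughly the 60,000 quoted above.
        System.out.println(maxThreads(60 * GIB, MIB));
    }
}
```

A real datanode also needs memory for the JVM heap, the OS page cache and other processes, so the usable ceiling is well below this bound.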

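Some ways to estimate a value for dfs.datanode.max.xcievers:

The most common formula:

For servers used mainly for HBase:

(Reserve(20%) means allocating an extra 20% of headroom to allow for growth in the number of files and so on.)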