- The Relationship and Differences Between Machine Learning and Deep Learning

ℒℴѵℯ心·动ꦿ໊ོ꫞

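artificial intelligence, learning, deep learning, python

1. Overview of machine learning. Definition: machine learning (ML) is a data-driven approach that uses statistics and computational algorithms to train models, enabling computers to learn from data and make predictions or decisions automatically. By analyzing large numbers of data samples, machine learning identifies the patterns and regularities in them and uses these to make judgments about new data. At its core, the training process continuously optimizes the model and improves its prediction accuracy. Main types: 1. Supervised learning. In supervised learning, the training dataset contains inputs...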

- Machine Learning vs. Deep Learning

nfgo

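machine learning

Machine learning (ML) and deep learning (DL) are two subfields of artificial intelligence (AI). They have much in common but differ significantly in technical implementation and scope of application. The two are distinguished below from several angles: 1. Concept. Machine learning: techniques that let computers learn and improve automatically from data through algorithms. It relies on hand-designed features and mathematical models; common models include decision trees, support vector machines, and linear regression. Deep learning: a subfield of machine learning...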

- Federated Learning: Notes from Google I/O '19

努力搬砖的星期五

notes, federated learning, machine learning, tensorflow

Federated Learning: Machine Learning on Decentralized Data https://www.youtube.com/watch?v=89BGjQYA0uE Contents: Federated Learning: Machine Learning on Decentralized Data; 1. Decentralized data; Edge devices; Gboard: mobile keyboa...

- 【ShuQiHere】Exploring the Core of AI: The Mysteries of Machine Learning

ShuQiHere

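artificial intelligence, machine learning

【ShuQiHere】What is machine learning? Machine learning (ML) is one of the most important components of artificial intelligence (AI). It enables computers not only to process data but also to learn from it, and thereby make predictions and decisions. Speech recognition, autonomous driving, and recommender systems all rely on machine learning models. Unlike traditional programming, machine learning does not depend on fixed rules written by humans. Instead, it improves the model from data, which makes it more flexible...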

- Machine Learning vs. Representation Learning vs. Deep Learning

Efred.D

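artificial intelligence, machine learning, deep learning

Contents: Preface; 1. What is machine learning? 2. Representation learning; 3. Deep learning; Summary. Preface: this article explains the principles of machine learning, representation learning, and deep learning, and the differences between them. 1. What is machine learning? Machine learning learns certain regularities from a finite dataset and then applies them to make predictions on similar samples. Its history can be traced back to the artificial neuron network proposed by McCulloch in the 1940s. Academia currently divides machine learning roughly into two categories: traditional machine learning and machine learning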

- 【python】【An Overview of Ray】

资源存储库

python, programming languages

Overview: Ray is an open-source unified framework for scaling AI and Python applications like machine learning. It provides the compute layer for parallel processing so that you don't need to be a distributed systems expert. Ray minimi...

- 2021-03-31 Daily Check-in

来多喜

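Yesterday's progress: 1. 6k walk, ❌ Pamela workout (I'm so lazy). 2. Mind maps for statistical and machine learning: skim the Chinese version first, then read the English version carefully. There is too much; I don't think I can finish before the interview, so I've decided to focus on explaining the contents of my résumé clearly. 3. Work: kki + myhabeats + handover. kki can now build the dataflow, now that we have the ga and publisher data. Ran into difficulties with the myhabeats remarketing audience. Fe...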

- Optimized Tiny Machine Learning and Explainable AI for a Trustable and Energy-Efficient Fog-Enabled Healthcare Decision Support System

神一样的老师

paper reading, sharing, artificial intelligence

This paper, titled "Optimized Tiny Machine Learning and Explainable AI for Trustable and Energy-Efficient Fog-Enabled Healthcare Decision Support System", was published in the International Journal of Computational Intelligence Systems, Vol. 17, 2024, by R.

- 【Paper Reading】AugSteal: Advancing Model Steal With Data Augmentation in Active Learning Frameworks (2024)

Bosenya12

research study, model stealing, paper reading, model extraction, data augmentation, active learning

Abstract: With the proliferation of machine learning models in diverse applications, the issue of model security has increasingly become a focal point. Model steal attacks can cause significan...

- Introduction to Machine Learning: Basic Concepts

Louis0687

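Name: Gao Yifan; Student ID: 19020100056; School: School of Electronic Engineering. Reposted from: original link. 【Qianniu Introduction】Machine learning is an interdisciplinary field spanning statistics, system identification, approximation theory, neural networks, optimization theory, computer science, brain science, and more. It studies how computers can simulate or implement human learning behavior to acquire new knowledge or skills, and how they can reorganize existing knowledge structures to keep improving their own performance. It is the core of AI technology. 【Qianniu Keywords】Machine learning 【Qianniu Question】What is machine lea...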

- L1 and L2 Regularization

wangke

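Contours and paths. HOML (Hands-On Machine Learning) explains L1_norm and L2_norm as follows. The top-left plot shows L1_norm. The background shows the contours of the loss function (circles), and the foreground shows the contours of L1_penalty (diamonds); together they form the final objective function. During gradient descent, the path that follows the gradient of the loss function is drawn as white dots, and the path that follows the gradient of L1_penalty as yellow dots. The yellow path is clearly more likely to set a weight to exactly 0. Therefore, when weighing the two losses...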
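The following minimal Python sketch, which is not taken from HOML, shows why the L1 penalty drives weights to exactly 0 while L2 only shrinks them. It applies one proximal update per penalty to a few made-up weights: soft-thresholding for L1 and scaling for L2. The proximal formulation, the function names `l1_prox`/`l2_prox`, and the values of `weights` and `lam` are all illustrative assumptions.

```python
def l1_prox(w, lam):
    """Soft-thresholding: the proximal step for the penalty lam * |w|."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0  # any weight inside [-lam, lam] snaps to exactly zero


def l2_prox(w, lam):
    """Proximal step for the penalty (lam / 2) * w**2: shrinks toward 0, never reaches it."""
    return w / (1.0 + lam)


weights = [0.05, -0.2, 0.8, -1.5]  # hypothetical weights
lam = 0.3                           # hypothetical penalty strength
print("L1:", [round(l1_prox(w, lam), 3) for w in weights])
print("L2:", [round(l2_prox(w, lam), 3) for w in weights])
```

Under L1, every weight whose magnitude is at most `lam` lands exactly on 0. This is the sparsity effect behind the yellow path. Under L2, every weight is divided by the same factor and stays nonzero.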

- Machine Learning Overview and Applications: Deep Learning, AI, and Classic Learning Methods

刷刷刷粉刷匠

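artificial intelligence, machine learning, deep learning

Introduction: machine learning is one of the most central branches of artificial intelligence (AI). Its main goal is to learn from data and build models that help computer systems complete specific tasks automatically. With the rise of deep learning, machine learning has been applied ever more widely across industries. In this article, we introduce the basic concepts of machine learning in detail, with practical code examples to deepen understanding. These include unsupervised learning, supervised learning, incremental learning, and the common regression and classification problems. 1. Machine lea...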

- Datawhale X Hung-yi Lee's "Apple Book" AI Summer Camp | Machine Learning Basics: A Case Study

Monyan

artificial intelligence, machine learning, learning, Hung-yi Lee, deep learning

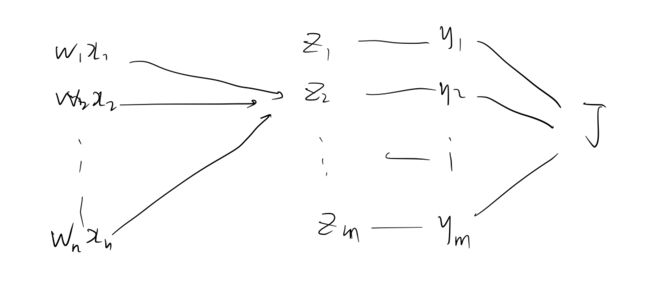

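Machine learning (ML) gives a machine the ability to learn, that is, the ability to find a function. Different kinds of functions correspond to different categories of machine learning. Regression: the output of the function is a numerical value, or scalar. For example, to predict PM2.5 over a given time period, the machine must find a function f whose input is indicators related to PM2.5 and whose output is the PM2.5 value at noon tomorrow. Classification: the machine answers a multiple-choice question. First, prepare...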

- Machine Learning in R: Two KNN Examples

waterHBO

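R language, machine learning, programming languages

The code is written following this book: Machine Learning with R, 2nd Edition.pdf. I've uploaded the related resources, including the code, the data, and the book itself. Download link (free, no points required): https://download.csdn.net/download/waterHBO/89675687 1. The first example: the code and procedure all come from the book. # I based this on the KNN material in Chapter 3 of the book. # Chapter 3, KNN, K-Nearest Nei...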
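The book's examples are in R. To keep all added examples in one language, here is a minimal Python sketch of the KNN idea itself: Euclidean distance plus a majority vote among the k nearest training points. The helper name `knn_predict` and the toy points and labels are hypothetical; they are not the book's data.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort training pairs by Euclidean distance to the query and keep the k closest
    neighbors = sorted(
        zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query)
    )[:k]
    # Count labels among the neighbors; ties go to the label seen first (i.e. nearest)
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D training data: two well-separated clusters
train_X = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_X, train_y, (2, 2), k=3))
print(knn_predict(train_X, train_y, (7, 8), k=3))
```

`math.dist` requires Python 3.8 or later. In practice, features are usually normalized first so that no single feature dominates the distance.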

- 【Paper Reading】Model Stealing Attacks Against Inductive Graph Neural Networks (2021)

Bosenya12

research study, model stealing, paper reading, graph neural networks

Abstract: Many real-world data come in the form of graphs. Graph neural networks (GNNs), a new family of machine learning (ML) models, have been proposed to fully leverage graph data to build powerful applicat...

- Machine Learning's Transformative Journey in the Tourism Industry

jun778895

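machine learning, artificial intelligence

Machine learning's transformative journey in the tourism industry. With the rapid development of technology, and especially the widespread adoption of artificial intelligence (AI), every industry has undergone unprecedented change. Tourism, one of the pillars of the global economy, has benefited greatly. Machine learning (ML), one of the core technologies of AI, is gradually reshaping every aspect of tourism, from demand analysis, itinerary planning, and service experience to marketing strategy, and shows great potential and value throughout. This article explores that journey in depth and reveals how it dri...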

- Python Machine Learning Notes: CART Algorithm in Practice

战争热诚

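For the complete code and data, see my GitHub: https://github.com/LeBron-Jian/MachineLearningNote Preface: in the article "Python machine learning notes: an in-depth look at the principles of decision tree algorithms", we covered the ID3 and C4.5 decision tree algorithms and roughly...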
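CART builds binary trees, and for classification it typically scores candidate splits by Gini impurity. The functions below are a minimal sketch, not the code from the linked repository. They compute Gini impurity and pick the best threshold on a single numeric feature. The function names and the sample data are made up for illustration.

```python
def gini(labels):
    """Gini impurity: 1 - sum over classes of p_c ** 2."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(xs, ys):
    """Try midpoints between sorted distinct values; return (threshold, weighted_gini)."""
    best = (None, float("inf"))
    values = sorted(set(xs))
    n = len(ys)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        # Weight each child's impurity by the fraction of samples it receives
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best[1]:
            best = (t, score)
    return best


print(best_split([1, 2, 3, 10, 11, 12], [0, 0, 0, 1, 1, 1]))
```

A full CART implementation applies this search to every feature, splits on the best one, and recurses until a stopping rule (depth, minimum samples, or zero impurity) is met.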

- The Relationship Between Machine Learning, Deep Learning, and Neural Networks

你好,工程师

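AI, machine learning

Machine learning, deep learning, and neural networks are closely related; their relationship can be viewed as progressively nested layers. A brief overview follows. Machine learning: a branch of artificial intelligence concerned with how computer systems can learn from experience through data and improve their performance. Machine learning algorithms can be divided into supervised learning, unsupervised learning, semi-supervised learning, reinforcement learning, and other...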

- Getting to Know Wavelets: DWT, CWT, Scattering

闪闪发亮的小星星

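digital signal processing and analysis, computer vision, artificial intelligence, signal processing

Types of wavelet transforms; Continuous wavelet transform (CWT); DWT; An example application of DWT; An example of CWT; 5. Machine Learning and Deep Learning with Wavelet Scattering; Wavelet scattering networks. Hello everyone. In this introductory lesson I'll cover some basic wavelet concepts. I'll mainly use one-dimensional examples, but the same concepts also apply to images. First, let's review what a wavelet is. Real...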
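As a concrete taste of the DWT, here is a minimal Python sketch of one level of the Haar transform, the simplest discrete wavelet. Pairwise scaled sums give the approximation coefficients, and pairwise scaled differences give the detail coefficients. The choice of the Haar wavelet and the helper names are assumptions for illustration; the lesson may use other wavelets.

```python
import math


def haar_dwt(signal):
    """One level of the orthonormal Haar DWT; len(signal) must be even."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = 1 / math.sqrt(2)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert one Haar level: each (a, d) pair restores two consecutive samples."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) * s, (a - d) * s])
    return out


approx, detail = haar_dwt([4, 6, 10, 12])
print(approx, detail)
print(haar_idwt(approx, detail))
```

Because the transform is orthonormal, the energy (sum of squares) of the coefficients equals that of the signal. Applying `haar_dwt` again to `approx` gives the next, coarser level of a multilevel DWT.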

- So What Exactly Is Machine Learning?

guguguyuan

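artificial intelligence

The term "machine learning" is confusing. To begin with, the Chinese term 机器学习 is a literal translation of the English name Machine Learning (ML for short), and in computing, "machine" usually means a computer. The name is an anthropomorphism: it says this technology makes machines "learn". But a computer is lifeless, so how could it "learn" the way a human does? Traditionally, if we want a computer to do something, we give it a sequence of instructions and it executes them step by step. Cause and effect are perfectly clear. That approach doesn't work in machine learning, however. Machine learning doesn't accept your input...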

- Linear Regression (1)

zidea

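Machine Learning in Marketing. Thanks to Hung-yi Lee's "Regression: Case Study". Part of this content consists of my notes from Professor Hung-yi Lee's lectures, combined with my own understanding of machine learning. I personally recommend Professor Lee's machine learning course series, and strongly recommend it to beginners, because the course is designed to be easier to understand than others. In machine learning, algorithms are usually divided into regression and classification. Today we discuss what linear regression is and how to design a linear regression model. What is regression? Put simply, it uses data to predict a value. An example of a regression problem is finding...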
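One standard way to fit the simplest linear regression model, y = w * x + b, is closed-form least squares. The sketch below is a swapped-in illustration and not necessarily the method used in the lecture. The helper `fit_line` and the sample data are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = w * x + b for a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the fitted line passes through the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / sxx
    b = mean_y - w * mean_x
    return w, b


w, b = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(w, b)
```

The closed form works well for small problems. Gradient descent minimizes the same squared-error loss iteratively and scales to many features or very large datasets.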

- 【Understanding the Definition and History of Machine Learning】

AK@

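artificial intelligence, machine learning

I once dreamed of roaming the world with a sword; I'm a code monkey 【AK】. Contents: Summary; Knowledge map. Summary: understanding the definition and history of machine learning. Knowledge map: machine learning (ML) is an interdisciplinary field that uses computers to simulate or implement human learning behavior. It continually acquires new knowledge and skills and reorganizes existing knowledge structures to improve its own performance. Simply put, machine learning lets computers learn patterns from data and use those patterns to make predictions about future data. The history of machine learning can be traced back to the 1950s...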

- What Is 【Machine Learning】?

dami_king

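machine learning

Machine learning (ML) is a multidisciplinary field and a branch of artificial intelligence (AI). It studies and builds algorithms and statistical models that let computer systems analyze and learn the regularities and patterns in datasets without explicit programming instructions. This gives them the ability to acquire new knowledge, discover underlying relationships, and make predictions or decisions. Simply put, machine learning enables computer programs to learn and improve from experience. Here are some core concepts of machine learning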

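- 【IEEE Publication, Stable EI Indexing】2024 International Conference on Machine Learning and Neural Networks (MLNN 2024)

AEIC Academic Exchange Center - Teacher Li

machine learning, neural networks, artificial intelligence

2024 International Conference on Machine Learning and Neural Networks (MLNN 2024), April 19-21, 2024, Zhuhai, China. Key information: Conference website: www.icmlnn.org (submissions, registration, and conference details). Dates: April 19-21, 2024. Venue: Zhuhai, China. Acceptance/rejection notification: about one week after submission. Submission deadline: 2024...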

- ChatGPT Magic 1: The Principles Behind It

王丰博

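GPT, chatgpt

1. The three stages of AI: 1) the 1950s-60s, when computers had just appeared; 2) machine learning; 3) deep learning, with neural networks, the most representative example being ChatGPT. GPT stands for Generative Pre-trained Transformer. 2. Deep neural networks. Ilya Sutskever worked on image recognition, using GPUs for parallel computation and training. AlexNet: an already-labeled dataset (Fei-Fei Li's ImageNet); GPU compute. 3. GPT 3.1 T...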

- Paper Reading: Performance Analysis of Machine Learning Centered Workload Prediction Models for Cloud

向来痴_

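paper reading

Paper title: Performance Analysis of Machine Learning Centered Workload Prediction Models for Cloud. Abstract: because of the high variability and dimensionality of heterogeneous service types and dynamic workloads, precise estimation of resource usage is a complex and challenging problem. Over the past few years, forecasting of resource usage and traffic has received wide attention from the research community. Many machine-learning-based workload prediction models have been developed by exploiting their computational and learning capabilities. This paper proposes...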

- Some Useful Links for a Deep Learning Environment

星海之眸

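Useful Links. About the system: key links for initial system setup; Ubuntu 16.04 theming; installing an input method; installing WeChat; installing MATLAB. If MATLAB fails at startup on Ubuntu with java.lang.runtime.Exception **********************, run this command: sudo chmod -R a+rw ~/.matlab. About Machine Learning: tensorflo...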

- Week 10

kidling_G

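Week 10. 17. Large Scale Machine Learning. 17.1 Learning with large datasets. Reference video: 17-1-LearningWithLargeDatasets (6 min).mkv. If we have a low-bias (high-variance) model, increasing the size of the dataset can help you get better results. How should we handle a training set with 1 million records? Take a linear regression model as an example. Every gradient descent iteration requires computing the sum of squared errors over the whole training set; if our lea...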
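The usual answer to the million-record problem is stochastic gradient descent: update the parameters after each example instead of after a full pass over the data. Below is a minimal Python sketch for single-feature linear regression, h(x) = theta0 + theta1 * x. The function name, the learning rate, the epoch count, and the noise-free sample data are assumptions chosen so that the sketch converges.

```python
import random


def sgd_linear_regression(data, lr=0.1, epochs=200, seed=0):
    """Stochastic gradient descent for h(x) = theta0 + theta1 * x with squared error.

    Batch gradient descent sums over all m examples before each update;
    here every single example triggers an update, so one pass makes m
    cheap updates instead of one expensive one.
    """
    rng = random.Random(seed)
    data = list(data)
    theta0 = theta1 = 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # visit the examples in a random order each epoch
        for x, y in data:
            error = theta0 + theta1 * x - y
            theta0 -= lr * error
            theta1 -= lr * error * x
    return theta0, theta1


# Hypothetical data on the line y = 1 + 2x
data = [(i / 100, 1 + 2 * i / 100) for i in range(100)]
print(sgd_linear_regression(data))
```

On noisy real data, SGD hovers near the minimum instead of settling exactly on it. A decaying learning rate or mini-batches are common remedies.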

- Getting Started with Machine Learning: Basic Concepts and Linear Regression

StarCoder_Yue

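algorithms, machine learning, study notes, linear regression, regularization, artificial intelligence, mathematics

Task list: what is machine learning; learn the central limit theorem; learn the normal distribution; learn maximum likelihood estimation and derive the regression loss function; learn the relationship between loss functions and convex functions; understand global and local optima; learn derivatives and Taylor expansion, and derive the gradient descent formula; write the code for gradient descent; learn the L2-norm, L1-norm, and L0-norm, and derive the regularization formula; explain why the L1-norm is used instead of the L0-norm; learn why only w/Θ is constrained and not b. Question 1: Wh...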
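The task list includes "write the code for gradient descent" and "why only w, not b". Here is a minimal batch gradient descent sketch covering both, for y ≈ w * x + b with an optional L2 penalty. Following common practice, the penalty covers w but not b. The function name, the loss scaling, the learning rate, and the step count are illustrative assumptions.

```python
def gradient_descent(xs, ys, lr=0.1, steps=2000, lam=0.0):
    """Batch gradient descent for y ≈ w * x + b.

    Loss: (1 / (2m)) * sum((w*x + b - y)**2) + (lam / 2) * w**2
    The L2 penalty covers w only; b is left unregularized.
    """
    m = len(xs)
    w = b = 0.0
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the loss above
        grad_w = sum(e * x for e, x in zip(errors, xs)) / m + lam * w
        grad_b = sum(errors) / m
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs, ys = [0, 1, 2, 3], [1, 3, 5, 7]  # hypothetical data on y = 2x + 1
print(gradient_descent(xs, ys))           # no penalty
print(gradient_descent(xs, ys, lam=1.0))  # w shrinks; b moves freely to fit the offset
```

Leaving b out of the penalty means regularization limits how steeply the model responds to x without forcing the predictions toward 0. This is the usual argument for constraining only w.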

- Kaggle Intro Model Validation and Underfitting and Overfitting

卢延吉

New Developer, data, ML & ME & GPT, machine learning

Model Validation: Model validation is the cornerstone of ensuring a robust and reliable machine learning model. It's the rigorous assessment of how well your model performs on unseen data, mimicking real-world scenarios. Done right, it...
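Here is a minimal, dependency-free Python sketch of hold-out validation; scikit-learn offers `train_test_split` and `mean_absolute_error` for the same job. It evaluates a model that memorizes the training set (a 1-nearest-neighbor lookup) on both training and validation data. The data, split fraction, and helper names are hypothetical.

```python
import random


def train_val_split(rows, val_fraction=0.25, seed=0):
    """Shuffle reproducibly, then hold out the last val_fraction of the rows."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - val_fraction))
    return rows[:cut], rows[cut:]


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def nearest_neighbor_model(train_rows):
    """Predict the target of the closest training x, i.e. memorize the training set."""
    def predict(x):
        return min(train_rows, key=lambda row: abs(row[0] - x))[1]
    return predict


rng = random.Random(42)
data = [(x, 3 * x + rng.gauss(0, 5)) for x in range(40)]  # hypothetical noisy data
train, val = train_val_split(data)
model = nearest_neighbor_model(train)

train_mae = mean_absolute_error([y for _, y in train], [model(x) for x, _ in train])
val_mae = mean_absolute_error([y for _, y in val], [model(x) for x, _ in val])
print(f"train MAE = {train_mae:.2f}, validation MAE = {val_mae:.2f}")
```

A training error of 0 says nothing about unseen data. Only the validation score reveals how much of the fit was memorized noise, which is the signature of overfitting.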

- Three Ways to Make Cross-Origin Requests with jQuery: No 'Access-Control-Allow-Origin' header is present on the reque...

qiaolevip

a little progress every day, learning never ends, cross-origin, 众观千象

XMLHttpRequest cannot load http://v.xxx.com. No 'Access-Control-Allow-Origin' header is present on the requested resource. Origin 'http://localhost:63342' is therefore not allowed access. test.html:1

- MySQL Partitioned Query Optimization

annan211

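java, partitioning, optimization, mysql

Partitioned query optimization

Partitioning can give queries certain advantages, but it can also introduce some bugs.

The biggest advantage of partitioning is that the optimizer can use the partitioning function to filter out some partitions. With this partition pruning, a query scans less data.

So when querying a partitioned table, it is important to include the partitioning column in the WHERE condition so that the optimizer can skip partitions that don't need to be accessed.

You can verify this by checking which partitions the EXPLAIN execution plan lists.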

- Using Cursors in MySQL Stored Procedures

chicony

Mysql, stored procedures

DELIMITER $$

DROP PROCEDURE IF EXISTS getUserInfo $$

CREATE PROCEDURE getUserInfo(in date_day datetime)
--
-- Example
-- Stored procedure name: getUserInfo
-- Parameter: date_day, date format: 2008-03-08
--
BEGIN
decla

- Differences Between MySQL and SQLite

Array_06

sqlite

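Reposted from:

http://www.cnblogs.com/ygm900/p/3460663.html

Differences between MySQL and SQLite

SQLite is a standalone database: minimal features, small footprint, aiming for maximum disk efficiency.

MySQL is a full-fledged server database: comprehensive, general-purpose features, aiming for maximum concurrency.

MySQL, Sybase, Oracle, and the like suit servers with large data volumes and many features, and they must be installed; websites with relatively heavy traffic are one example. SQ...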

- Using pinyin4j

oloz

pinyin4j

First, you need the pinyin4j jar; it has been uploaded as an attachment.

Method 1: convert Chinese characters to pinyin. For example, 编程 becomes biancheng.

/**

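* Converts Chinese characters to full pinyin

* @param src the Chinese characters to convert

* @param isUPPERCASE whether to convert to uppercase pinyin; true: convert to uppercase; fal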

- Sending Private Messages on Weibo

随意而生

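Weibo

In a previous article I explained how to obtain the cookies needed at login. Now we only need the cookies from the final login step. Then capture packets and analyze Weibo's private-message sending page:

http://weibo.com/message/history?uid=****&name=****

There you can find the POST request it submits and the data it carries.

Then have a program replay the data in that POST request, sending it with the cookies to the private-message endpoint, and you can send private messages.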

- jsp

香水浓

jsp

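JSP initialization

After the container loads a JSP file, it calls the jspInit() method before servicing any request. If you need to perform custom JSP initialization, just override jspInit().

JSP execution

This phase covers all request-related interactions in the JSP life cycle, up until the JSP is destroyed.

After a JSP page finishes initialization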

- Installing the SVN Subversion Server on Windows

AdyZhang

SVN

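Installing the SVN Subversion server on Windows. 2009-09-16, Gao Hongwei, No. 291 Tongda Street, Daoli District, Harbin

For the best reading experience, visit the original: http://blog.donews.com/dukejoe/archive/2009/09/16/1560917.aspx

Subversion is now stable enough and has entered its golden age. We see a large number of projects us...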

- In Android development, how do I use an AlertDialog to delete data from a ListView?

aijuans

android

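I'm using a ListView to display many configuration entries. When one of them is clicked, I want to pop up an AlertDialog and, after the user confirms, delete that entry from the ArrayAdapter, the ArrayList, and the ListView. I know that in the onItemLongClick method below, the parameter arg2 is the selected position, but I don't know how to proceed.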

- Installing jdk-6u26-linux-x64.bin

baalwolf

linux

1. Upload the installer (jdk-6u26-linux-x64.bin)

2. Change the permissions

[root@localhost ~]# ls -l /usr/local/jdk-6u26-linux-x64.bin

3. Run the installer

[root@localhost ~]# cd /usr/local

[root@localhost local]# ./jdk-6u26-linux-x64.bin

- A Collection of Classic MongoDB Interview Questions

BigBird2012

mongodb

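1. What is a NoSQL database? How does NoSQL differ from an RDBMS? In which situations should you use, or not use, a NoSQL database?

NoSQL databases are non-relational databases; NoSQL = Not Only SQL.

Relational databases use structured data, whereas NoSQL databases typically store data as key-value pairs or similar schemaless structures.

Consider a NoSQL database first when handling unstructured or semi-structured big data, when you need to scale horizontally, or when data fields are added dynamically.

When considering database maturity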

- Six Features of the Promise Pattern in JavaScript Asynchronous Programming

bijian1013

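JavaScript, Promise

Promise is a very valuable constructor. It helps you avoid nested anonymous functions and compose asynchronous code in a more readable way instead. Here we introduce its six simplest features.

Before we formally begin, let's look at what a JavaScript Promise looks like: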

var p = new Promise(function(r

- [Zookeeper Study Notes, Part 8] Zookeeper Source Code Analysis: Zookeeper.ZKWatchManager

bit1129

zookeeper

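The ClientWatchManager interface

// The interface's only method, materialize, determines which Watchers need to be notified

// Determining the Watchers depends on three factors: 1. the event state 2. the event type 3. the znode path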

public interface ClientWatchManager {

/**

* Return a set of watchers that should

- 【Scala 15】Scala Core 9: Implicit Conversions, Part 2

bit1129

scala

Why implicit conversions are necessary:

In Java Swing, handling a button click event, written in Scala, looks like this:

val button = new JButton

button.addActionListener(

new ActionListener {

def actionPerformed(event: ActionEvent) {

- A Small Demo of Parsing and Building JSON Data on Android

ronin47

Reposted from: http://www.open-open.com/lib/view/open1420529336406.html

package com.example.jsondemo;

import org.json.JSONArray;

import org.json.JSONException;

import org.json.JSONObject;

impor

- [Design] On Creative Typeface Design Methods

brotherlamp

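UI, ui self-study, ui videos, ui tutorials, ui resources

Since ancient times, text has been indispensable in our lives; we cannot imagine what a world without writing would be like. In graphic design, UI designers put the most thought and effort into text, because text directly expresses the designer's ideas. Creative design of text directly reflects the theme of a graphic work.

For example, suppose you are designing an advertising poster for Dell laptops. If the word "Dell" does not appear on the poster, even pictures of every Dell laptop won't tell people what brand the computers are. As long as you write "Dell lap...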

- Monotonic Queue: Slide a Window of Length k over an Integer Sequence and Find the Maximum in the Window

bylijinnan

java, algorithms, interview questions

import java.util.LinkedList;

/*

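Monotonic queue, sliding window

A monotonic queue is a queue whose elements are kept in order, either increasing or decreasing.

Problem: given an integer sequence a(i) of length N, i = 0, 1, ..., N-1, and a window length k.

Find: f(i) = max{a(i-k+1), a(i-k+2), ..., a(i)}, i = 0, 1, ..., N-1

Another way to state the problem is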
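The monotonic-queue idea can be sketched in Python (the original is Java; Python keeps all added examples in one language). Following the definition of f(i) above, the window is clipped at the start of the sequence, so the first k-1 outputs cover fewer than k elements. That treatment of negative indices, and the name `sliding_window_max`, are assumptions.

```python
from collections import deque


def sliding_window_max(a, k):
    """f(i) = max(a[max(0, i-k+1)], ..., a[i]) for every i, in O(N) total time."""
    if k <= 0:
        raise ValueError("k must be positive")
    dq = deque()  # indices whose values strictly decrease from front to back
    result = []
    for i, x in enumerate(a):
        # Values <= x at the back can never be a window maximum again
        while dq and a[dq[-1]] <= x:
            dq.pop()
        dq.append(i)
        # The front index leaves once it falls outside the window [i-k+1, i]
        if dq[0] <= i - k:
            dq.popleft()
        result.append(a[dq[0]])
    return result


print(sliding_window_max([1, 3, -1, -3, 5, 3, 6, 7], 3))
```

Each index is pushed and popped at most once, so the total work is O(N) regardless of k. Recomputing the maximum of each window from scratch would cost O(N·k).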

- Handling Multiple Submit Buttons in One Form with Struts2

chiangfai

struts2

In a web application, a JSP form tag may have several submit buttons for different tasks, as in the following code:

<s:form action="submit" method="post" namespace="/my">

<s:textfield name="msg" label="叙述:">

- Finding Last Month in Shell: Pitfalls and an Unorthodox Workaround

chenchao051

shell

date -d "-1 month" +%F

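If you run the code above on 2012/10/31, you will not get September as you'd expect. September has only 30 days, so "-1 month" produces the invalid date September 31, which GNU date rolls forward to October 1. This is unreliable.

Unorthodox workaround: assuming today's date is after the 15th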
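A minimal Python sketch of the trap and two safe alternatives follows. A naive rule keeps the day number and lets an invalid date roll forward (GNU date documents this kind of overflow for relative months), so it can leave you outside the previous month. Safer options are to step back one day from the 1st of the current month, or to anchor on the 15th, which exists in every month. All three helpers are hypothetical; the day-15 variant is a related idea and not necessarily the author's exact workaround.

```python
from datetime import date, timedelta


def naive_minus_one_month(d):
    """Keep the day number in the previous month; an invalid day overflows forward."""
    year, month = (d.year, d.month - 1) if d.month > 1 else (d.year - 1, 12)
    # Start at the 1st of the previous month and add (day - 1) days, so e.g.
    # "September 31" lands on October 1 instead of raising an error
    return date(year, month, 1) + timedelta(days=d.day - 1)


def previous_month(d):
    """The day before the 1st of this month is always in the previous month."""
    return (d.replace(day=1) - timedelta(days=1)).strftime("%Y-%m")


def previous_month_via_15th(d):
    """Move to the 15th first; every month has a 15th, so nothing can overflow."""
    return naive_minus_one_month(d.replace(day=15)).strftime("%Y-%m")


d = date(2012, 10, 31)
print(naive_minus_one_month(d), previous_month(d), previous_month_via_15th(d))
```

The same idea carries back to shell: compute the date from the 1st (or the 15th) of the current month rather than from today.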

- Garbled Chinese Characters When Exporting Data from MySQL

daizj

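mysql, garbled Chinese, data import/export

How to fix garbled characters when importing and exporting MySQL data:

1. Enter the mysql client and check the database character sets with the following command: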

mysql> show variables like 'character_set_%';

+--------------------------+----------------------------------------+

| Variable_name

- Deploying Smarty on SAE Fails with: Uncaught exception 'SmartyException' with message 'unable to write

dcj3sjt126com

PHP, smarty, sae

For this error on SAE: Uncaught exception 'SmartyException' with message 'unable to write file...'

The official detailed FAQ: http://sae.sina.com.cn/?m=faqs&catId=11#show_213

The solution is:

$path

- Quotes from The Godfather Series

dcj3sjt126com

Your love is also your weak point.


If anything in this life is certain, if history has taught us anything, it is

that you can kill anyone.

A man who doesn't spend time with his family can never be a real man.

- Installing and Using MongoDB

dyy_gusi

mongo

1. Installing and starting MongoDB (basically the same on Windows and Linux)

1. Download the database:

linux:mongodb-linux-x86_64-ubuntu1404-3.0.3.tgz

2. Extract the archive and put it in a suitable location

tar -vxf mongodb-linux-x86_64-ubun

- Excluding Directories in Git

geeksun

git

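In Git version control, some files may not need to be tracked, so we need to ignore them when committing code. Below is how to configure ignore rules for Git.

There are three ways to ignore these files. All three work, but each suits a different situation.

1. Excluding files for a single project

With this approach, everyone who works on the project gets the ignore rules when they clone the code, without writing their own copy. This ensures that all contributors apply the same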

- How to Create a Startup Script on Ubuntu

hongtoushizi

ubuntu

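Reposted from: http://rongjih.blog.163.com/blog/static/33574461201111504843245/

The steps to create a startup script on Ubuntu are as follows:

1) Copy your startup script into the /etc/init.d directory. The steps below assume your script is named test.

2) Set the script file's permissions: $ sudo chmod 755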

- Chapter 8: Traffic Mirroring / A/B Testing / Coroutines

jinnianshilongnian

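nginx, lua, coroutine

Traffic mirroring

In real-world development, projects often need upgrading, and an upgrade is not done simply because it has been deployed. You need to verify that the upgrade is compatible with the old production version, so you may need to run both versions in parallel for a while to compare and validate the data, and go live only once everything checks out. This requires traffic mirroring, that is, copying traffic to other servers. One way is to divert traffic with a tool such as tcpcopy. We can also use ngx.location.capture_multi from nginx's HttpLuaModule to issue concurrent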

- Product Table Design for an E-commerce System

lkl

DROP TABLE IF EXISTS `category`; -- category table

/*!40101 SET @saved_cs_client = @@character_set_client */;

/*!40101 SET character_set_client = utf8 */;

CREATE TABLE `category` (

`id` int(11) NOT NUL

- Changing phpMyAdmin's Size Limit for Importing SQL Files

pda158

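sql, mysql

When I used phpMyAdmin to import a MySQL database, my 10 MB database could not be imported; it reported that the maximum import size is 2 MB.

phpMyAdmin database import error: You probably tried to upload too large file. Please refer to documentation for ways to workaround this limit.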

- A Tomcat Performance Tuning Plan

Sobfist

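apache, jvm, tomcat, application server

1. Operating system tuning

Operating system optimization means increasing the available memory as much as possible, raising the CPU clock speed, ensuring good file system read/write throughput, and so on. Stress tests show that with many concurrent connections, the more processing power the CPU has, the faster the system runs.

【Applicable scenarios】Any project.

2. Java virtual machine tuning

Choose Sun's JVM. As long as it meets the project's needs, use as recent a JVM version as possible; newer versions generally improve speed and efficiency over older ones.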

J

- SQL Server Study Notes

vipbooks

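data structures, xml

1. create database school: creates the database school

2. drop database school: deletes the database school

3. use school: connects to the school database, making it the current database

4. create table class(classID int primary key identity not null)

creates a table named class, which has a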