[Machine Learning] Linear Regression and Code Implementation

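Maximum likelihood estimation (MLE) combined with a Gaussian noise assumption (the errors follow a normal distribution with mean 0 and variance $\sigma^2$) yields the sum of squared errors as the objective function. The detailed derivation is shown in the figures below. Taking the logarithm of the likelihood is only a computational convenience: it turns the product into a sum without changing where the maximum lies.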

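Linear regression may be nonlinear in the samples; it only needs to be linear in the parameters $\Theta$. For example, starting from $Y = \Theta_{0} + \Theta_{1}X_{1} + \Theta_{2}X_{2}$, polynomial features (poly) can replace $X_1$ with $X_1^2$, or add $X_2^2$ or the cross term $X_1 X_2$. This is another way of adding features, which effectively enriches the data. For the same data, the regression can then fit a curve rather than a straight line, and the shape of the curve depends on the polynomial degree.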
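
The point about being linear in the parameters can be illustrated with a minimal sketch. The data below is synthetic and its coefficients (1, 2, 3, 4) are assumptions chosen for illustration; the target contains a cross term $X_1 X_2$, and an ordinary linear model recovers it once the inputs are expanded with `PolynomialFeatures`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Synthetic, noise-free data (assumed for illustration):
# y = 1 + 2*x1 + 3*x2 + 4*x1*x2
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 2))
y = 1 + 2 * X[:, 0] + 3 * X[:, 1] + 4 * X[:, 0] * X[:, 1]

# Expand [x1, x2] into [x1, x2, x1^2, x1*x2, x2^2]
poly = PolynomialFeatures(degree=2, include_bias=False)
X_poly = poly.fit_transform(X)

# The model is still linear in the parameters; only the inputs are nonlinear in x
reg = LinearRegression().fit(X_poly, y)
coef = reg.coef_
intercept = reg.intercept_
print('coefficients:', np.round(coef, 3))
print('intercept   :', round(float(intercept), 3))
```

The fitted coefficients line up with the expanded columns in the order x1, x2, x1^2, x1*x2, x2^2, so the squared terms come out near zero here because the synthetic target does not use them.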

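A model with a fixed, finite number of parameters is called parametric; a model whose number of parameters is not fixed in advance and can grow with the number of samples is called nonparametric.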

Formula derivation:
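
As a written-out sketch of the MLE argument, assume $m$ independent samples $(x^{(i)}, y^{(i)})$ and Gaussian noise with variance $\sigma^2$. The symbols $m$, $\varepsilon^{(i)}$, $L$, $\ell$ and $J$ are notation introduced here for illustration.

```latex
\begin{aligned}
y^{(i)} &= \Theta^{T} x^{(i)} + \varepsilon^{(i)}, \qquad \varepsilon^{(i)} \sim \mathcal{N}(0, \sigma^{2}) \\
p\left(y^{(i)} \mid x^{(i)}; \Theta\right) &= \frac{1}{\sqrt{2\pi}\,\sigma}
    \exp\left(-\frac{\left(y^{(i)} - \Theta^{T} x^{(i)}\right)^{2}}{2\sigma^{2}}\right) \\
L(\Theta) &= \prod_{i=1}^{m} p\left(y^{(i)} \mid x^{(i)}; \Theta\right) \\
\ell(\Theta) = \log L(\Theta) &= -m \log\left(\sqrt{2\pi}\,\sigma\right)
    - \frac{1}{2\sigma^{2}} \sum_{i=1}^{m} \left(y^{(i)} - \Theta^{T} x^{(i)}\right)^{2} \\
\hat{\Theta} = \arg\max_{\Theta} \ell(\Theta) &= \arg\min_{\Theta} J(\Theta), \qquad
    J(\Theta) = \frac{1}{2} \sum_{i=1}^{m} \left(y^{(i)} - \Theta^{T} x^{(i)}\right)^{2}
\end{aligned}
```

The first term of $\ell(\Theta)$ does not depend on $\Theta$, and $\sigma^2$ only scales the second term, so maximizing the log-likelihood is the same as minimizing the sum of squared errors $J(\Theta)$.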

Linear regression for advertising prediction:

    import csv  # only needed by the commented-out csv reader below

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import LinearRegression

    if __name__ == '__main__':
        # Several ways to read the data
        path = './Advertising.csv'

        # # 1. Read with numpy
        # data = np.loadtxt(path, delimiter=',', skiprows=1)  # skip row 0, the header
        # x = data[:, 1:-1]
        # y = data[:, -1]
        # print(data)
        # print(x)
        # print(y)

        # # 2. Python's built-in csv module
        # with open(path, newline='') as f:
        #     print(f)
        #     for line in csv.reader(f):
        #         print(line)

        # # 3. Parse the file by hand
        # x = []
        # y = []
        # with open(path) as f:
        #     for i, d in enumerate(f):
        #         if i == 0:  # skip the header
        #             continue
        #         d = d.strip()
        #         if not d:
        #             continue
        #         d = list(map(float, d.split(',')))
        #         x.append(d[1:-1])
        #         y.append(d[-1])
        # print(x)
        # print(y)

        # 4. Read with pandas
        data = pd.read_csv(path)
        print(data.head(5))
        # x, y = data.values[:, 1:-1], data.values[:, -1]
        x = data[['TV', 'Radio', 'Newspaper']]
        y = data['Sales']
        print(x)
        print(y)

        # Visualize each feature against sales
        plt.figure(figsize=(9, 12), facecolor='w')
        plt.subplot(311)
        plt.plot(data['TV'], y, 'ro')
        plt.title('TV')
        plt.grid(True)
        plt.subplot(312)
        plt.plot(data['Radio'], y, 'g^')
        plt.title('Radio')
        plt.grid(True)
        plt.subplot(313)
        plt.plot(data['Newspaper'], y, 'b*')
        plt.title('Newspaper')
        plt.grid(True)
        plt.tight_layout()
        plt.show()

        # Hold out 20% of the samples for testing
        x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.8, random_state=1)

        clf = LinearRegression()
        model = clf.fit(x_train, y_train)
        print('Model:', model)
        print('theta (coefficients) =', clf.coef_)
        print('intercept =', clf.intercept_)

        # Evaluate on the test set
        y_hat = clf.predict(x_test)
        mse = np.average((y_hat - np.array(y_test)) ** 2)  # Mean Squared Error
        rmse = np.sqrt(mse)  # Root Mean Squared Error
        print('MSE = ', mse)
        print('RMSE = ', rmse)

        # Plot predictions against the true values
        t = np.arange(len(x_test))
        plt.plot(t, y_test, 'r-', linewidth=2, label='real data')
        plt.plot(t, y_hat, 'g-', linewidth=2, label='predict data')
        plt.legend(loc='upper right')
        plt.title('LinearRegression Predict', fontsize=18)
        plt.grid(True)
        plt.show()
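
Since the objective is the sum of squared errors, the coefficients that `LinearRegression` reports can also be obtained by solving the least-squares problem directly. The sketch below uses synthetic data as a stand-in for `Advertising.csv`; the data, the true coefficients and the noise level are all assumptions for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic stand-in for the advertising data (assumed, not the real CSV)
rng = np.random.default_rng(1)
X = rng.uniform(0, 100, size=(200, 3))
true_theta = np.array([0.05, 0.2, 0.0])
y = 3.0 + X @ true_theta + rng.normal(0, 1.0, size=200)

# Prepend a column of ones so the intercept is learned as theta_0
X_b = np.hstack([np.ones((X.shape[0], 1)), X])

# Least-squares solution; lstsq avoids explicitly inverting X^T X
theta, *_ = np.linalg.lstsq(X_b, y, rcond=None)

# scikit-learn should agree up to floating-point error
reg = LinearRegression().fit(X, y)
sk_theta = np.r_[reg.intercept_, reg.coef_]
print('least squares:', theta)
print('scikit-learn :', sk_theta)
```

Using `np.linalg.lstsq` rather than forming $(X^T X)^{-1} X^T y$ by hand is a design choice for numerical stability; both describe the same minimizer when $X^T X$ is invertible.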

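Cross-validation:

Use Lasso (L1 regularization) or Ridge (L2 regularization) regression together with cross-validation to select the most suitable hyperparameter value: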

    import numpy as np
    import matplotlib as mpl
    import matplotlib.pyplot as plt
    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import Lasso, Ridge
    from sklearn.model_selection import GridSearchCV

    if __name__ == "__main__":
        # Read with pandas
        data = pd.read_csv('./Advertising.csv')  # columns: TV, Radio, Newspaper, Sales
        x = data[['TV', 'Radio', 'Newspaper']]
        y = data['Sales']

        x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.8, random_state=1)

        model = Lasso()
        # model = Ridge()

        # The third argument is the number of values. The exponents are applied
        # to base 10 by default; pass base=2 to get powers of 2 instead.
        alpha_can = np.logspace(-3, 2, 10)
        print('alpha candidates:', alpha_can)

        # 10-fold cross-validation over the candidate alphas
        lasso_model = GridSearchCV(model, param_grid={'alpha': alpha_can}, cv=10)
        lasso_model.fit(x_train, y_train)
        print('Best hyperparameters:', lasso_model.best_params_)

        y_hat = lasso_model.predict(x_test)
        print('Model score (R^2):', lasso_model.score(x_test, y_test))
        mse = np.average((y_hat - y_test) ** 2)  # Mean Squared Error
        rmse = np.sqrt(mse)  # Root Mean Squared Error
        print('mse:', mse)
        print('rmse:', rmse)

        t = np.arange(len(x_test))
        mpl.rcParams['font.sans-serif'] = ['simHei']  # CJK-capable font; only needed for Chinese labels
        mpl.rcParams['axes.unicode_minus'] = False
        plt.plot(t, y_test, 'r-', linewidth=2, label='real data')
        plt.plot(t, y_hat, 'g-', linewidth=2, label='predict data')
        plt.title('predict', fontsize=18)
        plt.legend(loc='upper right')
        plt.grid()
        plt.show()

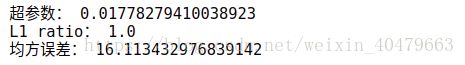
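
To make the difference between the two penalties concrete, here is a small sketch on synthetic data (an assumption for illustration: 8 features, of which only the first 2 influence the target). It compares Lasso and Ridge at one arbitrary `alpha`, then prints the cross-validated score for every candidate on the same log-spaced grid used above.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import GridSearchCV

# Synthetic data (assumed): only the first 2 of 8 features matter
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 8))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(0, 0.5, size=200)

# Compare the penalties at one fixed alpha (0.5 is an arbitrary choice)
lasso = Lasso(alpha=0.5).fit(X, y)
ridge = Ridge(alpha=0.5).fit(X, y)
n_zero_lasso = int(np.sum(lasso.coef_ == 0))
n_zero_ridge = int(np.sum(ridge.coef_ == 0))
print('exactly-zero coefficients, Lasso:', n_zero_lasso)
print('exactly-zero coefficients, Ridge:', n_zero_ridge)

# Cross-validated search over a log-spaced grid of alphas
alphas = np.logspace(-3, 2, 10)  # 10 values from 1e-3 to 1e2
search = GridSearchCV(Lasso(max_iter=10000), {'alpha': alphas}, cv=5).fit(X, y)
for a, s in zip(alphas, search.cv_results_['mean_test_score']):
    print(f'alpha={a:8.3f}  mean R^2={s:.3f}')
print('best:', search.best_params_)
```

The L1 penalty can drive coefficients exactly to zero, which acts as feature selection, while the L2 penalty only shrinks them. The default score that `GridSearchCV` reports for regressors is R², so larger is better.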

ElasticNet (Boston housing price prediction):

Build a pipeline with polynomial features:

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import ElasticNetCV
    from sklearn.preprocessing import PolynomialFeatures, StandardScaler  # standardization: (x - mean) / standard deviation
    from sklearn.pipeline import Pipeline
    from sklearn.metrics import mean_squared_error


    def not_empty(s):
        return s != ''


    if __name__ == "__main__":
        # Alternative: parse the file by hand (needs `import warnings` and `import pandas as pd`)
        # warnings.filterwarnings(action='ignore')  # suppress warnings
        # np.set_printoptions(suppress=True)
        # file_data = pd.read_csv('./housing.data', header=None)
        # # a = np.array([float(s) for s in str if s != ''])
        # data = np.empty((len(file_data), 14))
        # for i, d in enumerate(file_data.values):
        #     d = list(map(float, filter(not_empty, d[0].split(' '))))
        #     data[i] = d

        path = './housing.data'
        data = np.loadtxt(path)
        x, y = np.split(data, (13, ), axis=1)  # first 13 columns are features, the last is the price
        y = y.ravel()

        # Alternative: load_boston (removed in scikit-learn 1.2)
        # data = sklearn.datasets.load_boston()
        # x = np.array(data.data)
        # y = np.array(data.target)

        print('Number of samples: %d, number of features: %d' % x.shape)
        print(y.shape)

        x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.7, random_state=0)

        model = Pipeline([
            ('ss', StandardScaler()),
            ('poly', PolynomialFeatures(degree=3, include_bias=True)),
            # l1_ratio must lie in [0, 1]; max_iter must be an integer
            ('linear', ElasticNetCV(l1_ratio=[0.1, 0.5, 0.7, 0.9, 0.95, 0.99, 1],
                                    alphas=np.logspace(-3, 2, 5),
                                    fit_intercept=False, max_iter=1000, cv=3))
        ])

        print('Fitting the model ...')
        model.fit(x_train, y_train.ravel())

        linear = model.named_steps['linear']
        print('Best alpha:', linear.alpha_)
        print('L1 ratio:', linear.l1_ratio_)
        # print('Coefficients:', linear.coef_.ravel())

        y_pred = model.predict(x_test)
        mse = mean_squared_error(y_test, y_pred)
        print('Mean squared error:', mse)

        t = np.arange(len(y_pred))
        plt.figure(facecolor='w')
        plt.plot(t, y_test.ravel(), 'r-', lw=2, label='real')
        plt.plot(t, y_pred, 'g-', lw=2, label='predict')
        plt.legend(loc='best')
        plt.title('Boston house price', fontsize=18)
        plt.xlabel('sample number', fontsize=15)
        plt.ylabel('house price', fontsize=15)
        plt.grid()
        plt.show()
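
A degree-3 expansion of 13 features is large, which is one reason regularization matters in this pipeline. The sketch below uses random stand-in data with the same number of columns (an assumption; it is not the housing data) and a plain `ElasticNet` with fixed, arbitrarily chosen `alpha` and `l1_ratio` to show how many features the `poly` step produces and how many the fitted model keeps.

```python
import numpy as np
from sklearn.linear_model import ElasticNet
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Random stand-in with the same width as the housing features (assumed data)
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 13))
y = X[:, 0] ** 2 + X[:, 1] + rng.normal(0, 0.1, size=100)

model = Pipeline([
    ('ss', StandardScaler()),
    ('poly', PolynomialFeatures(degree=3, include_bias=True)),
    # alpha and l1_ratio are fixed here for illustration, not tuned
    ('linear', ElasticNet(alpha=0.1, l1_ratio=0.5, fit_intercept=False, max_iter=10000)),
])
model.fit(X, y)

# Steps of a fitted pipeline are reachable by name
n_expanded = model.named_steps['poly'].n_output_features_
linear = model.named_steps['linear']
n_used = int(np.sum(linear.coef_ != 0))
print('expanded features:', n_expanded)  # C(13 + 3, 3) = 560, bias column included
print('non-zero coefficients:', n_used)
```

With `include_bias=True` the expansion already contains a constant column, which is why the linear step is configured with `fit_intercept=False`.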

![[Machine Learning] Linear Regression and Code Implementation, figure 1](http://img.e-com-net.com/image/info8/0b2c2f4c141041a299e420a1193953f8.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 2](http://img.e-com-net.com/image/info8/222df8cfd05944cab125dda0b08d10ef.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 3](http://img.e-com-net.com/image/info8/41fba30e27ba451ea15f938fc8cc5e07.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 4](http://img.e-com-net.com/image/info8/280d5eb914ea4b82b773d74b6ceb0c70.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 5](http://img.e-com-net.com/image/info8/3fdcf6697bb740579b8d3db7af9ce1c3.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 6](http://img.e-com-net.com/image/info8/e8f3bc33e71b4e8ca373c8abd49ec09f.jpg)

![[Machine Learning] Linear Regression and Code Implementation, figure 7](http://img.e-com-net.com/image/info8/79424787b92d48b6b1c7d69c90e44186.jpg)