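Over the past few days I tidied up the development projects on my office computer, deleted some test projects, and came across a few earlier test demos that were actually fairly decent.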

The project code is uploaded here: https://pan.baidu.com/s/13-rTaeVLtcX0KzHy2RT5Ow (extraction code: g0u2)

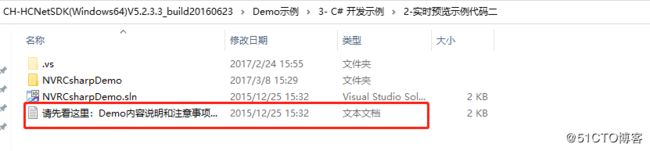

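The Hikvision SDK I used here is fairly old, because this demo was made in 2017. Note: all I did was integrate Hikvision's C# video-surveillance code into Unity3D (I used Unity 5.5). The SDK version is CH-HCNetSDK(Windows64)V5.2.3.3_build20160623_2, which can be downloaded here: https://pan.baidu.com/s/1aMX-bM3h00ogvD53YhER6w (extraction code: o5y5)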

You can also get the latest SDK from Hikvision at https://www.hikvision.com/cn/download_more_570.html#prettyPhoto and download whichever version you need.

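Once the SDK is downloaded, create a new project in Unity. Inside the downloaded SDK package, find the demo examples and look for the C# ones; there is a C# demo for each feature. Open the demo you need, follow the readme that ships with it to locate the .dll library files, and import them into Unity's Plugins folder.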
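A missing native library usually only shows up as a `DllNotFoundException` at the first SDK call, so it can help to check the Plugins folder up front. The following is a minimal sketch, not part of the SDK: the helper name `PluginCheck.FindMissing` is hypothetical, and the file names `HCNetSDK.dll` and `PlayCtrl.dll` in the usage note are assumptions; use the list from the demo's readme.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class PluginCheck
{
    // Returns every name in `required` that does not exist as a file in `pluginsDir`.
    public static List<string> FindMissing(string pluginsDir, IEnumerable<string> required)
    {
        var missing = new List<string>();
        foreach (string name in required)
        {
            if (!File.Exists(Path.Combine(pluginsDir, name)))
            {
                missing.Add(name);
            }
        }
        return missing;
    }
}
```

For example, `PluginCheck.FindMissing("Assets/Plugins", new[] { "HCNetSDK.dll", "PlayCtrl.dll" })` returns an empty list when both files are in place.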

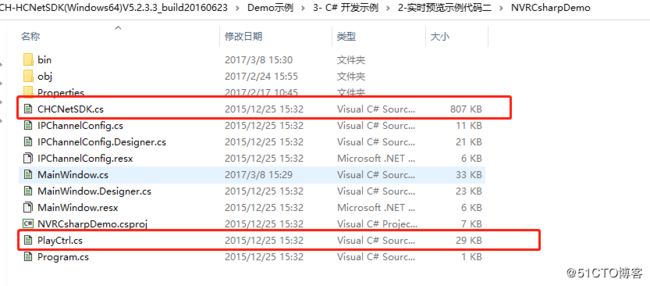

Next, find the CHCNetSDK.cs and PlayCtrl.cs files, strip out the parts tied to the C# demo's UI, and re-integrate the SDK's main functions.

For example:

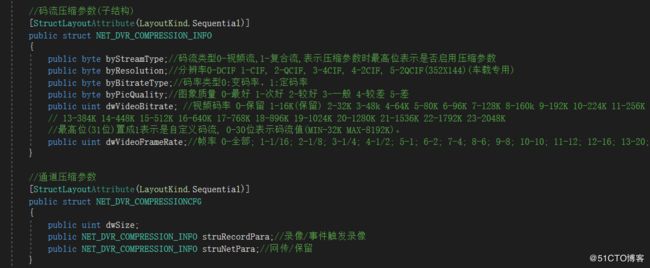

CHCNetSDK.cs

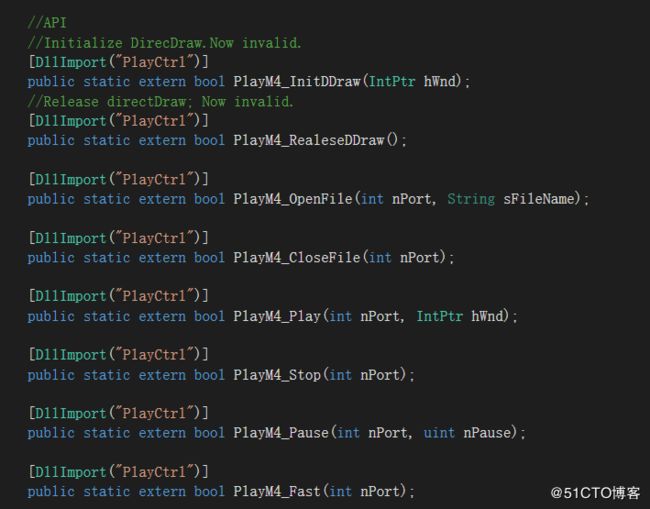

PlayCtrl.cs

Then create a script that ties these functions together to implement the video monitoring. Here is the main code:

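    private uint iLastErr = 0;            // last error code reported by the player library
    private int m_lUserID = -1;
    private bool m_bInitSDK = false;      // result of NET_DVR_Init
    private bool m_bRecord = false;
    private int m_lRealHandle = -1;
    private string str;                   // scratch string for log messages
    private int m_lPort = -1;             // player port obtained from PlayM4_GetPort
    Texture2D img;
    private string move_url;
    private FileInfo file;
    private byte[] buffer_byte;
    Texture2D tex;
    private int buffer_count = 0;
    private int num = 0;
    private PlayCtrl.DECCBFUN m_fDisplayFun = null;   // held in a field so the delegate is not garbage-collected
    byte[] byteBuf;                       // raw YV12 frame copied from the decoder
    byte[] outYuv;                        // packed Y,U,V output of Yv12ToYUV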

    // Initialization
    void Init()
    {
        // The original used TextureFormat.ATC_RGBA8, a compressed format meant for
        // mobile GPUs; RGB24 is assumed here as an uncompressed desktop-friendly format.
        tex = new Texture2D(2560, 1440, TextureFormat.RGB24, false);

        m_bInitSDK = CHCNetSDK.NET_DVR_Init();
        if (!m_bInitSDK)
        {
            Debug.Log("NET_DVR_Init error!");
        }
        else
        {
            CHCNetSDK.NET_DVR_SetLogToFile(3, "D:\\SdkLog\\", true);
        }

        // Create a texture. Texture size does not matter, since
        // LoadImage will replace it with the incoming image size.
        Texture2D texe = new Texture2D(2, 2);

        // A small 64x64 Unity logo encoded into a PNG.
        byte[] nn = new byte[] { 0, 78, 3, 56, 128 };
        byte[] pngBytes = new byte[] {
            0x89,0x50,0x4E,0x47,0x0D,0x0A,0x1A,0x0A,0x00,0x00,0x00,0x0D,0x49,0x48,0x44,0x52,
            0x00,0x00,0x00,0x40,0x00,0x00,0x00,0x40,0x08,0x00,0x00,0x00,0x00,0x8F,0x02,0x2E,
            0x02,0x00,0x00,0x01,0x57,0x49,0x44,0x41,0x54,0x78,0x01,0xA5,0x57,0xD1,0xAD,0xC4,
            0x30,0x08,0x83,0x81,0x32,0x4A,0x66,0xC9,0x36,0x99,0x85,0x45,0xBC,0x4E,0x74,0xBD,
            0x8F,0x9E,0x5B,0xD4,0xE8,0xF1,0x6A,0x7F,0xDD,0x29,0xB2,0x55,0x0C,0x24,0x60,0xEB,
            0x0D,0x30,0xE7,0xF9,0xF3,0x85,0x40,0x74,0x3F,0xF0,0x52,0x00,0xC3,0x0F,0xBC,0x14,
            0xC0,0xF4,0x0B,0xF0,0x3F,0x01,0x44,0xF3,0x3B,0x3A,0x05,0x8A,0x41,0x67,0x14,0x05,
            0x18,0x74,0x06,0x4A,0x02,0xBE,0x47,0x54,0x04,0x86,0xEF,0xD1,0x0A,0x02,0xF0,0x84,
            0xD9,0x9D,0x28,0x08,0xDC,0x9C,0x1F,0x48,0x21,0xE1,0x4F,0x01,0xDC,0xC9,0x07,0xC2,
            0x2F,0x98,0x49,0x60,0xE7,0x60,0xC7,0xCE,0xD3,0x9D,0x00,0x22,0x02,0x07,0xFA,0x41,
            0x8E,0x27,0x4F,0x31,0x37,0x02,0xF9,0xC3,0xF1,0x7C,0xD2,0x16,0x2E,0xE7,0xB6,0xE5,
            0xB7,0x9D,0xA7,0xBF,0x50,0x06,0x05,0x4A,0x7C,0xD0,0x3B,0x4A,0x2D,0x2B,0xF3,0x97,
            0x93,0x35,0x77,0x02,0xB8,0x3A,0x9C,0x30,0x2F,0x81,0x83,0xD5,0x6C,0x55,0xFE,0xBA,
            0x7D,0x19,0x5B,0xDA,0xAA,0xFC,0xCE,0x0F,0xE0,0xBF,0x53,0xA0,0xC0,0x07,0x8D,0xFF,
            0x82,0x89,0xB4,0x1A,0x7F,0xE5,0xA3,0x5F,0x46,0xAC,0xC6,0x0F,0xBA,0x96,0x1C,0xB1,
            0x12,0x7F,0xE5,0x33,0x26,0xD2,0x4A,0xFC,0x41,0x07,0xB3,0x09,0x56,0xE1,0xE3,0xA1,
            0xB8,0xCE,0x3C,0x5A,0x81,0xBF,0xDA,0x43,0x73,0x75,0xA6,0x71,0xDB,0x7F,0x0F,0x29,
            0x24,0x82,0x95,0x08,0xAF,0x21,0xC9,0x9E,0xBD,0x50,0xE6,0x47,0x12,0x38,0xEF,0x03,
            0x78,0x11,0x2B,0x61,0xB4,0xA5,0x0B,0xE8,0x21,0xE8,0x26,0xEA,0x69,0xAC,0x17,0x12,
            0x0F,0x73,0x21,0x29,0xA5,0x2C,0x37,0x93,0xDE,0xCE,0xFA,0x85,0xA2,0x5F,0x69,0xFA,
            0xA5,0xAA,0x5F,0xEB,0xFA,0xC3,0xA2,0x3F,0x6D,0xFA,0xE3,0xAA,0x3F,0xEF,0xFA,0x80,
            0xA1,0x8F,0x38,0x04,0xE2,0x8B,0xD7,0x43,0x96,0x3E,0xE6,0xE9,0x83,0x26,0xE1,0xC2,
            0xA8,0x2B,0x0C,0xDB,0xC2,0xB8,0x2F,0x2C,0x1C,0xC2,0xCA,0x23,0x2D,0x5D,0xFA,0xDA,
            0xA7,0x2F,0x9E,0xFA,0xEA,0xAB,0x2F,0xDF,0xF2,0xFA,0xFF,0x01,0x1A,0x18,0x53,0x83,
            0xC1,0x4E,0x14,0x1B,0x00,0x00,0x00,0x00,0x49,0x45,0x4E,0x44,0xAE,0x42,0x60,0x82,};

        // Load data into the texture.
        texe.LoadImage(pngBytes);
    }

    // Stream data callback
    public void RealDataCallBack(Int32 lRealHandle, UInt32 dwDataType, IntPtr pBuffer, UInt32 dwBufSize, IntPtr pUser)
    {
        Debug.Log("dwDataType " + dwDataType + " pBuffer " + pBuffer + " dwBufSize " + dwBufSize);
        //byte[] bte = new byte[(int)dwBufSize];

        switch (dwDataType)
        {
            case CHCNetSDK.NET_DVR_SYSHEAD:
                if (dwBufSize > 0)
                {
                    //Marshal.Copy(pBuffer, bte, 0, (int)dwBufSize);
                    //for (int i = 0; i < bte.Length; i++)
                    //{
                    //    buffer_byte[num + i] = bte[i];
                    //}
                    //num += bte.Length;

                    if (m_lPort >= 0)
                    {
                        return; // the same stream does not need the open-stream calls more than once
                    }

                    // Get the port to play
                    if (!PlayCtrl.PlayM4_GetPort(ref m_lPort))
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "PlayM4_GetPort failed, error code= " + iLastErr;
                        Debug.Log(str);
                        break;
                    }

                    // Set the stream mode: real-time stream mode
                    if (!PlayCtrl.PlayM4_SetStreamOpenMode(m_lPort, PlayCtrl.STREAME_REALTIME))
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "Set STREAME_REALTIME mode failed, error code= " + iLastErr;
                        Debug.Log(str);
                    }

                    // Open the stream and feed in the header data
                    if (!PlayCtrl.PlayM4_OpenStream(m_lPort, pBuffer, dwBufSize, 2 * 1024 * 1024))
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "PlayM4_OpenStream failed, error code= " + iLastErr;
                        Debug.Log(str);
                        break;
                    }

                    // Set the display buffer number
                    if (!PlayCtrl.PlayM4_SetDisplayBuf(m_lPort, 15))
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "PlayM4_SetDisplayBuf failed, error code= " + iLastErr;
                        Debug.Log(str);
                    }

                    // Set the display mode
                    if (!PlayCtrl.PlayM4_SetOverlayMode(m_lPort, 0, 0/* COLORREF(0)*/)) // play off screen
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "PlayM4_SetOverlayMode failed, error code= " + iLastErr;
                        Debug.Log(str);
                    }

                    // Set the decode callback to receive the decoded raw audio/video data.
                    // Pass the delegate stored in the field: a temporary delegate created
                    // from the method name could be garbage-collected while native code
                    // still holds a pointer to it.
                    m_fDisplayFun = new PlayCtrl.DECCBFUN(DecCallbackFUN);
                    //if (!PlayCtrl.PlayM4_SetDecCallBackEx(m_lPort, m_fDisplayFun, IntPtr.Zero, 0))
                    //{
                    //    Debug.Log("PlayM4_SetDisplayCallBack fail");
                    //}
                    PlayCtrl.PlayM4_SetDecCallBack(m_lPort, m_fDisplayFun);

                    // Start decoding (no window handle, so nothing is drawn by the player)
                    if (!PlayCtrl.PlayM4_Play(m_lPort, IntPtr.Zero))
                    {
                        iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                        str = "PlayM4_Play failed, error code= " + iLastErr;
                        Debug.Log(str);
                        break;
                    }
                }
                break;

            case CHCNetSDK.NET_DVR_STREAMDATA:
                //Debug.Log("pBuffer" + pBuffer);
                if (dwBufSize > 0 && m_lPort != -1)
                {
                    //Marshal.Copy(pBuffer, bte, 0, (int)dwBufSize);
                    //for (int i = 0; i < bte.Length; i++)
                    //{
                    //    buffer_byte[num + i] = bte[i];
                    //    //Debug.Log("byteeeee " + buffer_byte[i]);
                    //}
                    //num += bte.Length;
                    //buffer_count = num;
                    //img.LoadImage(buffer_byte);
                    //RawImg.GetComponent<RawImage>().texture = byteArrayToImage;
                    //Creat(byt);

                    for (int i = 0; i < 999; i++)
                    {
                        // Input the stream data to decode; retry while the player buffer is full
                        if (!PlayCtrl.PlayM4_InputData(m_lPort, pBuffer, dwBufSize))
                        {
                            iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                            str = "PlayM4_InputData failed, error code= " + iLastErr;
                            Thread.Sleep(2);
                        }
                        else
                        {
                            break;
                        }
                    }
                }
                break;

            default:
                if (dwBufSize > 0 && m_lPort != -1)
                {
                    // Input the other data
                    for (int i = 0; i < 999; i++)
                    {
                        if (!PlayCtrl.PlayM4_InputData(m_lPort, pBuffer, dwBufSize))
                        {
                            iLastErr = PlayCtrl.PlayM4_GetLastError(m_lPort);
                            str = "PlayM4_InputData failed, error code= " + iLastErr;
                            Thread.Sleep(2);
                        }
                        else
                        {
                            break;
                        }
                    }
                }
                break;
        }
    }
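The two `PlayM4_InputData` loops in the callback follow the same pattern: try the call, sleep briefly if it fails, and give up after a fixed number of attempts. As a rough sketch, that pattern could be pulled into a small helper. The `Retry.Until` name is hypothetical and not part of the Hikvision SDK.

```csharp
using System;
using System.Threading;

public static class Retry
{
    // Calls `attempt` until it returns true or `maxTries` attempts have been made,
    // sleeping `sleepMs` milliseconds after each failed attempt.
    // Returns true if one of the attempts succeeded.
    public static bool Until(Func<bool> attempt, int maxTries, int sleepMs)
    {
        for (int i = 0; i < maxTries; i++)
        {
            if (attempt())
            {
                return true;
            }
            Thread.Sleep(sleepMs);
        }
        return false;
    }
}
```

With this in place, each loop would shrink to something like `Retry.Until(() => PlayCtrl.PlayM4_InputData(m_lPort, pBuffer, dwBufSize), 999, 2)`, and the return value tells you whether the data was eventually accepted.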

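    // Decode callback: called for every decoded frame
    private void DecCallbackFUN(int nPort, IntPtr pBuf, int nSize, ref PlayCtrl.FRAME_INFO pFrameInfo, int nReserved1, int nReserved2)
    {
        if (pFrameInfo.nType == 3) //#define T_YV12 3
        {
            FileStream fs = null;
            BinaryWriter bw = null;
            try
            {
                // Note: the file is opened but nothing is written to it in this version.
                fs = new FileStream("DecodedVideo.yuv", FileMode.Append);
                bw = new BinaryWriter(fs);

                // Size the buffers from the actual frame; they are not allocated
                // anywhere else in this listing.
                if (byteBuf == null || byteBuf.Length != nSize)
                {
                    byteBuf = new byte[nSize];
                }
                int packedSize = pFrameInfo.nWidth * pFrameInfo.nHeight * 3;
                if (outYuv == null || outYuv.Length != packedSize)
                {
                    outYuv = new byte[packedSize];
                }

                Marshal.Copy(pBuf, byteBuf, 0, nSize);

                // widthStep is the byte length of one packed output row (3 bytes per pixel).
                // The original hard-coded 7680, which is 2560 * 3.
                Yv12ToYUV(byteBuf, pFrameInfo.nWidth, pFrameInfo.nHeight, pFrameInfo.nWidth * 3);

                //for (int i = 0; i < byteBuf.Length; i++)
                //{
                //    Debug.Log("byteBuf SSSSSS" + byteBuf[i]);
                //}
                //tex.LoadRawTextureData(byteBuf);
                //tex.Apply();
                //RawImg.GetComponent<RawImage>().texture = tex;
                //Creat(byteBuf);
            }
            catch (System.Exception ex)
            {
                Debug.Log(ex.ToString());
            }
            finally
            {
                // Guard against a failed constructor leaving these null.
                if (bw != null) bw.Close();
                if (fs != null) fs.Close();
            }
        }
    }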
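Unity APIs such as `Texture2D.LoadRawTextureData` may only be called from the main thread, while the decode callback is presumably invoked on a thread owned by the player library (an assumption worth verifying for your SDK version). One way to bridge the two is a small "latest frame" mailbox: the callback publishes a copy of the frame, and `Update()` picks it up. The class below is a minimal sketch with hypothetical names, not something shipped with the SDK.

```csharp
using System;

public sealed class LatestFrame
{
    private readonly object _gate = new object();
    private byte[] _pending; // newest frame not yet consumed, or null

    // Called from the producer thread. Replaces any frame that was not consumed yet,
    // so a slow consumer simply skips frames instead of building up a backlog.
    public void Publish(byte[] frame)
    {
        lock (_gate)
        {
            _pending = frame;
        }
    }

    // Called from the consumer thread. Returns false when no new frame is waiting.
    public bool TryTake(out byte[] frame)
    {
        lock (_gate)
        {
            frame = _pending;
            _pending = null;
            return frame != null;
        }
    }
}
```

The producer should publish a buffer it will not write to again (for example a fresh copy of the converted frame). In `Update()`, a successful `TryTake` would then be followed by `tex.LoadRawTextureData(frame)` and `tex.Apply()`.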

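    // Video frame conversion: planar YV12 (Y plane, then V plane, then U plane)
    // to packed Y,U,V with 3 bytes per pixel. The result is written to outYuv;
    // widthStep is the byte length of one output row.
    private void Yv12ToYUV(byte[] inYv12, int width, int height, int widthStep)
    {
        int col;
        int row;
        int tmp;
        int idx;
        int Y, U, V;

        for (row = 0; row < height; row++)
        {
            idx = row * widthStep;      // start of this row in the packed output
            int rowptr = row * width;   // start of this row in the Y plane

            for (col = 0; col < width; col++)
            {
                // Each chroma sample is shared by a 2x2 block of pixels.
                tmp = (row / 2) * (width / 2) + (col / 2);

                Y = (int)inYv12[rowptr + col];
                U = (int)inYv12[width * height + width * height / 4 + tmp];
                V = (int)inYv12[width * height + tmp];

                outYuv[idx + col * 3] = (byte)Y;
                outYuv[idx + col * 3 + 1] = (byte)U;
                outYuv[idx + col * 3 + 2] = (byte)V;
            }
        }
    }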
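The index arithmetic in `Yv12ToYUV` relies on the YV12 memory layout: a full-resolution Y plane, followed by a quarter-size V plane, followed by a quarter-size U plane. The sketch below spells those offsets out; the `Yv12Layout` type is a hypothetical helper, and it assumes even width and height with no row padding.

```csharp
using System;

public struct Yv12Layout
{
    public int YSize;      // bytes in the Y plane
    public int ChromaSize; // bytes in each of the V and U planes
    public int VOffset;    // index of the first V byte
    public int UOffset;    // index of the first U byte
    public int TotalSize;  // bytes in the whole frame

    // Computes plane sizes and offsets for a width x height YV12 frame.
    public static Yv12Layout For(int width, int height)
    {
        Yv12Layout layout = new Yv12Layout();
        layout.YSize = width * height;
        layout.ChromaSize = (width / 2) * (height / 2);
        layout.VOffset = layout.YSize;
        layout.UOffset = layout.YSize + layout.ChromaSize;
        layout.TotalSize = layout.YSize + 2 * layout.ChromaSize;
        return layout;
    }
}
```

For a 2560 x 1440 frame this gives a Y plane of 3,686,400 bytes and a total of 5,529,600 bytes, which is the `nSize` you would expect the decode callback to report for that resolution under these assumptions.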

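Finally, create your own View-layer script, and you have the Hikvision SDK integrated into Unity3D for video monitoring. I tested it and it works. I don't know much about video decoding, though, so the colors in the picture come out a bit off; the playback itself is reasonably smooth.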

Note: the video colors can be adjusted in the Yv12ToYUV and DecCallbackFUN methods.
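One likely reason for the odd colors is that `Yv12ToYUV` only repacks the planes into Y,U,V triples, while a texture expects R,G,B. A minimal sketch of a direct YV12 to RGB24 conversion follows. It assumes BT.601 limited-range video (Y in 16..235), even dimensions, and no row padding; whether the camera really outputs that range is an assumption you may need to adjust. The `Yv12Converter` name is hypothetical.

```csharp
using System;

public static class Yv12Converter
{
    private static byte Clamp(int value)
    {
        if (value < 0) return 0;
        if (value > 255) return 255;
        return (byte)value;
    }

    // Converts a planar YV12 frame (Y plane, V plane, U plane) to packed RGB24
    // using the common integer approximation of the BT.601 limited-range matrix.
    public static byte[] ToRgb24(byte[] yv12, int width, int height)
    {
        int ySize = width * height;
        int vOffset = ySize;
        int uOffset = ySize + ySize / 4;
        byte[] rgb = new byte[ySize * 3];

        for (int row = 0; row < height; row++)
        {
            for (int col = 0; col < width; col++)
            {
                // One chroma sample covers a 2x2 block of pixels.
                int chroma = (row / 2) * (width / 2) + (col / 2);
                int c = yv12[row * width + col] - 16;
                int d = yv12[uOffset + chroma] - 128;
                int e = yv12[vOffset + chroma] - 128;

                int o = (row * width + col) * 3;
                rgb[o] = Clamp((298 * c + 409 * e + 128) >> 8);               // R
                rgb[o + 1] = Clamp((298 * c - 100 * d - 208 * e + 128) >> 8); // G
                rgb[o + 2] = Clamp((298 * c + 516 * d + 128) >> 8);           // B
            }
        }
        return rgb;
    }
}
```

The result can be uploaded to a `TextureFormat.RGB24` texture of the same size. Unity stores texture rows bottom-up, so the picture may appear vertically flipped unless the rows are reversed or the UVs are flipped.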