leaf variable & with torch.no_grad & -=

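This article uses the following three-layer fully connected neural network as its running example (input layer, hidden layer <one linear layer followed by a ReLU>, output layer). The code does not use the torch.nn library, but it does use PyTorch's automatic differentiation (autograd). The parameters W1 and W2 therefore have to be initialized up front, and the expressions for y_pred and the loss function, as well as the parameter updates, are written by hand.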

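import torch

# Number of samples, input dimension, hidden dimension, output dimension
n, d_input, d_hidden, d_output = 64, 1000, 100, 10

# Create some random training data
X = torch.randn(n, d_input)
Y = torch.randn(n, d_output)

# Randomly initialize W1 and W2
W1 = torch.randn(d_input, d_hidden, requires_grad = True)
W2 = torch.randn(d_hidden, d_output, requires_grad = True)

# Learning rate
learning_rate = 1e-6

# Train the network
for it in range(500): # 500 iterations
    # Forward pass: linear -> ReLU -> linear
    Y_pred = X.mm(W1).clamp(min = 0).mm(W2)

    # Loss function: sum of squared errors
    loss = (Y_pred - Y).pow(2).sum()

    # Compute gradients
    loss.backward() # fills .grad for every tensor with requires_grad = True (W1 and W2; X and Y get no gradient)

    # Update parameters
    with torch.no_grad(): # do not record the update itself in the computation graph
        W1 -= learning_rate * W1.grad
        W2 -= learning_rate * W2.grad

        # Zero the gradients
        W1.grad.zero_() # reset W1.grad in place; otherwise the next iteration adds its gradient to the current value and the gradient keeps growing
        W2.grad.zero_()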

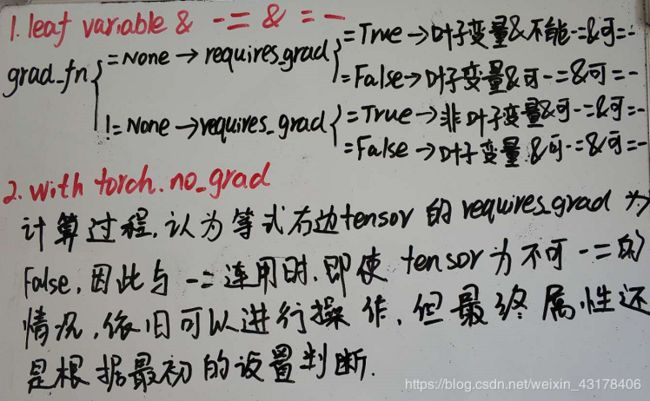
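The comment about zeroing gradients can be checked in isolation. The following is a minimal sketch, using a small hypothetical tensor w instead of W1 and W2, that calls backward() twice without zeroing and then resets the buffer:

```python
import torch

# Hypothetical toy parameter, standing in for W1 / W2
w = torch.ones(3, requires_grad=True)

# First backward pass: d(sum(2 * w))/dw is 2 for every element
(2 * w).sum().backward()
grad_after_first = w.grad.clone()

# Second backward pass without zeroing: the new gradient is added to the old one
(2 * w).sum().backward()
grad_after_second = w.grad.clone()

# zero_() resets the gradient buffer in place
w.grad.zero_()
grad_after_zero = w.grad.clone()

print(grad_after_first)   # tensor([2., 2., 2.])
print(grad_after_second)  # tensor([4., 4., 4.])
print(grad_after_zero)    # tensor([0., 0., 0.])
```

The second value is twice the first, which is the accumulation that the zero_() calls in the training loop are meant to prevent.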

1. leaf variable

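Whenever grad_fn is None, the tensor is a leaf variable regardless of whether requires_grad is True or False. In particular, any tensor that you create directly is a leaf.

A tensor produced by an operation is a leaf only if its requires_grad is False (in which case its grad_fn is also None). If its requires_grad is True, it has a grad_fn and is a non-leaf variable.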

# Created directly, requires_grad = False (grad_fn is None)
x = torch.randn(2, 3, requires_grad = False)
x.is_leaf

# Created directly, requires_grad = True (grad_fn is None)
y = torch.randn(2, 3, requires_grad = True)
y.is_leaf

# Result of an operation, requires_grad = False (grad_fn is still None)
w = torch.randn(2, 3, requires_grad = False)
z = torch.randn(2, 3, requires_grad = False)
u = w + z
u.is_leaf

# Result of an operation, requires_grad = True (grad_fn is not None)
p = torch.randn(2, 3, requires_grad = True)
q = torch.randn(2, 3, requires_grad = True)
o = p + q
o.is_leaf

True

True

True

False
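To see how is_leaf relates to grad_fn and requires_grad, it may help to print all three side by side. This is a small sketch; the names a, b, c and d are illustrative and not part of the example above:

```python
import torch

a = torch.randn(2, 3)                      # created directly, requires_grad defaults to False
b = torch.randn(2, 3, requires_grad=True)  # created directly, requires grad
c = a + a                                  # result of an op whose inputs do not require grad
d = b * 2                                  # result of an op on a tensor that requires grad

for name, t in [("a", a), ("b", b), ("c", c), ("d", d)]:
    print(name, t.requires_grad, t.grad_fn is None, t.is_leaf)

# After backward(), the gradient is stored on the leaf b
d.sum().backward()
print(b.grad)  # every element is 2, since d = b * 2
```

Only d has a grad_fn, and it is the only non-leaf of the four.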

2. with torch.no_grad()

Before discussing with torch.no_grad(), let us start with requires_grad.

- requires_grad

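In PyTorch, a tensor has a requires_grad attribute. If it is set to True, autograd computes the gradient for that tensor during backpropagation. The attribute defaults to False. If a node (a leaf variable, i.e. a tensor you created yourself) has requires_grad set to True, then every node that depends on it also has requires_grad = True, even if the other tensors it depends on have requires_grad = False.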

x = torch.randn(10, 5, requires_grad = True)

y = torch.randn(10, 5, requires_grad = False)

z = torch.randn(10, 5, requires_grad = False)

w = x + y + z

w.requires_grad

True

- volatile

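Note first that this feature has been removed, but it is still worth describing because it helps explain torch.no_grad. In earlier versions, a tensor (or rather a Variable: tensors used to be wrapped in a Variable, which has also been deprecated, so tensors are now used directly) had another flag, volatile. If a tensor had volatile = True, every tensor that depended on it also became volatile = True, and no gradients were computed for them during backpropagation, which saved a large amount of GPU or CPU memory.

Since a tensor had both requires_grad and volatile, what happened when the two settings contradicted each other? volatile = True took priority over requires_grad: when volatile = True, no gradients were computed during backpropagation, whether requires_grad was True or False. According to the original write-up, volatile gave some speed-up and saved about half of the GPU memory, because nothing needed to be stored for the gradient computation. (volatile defaulted to False, in which case requires_grad decided whether gradients were computed.)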

- with torch.no_grad

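As mentioned above, volatile has been removed; its replacement is with torch.no_grad. It works much like volatile: even if a tensor (call it x) has requires_grad = True, a new tensor computed from x inside the block (call it w) has requires_grad = False and grad_fn = None, so w cannot be differentiated. For example: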

x = torch.randn(10, 5, requires_grad = True)

y = torch.randn(10, 5, requires_grad = True)

z = torch.randn(10, 5, requires_grad = True)

with torch.no_grad():
    w = x + y + z

print(w.requires_grad)

print(w.grad_fn)

print(w.requires_grad)

False

None

False

A concrete differentiation example makes this clearer:

x = torch.randn(3, 2, requires_grad = True)

y = torch.randn(3, 2, requires_grad = True)

z = torch.randn(3, 2, requires_grad = False)

w = (x + y + z).sum()

print(w)

print(w.data)

print(w.grad_fn)

print(w.requires_grad)

w.backward()

print(x.grad)

print(y.grad)

print(z.grad)

tensor(0.0676, grad_fn=<SumBackward0>)

tensor(0.0676)

<SumBackward0 object at 0x...>

True

tensor([[1., 1.],
        [1., 1.],
        [1., 1.]])

tensor([[1., 1.],
        [1., 1.],
        [1., 1.]])

None

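As you can see, when w has requires_grad = True, backward() works, and gradients are computed only for x and y, which were created with requires_grad = True. No gradient is computed for z.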

x = torch.randn(10, 5, requires_grad = True)

y = torch.randn(10, 5, requires_grad = True)

z = torch.randn(10, 5, requires_grad = True)

with torch.no_grad():
    w = (x + y + z)

print(w.requires_grad)

print(x.requires_grad)

w.backward()

False

True

RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn

The error message says that w has no grad_fn, i.e. it cannot be differentiated.
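The effect of with torch.no_grad() is limited to the block itself. The sketch below, which uses torch.is_grad_enabled() to inspect the current mode and an illustrative expression (x * 2).sum(), computes the same value inside and outside the block:

```python
import torch

x = torch.randn(3, 2, requires_grad=True)

with torch.no_grad():
    enabled_inside = torch.is_grad_enabled()  # False inside the block
    w_inside = (x * 2).sum()                  # not recorded in the graph

enabled_outside = torch.is_grad_enabled()     # True again after the block
w_outside = (x * 2).sum()                     # recorded as usual

print(enabled_inside, w_inside.requires_grad)    # False False
print(enabled_outside, w_outside.requires_grad)  # True True

# Only the result computed outside the block can be differentiated
w_outside.backward()
print(x.grad)  # every element is 2
```

x itself keeps requires_grad = True throughout; only the results computed inside the block are cut off from the graph.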

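3. Difference between w -= n and w = w - n

The code at the top of this article uses the -= operator: W1 -= learning_rate * W1.grad. If it is changed to W1 = W1 - learning_rate*W1.grad, the loop raises an error, because W1 is rebound to a new tensor whose .grad is None. So what is the difference between the two forms?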

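- The -= form modifies the tensor in place. The memory that was allocated earlier stays at the same address and only the data changes, similar to mutable Python types such as lists.

- The w = w - n form allocates a new block of memory, so the original W1 and the updated W1 live at different addresses. This is similar to immutable Python types such as strings.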
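The two bullets above can be checked with data_ptr(), which returns the address of a tensor's first element. A minimal sketch, with illustrative tensors w and n that do not require grad:

```python
import torch

w = torch.ones(3)
n = torch.ones(3)

original = w                   # keep a second reference to the original tensor
ptr_before = w.data_ptr()

# In-place: same Python object, same memory, new values
w -= n
inplace_same_object = w is original
inplace_same_memory = w.data_ptr() == ptr_before

# Out-of-place: w is rebound to a newly allocated tensor
w = w - n
rebound_same_object = w is original
rebound_same_memory = w.data_ptr() == ptr_before

print(inplace_same_object, inplace_same_memory)   # True True
print(rebound_same_object, rebound_same_memory)   # False False
print(original)                                   # tensor([0., 0., 0.]), only changed by the in-place step
```

The extra reference named original is kept only so that the old tensor stays alive for the comparison.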

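So when is each form applicable?

-= cannot be used only when grad_fn is None and requires_grad = True, that is, on a leaf variable that requires grad. It works in all other cases. The w = w - n form can be used in any case.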
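The restriction on -= can be reproduced directly. In this sketch, leaf_with_grad and leaf_without_grad are illustrative names; the in-place update on the first is expected to raise a RuntimeError, while the second accepts it:

```python
import torch

leaf_with_grad = torch.randn(3, requires_grad=True)
leaf_without_grad = torch.randn(3)

# In-place update on a leaf that requires grad is rejected by autograd
try:
    leaf_with_grad -= 1.0
    inplace_failed = False
except RuntimeError as err:
    inplace_failed = True
    print("RuntimeError:", err)

# The same update on a tensor that does not require grad is fine
before = leaf_without_grad.clone()
leaf_without_grad -= 1.0

print(inplace_failed)                                    # True
print(torch.allclose(leaf_without_grad, before - 1.0))   # True
```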

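4. Using with torch.no_grad together with -=

In the code at the top of this article, W1 -= learning_rate * W1.grad is applied to a W1 that has grad_fn = None and requires_grad = True. According to the conclusion of section 3, this operation should not be allowed, so why does it not raise an error here?

Recall volatile from earlier: with volatile = True, no gradient was computed for a tensor even if it had requires_grad = True. You can think of volatile = True as treating the tensor as requires_grad = False during the computation, so the next step could proceed, while the requires_grad of the final result was still determined by the original setting.

with torch.no_grad() behaves similarly. In the example below, x normally cannot be updated with -=, but inside with torch.no_grad the computation treats x as if requires_grad = False, so -= is allowed. Once -= has finished, the requires_grad of the updated x is still True.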

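x = torch.randn(10, 5, requires_grad = True)

y = torch.randn(10, 5) # y was not defined in the original snippet; any tensor of the same shape works

with torch.no_grad():
    x -= y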

x.requires_grad

True
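For comparison, here is a sketch of what happens to the flags when the out-of-place form is used inside with torch.no_grad() instead. The names x_new and original are illustrative:

```python
import torch

x = torch.randn(10, 5, requires_grad=True)
y = torch.randn(10, 5)
original = x  # second reference, to check that -= keeps the same tensor object

with torch.no_grad():
    x_new = x - y  # out-of-place: a new tensor created with grad mode off
    x -= y         # in-place: x itself is updated

print(x is original, x.requires_grad, x.is_leaf)  # True True True
print(x_new.requires_grad, x_new.grad_fn)         # False None
print(x_new.grad)                                 # None
```

The tensor produced by the out-of-place form no longer requires grad, so rebinding a parameter name to it would leave later backward() calls with nothing to differentiate with respect to that parameter. The in-place form keeps the original leaf and its requires_grad = True.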