Scraping JD.com with Python (web crawler)

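A. Preparation

1. Open cmd and run: pip install selenium, then pip install lxml

2. Download the driver for your browser from https://github.com/mozilla/geckodriver/releases (I use Firefox).

3. Approach: use selenium to fetch the HTML automatically, build the URL for each page to page through the results, use XPath to extract the price, description, link, sales, and shop from the HTML, and finally save the data.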
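The paging and extraction steps can be tried without a browser. The following is a minimal sketch, not the full crawler: `build_page_url` and `parse_items` are illustrative names, `SAMPLE_HTML` is a made-up, heavily simplified stand-in for one search result item, and the query parameters are a subset of those in the search URL used in this post.

```python
from urllib.parse import urlencode

from lxml import etree

SEARCH_URL = "https://search.jd.com/Search"


def build_page_url(keyword, page):
    """Build the search URL for one result page (assumed minimal parameter set)."""
    query = urlencode({"keyword": keyword, "enc": "utf-8", "page": page})
    return f"{SEARCH_URL}?{query}"


# Hypothetical sample markup; the real page contains far more structure.
SAMPLE_HTML = """
<div id="J_goodsList"><ul>
  <li class="gl-item"><div>
    <div class="p-price"><strong><i>1999.00</i></strong></div>
    <div class="p-name p-name-type-2"><a title="Example Phone" href="//item.jd.com/1.html"></a></div>
  </div></li>
</ul></div>
"""


def parse_items(page_source):
    """Extract price, name and link for every result item in the page source."""
    tree = etree.HTML(page_source)
    items = []
    for li in tree.xpath('//div[@id="J_goodsList"]//li[@class="gl-item"]'):
        items.append({
            "price": li.xpath('./div/div[@class="p-price"]//i/text()'),
            "name": li.xpath('./div/div[@class="p-name p-name-type-2"]/a/@title'),
            "link": li.xpath('./div/div[@class="p-name p-name-type-2"]/a/@href'),
        })
    return items


if __name__ == "__main__":
    print(build_page_url("手机", 2))
    print(parse_items(SAMPLE_HTML))
```

Each XPath query returns a list, so a field that is missing on the page shows up as an empty list rather than raising an error.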

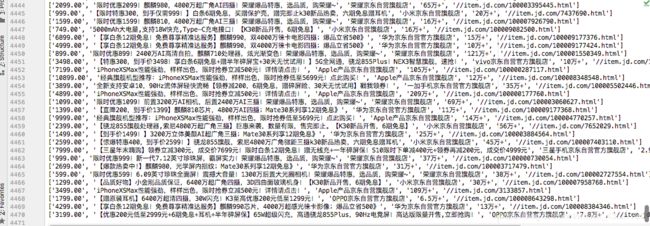

Result: 4,471 records of price, description, link, sales, and shop were extracted from JD's mobile phone listings.

Without further ado, here is the code.

B. Code

# author: heguobao
# time: 2019.12.16
# python / automated testing with selenium
# https://search.jd.com/Search?keyword=%E6%89%8B%E6%9C%BA&enc=utf-8&wq=%E6%89%8B%E6%9C%BA&pvid=af566b75cfb54d0bbf01feebb0069bcd

import time

from selenium import webdriver
from lxml import etree

# Not passed to selenium below; the browser sends its own User-Agent.
header = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36'}

# Path to the downloaded geckodriver binary; adjust it for your machine.
driver_path = r'/Users/apple/Desktop/diver/geckodriver'

# Search result URL; {page} is filled in to page through the results.
url = 'https://search.jd.com/Search?keyword=%E6%89%8B%E6%9C%BA&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&wq=dain%20n&page={page}&s=1&click=0'

if __name__ == '__main__':
    # Selenium 3 API, current when this was written; Selenium 4 removed
    # executable_path and the find_element_by_* helpers.
    driver = webdriver.Firefox(executable_path=driver_path)
    # Open the home page and search for "手机" (mobile phone) through the UI.
    driver.get('https://www.jd.com')
    driver.find_element_by_id('key').send_keys('手机')
    driver.find_element_by_class_name('button').click()

    with open('4471条京东手机数据.txt', 'w', encoding='utf-8') as fp:
        for i in range(1, 150):
            driver.get(url.format(page=i))
            # Scroll to the bottom so lazily loaded items are rendered.
            driver.execute_script('window.scrollTo(0,document.body.scrollHeight);')
            time.sleep(2)  # assumed delay; tune it for your connection
            # Parse the page source again on every page. Parsing once before
            # the loop would write the same items on every iteration.
            h5 = etree.HTML(driver.page_source)
            data = h5.xpath('//div[@id="J_goodsList"]//li[@class="gl-item"]')
            for k in data:
                price = k.xpath('./div/div[@class="p-price"]//i/text()')
                name = k.xpath('./div/div[@class="p-name p-name-type-2"]/a/@title')
                shangjia = k.xpath('./div/div/span/a/@title')  # shop
                jk = k.xpath('./div/div/strong/a/text()')  # sales
                jks = k.xpath('./div/div[@class="p-name p-name-type-2"]/a/@href')  # link
                a = price + name + shangjia + jk + jks
                print(a)
                fp.write(str(a) + '\n')

    driver.quit()
    print('Success')
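The script writes each record as the string form of a Python list, which is awkward to load back later. One possible alternative is to save the records as CSV with the standard library. This is only a sketch: `FIELDS`, `first_or_empty`, and `save_rows` are illustrative names that are not part of the original script, and the column names simply follow the fields listed in the approach above.

```python
import csv

# Column names follow the fields extracted in this post.
FIELDS = ["price", "name", "shop", "sales", "link"]


def first_or_empty(values):
    """XPath queries return lists; take the first hit, or '' if nothing matched."""
    return values[0].strip() if values else ""


def save_rows(rows, path):
    """Write a list of dicts (one per product) to a CSV file with a header row."""
    with open(path, "w", encoding="utf-8", newline="") as fp:
        writer = csv.DictWriter(fp, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    # Hypothetical record, shaped like one item from the crawl loop.
    row = {
        "price": first_or_empty(["1999.00"]),
        "name": first_or_empty(["Example Phone"]),
        "shop": first_or_empty([]),
        "sales": first_or_empty([]),
        "link": first_or_empty(["//item.jd.com/1.html"]),
    }
    save_rows([row], "jd_phones.csv")
```

Inside the crawl loop, each XPath result would be passed through `first_or_empty` and the resulting dicts collected into a list before calling `save_rows`.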