Ubuntu16.04+RealsenseT265跑通VINS-Fusion

一、提前条件

系统版本:ubuntu16.04+ROS(kinetic)

默认已经掌握了ubuntu系统下的基本命令以及ROS的基本操作

二、realsenseT265的SDK测试

官方网站https://www.intelrealsense.com/get-started-tracking-camera/

照着其中https://github.com/IntelRealSense/librealsense/blob/development/doc/distribution_linux.md安装过程进行安装即可,保证运行realsense-viewer后能有相关界面。

三、realsenseT265的标定

1.准备工作(需要注意以下文件编译过程中,可能出现依赖库缺失的报错,这很正常,按照提示的错误信息安装对应依赖库即可)

1.1 下载并编译ceres

git clone https://github.com/ceres-solver/ceres-solver

cd ceres

mkdir build

cd build

cmake ..

make

sudo make install

1.2 下载并编译code_utils

首先,安装依赖库

sudo apt-get install libdw-dev

之后,安装code_utils

cd ~/catkin_ws/src

git clone https://github.com/gaowenliang/code_utils

cd ..

catkin_make

source ~/catkin_ws/devel/setup.bash

如果有报错fatal error: backward.hpp: 没有那个文件或目录。此时在code_utils下面找到sumpixel_test.cpp,修改#include "backward.hpp"为#include “code_utils/backward.hpp”后再catkin_make。

我也遇见过no module named “XXX”的错误,是因为没安装对应的依赖库,安装后再catkin_make即可。

1.3 下载并编译imu_utils

cd ~/catkin_ws/src

git clone https://github.com/gaowenliang/imu_utils.git

cd ..

catkin_make

source ~/catkin_ws/devel/setup.bash

注意:先编译code_utils,再编译imu_utils,不能放在一起编译。

1.4 下载并编译kalibr

首先安装依赖库

sudo apt-get install python-setuptools

sudo apt-get install python-setuptools python-rosinstall ipython libeigen3-dev libboost-all-dev doxygen

sudo apt-get install ros-kinetic-vision-opencv ros-kinetic-image-transport-plugins ros-kinetic-cmake-modules python-software-properties software-properties-common libpoco-dev python-matplotlib python-scipy python-git python-pip ipython libtbb-dev libblas-dev liblapack-dev python-catkin-tools libv4l-dev

接下来安装kalibr

cd ~/catkin_ws/src

git clone https://github.com/ethz-asl/Kalibr.git

cd ..

catkin_make

source ~/catkin_ws/devel/setup.bash

在看其他博客时发现可能会出现python相关的问题,我用的是ubuntu16.04自带的Python 2.7.12版本,在安装过程中没有出现问题。

1.5 下载并安装realsense-ros

首先安装依赖库

sudo apt-get install ros-kinetic-ddynamic-reconfigure

接下来安装realsense-ros

cd ~/catkin_ws/src

git clone https://github.com/IntelRealSense/realsense-ros

cd ~/catkin_ws

catkin_make

source ~/catkin_ws/devel/setup.bash

至此,我们的准备工作就做好了。

2.标定流程

2.1修改工作空间下文件的读写权限,不然后面在运行时会报错

cd ~/catkin_ws

sudo chmod 777 src/ -R

2.2 相关文件的修改

打开位于realsense-ros/realsense2_camera/launch目录下的rs_t265.launch文件,将原本的代码

在~/catkin_ws/src/imu_utils/launch中新建t265_imu.launch如下

<launch>

<node pkg="imu_utils" type="imu_an" name="imu_an" output="screen">

<param name="imu_topic" type="string" value= "/camera/imu"/>

<param name="imu_name" type="string" value= "BMI055"/>

<param name="data_save_path" type="string" value= "$(find imu_utils)/data/"/>

<param name="max_time_min" type="int" value= "60"/>

<param name="max_cluster" type="int" value= "200"/>

</node>

</launch>

2.3 IMU的校准

将realsenseT265插上电脑后,建议在你喜欢的地方新建一个文件夹,比如我是在桌面上新建了一个文件夹,然后在其中打开终端,输入以下命令

roslaunch realsense2_camera rs_t265.launch

roslaunch imu_utils t265_imu.launch

注意过程中显示wait for imu data是正常情况,等待大约60分钟即可出结果,在你新建的文件夹内生成了BMI055_imu_param.yaml文件,该文件给出了加速度计和陀螺仪三轴的noise_density(后缀n)和random_walk(后缀w),同时计算出了平均值,后面IMU+摄像头联合标定的时候需要这些均值。

2.4 相机的标定

下载官方给的april_6x6_80x80cm_A0.pdf或者其它标定文件。打印或者在屏幕显示,测量实际的尺寸后,在你之前新建的文件夹中新建apriltags.yaml,我的文件内容如下:

target_type: 'aprilgrid' #gridtype

tagCols: 6 #number of apriltags

tagRows: 6 #number of apriltags

tagSize: 0.16 #size of apriltag, edge to edge [m]

tagSpacing: 0.3125 #ratio of space between tags to tagSize

#example: tagSize=2m, spacing=0.5m --> tagSpacing=0.25[-]

之后,在你新建的文件夹中打开终端,开启realsenseT265

roslaunch realsense2_camera rs_t265.launch

修改话题发布频率

rosrun topic_tools throttle messages /camera/fisheye1/image_raw 10.0 /fisheye1

rosrun topic_tools throttle messages /camera/fisheye2/image_raw 10.0 /fisheye2

录制文件,注意录制过程中要缓慢移动相机,使其能看到完整清晰的标定文件(可以先在录制前打开rviz,调用image的话题进行观察,判断移动的位置)

rosbag record -O cameras_calibration /fisheye1 /fisheye2

调用kalibr的算法计算各个摄像头的内参和外参

kalibr_calibrate_cameras --target ./apriltags.yaml --bag ./cameras_calibration.bag --bag-from-to 2 35 --models omni-radtan omni-radtan --topics /fisheye1 /fisheye2

因为并不一定要用整个录制视频,2和35是你想要的起始和截止时间,可以修改。

如果在过程中出现Using the default setup in the initial run leads to an error of Cameras are not connected through mutual observations, please check the dataset. Maybe adjust the approx. sync. tolerance.相机不同步的报错,可以通过修改话题发布的频率,或者在kalibr命令的末尾加上–approx-sync 0.04来解决。

最终会生成camchain-.cameras_calibration.yaml、results-cam-.cameras_calibration.txt和report-cam-.cameras_calibration.pdf。

2.5. Camera-IMU联合标定

在你之前新建的文件夹中,新建imu.yaml文件如下(根据你之前的BMI055_imu_param.yaml填写参数):

#Accelerometers

accelerometer_noise_density: 8.003e-02 #Noise density (continuous-time)

accelerometer_random_walk: 5.382e-03 #Bias random walk

#Gyroscopes

gyroscope_noise_density: 8.163e-03 #Noise density (continuous-time)

gyroscope_random_walk: 3.470e-05 #Bias random walk

rostopic: /imu #the IMU ROS topic

update_rate: 200.0 #Hz (for discretization of the values above)

修改rs_t265.launch其中的两行代码如下:

<arg name="enable_sync" default="true"/>

<arg name="unite_imu_method" default="copy"/>

进入你之前新建的文件夹,打开终端,开启T265

roslaunch realsense2_camera rs_t265.launch

修改发布频率

rosrun topic_tools throttle messages /camera/fisheye1/image_raw 20.0 /fisheye1

rosrun topic_tools throttle messages /camera/fisheye2/image_raw 20.0 /fisheye2

rosrun topic_tools throttle messages /camera/imu 200.0 /imu

录制文件

rosbag record -O imu_cameras_calibration /fisheye1 /fisheye2 /imu

调用kalibr的算法计算IMU和camera外参

kalibr_calibrate_imu_camera --target ./apriltags.yaml --cam ./camchain-.cameras_calibration.yaml --imu ./imu.yaml --bag ./imu_cameras_calibration.bag --bag-from-to 2 35 --max-iter 30 --show-extraction

最终会输出camchain-imucam-.imu_cameras_calibration.yaml、imu-.imu_cameras_calibration.yaml、results-imucam-.imu_cameras_calibration.txt、report-imucam-.imu_cameras_calibration.pdf四个文件,你可以通过pdf文件查看你标定的准确性。

至此,我们完成了相机和IMU的标定。

四、运行VINS-FUSION

先下载并编译VINS-Fusion

cd ~/catkin_ws/src

git clone https://github.com/HKUST-Aerial-Robotics/VINS-Fusion

cd ..

catkin_make

source ~/catkin_ws/devel/setup.bash

在VINS-Fusion/config文件夹中,新建文件夹名为realsense_t265,并在其中新建fisheye1.yaml,fisheye2.yaml,stereo_imu.yaml三个文档,内容如下(注意相关参数需要参考BMI055_imu_param.yaml、results-cam-.cameras_calibration.txt和results-imucam-.imu_cameras_calibration.txt自行修改):

fisheye1.yaml

%YAML:1.0

---

model_type: MEI

camera_name: camera

image_width: 848

image_height: 800

mirror_parameters:

xi: 1.75347951

distortion_parameters:

k1: 0.03509054

k2: -0.3590536

p1: 0.00250588

p2: 0.00058101

projection_parameters:

gamma1: 788.10829678

gamma2: 788.19861186

u0: 416.33019648

v0: 404.21771229

fisheye2.yaml

%YAML:1.0

---

model_type: MEI

camera_name: camera

image_width: 848

image_height: 800

mirror_parameters:

xi: 1.95209328

distortion_parameters:

k1: 0.18993538

k2: -0.8234989

p1: 0.00337246

p2: 0.00013959

projection_parameters:

gamma1: 843.77832442

gamma2: 843.19711184

u0: 419.54774026

v0: 406.36245572

stereo_imu.yaml

%YAML:1.0

#common parameters

#support: 1 imu 1 cam; 1 imu 2 cam: 2 cam;

imu: 1

num_of_cam: 2

imu_topic: "/camera/imu"

image0_topic: "/camera/fisheye1/image_raw"

image1_topic: "/camera/fisheye2/image_raw"

output_path: "~/output"

cam0_calib: "fisheye1.yaml"

cam1_calib: "fisheye2.yaml"

image_width: 848

image_height: 800

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 1 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

body_T_cam0: !!opencv-matrix #cam0 coordinate under body coordinate

rows: 4

cols: 4

dt: d

data: [-0.9999306582373404, 0.001093290620038494, 0.01172533294445286, 0.5201790241280906,

-0.0011878229478030982, -0.9999668259020238, -0.008058298336212454, 0.04796159845159734,

0.011716133905124508, -0.008071667179143842, 0.9998987850754022, -0.05434762530417168,

0., 0., 0., 1.]

body_T_cam1: !!opencv-matrix

rows: 4

cols: 4

dt: d

data: [-0.9999985320918137, 0.0011840662046389947, -0.0012384673758261621, -0.10570692194161202,

-0.001173065649638605, -0.999960187777609, -0.008845720815767944, 0.048863128512499784,

-0.0012488919887611945, -0.008844255027525165, 0.9999601089152549, -0.04892047362600982,

0., 0., 0., 1. ]

#Multiple thread support

multiple_thread: 1

#feature traker paprameters

max_cnt: 150 # max feature number in feature tracking

min_dist: 30 # min distance between two features

freq: 10 # frequence (Hz) of publish tracking result. At least 10Hz for good estimation. If set 0, the frequence will be same as raw image

F_threshold: 1.0 # ransac threshold (pixel)

show_track: 1 # publish tracking image as topic

flow_back: 1 # perform forward and backward optical flow to improve feature tracking accuracy

#optimization parameters

max_solver_time: 0.04 # max solver itration time (ms), to guarantee real time

max_num_iterations: 8 # max solver itrations, to guarantee real time

keyframe_parallax: 10.0 # keyframe selection threshold (pixel)

#imu parameters The more accurate parameters you provide, the better performance

acc_n: 8.00274e-02 # accelerometer measurement noise standard deviation. #0.2 0.04

gyr_n: 8.16326e-03 # gyroscope measurement noise standard deviation. #0.05 0.004

acc_w: 5.38231e-03 # accelerometer bias random work noise standard deviation. #0.002

gyr_w: 3.470378e-05 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

#unsynchronization parameters

estimate_td: 0 # online estimate time offset between camera and imu

td: 0.0 # initial value of time offset. unit: s. readed image clock + td = real image clock (IMU clock)

#loop closure parameters

load_previous_pose_graph: 0 # load and reuse previous pose graph; load from 'pose_graph_save_path'

pose_graph_save_path: "~/output" # save and load path

save_image: 1 # save image in pose graph for visualization prupose; you can close this function by setting 0

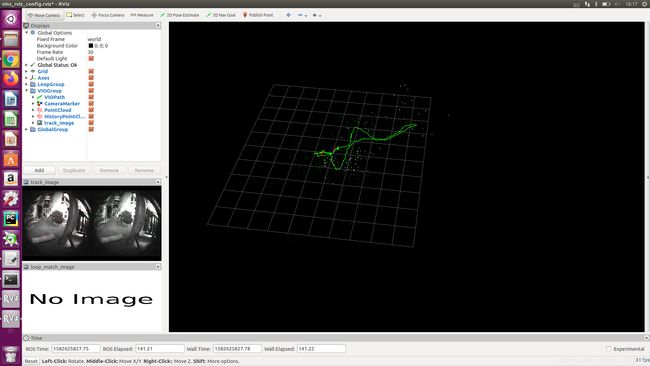

之后打开终端,运行

roslaunch realsense2_camera rs_t265.launch

rosrun vins vins_node ~/catkin_ws/src/VINS-Fusion/config/realsense_t265/stereo_imu.yaml

roslaunch vins vins_rviz.launch

参考文章:

Realsense T265标定及运行VINS–kalibr和imu_utils

如何用Realsense D435i运行VINS-Mono等VIO算法 获取IMU同步数据

使用imu_utils工具生成IMU的Allan方差标定曲线

VINS-Fusion