Summary

Code

    # Spider 1: collect item URLs from one list page of a channel.
    # Assumes requests and bs4.BeautifulSoup are imported, and that headers,
    # proxies and the url_list collection are defined elsewhere.
    def get_url_list_from(channel, page, who_sells=''):
        list_view = '{}/{}o{}'.format(channel, who_sells, page)
        wb_data = requests.get(list_view, headers=headers, proxies=proxies, timeout=6)
        if wb_data.status_code == 200:
            soup = BeautifulSoup(wb_data.text, 'lxml')
            # Only pages that still have a pager contain listings
            if soup.find('div', 'pageBox'):
                links = soup.select('dl.list-bigpic > dt > a')
                data = {
                    'link': list(map(lambda x: x.get('href'), links))
                }
                # print(data)
                use_urls = []
                for item in data['link']:
                    # Keep only links that point to ganji.com
                    if item and 'ganji.com' in item:
                        use_urls.append(item)
                        print('item' + item)
                        url_list.insert_one({'url': item})
                        get_item_info(item)
                print(use_urls)
            else:
                print('last page: ' + str(page))
        else:
            print('status_code is ' + str(wb_data.status_code))

    # Spider 2: scrape the detail page of a single item.
    # Assumes headers and the item_info collection are defined elsewhere.
    def get_item_info(url):
        wb_data = requests.get(url, headers=headers)
        if wb_data.status_code == 404:
            print('404 not found')
        else:
            try:
                soup = BeautifulSoup(wb_data.text, 'lxml')
                prices = soup.select('.f22.fc-orange.f-type')
                pub_dates = soup.select('.pr-5')
                areas = soup.select('ul.det-infor > li:nth-of-type(3) > a')
                cates = soup.select('ul.det-infor > li:nth-of-type(1) > span > a')
                # print(pub_dates, cates, sep='\n===========\n')
                data = {
                    'title': soup.title.text.strip(),
                    'price': prices[0].text.strip() if prices else 0,
                    'pub_date': pub_dates[0].text.strip().replace('\xa0', ' ') if pub_dates else '',
                    'area': list(map(lambda x: x.text.strip(), areas)),
                    'cate': list(map(lambda x: x.text.strip(), cates)),
                    'url': url
                }
                print(data)
                item_info.insert_one(data)
            except AttributeError:
                # e.g. soup.title is None when the page has no <title>
                print('shits happened!')
            except UnicodeEncodeError:
                # e.g. print(data) on a console that cannot encode the text
                print('shits happened again!')

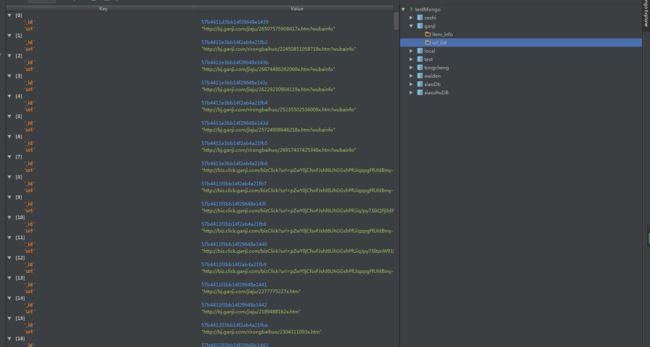

Summary

-

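1. Resuming an interrupted crawl

    # URLs collected by Spider 1
    db_urls = [item['url'] for item in url_list.find()]
    # URLs whose detail pages have already been scraped by Spider 2
    index_urls = [item['url'] for item in item_info.find()]
    x = set(db_urls)
    y = set(index_urls)
    # URLs still waiting to be scraped
    rest_of_urls = x - y
    pool.map(get_item_info, rest_of_urls)

The idea is purely logical. This is not resuming a network transfer; instead, Table-1 (url_list) and Table-2 (item_info) both store the same key, the URL.

Once Table-1 has been filled and its URLs no longer change, a crawl of Table-2 that gets interrupted can be picked up again from the set difference between the two tables: the URLs that are in Table-1 but not yet in Table-2. URLs that were already scraped are skipped, so no duplicate records are inserted.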
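The resume logic can be tried without a database. The following is a minimal sketch, assuming the two collections are replaced by plain lists of documents; `remaining_urls`, `url_list_docs` and `item_info_docs` are hypothetical names used only for this illustration.

```python
def remaining_urls(all_urls, done_urls):
    """Return the URLs that were collected but not yet scraped."""
    return set(all_urls) - set(done_urls)


# Hypothetical stand-ins for url_list.find() and item_info.find()
url_list_docs = [{'url': 'a'}, {'url': 'b'}, {'url': 'c'}]
item_info_docs = [{'url': 'a', 'title': 'first item'}]

rest_of_urls = remaining_urls(
    (doc['url'] for doc in url_list_docs),
    (doc['url'] for doc in item_info_docs),
)
print(sorted(rest_of_urls))  # ['b', 'c']
```

Running the same computation again after every interruption always yields only the unfinished URLs, which is what makes the crawl restartable.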

-

2. set

    a = ['1', '1', '2', '3', '5']
    b = ['1', '1', '2', '5']
    x = set(a)  # {'1', '2', '3', '5'}; duplicates are removed, and the order is not guaranteed
    y = set(b)
    # Note that x and y are sets, not lists; call list(x) if a list is needed.
    print(x - y)  # {'3'}; sets support subtraction, which removes the elements also found in y
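Because a set has no guaranteed order, it helps to convert it back to a sorted list whenever stable output matters. A small sketch using the same `a` and `b` as above (the names `only_in_a`, `in_both` and `as_sorted_list` are introduced here for illustration):

```python
a = ['1', '1', '2', '3', '5']
b = ['1', '1', '2', '5']

x, y = set(a), set(b)

only_in_a = x - y            # difference: elements of x that are not in y
in_both = x & y              # intersection: elements found in both
as_sorted_list = sorted(x)   # sorted() returns a list in a stable order

print(only_in_a)        # {'3'}
print(sorted(in_both))  # ['1', '2', '5']
print(as_sorted_list)   # ['1', '2', '3', '5']
```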

-

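3. Using a process pool (Pool)

    # Create a pool with one worker process per CPU core
    pool = multiprocessing.Pool(multiprocessing.cpu_count())

close(): closes the pool so that it accepts no new tasks.

terminate(): stops the worker processes without handling the unfinished tasks.

join(): blocks the main process until the worker processes exit. join() must be called after close() or terminate().

Typical usage:

    pool = multiprocessing.Pool(multiprocessing.cpu_count())
    pool.map(get_all_links_from, channelist.split())
    # pool.map(get_item_info, rest_of_urls)
    pool.close()
    pool.join()

Reference: process pools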

-

4. Headers

    # Minimal headers. The header name must be 'User-Agent', with a hyphen.
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.21 Safari/537.36',
        'Connection': 'keep-alive'
    }

    # A fuller, browser-like set of headers (58.com example).
    # info_id is the ID of the listing being requested, defined elsewhere.
    headers = {
        'User-Agent': r'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.87 Safari/537.36',
        # Note: .format() fills the {} in final_history={} with info_id
        'Cookie': r'id58=c5/ns1ct99sKkWWeFSQCAg==; city=bj; 58home=bj; ipcity=yiwu%7C%u4E49%u4E4C%7C0; als=0; myfeet_tooltip=end; bj58_id58s="NTZBZ1Mrd3JmSDdENzQ4NA=="; sessionid=021b1d13-b32e-407d-a76f-924ec040579e; bangbigtip2=1; 58tj_uuid=0ed4f4ba-f709-4c42-8972-77708fcfc553; new_session=0; new_uv=1; utm_source=; spm=; init_refer=; final_history={}; bj58_new_session=0; bj58_init_refer=""; bj58_new_uv=1'.format(str(info_id)),
        'Accept': '*/*',
        'Accept-Encoding': 'gzip, deflate, sdch',
        'Accept-Language': 'zh-CN,zh;q=0.8',
        'Cache-Control': 'max-age=0',
        'Connection': 'keep-alive',
        'Host': 'jst1.58.com',
        'Referer': r'http://bj.58.com/pingbandiannao/{}x.shtml'.format(info_id)
    }
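When headers depend on the item being requested, building them in a small function keeps the per-item parts in one place. This is only a sketch: `build_headers` is a hypothetical helper, and the ID and User-Agent values below are placeholders. The key is spelled `User-Agent`; a key such as `UserAgent` would be sent as a separate, unrelated header and would not replace the default User-Agent that requests sends.

```python
def build_headers(info_id, user_agent):
    """Build request headers for one listing page (hypothetical helper)."""
    return {
        'User-Agent': user_agent,
        'Connection': 'keep-alive',
        # The Referer points at the listing page that the request belongs to
        'Referer': 'http://bj.58.com/pingbandiannao/{}x.shtml'.format(info_id),
    }


headers = build_headers('12345', 'Mozilla/5.0')
print(headers['Referer'])  # http://bj.58.com/pingbandiannao/12345x.shtml
```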

-

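5. lambda (anonymous functions)

    list(map(lambda x: x * x, [1, 2, 3, 4, 5, 6, 7, 8, 9]))
    # [1, 4, 9, 16, 25, 36, 49, 64, 81]

    links = soup.select('dl.list-bigpic > dt > a')
    data = {
        'link': list(map(lambda x: x.get('href'), links))
    }

The same list can be written as a list comprehension:

    [link.get('href') for link in links]

The most common use of lambda is inside map, to apply one operation to every element of a list, which cuts the amount of code considerably.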
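To see that the two forms really give the same result, they can be compared on plain strings. In this sketch `cates` is a hypothetical list of raw strings standing in for the text of the scraped tags.

```python
# Hypothetical raw strings standing in for tag.text values
cates = ['  phones ', 'tablets\n', ' laptops']

with_map = list(map(lambda s: s.strip(), cates))
with_comprehension = [s.strip() for s in cates]

print(with_map)                        # ['phones', 'tablets', 'laptops']
print(with_map == with_comprehension)  # True
```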

-

6. try / except

    # Excerpt from the body of get_item_info(url)
    try:
        soup = BeautifulSoup(wb_data.text, 'lxml')
        prices = soup.select('.f22.fc-orange.f-type')
        pub_dates = soup.select('.pr-5')
        areas = soup.select('ul.det-infor > li:nth-of-type(3) > a')
        cates = soup.select('ul.det-infor > li:nth-of-type(1) > span > a')
        # print(pub_dates, cates, sep='\n===========\n')
        data = {
            'title': soup.title.text.strip(),
            'price': prices[0].text.strip() if prices else 0,
            # Keep only the part before the first space
            'pub_date': pub_dates[0].text.strip().split(' ')[0] if pub_dates else '',
            'area': list(map(lambda x: x.text.strip(), areas)),
            'cate': list(map(lambda x: x.text.strip(), cates)),
            'url': url
        }
        print(data)
        item_info.insert_one(data)
    except AttributeError:
        print('shits happened!')
    except UnicodeEncodeError:
        print('shits happened again!')

    def try_to_make(a_mess):
        try:
            print(1 / a_mess)
        except (ZeroDivisionError, TypeError):  # several exception types can be listed together
            print('ok~')

    try_to_make('0')  # 1 / '0' raises TypeError, so this prints ok~

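When crawling large amounts of data you can run into all kinds of problems, and tracking them down and fixing them is not easy. It is best to understand and fix the root cause, but for errors that are baffling or that do not affect the scraped data, you can sidestep them by catching the specific exception, as with except UnicodeEncodeError above.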
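Catching an error does not have to mean losing track of it. The sketch below records which URL failed and why, so the failures can be inspected or retried later. `scrape_all` and `fake_scrape` are hypothetical names, and `fake_scrape` only imitates the AttributeError that `soup.title.text` raises when a page has no title.

```python
def scrape_all(urls, scrape_one):
    """Call scrape_one on each URL; record failures instead of stopping."""
    failed = []
    for url in urls:
        try:
            scrape_one(url)
        except (AttributeError, UnicodeEncodeError) as exc:
            # Remember the URL and the error type, then move on
            failed.append((url, type(exc).__name__))
    return failed


def fake_scrape(url):
    # Hypothetical worker that fails for one specific URL
    if url.endswith('/broken'):
        raise AttributeError("'NoneType' object has no attribute 'text'")


failed = scrape_all(
    ['http://example.com/ok', 'http://example.com/broken'],
    fake_scrape,
)
print(failed)  # [('http://example.com/broken', 'AttributeError')]
```

Only the two listed exception types are caught here; any other exception still stops the run, which keeps unexpected bugs visible.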

-

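7. Punctuation (string.punctuation)

    from string import punctuation

    # i is one scraped record; drop the entries of i['area'] that are punctuation marks
    if i['area']:
        area = [part for part in i['area'] if part not in punctuation]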
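A self-contained version of the same filter, as a sketch with a hypothetical `record`. Note that `string.punctuation` contains only ASCII punctuation, and that `part not in punctuation` is a substring test on that string, so it is meant for single-character entries.

```python
from string import punctuation

# Hypothetical scraped record whose area list contains stray separators
record = {'area': ['Beijing', '-', 'Chaoyang', '/']}

area = [part for part in record['area'] if part not in punctuation]
print(area)  # ['Beijing', 'Chaoyang']
```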