Kubernetes Resource Monitoring (Metrics-Server Deployment + Dashboard Deployment)

Table of Contents

- 1. Introduction to Metrics-Server

- 2. Metrics-Server Deployment

- 3. Dashboard Deployment

1. Introduction to Metrics-Server

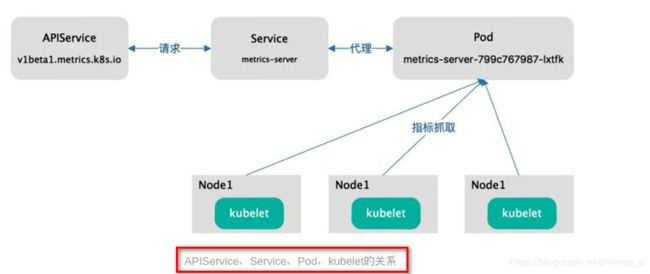

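Metrics-Server is the aggregator of the cluster's core monitoring data; it replaces the earlier Heapster.

• Container metrics come mainly from the cAdvisor service built into the kubelet. With Metrics-Server in place, users can access this monitoring data through the standard Kubernetes API.

• The Metrics API only serves current metric values; it does not store historical data.

• The Metrics API URI is /apis/metrics.k8s.io/, and the API is maintained in k8s.io/metrics.

• metrics-server must be deployed for this API to be available; metrics-server obtains its data by calling the kubelet Summary API.

Examples: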

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes/

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/namespaces/
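The example URLs above can be assembled per node or per Pod. The following is a minimal sketch, assuming `kubectl proxy` is running with its default listen address of 127.0.0.1:8001; the helper names `node_metrics_url` and `pod_metrics_url` are hypothetical and not part of any tool.

```shell
# Base URL exposed by `kubectl proxy` (assumed to be listening on 127.0.0.1:8001).
METRICS_BASE="http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1"

# Hypothetical helper: URL for one node's metrics. $1 = node name.
node_metrics_url() {
  printf '%s/nodes/%s\n' "$METRICS_BASE" "$1"
}

# Hypothetical helper: URL for one Pod's metrics. $1 = namespace, $2 = Pod name.
pod_metrics_url() {
  printf '%s/namespaces/%s/pods/%s\n' "$METRICS_BASE" "$1" "$2"
}
```

With the proxy running in another terminal, `curl -s "$(node_metrics_url server1)"` would request the current metrics for node server1.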

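Metrics Server is not part of kube-apiserver. It is deployed independently and, through the Aggregator plugin mechanism, is exposed to clients together with kube-apiserver as a single API.

• kube-aggregator is essentially a proxy server that selects the specific API backend based on the URL.

Metrics-server belongs to Core metrics: it provides the metrics.k8s.io API and reports only the CPU and memory usage of Nodes and Pods. Other Custom Metrics are handled by components such as Prometheus.

2. Metrics-Server Deployment

Official reference: https://github.com/kubernetes-incubator/metrics-server

1. Install Metrics-Server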

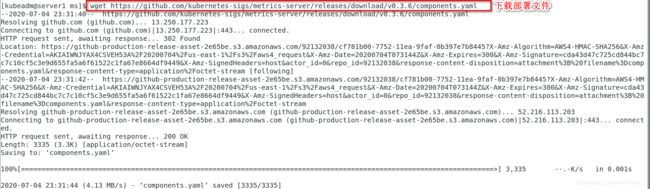

[kubeadm@server1 ms]$ wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

--2020-07-04 23:31:40-- https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Resolving github.com (github.com)... 13.250.177.223

Connecting to github.com (github.com)|13.250.177.223|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github-production-release-asset-2e65be.s3.amazonaws.com/92132038/cf781b00-7752-11ea-9faf-0b397e7b8445?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20200704%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20200704T073144Z&X-Amz-Expires=300&X-Amz-Signature=cda43d47c725cd844bc7c7c10cf5c3e9d655fa5a6f61522c1fa67e8664df9449&X-Amz-SignedHeaders=host&actor_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream [following]

--2020-07-04 23:31:42-- https://github-production-release-asset-2e65be.s3.amazonaws.com/92132038/cf781b00-7752-11ea-9faf-0b397e7b8445?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20200704%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20200704T073144Z&X-Amz-Expires=300&X-Amz-Signature=cda43d47c725cd844bc7c7c10cf5c3e9d655fa5a6f61522c1fa67e8664df9449&X-Amz-SignedHeaders=host&actor_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream

Resolving github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)... 52.216.113.203

Connecting to github-production-release-asset-2e65be.s3.amazonaws.com (github-production-release-asset-2e65be.s3.amazonaws.com)|52.216.113.203|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3335 (3.3K) [application/octet-stream]

Saving to: ‘components.yaml’

100%[====================================================================================================================>] 3,335 --.-K/s in 0.001s

2020-07-04 23:31:44 (4.13 MB/s) - ‘components.yaml’ saved [3335/3335]

[kubeadm@server1 ms]$ ls

components.yaml

[kubeadm@server1 ms]$ vim components.yaml

[kubeadm@server1 ms]$ kubectl apply -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[kubeadm@server1 ms]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-698fcc7d7c-2mm89 1/1 Running 1 16h

coredns-698fcc7d7c-gsbj8 1/1 Running 1 16h

etcd-server1 1/1 Running 117 16h

kube-apiserver-server1 1/1 Running 118 16h

kube-controller-manager-server1 1/1 Running 16 16h

kube-flannel-ds-amd64-cbq9v 1/1 Running 1 16h

kube-flannel-ds-amd64-w9wqb 1/1 Running 1 16h

kube-flannel-ds-amd64-zw255 1/1 Running 1 16h

kube-proxy-2xln8 1/1 Running 1 16h

kube-proxy-h2kwf 1/1 Running 1 16h

kube-proxy-t2krj 1/1 Running 1 16h

kube-scheduler-server1 1/1 Running 16 16h

metrics-server-7cdfcc6666-mc2hd 1/1 Running 0 24s

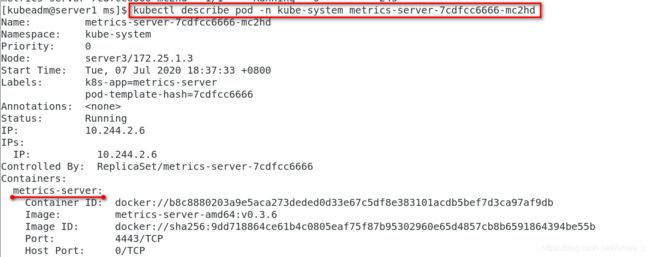

[kubeadm@server1 ms]$ kubectl describe pod -n kube-system metrics-server-7cdfcc6666-mc2hd

Name: metrics-server-7cdfcc6666-mc2hd

Namespace: kube-system

Priority: 0

Node: server3/172.25.1.3

Start Time: Tue, 07 Jul 2020 18:37:33 +0800

Labels: k8s-app=metrics-server

pod-template-hash=7cdfcc6666

Annotations: <none>

Status: Running

IP: 10.244.2.6

IPs:

IP: 10.244.2.6

Controlled By: ReplicaSet/metrics-server-7cdfcc6666

Containers:

metrics-server:

Container ID: docker://b8c8880203a9e5aca273deded0d33e67c5df8e383101acdb5bef7d3ca97af9db

Image: metrics-server-amd64:v0.3.6

Image ID: docker://sha256:9dd718864ce61b4c0805eaf75f87b95302960e65d4857cb8b6591864394be55b

Port: 4443/TCP

Host Port: 0/TCP

Args:

--cert-dir=/tmp

--secure-port=4443

State: Running

Started: Tue, 07 Jul 2020 18:37:35 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/tmp from tmp-dir (rw)

/var/run/secrets/kubernetes.io/serviceaccount from metrics-server-token-cw44x (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

tmp-dir:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

SizeLimit: <unset>

metrics-server-token-cw44x:

Type: Secret (a volume populated by a Secret)

SecretName: metrics-server-token-cw44x

Optional: false

QoS Class: BestEffort

Node-Selectors: kubernetes.io/arch=amd64

kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned kube-system/metrics-server-7cdfcc6666-mc2hd to server3

Normal Pulled 5m27s kubelet, server3 Container image "metrics-server-amd64:v0.3.6" already present on machine

Normal Created 5m27s kubelet, server3 Created container metrics-server

Normal Started 5m26s kubelet, server3 Started container metrics-server

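2. Resolve errors in the Metrics-server Pod logs

After deployment, check the Metrics-server Pod logs:

kubectl logs -n kube-system metrics-server-7cdfcc6666-mc2hd

Error 1: dial tcp: lookup server2 on 10.96.0.10:53: no such host

This happens because there is no internal DNS server, so metrics-server cannot resolve the node names. You can edit the CoreDNS ConfigMap directly and add each node's hostname to a hosts block, so that every Pod can resolve the node names through CoreDNS.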
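On a cluster with more than a few nodes, the "IP hostname" pairs for the hosts block can be derived from the node list instead of being typed by hand. This is a minimal sketch, assuming the column layout of `kubectl get nodes -o wide` (NAME first, INTERNAL-IP sixth); the helper name `node_hosts_entries` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get nodes -o wide --no-headers` output on
# stdin and print one "IP hostname" line per node.
# Column 6 is INTERNAL-IP and column 1 is NAME.
node_hosts_entries() {
  awk '{ print $6, $1 }'
}

# Usage against a live cluster (not executed here):
#   kubectl get nodes -o wide --no-headers | node_hosts_entries
```

The printed lines still have to be pasted into the hosts block by hand; the sketch does not modify the ConfigMap.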

Solution:

kubectl edit configmap coredns -n kube-system

apiVersion: v1

data:

Corefile: |

...

ready

hosts {

172.25.1.1 server1

172.25.1.2 server2

172.25.1.3 server3

fallthrough

}

Error 2: x509: certificate signed by unknown authority

**Metrics Server supports the --kubelet-insecure-tls flag, which skips this check; however, the official documentation states clearly that this approach is not recommended for production use.**

Solution:

Enable TLS Bootstrap certificate issuance (on the master and on every node)

vim /var/lib/kubelet/config.yaml

serverTLSBootstrap: true

systemctl restart kubelet

kubectl get csr

kubectl certificate approve csr-n9pvr

certificatesigningrequest.certificates.k8s.io/csr-n9pvr approved

[kubeadm@server1 ms]$ sudo vim /var/lib/kubelet/config.yaml

[kubeadm@server1 ms]$ sudo tail -1 /var/lib/kubelet/config.yaml

serverTLSBootstrap: true

[kubeadm@server1 ms]$ systemctl restart kubelet

[root@server2 ~]# vim /var/lib/kubelet/config.yaml

[root@server2 ~]# tail -1 /var/lib/kubelet/config.yaml

serverTLSBootstrap: true

[root@server2 ~]# systemctl restart kubelet

[root@server3 ~]# vim /var/lib/kubelet/config.yaml

[root@server3 ~]# tail -1 /var/lib/kubelet/config.yaml

serverTLSBootstrap: true

[root@server3 ~]# systemctl restart kubelet

[kubeadm@server1 ms]$ kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-gmk7m 66s kubernetes.io/kubelet-serving system:node:server2 Pending

csr-n4t5j 30s kubernetes.io/kubelet-serving system:node:server3 Pending

csr-pglj4 2m10s kubernetes.io/kubelet-serving system:node:server1 Pending

[kubeadm@server1 ms]$ kubectl certificate approve csr-pglj4

certificatesigningrequest.certificates.k8s.io/csr-pglj4 approved

[kubeadm@server1 ms]$ kubectl certificate approve csr-gmk7m

certificatesigningrequest.certificates.k8s.io/csr-gmk7m approved

[kubeadm@server1 ms]$ kubectl certificate approve csr-n4t5j

certificatesigningrequest.certificates.k8s.io/csr-n4t5j approved

[kubeadm@server1 ms]$ kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-gmk7m 2m5s kubernetes.io/kubelet-serving system:node:server2 Approved,Issued

csr-n4t5j 89s kubernetes.io/kubelet-serving system:node:server3 Approved,Issued

csr-pglj4 3m9s kubernetes.io/kubelet-serving system:node:server1 Approved,Issued
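Approving each request by name, as in the transcript above, gets tedious with many nodes. The following is a minimal sketch of filtering the `kubectl get csr` listing for kubelet-serving requests that are still Pending, assuming the column layout shown above (SIGNERNAME third, CONDITION last); the helper name `pending_serving_csrs` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of kubelet-serving requests whose condition is still Pending.
pending_serving_csrs() {
  awk 'NR > 1 && $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}

# Usage against a live cluster (not executed here), approving one request per call:
#   kubectl get csr | pending_serving_csrs | xargs -n 1 kubectl certificate approve
```

Before piping the names into `kubectl certificate approve`, check that every listed requestor is one of your own nodes, since approval issues a serving certificate for it.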

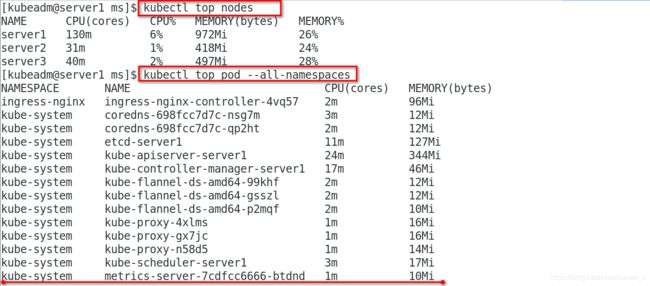

[kubeadm@server1 ms]$ kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

server1 130m 6% 972Mi 26%

server2 31m 1% 418Mi 24%

server3 40m 2% 497Mi 28%

[kubeadm@server1 ms]$ kubectl top pod --all-namespaces

NAMESPACE NAME CPU(cores) MEMORY(bytes)

ingress-nginx ingress-nginx-controller-4vq57 2m 96Mi

kube-system coredns-698fcc7d7c-nsg7m 3m 12Mi

kube-system coredns-698fcc7d7c-qp2ht 2m 12Mi

kube-system etcd-server1 11m 127Mi

kube-system kube-apiserver-server1 24m 344Mi

kube-system kube-controller-manager-server1 17m 46Mi

kube-system kube-flannel-ds-amd64-99khf 2m 12Mi

kube-system kube-flannel-ds-amd64-gsszl 2m 12Mi

kube-system kube-flannel-ds-amd64-p2mqf 2m 10Mi

kube-system kube-proxy-4xlms 1m 16Mi

kube-system kube-proxy-gx7jc 1m 16Mi

kube-system kube-proxy-n58d5 1m 14Mi

kube-system kube-scheduler-server1 3m 17Mi

kube-system metrics-server-7cdfcc6666-btdnd 1m 10Mi
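Once `kubectl top` works, its output can be filtered with ordinary text tools. As a minimal sketch, assuming the `kubectl top nodes` column layout shown above (MEMORY% in the fifth column), the hypothetical helper below prints the nodes whose memory usage exceeds a threshold.

```shell
# Hypothetical helper: read `kubectl top nodes` output on stdin and print the
# names of nodes whose MEMORY% (column 5) is above the percentage given as $1.
nodes_over_memory() {
  awk -v limit="$1" 'NR > 1 {
    pct = $5
    sub(/%/, "", pct)              # strip the trailing percent sign
    if (pct + 0 > limit + 0) print $1
  }'
}

# Usage against a live cluster (not executed here):
#   kubectl top nodes | nodes_over_memory 25
```

Because the Metrics API keeps no history, a check like this only reflects usage at the moment the command runs.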


Error 3: Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

If metrics-server starts normally and its logs show no errors, the cause is most likely a network problem. Change the metrics-server Pod's network mode: hostNetwork: true
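One way to apply this setting without opening an editor is a merge patch on the Deployment's Pod template. This is a minimal sketch, assuming the Deployment is named metrics-server in the kube-system namespace as created earlier; the helper name `host_network_patch` is hypothetical.

```shell
# Hypothetical helper: print a merge patch that sets hostNetwork on the
# Deployment's Pod template.
host_network_patch() {
  printf '%s\n' '{"spec":{"template":{"spec":{"hostNetwork":true}}}}'
}

# Usage against a live cluster (not executed here):
#   kubectl -n kube-system patch deployment metrics-server \
#     --type merge -p "$(host_network_patch)"
```

Patching the Pod template triggers a rollout, so the metrics-server Pod is recreated with the new network mode.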

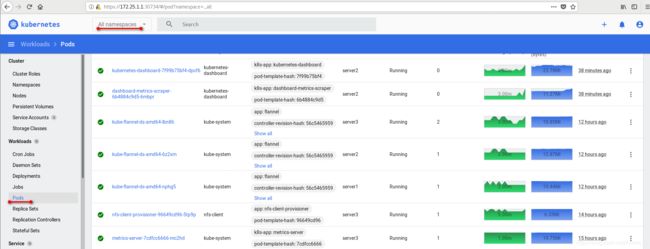

3. Dashboard Deployment

Official reference: https://github.com/kubernetes/dashboard

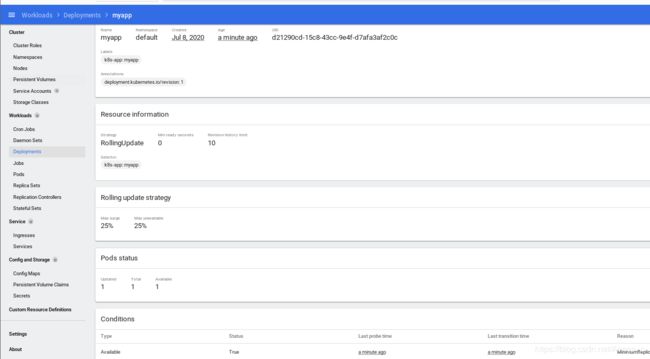

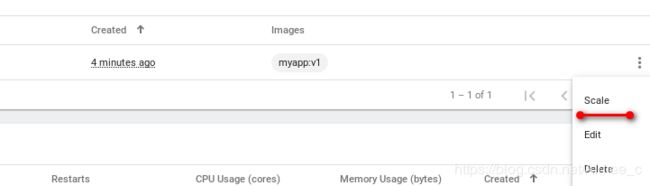

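Dashboard provides a visual web interface for viewing information about the current cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, perform troubleshooting tasks, and manage Kubernetes resources.

1. Download the deployment manifest: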

[kubeadm@server1 ~]$ mkdir dash

[kubeadm@server1 ~]$ cd dash/

[kubeadm@server1 dash]$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

--2020-07-05 00:47:08-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7552 (7.4K) [text/plain]

Saving to: ‘recommended.yaml’

100%[====================================================================================================================>] 7,552 14.2KB/s in 0.5s

2020-07-05 00:47:10 (14.2 KB/s) - ‘recommended.yaml’ saved [7552/7552]

[kubeadm@server1 dash]$ ls

recommended.yaml

2. Apply the deployment manifest:

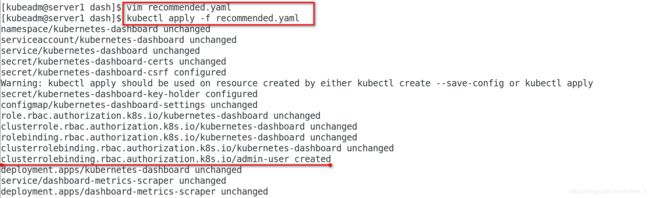

[kubeadm@server1 dash]$ vim recommended.yaml

[kubeadm@server1 dash]$ kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[kubeadm@server1 dash]$ kubectl get namespaces

NAME STATUS AGE

default Active 15d

ingress-nginx Active 7d5h

kube-node-lease Active 15d

kube-public Active 15d

kube-system Active 15d

kubernetes-dashboard Active 12s

[kubeadm@server1 dash]$ kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6b4884c9d5-rxfg5 1/1 Running 0 50s

kubernetes-dashboard-7f99b75bf4-xq76v 1/1 Running 0 50s

[kubeadm@server1 dash]$ kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.103.120.157 <none> 8000/TCP 71s

kubernetes-dashboard ClusterIP 10.102.117.32 <none> 443/TCP 71s

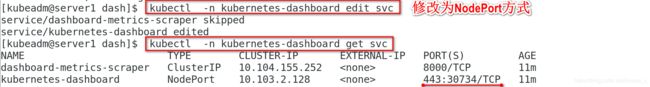

3. Change the Service type to NodePort so that it can be reached from outside the cluster

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

spec:

clusterIP: 10.102.117.32

ports:

- port: 443

protocol: TCP

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

sessionAffinity: None

type: NodePort
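After the type is changed, Kubernetes allocates a node port, and the PORT(S) column of `kubectl get svc` shows it after the colon. The following is a minimal sketch for extracting that number; the helper name `node_port_of` is hypothetical, and the value 31234 in the comment is only an illustration, not a port from this cluster.

```shell
# Hypothetical helper: given a PORT(S) value such as "443:31234/TCP" on stdin,
# print the node port, which is the number after the colon.
node_port_of() {
  sed -E 's#^[0-9]+:([0-9]+)/.*$#\1#'
}

# Usage against a live cluster (not executed here); PORT(S) is the fifth column:
#   kubectl -n kubernetes-dashboard get svc kubernetes-dashboard --no-headers \
#     | awk '{ print $5 }' | node_port_of
```

The dashboard should then be reachable over HTTPS at any node's IP address on that port.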

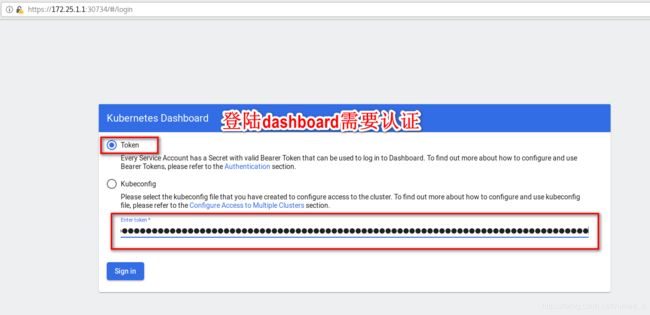

4. Get the dashboard Pod's token

Logging in to the dashboard requires authentication, so you need to get the dashboard Pod's token:

[kubeadm@server1 dash]$ kubectl get namespace

NAME STATUS AGE

default Active 31h

kube-node-lease Active 31h

kube-public Active 31h

kube-system Active 31h

kubernetes-dashboard Active 15m

nfs-client Active 14h

[kubeadm@server1 dash]$ kubectl -n kubernetes-dashboard get secrets

NAME TYPE DATA AGE

default-token-krkcm kubernetes.io/service-account-token 3 15m

kubernetes-dashboard-certs Opaque 0 15m

kubernetes-dashboard-csrf Opaque 1 15m

kubernetes-dashboard-key-holder Opaque 2 15m

kubernetes-dashboard-token-6cnlx kubernetes.io/service-account-token 3 15m

[kubeadm@server1 dash]$ kubectl -n kubernetes-dashboard describe secrets kubernetes-dashboard-token-6cnlx

Name: kubernetes-dashboard-token-6cnlx

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 45f05e11-017d-4508-ba65-d3c193bf3cf7

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Im9TRzVPSHgxelNiU1dkWHJIVVJqeHRxRTRQNzBPUVhiNVFZUnMtbVhEcHcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi02Y25seCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ1ZjA1ZTExLTAxN2QtNDUwOC1iYTY1LWQzYzE5M2JmM2NmNyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.bwCHyGKap1BEIBfBJPq20JahrSotwMTpiacdDDTx2yIeJ-77jNIANj3TffAeE86fsAWNf5oGe8FqQd58Fw48JW-uY7O9oYuC9I63K6F9NK0de1SsWxY_vkIiX5BUuL-A_WryIvf7TKyqJ4a56ki-J83P-fIYNeqnSAlkLmeK03UHHdxfpay8T3bs-nzZxNp8p29r-N3a8_F62G6fL-wBCe7vnu90E3lQ3eW_v07Inn7Bf4NS5BKT3oGRYZ0M6z7G2Daz8YRSVjXyHVaEDt0mLPXE6CX3zQEYZ6ME3H_SMkQi-obWWaYCbbToXVyHIC3iLSnGBXNG0MLx9PPxUmKP9g
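`kubectl describe` prints the token already decoded. If you read the Secret with `kubectl get` instead, the token field is base64-encoded and has to be decoded first. This is a minimal sketch, assuming a `base64` utility that accepts `--decode`; the helper name `decode_secret_field` is hypothetical.

```shell
# Hypothetical helper: decode a base64-encoded Secret field read from stdin.
decode_secret_field() {
  base64 --decode
}

# Usage against a live cluster (not executed here), with the Secret name taken
# from the listing above:
#   kubectl -n kubernetes-dashboard get secret kubernetes-dashboard-token-6cnlx \
#     -o jsonpath='{.data.token}' | decode_secret_field
```

The decoded value is the string to paste into the Token field on the dashboard login page.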

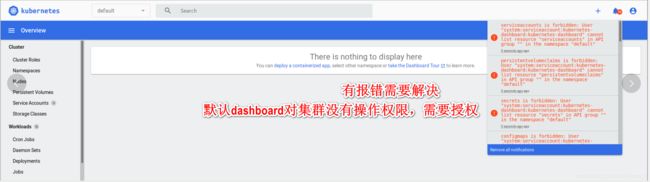

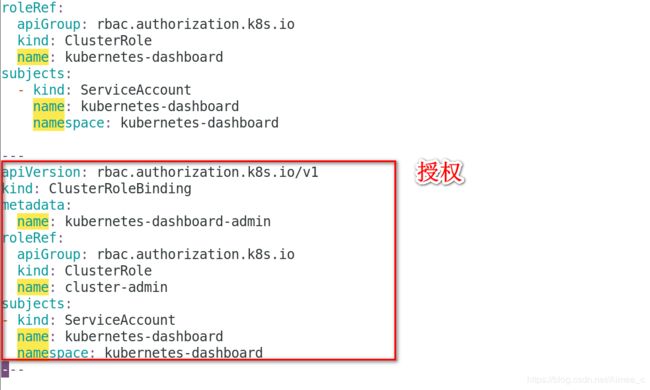

5. Authorize the dashboard so that it has permission to operate on the cluster

[kubeadm@server1 dash]$ vim recommended.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard-admin

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

[kubeadm@server1 dash]$ kubectl apply -f recommended.yaml

After authorization, the dashboard displays cluster resources normally with no errors.
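The binding can also be checked from the command line by impersonating the ServiceAccount. The following is a minimal sketch, assuming your own user is allowed to impersonate; the helper name `service_account_user` is hypothetical, and it only builds the user name that Kubernetes assigns to a ServiceAccount.

```shell
# Hypothetical helper: build the user name Kubernetes uses for a ServiceAccount.
# $1 = namespace, $2 = ServiceAccount name.
service_account_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Usage against a live cluster (not executed here):
#   kubectl auth can-i list nodes \
#     --as "$(service_account_user kubernetes-dashboard kubernetes-dashboard)"
```

Keep in mind that cluster-admin grants the dashboard full control of the cluster, so anyone holding the token above gains the same rights.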