Distributed Consensus Algorithms and Their Implementations in Open-Source Distributed Systems

Paxos:

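Paxos is one of the most widely used distributed consensus algorithms, and many open-source distributed frameworks build on it (ZooKeeper's core protocol, ZAB, is often described as a Paxos-style protocol adapted for primary-backup replication). Its core idea is majority rule: a value is chosen only once more than half of the acceptors have accepted it.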

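Paxos has three roles: the Proposer, which generates proposals (a proposal consists of a proposal number, normally globally unique and increasing, plus the actual value); the Acceptor, which responds to proposals and, together with the other acceptors, decides the final value; and the Learner, which learns the value the acceptors have chosen.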

Choosing a value takes two phases, prepare and accept; in both, the Proposer communicates with the Acceptors.

Each node (replica) typically plays all three roles at once: Proposer, Acceptor and Learner.

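Phase 1: the Proposer generates a proposal number N (usually an increasing number) and sends a prepare request carrying N to more than half of the Acceptors. An Acceptor handles the request in one of two ways: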

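- If the Acceptor has not responded to any prepare request yet, this is the first proposal it sees: it records N, promises the Proposer not to respond to any later prepare request numbered N or lower and not to accept any proposal numbered below N, and replies with a null value (nothing accepted yet).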

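- If the Acceptor has already made a promise, it compares N with the highest number it has promised so far. If N is larger, it makes the new promise, records N, and replies with the proposal it has already accepted, if any (its number and value); otherwise it rejects the request or simply does not respond.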
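The acceptor's rules fit in a few lines of state. The following is a minimal single-decree sketch, assuming in-memory state only (a real acceptor must persist its promise and accepted proposal before replying); `PaxosAcceptor` and its members are illustrative names, not from any library. It also includes the phase-2 accept check, because that check relies on the same promise.

```java
/** Illustrative single-decree Paxos acceptor; state is kept in memory only. */
class PaxosAcceptor {

    /** Reply to prepare(N): whether N was promised, plus the proposal already accepted (if any). */
    static final class Promise {
        final boolean ok;
        final long acceptedNumber;  // -1 when nothing has been accepted yet
        final String acceptedValue; // null when nothing has been accepted yet

        Promise(boolean ok, long acceptedNumber, String acceptedValue) {
            this.ok = ok;
            this.acceptedNumber = acceptedNumber;
            this.acceptedValue = acceptedValue;
        }
    }

    private long promisedNumber = -1;    // highest proposal number promised so far
    private long acceptedNumber = -1;    // number of the last accepted proposal
    private String acceptedValue = null; // value of the last accepted proposal

    /** Phase 1: promise N only if it is larger than every number promised before. */
    synchronized Promise onPrepare(long n) {
        if (n <= promisedNumber) {
            return new Promise(false, -1, null); // stale number: reject
        }
        promisedNumber = n; // from now on: ignore prepare <= n, refuse accept < n
        return new Promise(true, acceptedNumber, acceptedValue);
    }

    /** Phase 2: accept (N, value) unless a larger number has been promised meanwhile. */
    synchronized boolean onAccept(long n, String value) {
        if (n < promisedNumber) {
            return false;
        }
        promisedNumber = n;
        acceptedNumber = n;
        acceptedValue = value;
        return true;
    }
}
```

The second `onPrepare` after an accept returns the accepted proposal, which is exactly the "return the old value" step described above.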

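Phase 2: the Proposer collects the Acceptors' replies. If more than half of the Acceptors responded, it moves on to phase 2; otherwise it increases its proposal number and runs phase 1 again. If every reply carried a null value, the Proposer may propose any value; otherwise it must use the value of the highest-numbered proposal among the replies. The Proposer then sends an accept request (N, value) to more than half of the Acceptors (note: these need not be the same Acceptors that answered in phase 1). An Acceptor accepts the proposal unless it has meanwhile promised a number greater than N to another Proposer, in which case it ignores the request or replies with an error. If the Proposer receives acknowledgements from more than half of the Acceptors, the value is chosen; otherwise it starts over from phase 1.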
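On the proposer side, the subtle part is picking the phase-2 value. This sketch assumes the proposer has already collected the phase-1 promises into a list; `PaxosProposerRule` and its nested `Promise` type are hypothetical names used only for illustration.

```java
import java.util.List;

/** Illustrative phase-2 value selection for a Paxos proposer. */
class PaxosProposerRule {

    /** One successful phase-1 reply. */
    static final class Promise {
        final long acceptedNumber;  // -1 if the acceptor has accepted nothing
        final String acceptedValue; // null if the acceptor has accepted nothing

        Promise(long acceptedNumber, String acceptedValue) {
            this.acceptedNumber = acceptedNumber;
            this.acceptedValue = acceptedValue;
        }
    }

    /**
     * Returns the value to send in the accept request, or null if fewer than a majority
     * promised (the proposer must then raise its number and retry phase 1).
     */
    static String chooseValue(List<Promise> promises, int clusterSize, String ownValue) {
        if (promises.size() <= clusterSize / 2) {
            return null; // no majority
        }
        long highest = -1;
        String value = ownValue; // free choice only if every promise carried null
        for (Promise p : promises) {
            if (p.acceptedValue != null && p.acceptedNumber > highest) {
                highest = p.acceptedNumber; // must adopt the highest-numbered accepted value
                value = p.acceptedValue;
            }
        }
        return value;
    }
}
```

Forcing the proposer to adopt an already-accepted value is what keeps a value, once chosen, from ever being replaced.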

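Once both phases succeed, a value is chosen and can never change. Progress is not guaranteed, however: two Proposers that keep preempting each other with higher numbers can livelock, which is why practical systems usually let a single distinguished proposer drive the protocol. The chosen value can then be delivered to the Learners in various ways, and each Learner acts on it as needed.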

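Compared with two-phase and three-phase commit, the key advantage of Paxos is that it keeps the replicas in agreement even when a minority of nodes crash or messages are delayed or lost, without depending on a single coordinator.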

Raft election mechanism:

Although Paxos guarantees strong consistency, it is hard to understand and hard to implement in practice, and Raft was designed to address exactly that.

Raft elects a single Leader, which manages log replication to keep all replicas consistent.

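Raft defines three roles, and any node can hold any of them:

Leader: handles client requests, replicates log entries to the other nodes, and tells them when it is safe to apply those entries.

Candidate: a transitional role used to elect a Leader; any node in the cluster can become a Candidate.

Follower: responds to requests from the Leader (log replication) and from Candidates (vote requests).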

The concept of a term:

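- Every server keeps a current term that only ever increases and is persisted to disk. A higher term means the server has seen a more recent election; on its own it does not mean the server's data is newer.

- Each term begins with an election in which one or more candidates try to become leader. If a candidate wins, it serves as leader for the rest of that term. Sometimes an election produces no leader; that term then ends without one, and a new term, with a new election, starts shortly afterwards.

- Each leader has its own term: Raft divides time into terms of arbitrary length, numbered with consecutive integers.

- Servers include their current term in every message. A follower compares a Candidate's term with its own to decide whether to vote for it, and a Candidate or leader that discovers its own term is out of date immediately reverts to follower.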
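These term rules reduce to one check that every server runs on each message it receives. A minimal sketch, assuming in-memory fields (real Raft persists `currentTerm` and `votedFor`); the class and field names are illustrative.

```java
/** Illustrative Raft term bookkeeping shared by every server role. */
class RaftTermState {
    enum Role { FOLLOWER, CANDIDATE, LEADER }

    long currentTerm = 0;
    String votedFor = null;
    Role role = Role.FOLLOWER;

    /**
     * Run on every request or response that carries the sender's term.
     * Returns false if the message comes from an older term and should be rejected.
     */
    boolean observeTerm(long remoteTerm) {
        if (remoteTerm < currentTerm) {
            return false;
        }
        if (remoteTerm > currentTerm) {
            currentTerm = remoteTerm; // adopt the newer term
            votedFor = null;          // no vote cast yet in the new term
            role = Role.FOLLOWER;     // an outdated candidate or leader steps down
        }
        return true;
    }
}
```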

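In Raft any server can become leader, but we want the most suitable one, and the selection criterion is whether the server's data is up to date. The more up-to-date the new leader's log, the less time it spends resynchronizing with the followers and the stronger the consistency guarantee. How up to date a log is gets judged by the term of its last entry first and then by its log index (both increase monotonically).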
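A sketch of that comparison, the "at least as up-to-date" check from the Raft paper's election restriction. Note that it compares the term of each log's last entry, not the servers' current terms; the class and parameter names are illustrative.

```java
/** Illustrative version of Raft's "at least as up-to-date" log comparison. */
final class LogFreshness {
    private LogFreshness() {
    }

    static boolean candidateIsUpToDate(long candLastTerm, long candLastIndex,
                                       long myLastTerm, long myLastIndex) {
        if (candLastTerm != myLastTerm) {
            return candLastTerm > myLastTerm; // the later last-entry term wins
        }
        return candLastIndex >= myLastIndex;  // same last term: the longer (or equal) log wins
    }
}
```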

The overall Raft process:

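- Raft uses a heartbeat mechanism to trigger leader election. When the servers start, every node is a follower. If a follower receives no heartbeat from a leader within its election timeout (typically chosen at random between 150 ms and 300 ms), it becomes a Candidate, increments its current term (a newly joined node starts at term 0), votes for itself, and broadcasts vote requests to the other servers.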
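The timeout-driven transition from follower to candidate can be sketched as follows. It assumes a caller that invokes `tick` periodically with the current time in milliseconds and calls `onHeartbeat` whenever a leader's heartbeat arrives; the 150-300 ms range is the one quoted above, and all names are illustrative.

```java
import java.util.concurrent.ThreadLocalRandom;

/** Illustrative Raft election timer driven by an external clock. */
class ElectionTimer {
    enum Role { FOLLOWER, CANDIDATE, LEADER }

    static final long MIN_TIMEOUT_MS = 150; // range quoted in the text
    static final long MAX_TIMEOUT_MS = 300;

    final String selfId;
    long currentTerm = 0; // a newly joined node starts at term 0
    String votedFor = null;
    Role role = Role.FOLLOWER;
    private long deadlineMs;

    ElectionTimer(String selfId, long nowMs) {
        this.selfId = selfId;
        resetTimeout(nowMs);
    }

    /** Draw a fresh random timeout so that nodes rarely expire at the same moment. */
    void resetTimeout(long nowMs) {
        deadlineMs = nowMs + ThreadLocalRandom.current().nextLong(MIN_TIMEOUT_MS, MAX_TIMEOUT_MS + 1);
    }

    /** A heartbeat from the current leader postpones the next election. */
    void onHeartbeat(long nowMs) {
        resetTimeout(nowMs);
    }

    /** Called periodically; returns true if this node has just started an election. */
    boolean tick(long nowMs) {
        if (role == Role.LEADER || nowMs < deadlineMs) {
            return false;
        }
        currentTerm++;       // new election, new term
        votedFor = selfId;   // vote for itself
        role = Role.CANDIDATE;
        resetTimeout(nowMs); // if this round fails, the next starts after a new random timeout
        return true;         // the caller would now broadcast vote requests to all peers
    }
}
```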

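- Every follower can vote. When a follower receives a vote request, it compares the Candidate's term with its own. If its own term is greater, it refuses. If the Candidate's term is greater, the follower first updates its own term to match. Within a given term each server votes for at most one candidate, on a first-come, first-served basis, and only if the candidate's log is at least as up to date as its own. If the follower later receives a request with an even higher term, it updates its term again and can vote again in that new term.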
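A sketch of these vote-granting rules, assuming vote requests are handled one at a time; `VoteHandler` and its fields are hypothetical, and the log check uses the last-entry-term-then-index comparison.

```java
/** Illustrative Raft vote handling on the receiving server. */
class VoteHandler {
    long currentTerm;
    String votedFor = null;  // candidate voted for in currentTerm, null if none
    final long lastLogTerm;  // term of this server's last log entry
    final long lastLogIndex; // index of this server's last log entry

    VoteHandler(long currentTerm, long lastLogTerm, long lastLogIndex) {
        this.currentTerm = currentTerm;
        this.lastLogTerm = lastLogTerm;
        this.lastLogIndex = lastLogIndex;
    }

    /** Returns true if the vote is granted to candId. */
    boolean onRequestVote(long candTerm, String candId, long candLastLogTerm, long candLastLogIndex) {
        if (candTerm < currentTerm) {
            return false; // candidate is behind: refuse
        }
        if (candTerm > currentTerm) {
            currentTerm = candTerm; // adopt the newer term
            votedFor = null;        // a new term comes with a fresh vote
        }
        boolean logUpToDate = candLastLogTerm > lastLogTerm
                || (candLastLogTerm == lastLogTerm && candLastLogIndex >= lastLogIndex);
        if (logUpToDate && (votedFor == null || votedFor.equals(candId))) {
            votedFor = candId; // first come, first served within one term
            return true;
        }
        return false;
    }
}
```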

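- After a while, a Candidate ends up in one of these situations: (1) it receives votes from a majority of the servers and becomes Leader, then immediately sends heartbeats to all followers to establish its authority and stop further elections; (2) it does not get a majority, so it increments its term and starts another election; (3) it receives a message from another server claiming to be leader and compares terms: if that leader's term is greater than or equal to its own, the Candidate steps down to follower, otherwise it rejects the message and remains a Candidate; (4) several Candidates split the vote so that nobody reaches a majority, and each waits for its election timeout, resets its timer and starts the next round. Because every Candidate draws a new random timeout (in effect sleeping for a random time), the votes are unlikely to split the same way again.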
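The candidate's decision after collecting votes can be sketched as a pure function. `votesGranted` is assumed to include the candidate's own vote, and `leaderTermSeen` is the term of any heartbeat received from a self-declared leader (or -1 if none); all names are illustrative.

```java
/** Illustrative classification of a Raft candidate's situation during one election round. */
class ElectionRound {
    enum Outcome { WON, STEP_DOWN, KEEP_WAITING }

    /** Votes needed for a strict majority among clusterSize voting servers. */
    static int quorum(int clusterSize) {
        return clusterSize / 2 + 1;
    }

    static Outcome decide(int clusterSize, int votesGranted, long myTerm, long leaderTermSeen) {
        if (leaderTermSeen >= myTerm) {
            return Outcome.STEP_DOWN; // a legitimate leader exists for this term or a later one
        }
        if (votesGranted >= quorum(clusterSize)) {
            return Outcome.WON;       // become leader and start sending heartbeats at once
        }
        return Outcome.KEEP_WAITING;  // on timeout: new term, new random timeout, new election
    }
}
```

For a five-node cluster the quorum is 3, so two candidates holding two votes each (plus one vote lost or unused) both keep waiting, which is the split-vote case (4).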

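- At short regular intervals, the leader sends a heartbeat (an AppendEntries request with no entries) to all followers to maintain its authority; a follower resets its election timeout whenever it receives one.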

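Once a leader has been elected, it handles all client operations. The leader appends a client write to its own log (still uncommitted) and sends AppendEntries requests carrying the new entry to all followers. Each follower appends the entry to its own log (also uncommitted) and acknowledges. As soon as a majority of the servers (the leader included) have stored the entry, the leader marks it committed, applies it and replies to the client. Followers learn the new commit index from the leader's subsequent AppendEntries requests (heartbeats included) and then apply the entry themselves. This resembles a two-phase commit (pre-write, then commit), but there is no separate commit RPC.

If the leader crashes right after replying to the client, the followers may not yet know that the entry is committed, but the entry is not lost: it is stored on a majority, and a candidate whose log lacks it cannot collect votes from that majority (they reject it as less up to date), so the next leader necessarily holds the entry and will commit it. Entries that reached only a minority may be overwritten by a new leader, but those writes were never acknowledged to the client.

If a follower does not respond, the leader keeps retrying AppendEntries indefinitely until the follower catches up. If new client requests arrive in the meantime, the retried AppendEntries simply carries all missing entries in log order. The leader therefore decides the order of concurrent requests, and all followers apply the same entries in the same order, which keeps their state consistent. A slow or crashed follower is not removed automatically; Raft changes cluster membership only through explicit configuration changes.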
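The leader's commit decision can be sketched from the `matchIndex` values it tracks per server (the highest log index known to be replicated there). This is a simplified illustration, assuming 1-based log indexes with a dummy entry at index 0; it also applies the Raft rule that a leader only commits entries from its own term by counting replicas (earlier entries become committed together with them). The class name is hypothetical.

```java
import java.util.Arrays;

/** Illustrative computation of a Raft leader's commit index. */
class CommitIndexCalculator {

    /**
     * @param matchIndex  highest replicated log index per server, leader included
     * @param termAt      termAt[i] is the term of log entry i (index 0 is a dummy entry)
     * @param currentTerm the leader's current term
     * @param commitIndex the leader's current commit index
     * @return the new commit index
     */
    static int advance(int[] matchIndex, long[] termAt, long currentTerm, int commitIndex) {
        int[] sorted = matchIndex.clone();
        Arrays.sort(sorted);
        // In ascending order, the value at position (n - 1) / 2 is <= the values held by
        // n - (n - 1) / 2 servers, which is a majority, so that index is stored on a majority.
        int majorityIndex = sorted[(sorted.length - 1) / 2];
        // Count replicas only for an entry of the current term; log terms never decrease,
        // so if this entry is from an older term, every lower entry is too.
        if (majorityIndex > commitIndex && termAt[majorityIndex] == currentTerm) {
            return majorityIndex;
        }
        return commitIndex;
    }
}
```

In the first test case below, entries 1-3 are committed at once because entry 3 (term 2) is on three of five servers.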

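Open-source implementation: the CP mode of Nacos, a service registration and discovery center. The Raft-based CP mode in Nacos is not strict either: it only guarantees consistency as seen by a majority, and it makes data loss unlikely rather than impossible.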

Analysis of the Raft source code in Nacos (based on Nacos 1.3.0, which differs from standard Raft in several respects):

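Nacos is an open-source component from Alibaba that combines a service registry and a configuration center in one distributed application. Documentation: http://dubbo.apache.org/zh-cn/docs/user/references/registry/nacos.html. Nacos offers different CAP trade-offs for different environments: CP based on Raft elections, and AP based on peer-to-peer nodes modelled on Eureka Server. This article focuses on the Raft implementation.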

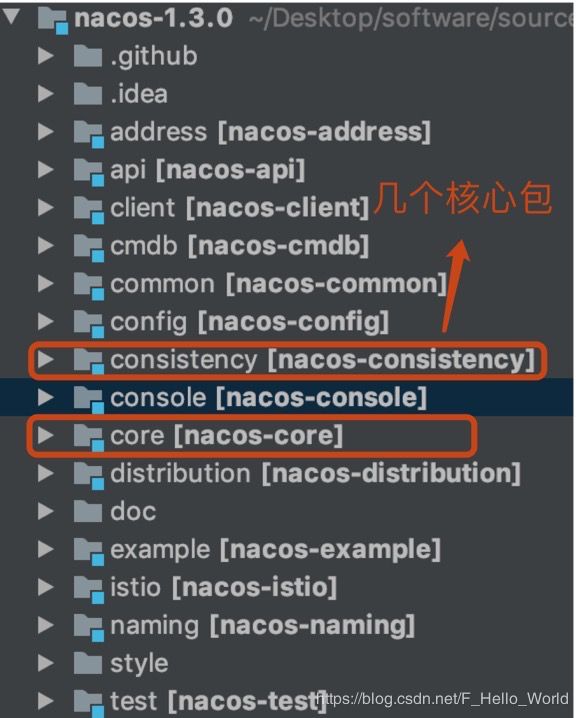

Nacos 1.3.0 is a Spring Boot project, so its code is easier to walk through than that of many other components. The figure shows the Nacos source.

The core election code lives in RaftCore:

public class RaftCore {

/**

* 提供对外访问api路径

*/

public static final String API_VOTE = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/vote";

public static final String API_BEAT = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/beat";

public static final String API_PUB = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/datum";

public static final String API_DEL = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/datum";

public static final String API_GET = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/datum";

public static final String API_ON_PUB = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/datum/commit";

public static final String API_ON_DEL = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/datum/commit";

public static final String API_GET_PEER = UtilsAndCommons.NACOS_NAMING_CONTEXT + "/raft/peer";

private ScheduledExecutorService executor = new ScheduledThreadPoolExecutor(1, new ThreadFactory() {

@Override

public Thread newThread(Runnable r) {

Thread t = new Thread(r);

t.setDaemon(true);

t.setName("com.alibaba.nacos.naming.raft.notifier");

return t;

}

});

//

public static final Lock OPERATE_LOCK = new ReentrantLock();

public static final int PUBLISH_TERM_INCREASE_COUNT = 100;

//记录Listener

private volatile Map> listeners = new ConcurrentHashMap<>();

private volatile ConcurrentMap datums = new ConcurrentHashMap<>();

@Autowired

//对等节点集合 在选举阶段节点都是定位一致

private RaftPeerSet peers;

@Autowired

private SwitchDomain switchDomain;

@Autowired

private GlobalConfig globalConfig;

@Autowired

private RaftProxy raftProxy;

@Autowired

private RaftStore raftStore;

//通知者 用于发送信息给其它节点

public volatile Notifier notifier = new Notifier();

private boolean initialized = false;

@PostConstruct

//在RaftCore初始化之后执行

public void init() throws Exception {

//初始化

Loggers.RAFT.info("initializing Raft sub-system");

//启动Notifier,轮询Datums,通知RaftListener

executor.submit(notifier);

long start = System.currentTimeMillis();

//用于从磁盘加载Datum和term数据进行数据恢复 并添加到notifier

raftStore.loadDatums(notifier, datums);

//初始化当前 term选票 默认为0

setTerm(NumberUtils.toLong(raftStore.loadMeta().getProperty("term"), 0L));

//打印日志

Loggers.RAFT.info("cache loaded, datum count: {}, current term: {}", datums.size(), peers.getTerm());

while (true) {

if (notifier.tasks.size() <= 0) {

break;

}

Thread.sleep(1000L);

}

initialized = true;

Loggers.RAFT.info("finish to load data from disk, cost: {} ms.", (System.currentTimeMillis() - start));

//注册master选举任务 选举超时时间0-15s之间 每TICK_PERIOD_MS=500ms执行一次

GlobalExecutor.registerMasterElection(new MasterElection());

//注册一个心跳检测事件 0-5s执行每TICK_PERIOD_MS=500ms执行一次

GlobalExecutor.registerHeartbeat(new HeartBeat());

Loggers.RAFT.info("timer started: leader timeout ms: {}, heart-beat timeout ms: {}",

GlobalExecutor.LEADER_TIMEOUT_MS, GlobalExecutor.HEARTBEAT_INTERVAL_MS);

}

public Map> getListeners() {

return listeners;

}

public void signalPublish(String key, Record value) throws Exception {

if (!isLeader()) {

ObjectNode params = JacksonUtils.createEmptyJsonNode();

params.put("key", key);

params.replace("value", JacksonUtils.transferToJsonNode(value));

Map parameters = new HashMap<>(1);

parameters.put("key", key);

final RaftPeer leader = getLeader();

raftProxy.proxyPostLarge(leader.ip, API_PUB, params.toString(), parameters);

return;

}

try {

OPERATE_LOCK.lock();

long start = System.currentTimeMillis();

final Datum datum = new Datum();

datum.key = key;

datum.value = value;

if (getDatum(key) == null) {

datum.timestamp.set(1L);

} else {

datum.timestamp.set(getDatum(key).timestamp.incrementAndGet());

}

ObjectNode json = JacksonUtils.createEmptyJsonNode();

json.replace("datum", JacksonUtils.transferToJsonNode(datum));

json.replace("source", JacksonUtils.transferToJsonNode(peers.local()));

onPublish(datum, peers.local());

final String content = json.toString();

final CountDownLatch latch = new CountDownLatch(peers.majorityCount());

for (final String server : peers.allServersIncludeMyself()) {

if (isLeader(server)) {

latch.countDown();

continue;

}

final String url = buildURL(server, API_ON_PUB);

HttpClient.asyncHttpPostLarge(url, Arrays.asList("key=" + key), content, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.warn("[RAFT] failed to publish data to peer, datumId={}, peer={}, http code={}",

datum.key, server, response.getStatusCode());

return 1;

}

latch.countDown();

return 0;

}

@Override

public STATE onContentWriteCompleted() {

return STATE.CONTINUE;

}

});

}

if (!latch.await(UtilsAndCommons.RAFT_PUBLISH_TIMEOUT, TimeUnit.MILLISECONDS)) {

// only majority servers return success can we consider this update success

Loggers.RAFT.error("data publish failed, caused failed to notify majority, key={}", key);

throw new IllegalStateException("data publish failed, caused failed to notify majority, key=" + key);

}

long end = System.currentTimeMillis();

Loggers.RAFT.info("signalPublish cost {} ms, key: {}", (end - start), key);

} finally {

OPERATE_LOCK.unlock();

}

}

public void signalDelete(final String key) throws Exception {

OPERATE_LOCK.lock();

try {

if (!isLeader()) {

Map params = new HashMap<>(1);

params.put("key", URLEncoder.encode(key, "UTF-8"));

raftProxy.proxy(getLeader().ip, API_DEL, params, HttpMethod.DELETE);

return;

}

// construct datum:

Datum datum = new Datum();

datum.key = key;

ObjectNode json = JacksonUtils.createEmptyJsonNode();

json.replace("datum", JacksonUtils.transferToJsonNode(datum));

json.replace("source", JacksonUtils.transferToJsonNode(peers.local()));

onDelete(datum.key, peers.local());

for (final String server : peers.allServersWithoutMySelf()) {

String url = buildURL(server, API_ON_DEL);

HttpClient.asyncHttpDeleteLarge(url, null, json.toString()

, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.warn("[RAFT] failed to delete data from peer, datumId={}, peer={}, http code={}", key, server, response.getStatusCode());

return 1;

}

RaftPeer local = peers.local();

local.resetLeaderDue();

return 0;

}

});

}

} finally {

OPERATE_LOCK.unlock();

}

}

public void onPublish(Datum datum, RaftPeer source) throws Exception {

RaftPeer local = peers.local();

if (datum.value == null) {

Loggers.RAFT.warn("received empty datum");

throw new IllegalStateException("received empty datum");

}

if (!peers.isLeader(source.ip)) {

Loggers.RAFT.warn("peer {} tried to publish data but wasn't leader, leader: {}",

JacksonUtils.toJson(source), JacksonUtils.toJson(getLeader()));

throw new IllegalStateException("peer(" + source.ip + ") tried to publish " +

"data but wasn't leader");

}

if (source.term.get() < local.term.get()) {

Loggers.RAFT.warn("out of date publish, pub-term: {}, cur-term: {}",

JacksonUtils.toJson(source), JacksonUtils.toJson(local));

throw new IllegalStateException("out of date publish, pub-term:"

+ source.term.get() + ", cur-term: " + local.term.get());

}

local.resetLeaderDue();

// if data should be persisted, usually this is true:

if (KeyBuilder.matchPersistentKey(datum.key)) {

raftStore.write(datum);

}

datums.put(datum.key, datum);

if (isLeader()) {

local.term.addAndGet(PUBLISH_TERM_INCREASE_COUNT);

} else {

if (local.term.get() + PUBLISH_TERM_INCREASE_COUNT > source.term.get()) {

//set leader term:

getLeader().term.set(source.term.get());

local.term.set(getLeader().term.get());

} else {

local.term.addAndGet(PUBLISH_TERM_INCREASE_COUNT);

}

}

raftStore.updateTerm(local.term.get());

notifier.addTask(datum.key, ApplyAction.CHANGE);

Loggers.RAFT.info("data added/updated, key={}, term={}", datum.key, local.term);

}

public void onDelete(String datumKey, RaftPeer source) throws Exception {

RaftPeer local = peers.local();

if (!peers.isLeader(source.ip)) {

Loggers.RAFT.warn("peer {} tried to publish data but wasn't leader, leader: {}",

JacksonUtils.toJson(source), JacksonUtils.toJson(getLeader()));

throw new IllegalStateException("peer(" + source.ip + ") tried to publish data but wasn't leader");

}

if (source.term.get() < local.term.get()) {

Loggers.RAFT.warn("out of date publish, pub-term: {}, cur-term: {}",

JacksonUtils.toJson(source), JacksonUtils.toJson(local));

throw new IllegalStateException("out of date publish, pub-term:"

+ source.term + ", cur-term: " + local.term);

}

local.resetLeaderDue();

// do apply

String key = datumKey;

deleteDatum(key);

if (KeyBuilder.matchServiceMetaKey(key)) {

if (local.term.get() + PUBLISH_TERM_INCREASE_COUNT > source.term.get()) {

//set leader term:

getLeader().term.set(source.term.get());

local.term.set(getLeader().term.get());

} else {

local.term.addAndGet(PUBLISH_TERM_INCREASE_COUNT);

}

raftStore.updateTerm(local.term.get());

}

Loggers.RAFT.info("data removed, key={}, term={}", datumKey, local.term);

}

//执行选举任务

public class MasterElection implements Runnable {

@Override

public void run() {

try {

if (!peers.isReady()) {

return;

}

RaftPeer local = peers.local();

//一旦leaderDueMs=0说明选举定时任务已经超期 要进行一轮选举

local.leaderDueMs -= GlobalExecutor.TICK_PERIOD_MS;

if (local.leaderDueMs > 0) {

return;

}

// reset timeout

//重置选举触发超时时间

local.resetLeaderDue();

//重置本地心跳时间 避免触发无效的心跳检测

local.resetHeartbeatDue();

//集群广播发送选票

sendVote();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while master election {}", e);

}

}

public void sendVote() {

//

RaftPeer local = peers.get(NetUtils.localServer());

Loggers.RAFT.info("leader timeout, start voting,leader: {}, term: {}",

JacksonUtils.toJson(getLeader()), local.term);

//将所有peer节点的leader置为null

peers.reset();

//自增选举周期

local.term.incrementAndGet();

//将自身作为候选者 相当于投自己一票

local.voteFor = local.ip;

//修改当前节点状态为CANDIDATE候选者

local.state = RaftPeer.State.CANDIDATE;

Map params = new HashMap<>(1);

params.put("vote", JacksonUtils.toJson(local));

//将本地vote 发送除自己以外的其它节点

for (final String server : peers.allServersWithoutMySelf()) {

final String url = buildURL(server, API_VOTE);

try {

//异步发送http请求 这里跳转到对应的response接口

HttpClient.asyncHttpPost(url, null, params, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT vote failed: {}, url: {}", response.getResponseBody(), url);

return 1;

}

//收到发送节点的response响应 这里接受者投自己认为最合适的leader一票 不一定是当前发送投票的候选者

RaftPeer peer = JacksonUtils.toObj(response.getResponseBody(), RaftPeer.class);

Loggers.RAFT.info("received approve from peer: {}", JacksonUtils.toJson(peer));

//收到请求之后马上 归档票数是否满足过半要求

peers.decideLeader(peer);

return 0;

}

});

} catch (Exception e) {

//发送失败记录日志

Loggers.RAFT.warn("error while sending vote to server: {}", server);

}

}

}

}

/**

* 接受选票信息 并做出处理 这里使用synchronized 并发请求串行化

* @param remote

* @return

*/

public synchronized RaftPeer receivedVote(RaftPeer remote) {

//查看收到的选票是否合法

if (!peers.contains(remote)) {

throw new IllegalStateException("can not find peer: " + remote.ip);

}

//获取当前节点信息

RaftPeer local = peers.get(NetUtils.localServer());

//判断vote携带的选举周期与当前选举周期是否为同一个周期

if (remote.term.get() <= local.term.get()) {

//小于选举周期

String msg = "received illegitimate vote" +

", voter-term:" + remote.term + ", votee-term:" + local.term;

//打印日志信息

Loggers.RAFT.info(msg);

//判断当前节点是否已经已经投过票 若还未投票投给自己(term越大说明选举出来的master最新)

if (StringUtils.isEmpty(local.voteFor)) {

//投给自身一票

local.voteFor = local.ip;

}

//将本身返回 加快选举过程 这点与传统的raft算法做出了改进

return local;

}

//说明收到选票周期处于领先中

//避免当前follower成为CANDIDATE候选者 参与选举

local.resetLeaderDue();

//修改当前 节点状态 并改变投票信息 选举周期 这些操作减少竞争者出现 尽量早点选举leader

local.state = RaftPeer.State.FOLLOWER;

local.voteFor = remote.ip;

local.term.set(remote.term.get());

Loggers.RAFT.info("vote {} as leader, term: {}", remote.ip, remote.term);

return local;

}

/**

* 用于心跳任务

*/

public class HeartBeat implements Runnable {

@Override

public void run() {

try {

if (!peers.isReady()) {

return;

}

//获取本地节点信息

RaftPeer local = peers.local();

//通过周期来控制

local.heartbeatDueMs -= GlobalExecutor.TICK_PERIOD_MS;

if (local.heartbeatDueMs > 0) {

return;

}

//重新重置心跳

local.resetHeartbeatDue();

//发送心跳检测 若心跳失败将触发leader选举

sendBeat();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while sending beat {}", e);

}

}

/**

* 发送心跳检测信息 nacos通过心跳检测机制(leader发送心跳包的同时会携带自身对应的服务列表信息)来保证集群的一致性

* @throws IOException

* @throws InterruptedException

*/

public void sendBeat() throws IOException, InterruptedException {

//获取当前服务信息

RaftPeer local = peers.local();

//只有需leader以及nacos为集群模式才进行心跳检测(这里心跳检测机制是由leader发起)

if (local.state != RaftPeer.State.LEADER && !ApplicationUtils.getStandaloneMode()) {

return;

}

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[RAFT] send beat with {} keys.", datums.size());

}

//触发了心跳检测 当前leader正常重新刷新leader超时

local.resetLeaderDue();

// build data

ObjectNode packet = JacksonUtils.createEmptyJsonNode();

//封装当前节点信息 发送给所有follower 保证数据状态一致

packet.replace("peer", JacksonUtils.transferToJsonNode(local));

ArrayNode array = JacksonUtils.createEmptyArrayNode();

if (switchDomain.isSendBeatOnly()) {

Loggers.RAFT.info("[SEND-BEAT-ONLY] {}", String.valueOf(switchDomain.isSendBeatOnly()));

}

//是否开启只发送心跳检测 而不携带datums信息(客户端节点信息)

if (!switchDomain.isSendBeatOnly()) {

for (Datum datum : datums.values()) {

//构建个连接的客户端节点信息

ObjectNode element = JacksonUtils.createEmptyJsonNode();

if (KeyBuilder.matchServiceMetaKey(datum.key)) {

element.put("key", KeyBuilder.briefServiceMetaKey(datum.key));

} else if (KeyBuilder.matchInstanceListKey(datum.key)) {

element.put("key", KeyBuilder.briefInstanceListkey(datum.key));

}

element.put("timestamp", datum.timestamp.get());

array.add(element);

}

}

//将当前节点拥有的datums发送

packet.replace("datums", array);

// broadcast

Map params = new HashMap(1);

params.put("beat", JacksonUtils.toJson(packet));

//封装数据 因为数据体量可能较大 将请求进行gzip压缩

String content = JacksonUtils.toJson(params);

ByteArrayOutputStream out = new ByteArrayOutputStream();

GZIPOutputStream gzip = new GZIPOutputStream(out);

gzip.write(content.getBytes(StandardCharsets.UTF_8));

gzip.close();

byte[] compressedBytes = out.toByteArray();

//获取压缩后数据

String compressedContent = new String(compressedBytes, StandardCharsets.UTF_8);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("raw beat data size: {}, size of compressed data: {}",

content.length(), compressedContent.length());

}

//循环发送现有的所有节点信息

for (final String server : peers.allServersWithoutMySelf()) {

try {

//构建请求url

final String url = buildURL(server, API_BEAT);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("send beat to server " + server);

}

//发送心跳信息

HttpClient.asyncHttpPostLarge(url, null, compressedBytes, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

//

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT beat failed: {}, peer: {}",

response.getResponseBody(), server);

//监控记录失败数

MetricsMonitor.getLeaderSendBeatFailedException().increment();

return 1;

}

//收到raft节点信息

peers.update(JacksonUtils.toObj(response.getResponseBody(), RaftPeer.class));

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("receive beat response from: {}", url);

}

return 0;

}

@Override

public void onThrowable(Throwable t) {

Loggers.RAFT.error("NACOS-RAFT error while sending heart-beat to peer: {} {}", server, t);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

});

} catch (Exception e) {

Loggers.RAFT.error("error while sending heart-beat to peer: {} {}", server, e);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

}

}

}

/**

* follower收到beat心跳后

* @param beat

* @return

* @throws Exception

*/

public RaftPeer receivedBeat(JsonNode beat) throws Exception {

//本地节点

final RaftPeer local = peers.local();

//创建收到的leader节点信息 并赋值(这里可能会出现非)

final RaftPeer remote = new RaftPeer();

JsonNode peer = beat.get("peer");

remote.ip = peer.get("ip").asText();

remote.state = RaftPeer.State.valueOf(peer.get("state").asText());

remote.term.set(peer.get("term").asLong());

remote.heartbeatDueMs = peer.get("heartbeatDueMs").asLong();

remote.leaderDueMs = peer.get("leaderDueMs").asLong();

remote.voteFor = peer.get("voteFor").asText();

//心跳数据检测是否合法数据

//所有非leader发送的心跳检测都为不合法

if (remote.state != RaftPeer.State.LEADER) {

Loggers.RAFT.info("[RAFT] invalid state from master, state: {}, remote peer: {}",

remote.state, JacksonUtils.toJson(remote));

throw new IllegalArgumentException("invalid state from master, state: " + remote.state);

}

//follower一定要小于leader的term周期 这样也就代表nacos 中raft以选举周期为第一 谁的选举周期高谁就成为leader

//这里会出现一个问题 若出现网络分区的情况 集群出现了分区(假设集群有1-5个节点 1节点为leader 2-4 为

//follower 若1-3 为一个分区 4,5为一个分区)1,3正常运行 而4,5缺少leader重新分区 ,因为一致无法确定分区导致

//4,5选举term周期一直领先于1节点 若分区正常了,1发送给4,5的心跳在这里被拒绝,也就是说集群会重新进行选举并且在4,5中被选中leader

if (local.term.get() > remote.term.get()) {

Loggers.RAFT.info("[RAFT] out of date beat, beat-from-term: {}, beat-to-term: {}, remote peer: {}, and leaderDueMs: {}"

, remote.term.get(), local.term.get(), JacksonUtils.toJson(remote), local.leaderDueMs);

throw new IllegalArgumentException("out of date beat, beat-from-term: " + remote.term.get()

+ ", beat-to-term: " + local.term.get());

}

//当前服务器状态必须为follower 若不是需要重新修改

if (local.state != RaftPeer.State.FOLLOWER) {

Loggers.RAFT.info("[RAFT] make remote as leader, remote peer: {}", JacksonUtils.toJson(remote));

// mk follower

local.state = RaftPeer.State.FOLLOWER;

local.voteFor = remote.ip;

}

final JsonNode beatDatums = beat.get("datums");

//因为follower与leader能正常通信 避免该follower 成为Candidate候选者

local.resetLeaderDue();

local.resetHeartbeatDue();

//确定leader地位(一定leader确定就是通过心跳检测广播通知 确定自己在集群中leader位置 )

peers.makeLeader(remote);

//如果

if (!switchDomain.isSendBeatOnly()) {

//交叉对比 本地与leader携带服务实例

Map receivedKeysMap = new HashMap<>(datums.size());

//加载本地缓存对应的实例数据

for (Map.Entry entry : datums.entrySet()) {

receivedKeysMap.put(entry.getKey(), 0);

}

//记录后续批次请求数据

List batch = new ArrayList<>();

//每收集到50个datumKey,则向Leader节点的/v1/ns/raft/get路径发送请求,请求参数为这50个datumKey,获取对应的50个最新的Datum对象;

int processedCount = 0;

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[RAFT] received beat with {} keys, RaftCore.datums' size is {}, remote server: {}, term: {}, local term: {}",

beatDatums.size(), datums.size(), remote.ip, remote.term, local.term);

}

//处理收到的leader对应实例数据

for (Object object : beatDatums) {

processedCount = processedCount + 1;

JsonNode entry = (JsonNode) object;

String key = entry.get("key").asText();

final String datumKey;

//判断datum类型是服务实例key还是Meta元数据类型

if (KeyBuilder.matchServiceMetaKey(key)) {

datumKey = KeyBuilder.detailServiceMetaKey(key);

} else if (KeyBuilder.matchInstanceListKey(key)) {

datumKey = KeyBuilder.detailInstanceListkey(key);

} else {

// ignore corrupted key:

continue;

}

long timestamp = entry.get("timestamp").asLong();

receivedKeysMap.put(datumKey, 1);

try {

//这里开始检测实例数据datum的有效性

//本地包含了实例信息 并且注册的timestamp>=leader的说明实例有效 并且不是最后一个datumKey

if (datums.containsKey(datumKey) && datums.get(datumKey).timestamp.get() >= timestamp && processedCount < beatDatums.size()) {

continue;

}

//本地实例中不包含datumKey或者注册的实例没有leader最新 说明这些实例需要更新

if (!(datums.containsKey(datumKey) && datums.get(datumKey).timestamp.get() >= timestamp)) {

//写入待更新列表

batch.add(datumKey);

}

if (batch.size() < 50 && processedCount < beatDatums.size()) {

continue;

}

//合并数据

String keys = StringUtils.join(batch, ",");

if (batch.size() <= 0) {

continue;

}

//记录日志

Loggers.RAFT.info("get datums from leader: {}, batch size is {}, processedCount is {}, datums' size is {}, RaftCore.datums' size is {}"

, getLeader().ip, batch.size(), processedCount, beatDatums.size(), datums.size());

// 修改datum 数据

//获取最新实例数据 修改对应节点保存的数据

String url = buildURL(remote.ip, API_GET) + "?keys=" + URLEncoder.encode(keys, "UTF-8");

HttpClient.asyncHttpGet(url, null, null, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

return 1;

}

//解析返回的列表数据

List datumList = JacksonUtils.toObj(response.getResponseBody(), new TypeReference>() {});

for (JsonNode datumJson : datumList) {

//加锁处理 (提供的http接口)避免多线程访问安全

OPERATE_LOCK.lock();

Datum newDatum = null;

try {

//获取本地Datum数据

Datum oldDatum = getDatum(datumJson.get("key").asText());

//再次验证Datum数据

if (oldDatum != null && datumJson.get("timestamp").asLong() <= oldDatum.timestamp.get()) {

Loggers.RAFT.info("[NACOS-RAFT] timestamp is smaller than that of mine, key: {}, remote: {}, local: {}",

datumJson.get("key").asText(), datumJson.get("timestamp").asLong(), oldDatum.timestamp);

continue;

}

//判断数据类型 感觉不同类型进行节点替换

if (KeyBuilder.matchServiceMetaKey(datumJson.get("key").asText())) {

Datum serviceDatum = new Datum<>();

serviceDatum.key = datumJson.get("key").asText();

serviceDatum.timestamp.set(datumJson.get("timestamp").asLong());

serviceDatum.value = JacksonUtils.toObj(datumJson.get("value").toString(), Service.class);

newDatum = serviceDatum;

}

if (KeyBuilder.matchInstanceListKey(datumJson.get("key").asText())) {

Datum instancesDatum = new Datum<>();

instancesDatum.key = datumJson.get("key").asText();

instancesDatum.timestamp.set(datumJson.get("timestamp").asLong());

instancesDatum.value = JacksonUtils.toObj(datumJson.get("value").toString(), Instances.class);

newDatum = instancesDatum;

}

if (newDatum == null || newDatum.value == null) {

Loggers.RAFT.error("receive null datum: {}", datumJson);

continue;

}

//将最新的datum写入磁盘

raftStore.write(newDatum);

//修改本地缓存信息

datums.put(newDatum.key, newDatum);

//添加datum变更任务

notifier.addTask(newDatum.key, ApplyAction.CHANGE);

//再次重置leader选举超时时间

local.resetLeaderDue();

//同步选举周期 这是一个缓慢过程

if (local.term.get() + 100 > remote.term.get()) {

getLeader().term.set(remote.term.get());

local.term.set(getLeader().term.get());

} else {

//本地选举落后leader过多(例如新增的节点) 存在datum差距过大 若一次性同步选举周期 会导致

//极端情况下 新增的节点会成为leader

local.term.addAndGet(100);

}

//将最新的term进行落盘

raftStore.updateTerm(local.term.get());

Loggers.RAFT.info("data updated, key: {}, timestamp: {}, from {}, local term: {}",

newDatum.key, newDatum.timestamp, JacksonUtils.toJson(remote), local.term);

} catch (Throwable e) {

Loggers.RAFT.error("[RAFT-BEAT] failed to sync datum from leader, datum: {}", newDatum, e);

} finally {

OPERATE_LOCK.unlock();

}

}

TimeUnit.MILLISECONDS.sleep(200);

return 0;

}

});

//清除缓存key

batch.clear();

} catch (Exception e) {

Loggers.RAFT.error("[NACOS-RAFT] failed to handle beat entry, key: {}", datumKey);

}

}

//这里需要处理下follower中存在实例而leader中不存在 也就是以leader数据为主

List deadKeys = new ArrayList<>();

for (Map.Entry entry : receivedKeysMap.entrySet()) {

if (entry.getValue() == 0) {

//添加本地存在的key

deadKeys.add(entry.getKey());

}

}

for (String deadKey : deadKeys) {

try {

//删除本地存在的实例信息 一切以leader信息为主 也就是上文叙说的情况 这也是为何nacos的raft算法并不是强一致性CP模型

deleteDatum(deadKey);

} catch (Exception e) {

Loggers.RAFT.error("[NACOS-RAFT] failed to remove entry, key={} {}", deadKey, e);

}

}

}

return local;

}

/**

* 添加一个监听

* @param key

* @param listener

*/

public void listen(String key, RecordListener listener) {

List listenerList = listeners.get(key);

if (listenerList != null && listenerList.contains(listener)) {

return;

}

if (listenerList == null) {

listenerList = new CopyOnWriteArrayList<>();

listeners.put(key, listenerList);

}

Loggers.RAFT.info("add listener: {}", key);

listenerList.add(listener);

// if data present, notify immediately

for (Datum datum : datums.values()) {

if (!listener.interests(datum.key)) {

continue;

}

try {

listener.onChange(datum.key, datum.value);

} catch (Exception e) {

Loggers.RAFT.error("NACOS-RAFT failed to notify listener", e);

}

}

}

/**

* 取消一个监听

* @param key

* @param listener

*/

public void unlisten(String key, RecordListener listener) {

if (!listeners.containsKey(key)) {

return;

}

for (RecordListener dl : listeners.get(key)) {

// TODO maybe use equal:

if (dl == listener) {

listeners.get(key).remove(listener);

break;

}

}

}

public void unlistenAll(String key) {

listeners.remove(key);

}

public void setTerm(long term) {

peers.setTerm(term);

}

public boolean isLeader(String ip) {

return peers.isLeader(ip);

}

public boolean isLeader() {

return peers.isLeader(NetUtils.localServer());

}

public static String buildURL(String ip, String api) {

if (!ip.contains(UtilsAndCommons.IP_PORT_SPLITER)) {

ip = ip + UtilsAndCommons.IP_PORT_SPLITER + ApplicationUtils.getPort();

}

return "http://" + ip + ApplicationUtils.getContextPath() + api;

}

public Datum getDatum(String key) {

return datums.get(key);

}

public RaftPeer getLeader() {

return peers.getLeader();

}

public List getPeers() {

return new ArrayList<>(peers.allPeers());

}

public RaftPeerSet getPeerSet() {

return peers;

}

public void setPeerSet(RaftPeerSet peerSet) {

peers = peerSet;

}

public int datumSize() {

return datums.size();

}

public void addDatum(Datum datum) {

datums.put(datum.key, datum);

notifier.addTask(datum.key, ApplyAction.CHANGE);

}

public void loadDatum(String key) {

try {

Datum datum = raftStore.load(key);

if (datum == null) {

return;

}

datums.put(key, datum);

} catch (Exception e) {

Loggers.RAFT.error("load datum failed: " + key, e);

}

}

private void deleteDatum(String key) {

Datum deleted;

try {

//移除缓存数据

deleted = datums.remove(URLDecoder.decode(key, "UTF-8"));

if (deleted != null) {

raftStore.delete(deleted);

Loggers.RAFT.info("datum deleted, key: {}", key);

}

//添加异步删除任务

notifier.addTask(URLDecoder.decode(key, "UTF-8"), ApplyAction.DELETE);

} catch (UnsupportedEncodingException e) {

Loggers.RAFT.warn("datum key decode failed: {}", key);

}

}

public boolean isInitialized() {

return initialized || !globalConfig.isDataWarmup();

}

public int getNotifyTaskCount() {

return notifier.getTaskSize();

}

/**

* 异步处理datum发生的各种事件 change 或DELETE

* 用于通知各listeners

*/

public class Notifier implements Runnable {

//用于记录待处理的️services列表

private ConcurrentHashMap services = new ConcurrentHashMap<>(10 * 1024);

//阻塞队列任务

private BlockingQueue tasks = new LinkedBlockingQueue<>(1024 * 1024);

//添加任务

public void addTask(String datumKey, ApplyAction action) {

if (services.containsKey(datumKey) && action == ApplyAction.CHANGE) {

return;

}

if (action == ApplyAction.CHANGE) {

services.put(datumKey, StringUtils.EMPTY);

}

Loggers.RAFT.info("add task {}", datumKey);

tasks.add(Pair.with(datumKey, action));

}

public int getTaskSize() {

return tasks.size();

}

@Override

public void run() {

//启动通知者

Loggers.RAFT.info("raft notifier started");

while (true) {

try {

//从任务列表中取出待处理任务

Pair pair = tasks.take();

if (pair == null) {

continue;

}

String datumKey = (String) pair.getValue0();

ApplyAction action = (ApplyAction) pair.getValue1();

//取出任务就移除旧任务

services.remove(datumKey);

Loggers.RAFT.info("remove task {}", datumKey);

int count = 0;

//处理注册的listeners 发送对应的监听事件

//处理SERVICE_META

if (listeners.containsKey(KeyBuilder.SERVICE_META_KEY_PREFIX)) {

if (KeyBuilder.matchServiceMetaKey(datumKey) && !KeyBuilder.matchSwitchKey(datumKey)) {

for (RecordListener listener : listeners.get(KeyBuilder.SERVICE_META_KEY_PREFIX)) {

try {

if (action == ApplyAction.CHANGE) {

listener.onChange(datumKey, getDatum(datumKey).value);

}

if (action == ApplyAction.DELETE) {

listener.onDelete(datumKey);

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] error while notifying listener of key: {}", datumKey, e);

}

}

}

}

if (!listeners.containsKey(datumKey)) {

continue;

}

for (RecordListener listener : listeners.get(datumKey)) {

count++;

try {

// CHANGE event

if (action == ApplyAction.CHANGE) {

listener.onChange(datumKey, getDatum(datumKey).value);

continue;

}

// DELETE event

if (action == ApplyAction.DELETE) {

listener.onDelete(datumKey);

continue;

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] error while notifying listener of key: {}", datumKey, e);

}

}

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[NACOS-RAFT] datum change notified, key: {}, listener count: {}", datumKey, count);

}

} catch (Throwable e) {

Loggers.RAFT.error("[NACOS-RAFT] Error while handling notifying task", e);

}

}

}

}

}
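The Notifier above drops a CHANGE task when one for the same key is already queued, because the listener reads the latest datum when the task eventually runs; DELETE tasks are never dropped. A minimal standalone sketch of that pattern, assuming hypothetical names (DedupNotifierSketch, Action, processOne) and returning the event string instead of calling real RecordListeners. It uses putIfAbsent where Nacos uses a containsKey/put pair, so the check and the insert happen in one atomic step.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical sketch of the de-duplicating notifier pattern; not Nacos's API.
class DedupNotifierSketch {
    enum Action { CHANGE, DELETE }

    // keys that already have a CHANGE task waiting in the queue
    private final ConcurrentHashMap<String, Boolean> pending = new ConcurrentHashMap<>();
    private final BlockingQueue<Map.Entry<String, Action>> tasks = new LinkedBlockingQueue<>();

    void addTask(String key, Action action) {
        // putIfAbsent makes "already queued?" and "mark as queued" a single atomic step
        if (action == Action.CHANGE && pending.putIfAbsent(key, Boolean.TRUE) != null) {
            return; // the queued CHANGE will read the latest value anyway
        }
        tasks.add(new SimpleEntry<>(key, action));
    }

    /** Takes one task and returns the event that would be dispatched to the listeners. */
    String processOne() throws InterruptedException {
        Map.Entry<String, Action> task = tasks.take();
        pending.remove(task.getKey()); // new CHANGE tasks for this key may be queued again
        return task.getValue() + ":" + task.getKey();
    }

    int size() {
        return tasks.size();
    }

    public static void main(String[] args) throws InterruptedException {
        DedupNotifierSketch notifier = new DedupNotifierSketch();
        notifier.addTask("svc-a", Action.CHANGE);
        notifier.addTask("svc-a", Action.CHANGE); // dropped: a CHANGE is already queued
        notifier.addTask("svc-a", Action.DELETE); // DELETE is never de-duplicated
        List<String> events = new ArrayList<>();
        while (notifier.size() > 0) {
            events.add(notifier.processOne());
        }
        System.out.println(events); // [CHANGE:svc-a, DELETE:svc-a]
    }
}
```

The queue stays bounded by the number of distinct keys with pending changes, no matter how often a single service changes in a burst.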

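Leader election in Nacos broadly follows the Raft process described above, with the following differences:

- Nacos broadcasts vote requests asynchronously over HTTP. A follower casts only one vote per term. If the follower's term is greater than the candidate's, it returns its own vote instead, which in effect tells the candidate "I have a better choice".

- Heartbeats are initiated by the leader. A follower rejects heartbeats from a leader whose term is smaller than its own, which ensures that the node with the larger term ends up as leader.

- Nacos's Raft implementation synchronizes data between the leader and the followers through periodic heartbeats.

- When the leader's and a follower's data differ, the leader's data wins. The leader never pulls data from followers.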
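The first two rules can be sketched as two handlers on a follower. This is a simplified illustration of the behavior described above, not Nacos's RaftCore code. The class and method names are hypothetical, and treating a vote request with an equal term as "already decided" is an assumption that follows from the one-vote-per-term rule.

```java
// Hypothetical sketch of the follower-side term checks; not Nacos's API.
class TermCheckSketch {
    final String id;
    long term;
    String votedFor; // vote cast in the current term (null = none yet)

    TermCheckSketch(String id, long term) {
        this.id = id;
        this.term = term;
    }

    /** Handles a vote request and returns the id this peer votes for. */
    String onVoteRequest(String candidateId, long candidateTerm) {
        if (candidateTerm <= term) {
            // stale or same-term request: answer with our own choice ("I have a better option")
            if (votedFor == null) {
                votedFor = id;
            }
            return votedFor;
        }
        // newer term: adopt it and give the single vote of this term to the candidate
        term = candidateTerm;
        votedFor = candidateId;
        return votedFor;
    }

    /** Returns true if the leader's heartbeat is accepted. */
    boolean onHeartbeat(String leaderId, long leaderTerm) {
        if (leaderTerm < term) {
            return false; // the leader's term is behind ours: reject the heartbeat
        }
        term = leaderTerm;
        votedFor = leaderId;
        return true;
    }

    public static void main(String[] args) {
        TermCheckSketch follower = new TermCheckSketch("n2", 5);
        System.out.println(follower.onVoteRequest("n1", 4)); // n2: candidate's term is behind
        System.out.println(follower.onVoteRequest("n1", 6)); // n1: newer term gets the vote
        System.out.println(follower.onVoteRequest("n3", 6)); // n1: already voted in term 6
        System.out.println(follower.onHeartbeat("n3", 5));   // false: stale leader
    }
}
```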

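In extreme cases this design can leave the data inconsistent and can even lose data:

Suppose a network partition splits a five-node cluster (node 1 is the leader, nodes 2-5 are followers) into {1, 2, 3} and {4, 5}. Nodes 1-3 keep working normally, while nodes 4 and 5 lose their leader and keep starting new elections. Because they can never form a majority, their terms keep growing and eventually exceed node 1's. When the partition heals, nodes 4 and 5 reject node 1's heartbeats because its term is smaller, so the cluster runs a new election and the new leader is elected from nodes 4 and 5. Their data is certainly not the most recent, and since the leader's data always wins, the data registered on nodes 1-3 during the partition is deleted. It is only restored when the clients re-register with, or send heartbeats to, the new leader. To make this less likely, Nacos increases the leader's term by 100 every time the leader publishes a data change.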
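A back-of-the-envelope sketch of why the +100 bump helps. It assumes that each failed election on the minority side raises that side's term by exactly 1 and that each publish on the leader adds 100 (the increment stated above). The class and method names are hypothetical.

```java
// Hypothetical arithmetic sketch of the term race during a partition; not Nacos code.
class TermBumpSketch {
    static final long PUBLISH_TERM_INCREASE = 100; // per data change, as described above

    static long leaderTerm(long start, int publishes) {
        return start + PUBLISH_TERM_INCREASE * publishes;
    }

    static long minorityTerm(long start, int failedElections) {
        return start + failedElections; // assumption: +1 per failed election round
    }

    /** After the partition heals, followers accept the old leader's heartbeat only if its term is not smaller. */
    static boolean oldLeaderSurvives(long leaderTerm, long minorityTerm) {
        return leaderTerm >= minorityTerm;
    }

    public static void main(String[] args) {
        long leader = leaderTerm(10, 3);      // 310
        long minority = minorityTerm(10, 50); // 60
        System.out.println(leader + " vs " + minority + " -> " + oldLeaderSurvives(leader, minority)); // 310 vs 60 -> true
    }
}
```

Without any publishes the leader's term stays at 10 and the minority side wins after a single failed election, so the bump only helps while the leader keeps receiving writes during the partition. It reduces the risk but does not remove it.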

Nacos also accepts data writes in essentially the same way as Raft; for details, see: https://blog.csdn.net/F_Hello_World/article/details/106911636

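Leader election in ZooKeeper (the source code in this post is from zookeeper-3.5.8-release):

Older versions (up to 3.4.0) provided three election algorithm implementations: AuthFastLeaderElection, FastLeaderElection and LeaderElection. Since 3.4.0 the other two have been deprecated, and only FastLeaderElection remains. The algorithm in use is selected in QuorumPeer's startLeaderElection.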

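ZooKeeper currently has the following three roles:

- leader: handles all transactional requests (i.e. Proposals) and coordinates the servers in the cluster.

- follower: votes in leader elections, can itself become leader, and serves read requests.

- observer: behaves like a follower, except that it takes part in neither leader elections nor the voting on proposals. Observers were introduced (in 3.3.0) to increase the cluster's read capacity.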
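Because observers do not vote, adding observers increases read capacity without enlarging the quorum. A minimal sketch of that counting rule, assuming a plain majority over participants. The class, enum and method names are hypothetical; ZooKeeper's real check lives in its QuorumVerifier implementations, such as QuorumMaj.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of a majority quorum that ignores observers; not ZooKeeper's code.
class ObserverQuorumSketch {
    enum Role { PARTICIPANT, OBSERVER }

    /** True if strictly more than half of the voting members (participants) are in acks. */
    static boolean containsQuorum(Map<Long, Role> members, Set<Long> acks) {
        int voters = 0;
        int votingAcks = 0;
        for (Map.Entry<Long, Role> e : members.entrySet()) {
            if (e.getValue() != Role.PARTICIPANT) {
                continue; // observers never count toward the quorum
            }
            voters++;
            if (acks.contains(e.getKey())) {
                votingAcks++;
            }
        }
        return votingAcks > voters / 2;
    }

    public static void main(String[] args) {
        Map<Long, Role> members = new LinkedHashMap<>();
        members.put(1L, Role.PARTICIPANT);
        members.put(2L, Role.PARTICIPANT);
        members.put(3L, Role.PARTICIPANT);
        members.put(4L, Role.OBSERVER);
        members.put(5L, Role.OBSERVER);
        Set<Long> acks = new HashSet<>(Arrays.asList(1L, 4L, 5L));
        System.out.println(containsQuorum(members, acks)); // false: only 1 of 3 voters
        acks.add(2L);
        System.out.println(containsQuorum(members, acks)); // true: 2 of 3 voters
    }
}
```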

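The rest of this section focuses on the FastLeaderElection class.

First, an overview of the ZooKeeper election process:

An election takes place in two situations: when ZooKeeper starts up, and when the leader crashes and the cluster has to recover.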

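Key classes:

- Notification: a vote received from another server, containing its election epoch, zxid (transaction id), server id, etc.

- ToSend: a vote to be sent to another server, carrying the same kind of information (election epoch, zxid, server id, etc.).

- Messenger: holds two worker threads. WorkerReceiver parses the ByteBuffers that arrive from the network into Notifications, and WorkerSender turns ToSend messages into ByteBuffers and sends them out.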
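To make "ByteBuffer to Notification" concrete, here is a minimal sketch of the wire layout, with field order taken from buildMsg(...) and WorkerReceiver.run() in the listing below. It is an illustration, not ZooKeeper's code. The class and method names are hypothetical, and decode only returns the fixed-size fields, skipping the configuration bytes.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical sketch of the election notification layout; not ZooKeeper's API.
class WireFormatSketch {
    static ByteBuffer encode(int state, long leader, long zxid, long electionEpoch,
                             long peerEpoch, byte[] config) {
        ByteBuffer buf = ByteBuffer.allocate(44 + config.length);
        buf.putInt(state).putLong(leader).putLong(zxid).putLong(electionEpoch).putLong(peerEpoch)
           .putInt(0x2)            // message format version (Notification.CURRENTVERSION)
           .putInt(config.length)  // length of the configuration data that follows
           .put(config);
        buf.flip();
        return buf;
    }

    /** Returns {state, leader, zxid, electionEpoch, peerEpoch, version}; config bytes are skipped. */
    static long[] decode(ByteBuffer buf) {
        int capacity = buf.capacity();
        if (capacity < 28) {
            throw new IllegalArgumentException("short message: " + capacity + " bytes");
        }
        long state = buf.getInt();
        long leader = buf.getLong();
        long zxid = buf.getLong();
        long electionEpoch = buf.getLong();
        // 28-byte messages predate peerEpoch; derive it from the zxid's high 32 bits instead
        long peerEpoch = (capacity == 28) ? (zxid >> 32) : buf.getLong();
        // 28- and 40-byte messages carry no version field
        long version = (capacity <= 40) ? 0 : buf.getInt();
        return new long[] {state, leader, zxid, electionEpoch, peerEpoch, version};
    }

    public static void main(String[] args) {
        byte[] cfg = "server.1=host1:2888:3888".getBytes(StandardCharsets.UTF_8);
        long[] fields = decode(encode(0, 3, 0x100000005L, 2, 1, cfg));
        System.out.println(Arrays.toString(fields)); // [0, 3, 4294967301, 2, 1, 2]
    }
}
```

Sizing messages by format generation is what allows WorkerReceiver to stay compatible with older peers: the buffer's length alone tells it which optional fields are present.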

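Core methods:

- sendNotifications(): broadcasts a ToSend message to every voting member of the cluster.

- totalOrderPredicate(): compares a newly received vote with the current one to decide whether the server it proposes is a better leader. It compares, in order, the epoch, the zxid and the server id (myid).

- termPredicate(): given a set of votes, decides whether enough of them (more than half) agree to declare the election over.

- checkLeader(): checks that the elected leader is alive, so that the cluster does not keep electing a server that has crashed or is no longer leading.

- lookForLeader(): the core method that actually elects the leader.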
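The three predicates can be condensed into a few lines each. This is a simplified sketch, not ZooKeeper's code, and it makes several assumptions: all servers have equal weight, there is a single QuorumVerifier (no reconfiguration), votes are compared only by (leader, zxid, peerEpoch), and values such as myId and logicalclock are passed in explicitly instead of being read from QuorumPeer. All names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of totalOrderPredicate / termPredicate / checkLeader; not ZooKeeper's API.
class FlePredicatesSketch {
    enum State { LOOKING, FOLLOWING, LEADING, OBSERVING }

    static final class Vote {
        final long leader, zxid, peerEpoch;
        final State state; // state of the server that sent this vote

        Vote(long leader, long zxid, long peerEpoch, State state) {
            this.leader = leader; this.zxid = zxid; this.peerEpoch = peerEpoch; this.state = state;
        }

        boolean sameProposal(Vote o) {
            return leader == o.leader && zxid == o.zxid && peerEpoch == o.peerEpoch;
        }
    }

    // totalOrderPredicate: peer epoch first, then zxid, then server id
    static boolean totalOrder(long newId, long newZxid, long newEpoch, long curId, long curZxid, long curEpoch) {
        return newEpoch > curEpoch
            || (newEpoch == curEpoch && (newZxid > curZxid || (newZxid == curZxid && newId > curId)));
    }

    // termPredicate: strictly more than half of the voters back the same proposal
    static boolean termPredicate(Map<Long, Vote> votes, Vote vote, int voters) {
        long acks = votes.values().stream().filter(vote::sameProposal).count();
        return acks > voters / 2;
    }

    // checkLeader: another leader must report LEADING itself; if we are the leader, rounds must match
    static boolean checkLeader(Map<Long, Vote> votes, long leader, long myId, long electionEpoch, long logicalclock) {
        if (leader != myId) {
            Vote fromLeader = votes.get(leader);
            return fromLeader != null && fromLeader.state == State.LEADING;
        }
        return logicalclock == electionEpoch;
    }

    public static void main(String[] args) {
        System.out.println(totalOrder(2, 0x100, 1, 1, 0x100, 1)); // true: tie on epoch and zxid, higher id wins
        System.out.println(totalOrder(3, 0x0ff, 1, 2, 0x100, 1)); // false: lower zxid loses despite higher id

        Map<Long, Vote> votes = new HashMap<>();
        votes.put(1L, new Vote(3, 0x100, 1, State.FOLLOWING));
        votes.put(2L, new Vote(3, 0x100, 1, State.FOLLOWING));
        Vote proposal = new Vote(3, 0x100, 1, State.LOOKING);
        System.out.println(termPredicate(votes, proposal, 3)); // true: 2 of 3
        System.out.println(checkLeader(votes, 3, 1, 5, 5));    // false: nothing heard from server 3 itself
        votes.put(3L, new Vote(3, 0x100, 1, State.LEADING));
        System.out.println(checkLeader(votes, 3, 1, 5, 5));    // true
    }
}
```

Comparing the epoch before the zxid means that a server which has seen a newer leader epoch always wins, even if a server stuck in an older epoch has logged more transactions.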

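Overall election flow (lookForLeader()):

Step 1: register the JMX bean, increment the logical clock (election epoch), initialize the vote from the server's own data, and broadcast it.

Step 2: while the server is still LOOKING, keep pulling Notifications from recvqueue. If none arrives before the timeout, check whether every queued message has been delivered. If it has, broadcast the vote again; otherwise the connections may have dropped, so reconnect to all peers. Each idle poll doubles the timeout, up to maxNotificationInterval.

Step 3: process the received Notification, branching on the sender's state: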

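3.1: If the sender is LOOKING, the key question is whether its election epoch matches ours (a vote only counts within the same round). There are three cases:

1: The Notification's election epoch is greater than ours: adopt its epoch, discard the votes received so far, compare the Notification's vote with our own initial vote to pick the better one, and broadcast the result.

2: The Notification's election epoch is smaller than ours: ignore it and only log it.

3: The epochs are equal, so both votes belong to the same round: compare our current leader proposal with the Notification's. If the Notification's is better, adopt it and broadcast it. This speeds up the election: "I found a better candidate for leader, so everyone should vote for it, as I do."

In cases 1 and 3 the vote is then recorded in recvset and the votes are tallied. If there is no majority yet, move on to the next message. If there is a majority, keep reading the remaining Notifications. If one of them proposes a better leader, it is put back into recvqueue and the main loop continues, so the better vote is processed as usual and counting goes on. If no new Notification arrives within finalizeWait, the leader is confirmed: the server switches to its new state, and recvqueue is cleared (even if a better server joins later, its Notifications are no longer processed).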

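3.2: If the sender is OBSERVING, ignore the Notification.

3.3: If the sender is FOLLOWING or LEADING, a leader has already been elected, and the Notification serves to speed up this server's election. Each server decides its own role, so because of timing or network delays some servers may already be FOLLOWING while others have not yet settled on the leader. Once this server receives the leader's information, it can finish its own election early.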
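The LOOKING branch (3.1) can be condensed into a small state machine. This is a simplified sketch with hypothetical names, and it makes the following assumptions:

- Votes are compared by zxid and server id only; the real totalOrderPredicate compares the peer epoch first.
- This server's own broadcast is treated as an ordinary incoming vote.
- The finalizeWait loop, voter validation and the FOLLOWING/LEADING branch are omitted.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of lookForLeader's LOOKING branch; not ZooKeeper's code.
class LookingBranchSketch {
    static final class Vote {
        final long leader, zxid;

        Vote(long leader, long zxid) { this.leader = leader; this.zxid = zxid; }

        boolean betterThan(Vote o) { return zxid > o.zxid || (zxid == o.zxid && leader > o.leader); }

        @Override public boolean equals(Object o) {
            return o instanceof Vote && ((Vote) o).leader == leader && ((Vote) o).zxid == zxid;
        }

        @Override public int hashCode() { return Long.hashCode(leader) ^ Long.hashCode(zxid); }
    }

    final int clusterSize;
    final Vote initialVote;                 // our own vote, built from our own data
    long logicalclock = 1;                  // current election round
    Vote proposal;                          // leader we currently propose
    final Map<Long, Vote> recvset = new HashMap<>();
    int broadcasts = 0;                     // counts where sendNotifications() would be called

    LookingBranchSketch(long myId, long myZxid, int clusterSize) {
        this.initialVote = new Vote(myId, myZxid);
        this.proposal = initialVote;
        this.clusterSize = clusterSize;
    }

    /** Handles a vote from a LOOKING peer; returns true if a majority now backs our proposal. */
    boolean onLooking(long sid, long electionEpoch, Vote v) {
        if (electionEpoch > logicalclock) {          // case 1: newer round
            logicalclock = electionEpoch;
            recvset.clear();
            proposal = v.betterThan(initialVote) ? v : initialVote;
            broadcasts++;
        } else if (electionEpoch < logicalclock) {   // case 2: stale round, ignore
            return false;
        } else if (v.betterThan(proposal)) {         // case 3: same round, better candidate
            proposal = v;
            broadcasts++;
        }
        recvset.put(sid, v);
        long acks = recvset.values().stream().filter(proposal::equals).count();
        return acks > clusterSize / 2;
    }

    public static void main(String[] args) {
        LookingBranchSketch s3 = new LookingBranchSketch(3, 5, 3);
        System.out.println(s3.onLooking(3, 1, new Vote(3, 5))); // false: only our own vote
        System.out.println(s3.onLooking(1, 1, new Vote(1, 7))); // false: switched to server 1, 1 ack
        System.out.println(s3.onLooking(3, 1, new Vote(1, 7))); // true: our re-broadcast loops back, 2 of 3
        System.out.println(s3.onLooking(2, 0, new Vote(2, 9))); // false: stale round is ignored
    }
}
```

In the real code a `true` result here is not the end yet: lookForLeader still waits up to finalizeWait for a better vote before it changes state.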

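Note: WorkerReceiver pre-processes the Notifications it receives before lookForLeader sees them.

WorkerReceiver takes message ByteBuffers from QuorumCnxManager's receive queue (recvQueue) and parses them into Notifications. It first checks whether the sender has voting rights (it must be a voting member, not an observer). If it does not, WorkerReceiver replies with the vote this server currently holds. If it does, WorkerReceiver checks whether this server is LOOKING. If so, it accepts the vote, and if the sender is also LOOKING but its election epoch is smaller than ours, it sends the sender our latest leader proposal to help it catch up. If this server is not LOOKING, it already considers the leader chosen. In that case, if the sender is still LOOKING, it sends the sender the leader's information so that the sender can settle on the leader sooner.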
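WorkerReceiver's routing decision can be summarized as a pure function. This is a minimal sketch with hypothetical names. It returns a label for the action instead of touching real queues, and it mirrors the branches described above rather than copying ZooKeeper's code.

```java
// Hypothetical sketch of WorkerReceiver's routing; not ZooKeeper's API.
class ReceiverRoutingSketch {
    enum State { LOOKING, FOLLOWING, LEADING, OBSERVING }

    static String route(boolean senderIsVoter, State myState, State senderState,
                        long senderEpoch, long myLogicalClock) {
        if (!senderIsVoter) {
            return "reply-current-vote";            // non-voting sender: answer immediately
        }
        if (myState == State.LOOKING) {
            if (senderState == State.LOOKING && senderEpoch < myLogicalClock) {
                return "enqueue+reply-proposal";    // accept the vote and help a lagging peer catch up
            }
            return "enqueue";                       // hand it to lookForLeader via recvqueue
        }
        if (senderState == State.LOOKING) {
            return "reply-leader";                  // we already know the leader: tell the sender
        }
        return "drop";                              // both sides have settled: nothing to do
    }

    public static void main(String[] args) {
        System.out.println(route(false, State.LOOKING, State.OBSERVING, 3, 3)); // reply-current-vote
        System.out.println(route(true, State.LOOKING, State.LOOKING, 2, 3));    // enqueue+reply-proposal
        System.out.println(route(true, State.FOLLOWING, State.LOOKING, 3, 3));  // reply-leader
    }
}
```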

FastLeaderElection source code walkthrough:

/**

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.zookeeper.server.quorum;

import java.io.IOException;

import java.nio.BufferUnderflowException;

import java.nio.ByteBuffer;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicLong;

import org.apache.zookeeper.common.Time;

import org.apache.zookeeper.jmx.MBeanRegistry;

import org.apache.zookeeper.server.ZooKeeperThread;

import org.apache.zookeeper.server.quorum.QuorumCnxManager.Message;

import org.apache.zookeeper.server.quorum.QuorumPeer.LearnerType;

import org.apache.zookeeper.server.quorum.QuorumPeer.ServerState;

import org.apache.zookeeper.server.quorum.QuorumPeerConfig.ConfigException;

import org.apache.zookeeper.server.quorum.flexible.QuorumVerifier;

import org.apache.zookeeper.server.util.ZxidUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* Implementation of leader election using TCP. It uses an object of the class

* QuorumCnxManager to manage connections. Otherwise, the algorithm is push-based

* as with the other UDP implementations.

*

* There are a few parameters that can be tuned to change its behavior. First,

* finalizeWait determines the amount of time to wait until deciding upon a leader.

* This is part of the leader election algorithm.

*/

public class FastLeaderElection implements Election {

private static final Logger LOG = LoggerFactory.getLogger(FastLeaderElection.class);

/**

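* How long to wait before settling on a leader. lookForLeader also uses it as the initial poll timeout, which doubles after every idle poll, up to maxNotificationInterval.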

*/

final static int finalizeWait = 200;

/**

* Upper bound on the amount of time between two consecutive

* notification checks. This impacts the amount of time to get

* the system up again after long partitions. Currently 60 seconds.

*/

final static int maxNotificationInterval = 60000;

/**

* Connection manager. Fast leader election uses TCP for

* communication between peers, and QuorumCnxManager manages

* such connections.

*/

QuorumCnxManager manager;

/**

* Notifications are messages that let other peers know that

* a given peer has changed its vote, either because it has

* joined leader election or because it learned of another

* peer with higher zxid or same zxid and higher server id

*/

/**

* Notification

* Holds all the information carried by one vote.

*/

static public class Notification {

/*

* Format version, introduced in 3.4.6

*/

// current message format version

public final static int CURRENTVERSION = 0x2;

int version;

/*

* Proposed leader

*/

// id of the proposed leader

long leader;

/*

* zxid of the proposed leader

*/

// zxid (last transaction id) of the proposed leader

long zxid;

/*

* Epoch

*/

// election epoch (round) of the sender; votes are only compared within the same round

long electionEpoch;

/*

* current state of sender

*/

// state of the sender: LOOKING, FOLLOWING, LEADING or OBSERVING

// every server starts as LOOKING and switches state once the election is over

QuorumPeer.ServerState state;

/*

* Address of sender

*/

// id (myid) of the sender, set in the configuration and unique per server

long sid;

// QuorumVerifier (cluster configuration) carried in the message

QuorumVerifier qv;

/*

* epoch of the proposed leader

*/

// epoch of the proposed leader (its peer epoch, not the election round)

long peerEpoch;

}

static byte[] dummyData = new byte[0];

/**

* Messages that a peer wants to send to other peers.

* These messages can be both Notifications and Acks

* of reception of notification.

*/

static public class ToSend {

// message type

static enum mType {crequest, challenge, notification, ack}

ToSend(mType type,

long leader,

long zxid,

long electionEpoch,

ServerState state,

long sid,

long peerEpoch,

byte[] configData) {

this.leader = leader;

this.zxid = zxid;

this.electionEpoch = electionEpoch;

this.state = state;

this.sid = sid;

this.peerEpoch = peerEpoch;

this.configData = configData;

}

/*

* Proposed leader in the case of notification

*/

// for a notification: the proposed leader

long leader;

/*

* id contains the tag for acks, and zxid for notifications

*/

// for a notification: the zxid of the proposed leader

long zxid;

/*

* Epoch

*/

// election epoch (round) of the sender

long electionEpoch;

/*

* Current state;

*/

// current state of the sender

QuorumPeer.ServerState state;

/*

* Address of recipient

*/

// id of the recipient server

long sid;

/*

* Used to send a QuorumVerifier (configuration info)

*/

// serialized QuorumVerifier (configuration data)

byte[] configData = dummyData;

/*

* Leader epoch

*/

// epoch of the proposed leader

long peerEpoch;

}

// queue of messages to send

LinkedBlockingQueue<ToSend> sendqueue;

// queue of received Notifications

LinkedBlockingQueue<Notification> recvqueue;

/**

* Multi-threaded implementation of message handler. Messenger

* implements two sub-classes: WorkReceiver and WorkSender. The

* functionality of each is obvious from the name. Each of these

* spawns a new thread.

*/

/**

* Messenger

* WorkerReceiver receives votes and WorkerSender sends them;

* each runs in its own thread.

*/

protected class Messenger {

/**

* Receives messages from instance of QuorumCnxManager on

* method run(), and processes such messages.

*/

/**

* WorkerReceiver is a thread (a ZooKeeperThread); its work is done in run().

*/

class WorkerReceiver extends ZooKeeperThread {

// stop flag

volatile boolean stop;

// connection manager (one instance per peer)

QuorumCnxManager manager;

WorkerReceiver(QuorumCnxManager manager) {

super("WorkerReceiver");

this.stop = false;

this.manager = manager;

}

public void run() {

Message response;

// run until stop is set to true (the election instance is shut down)

while (!stop) {

// Sleeps on receive

try {

// poll QuorumCnxManager's receive queue (an ArrayBlockingQueue, which keeps messages in order), waiting up to 3 s

response = manager.pollRecvQueue(3000, TimeUnit.MILLISECONDS);

if(response == null) continue;

// message length in bytes

final int capacity = response.buffer.capacity();

// The current protocol and two previous generations all send at least 28 bytes

// every protocol version sends at least 28 bytes; shorter messages are discarded

if (capacity < 28) {

LOG.error("Got a short response from server {}: {}", response.sid, capacity);

continue;

}

// this is the backwardCompatibility mode in place before ZK-107

// It is for a version of the protocol in which we didn't send peer epoch

// With peer epoch and version the message became 40 bytes

// older ZooKeeper versions send shorter messages, so the length identifies the format

boolean backCompatibility28 = (capacity == 28);

// this is the backwardCompatibility mode for no version information

boolean backCompatibility40 = (capacity == 40);

response.buffer.clear();

// Instantiate Notification and set its attributes

Notification n = new Notification();

int rstate = response.buffer.getInt();

long rleader = response.buffer.getLong();

long rzxid = response.buffer.getLong();

long relectionEpoch = response.buffer.getLong();

long rpeerepoch;

// fields whose presence depends on the sender's message format

int version = 0x0;

QuorumVerifier rqv = null;

try {

if (!backCompatibility28) {

rpeerepoch = response.buffer.getLong();

if (!backCompatibility40) {

/*

* Version added in 3.4.6

*/

version = response.buffer.getInt();

} else {

LOG.info("Backward compatibility mode (36 bits), server id: {}", response.sid);

}

} else {

LOG.info("Backward compatibility mode (28 bits), server id: {}", response.sid);

rpeerepoch = ZxidUtils.getEpochFromZxid(rzxid);

}

// check if we have a version that includes config. If so extract config info from message.

if (version > 0x1) {

int configLength = response.buffer.getInt();

// we want to avoid errors caused by the allocation of a byte array with negative length

// (causing NegativeArraySizeException) or huge length (causing e.g. OutOfMemoryError)

if (configLength < 0 || configLength > capacity) {

throw new IOException(String.format("Invalid configLength in notification message! sid=%d, capacity=%d, version=%d, configLength=%d",

response.sid, capacity, version, configLength));

}

byte b[] = new byte[configLength];

// read the configuration data

response.buffer.get(b);

synchronized (self) {

try {

// parse the QuorumVerifier carried in the vote

rqv = self.configFromString(new String(b));

// this server's current QuorumVerifier

QuorumVerifier curQV = self.getQuorumVerifier();

// the received configuration is newer than ours

if (rqv.getVersion() > curQV.getVersion()) {

LOG.info("{} Received version: {} my version: {}", self.getId(),

Long.toHexString(rqv.getVersion()),

Long.toHexString(self.getQuorumVerifier().getVersion()));

if (self.getPeerState() == ServerState.LOOKING) {

LOG.debug("Invoking processReconfig(), state: {}", self.getServerState());

// apply the newer configuration locally

self.processReconfig(rqv, null, null, false);

// if the configuration really changed, restart leader election

if (!rqv.equals(curQV)) {

LOG.info("restarting leader election");

self.shuttingDownLE = true;

self.getElectionAlg().shutdown();

break;

}

} else {

LOG.debug("Skip processReconfig(), state: {}", self.getServerState());

}

}

} catch (IOException | ConfigException e) {

LOG.error("Something went wrong while processing config received from {}. " +

"Continue to process the notification message without handling the configuration.", response.sid);

}

}

} else {

LOG.info("Backward compatibility mode (before reconfig), server id: {}", response.sid);

}

} catch (BufferUnderflowException | IOException e) {

LOG.warn("Skipping the processing of a partial / malformed response message sent by sid={} (message length: {})",

response.sid, capacity, e);

continue;

}

/*

* If it is from a non-voting server (such as an observer or

* a non-voting follower), respond right away.

*/

// the sender cannot vote: reply right away with this server's current vote

// validVoter() checks whether sid is a voting member

if(!validVoter(response.sid)) {

// this server's current vote

Vote current = self.getCurrentVote();

QuorumVerifier qv = self.getQuorumVerifier();

// build a ToSend of type notification

ToSend notmsg = new ToSend(ToSend.mType.notification,

current.getId(),

current.getZxid(),

logicalclock.get(),

self.getPeerState(),

response.sid,

current.getPeerEpoch(),

qv.toString().getBytes());

// append it to the send queue

sendqueue.offer(notmsg);

} else {

// Receive new message

if (LOG.isDebugEnabled()) {

LOG.debug("Receive new notification message. My id = "

+ self.getId());

}

// State of peer that sent this message

// decode the sender's state

QuorumPeer.ServerState ackstate = QuorumPeer.ServerState.LOOKING;

switch (rstate) {

case 0:

ackstate = QuorumPeer.ServerState.LOOKING;

break;

case 1:

ackstate = QuorumPeer.ServerState.FOLLOWING;

break;

case 2:

ackstate = QuorumPeer.ServerState.LEADING;

break;

case 3:

ackstate = QuorumPeer.ServerState.OBSERVING;

break;

default:

continue;

}

// fill in the Notification

n.leader = rleader;

n.zxid = rzxid;

n.electionEpoch = relectionEpoch;

n.state = ackstate;

n.sid = response.sid;

n.peerEpoch = rpeerepoch;

n.version = version;

n.qv = rqv;

/*

* Print notification info

*/

// log the Notification if INFO logging is enabled

if(LOG.isInfoEnabled()){

printNotification(n);

}

/*

* If this server is looking, then send proposed leader

*/

/**

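* If this server is LOOKING, it accepts the vote directly after some pre-processing: if the sender is also LOOKING and its election epoch is behind ours, the sender is sent our latest vote; otherwise nothing else happens.

* If this server is not LOOKING, it checks whether the sender is still LOOKING; if so, it sends the sender the confirmed leader so the sender can quickly join the already-elected cluster. Nothing else is done here.

* The actual election logic lives in lookForLeader.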

*/

if(self.getPeerState() == QuorumPeer.ServerState.LOOKING){

// append the vote to recvqueue for lookForLeader

recvqueue.offer(n);

/*

* Send a notification back if the peer that sent this

* message is also looking and its logical clock is

* lagging behind.

*/

if((ackstate == QuorumPeer.ServerState.LOOKING)

&& (n.electionEpoch < logicalclock.get())){

// our current proposal (proposed leader) as a Vote

Vote v = getVote();

QuorumVerifier qv = self.getQuorumVerifier();

// build the reply

ToSend notmsg = new ToSend(ToSend.mType.notification,

v.getId(),

v.getZxid(),

logicalclock.get(),

self.getPeerState(),

response.sid,

v.getPeerEpoch(),

qv.toString().getBytes());

// append it to the send queue

sendqueue.offer(notmsg);

}

} else {

/*

* If this server is not looking, but the one that sent the ack

* is looking, then send back what it believes to be the leader.

*/

Vote current = self.getCurrentVote();

// the sender is still LOOKING

if(ackstate == QuorumPeer.ServerState.LOOKING){

// log only when DEBUG is enabled

if(LOG.isDebugEnabled()){

LOG.debug("Sending new notification. My id ={} recipient={} zxid=0x{} leader={} config version = {}",

self.getId(),

response.sid,

Long.toHexString(current.getZxid()),

current.getId(),

Long.toHexString(self.getQuorumVerifier().getVersion()));

}

QuorumVerifier qv = self.getQuorumVerifier();

// send the sender our current vote (the leader) so it can finish its election quickly

ToSend notmsg = new ToSend(

ToSend.mType.notification,

current.getId(),

current.getZxid(),

current.getElectionEpoch(),

self.getPeerState(),

response.sid,

current.getPeerEpoch(),

qv.toString().getBytes());

// append it to the send queue

sendqueue.offer(notmsg);

}

}

}

} catch (InterruptedException e) {

LOG.warn("Interrupted Exception while waiting for new message" +

e.toString());

}

}

LOG.info("WorkerReceiver is down");

}

}

/**

* This worker simply dequeues a message to send and

* and queues it on the manager's queue.

*/

class WorkerSender extends ZooKeeperThread {

volatile boolean stop;

QuorumCnxManager manager;

WorkerSender(QuorumCnxManager manager){

super("WorkerSender");

this.stop = false;

this.manager = manager;

}

public void run() {

while (!stop) {

try {

// take the next ToSend from sendqueue, waiting up to 3 s when it is empty

ToSend m = sendqueue.poll(3000, TimeUnit.MILLISECONDS);

if(m == null) continue;

process(m);

} catch (InterruptedException e) {

break;

}

}

LOG.info("WorkerSender is down");

}

/**

* Called by run() once there is a new message to send.

*

* @param m message to send

*/

// send one message

void process(ToSend m) {

// serialize the message into a ByteBuffer and send it through the manager

ByteBuffer requestBuffer = buildMsg(m.state.ordinal(),

m.leader,

m.zxid,

m.electionEpoch,

m.peerEpoch,

m.configData);

// sid identifies the destination server

manager.toSend(m.sid, requestBuffer);

}

}

WorkerSender ws;

WorkerReceiver wr;

// thread running WorkerSender.run()

Thread wsThread = null;

// thread running WorkerReceiver.run()

Thread wrThread = null;

/**

* Constructor of class Messenger.

*

* @param manager Connection manager

*/

// create both workers and their daemon threads (they are started later by start())

Messenger(QuorumCnxManager manager) {

this.ws = new WorkerSender(manager);

this.wsThread = new Thread(this.ws,

"WorkerSender[myid=" + self.getId() + "]");

this.wsThread.setDaemon(true);

this.wr = new WorkerReceiver(manager);

this.wrThread = new Thread(this.wr,

"WorkerReceiver[myid=" + self.getId() + "]");

this.wrThread.setDaemon(true);

}

/**

* Starts instances of WorkerSender and WorkerReceiver

*/

// start the sender and receiver threads

void start(){

this.wsThread.start();

this.wrThread.start();

}

/**

* Stops instances of WorkerSender and WorkerReceiver

*/

void halt(){

this.ws.stop = true;

this.wr.stop = true;

}

}

// QuorumPeer hosts the ZooKeeper server instance (ZooKeeperServer) and represents one machine in the cluster;

// while running, it keeps checking the server's state and starts a leader election when needed

QuorumPeer self;

// Messenger instance

Messenger messenger;

// logical clock: this server's election round, incremented at the start of each election

AtomicLong logicalclock = new AtomicLong(); /* Election instance */

// id of the currently proposed leader

long proposedLeader;

// last zxid of the currently proposed leader

long proposedZxid;

// epoch of the currently proposed leader

long proposedEpoch;

/**

* Returns the current vlue of the logical clock counter

*/

public long getLogicalClock(){

return logicalclock.get();

}

/**

* Serializes a vote into a 40-byte ByteBuffer to send to other servers (no configuration data).

* Only used in tests.

* @return

*/

static ByteBuffer buildMsg(int state,

long leader,

long zxid,

long electionEpoch,

long epoch) {

byte requestBytes[] = new byte[40];

ByteBuffer requestBuffer = ByteBuffer.wrap(requestBytes);

/*

* Building notification packet to send, this is called directly only in tests

*/

requestBuffer.clear();

requestBuffer.putInt(state);

requestBuffer.putLong(leader);

requestBuffer.putLong(zxid);

requestBuffer.putLong(electionEpoch);

requestBuffer.putLong(epoch);

requestBuffer.putInt(0x1);

return requestBuffer;

}

/**

* Serializes a vote, including the configuration data, into a ByteBuffer to send to other servers.

* @return

*/

static ByteBuffer buildMsg(int state,

long leader,

long zxid,

long electionEpoch,

long epoch,

byte[] configData) {

byte requestBytes[] = new byte[44 + configData.length];

ByteBuffer requestBuffer = ByteBuffer.wrap(requestBytes);

/*

* Building notification packet to send

*/

requestBuffer.clear();

requestBuffer.putInt(state);

requestBuffer.putLong(leader);

requestBuffer.putLong(zxid);

requestBuffer.putLong(electionEpoch);

requestBuffer.putLong(epoch);

requestBuffer.putInt(Notification.CURRENTVERSION);

requestBuffer.putInt(configData.length);

requestBuffer.put(configData);

return requestBuffer;

}

/**

* Constructor of FastLeaderElection. It takes two parameters, one

* is the QuorumPeer object that instantiated this object, and the other

* is the connection manager. Such an object should be created only once

* by each peer during an instance of the ZooKeeper service.

*

* @param self QuorumPeer that created this object

* @param manager Connection manager

*/

public FastLeaderElection(QuorumPeer self, QuorumCnxManager manager){

this.stop = false;

this.manager = manager;

// initialize the election state; the worker threads are started later by start()

starter(self, manager);

}

/**

* This method is invoked by the constructor. Because it is a

* part of the starting procedure of the object that must be on

* any constructor of this class, it is probably best to keep as

* a separate method. As we have a single constructor currently,

* it is not strictly necessary to have it separate.

*

* @param self QuorumPeer that created this object

* @param manager Connection manager

*/

// set up the election state, the queues and the Messenger

private void starter(QuorumPeer self, QuorumCnxManager manager) {

this.self = self;

// no proposed leader yet

proposedLeader = -1;

proposedZxid = -1;

// create the send and receive queues

sendqueue = new LinkedBlockingQueue<ToSend>();

recvqueue = new LinkedBlockingQueue<Notification>();

// create the Messenger (its threads are not started yet)

this.messenger = new Messenger(manager);

}

/**

* This method starts the sender and receiver threads.

*/

public void start() {

this.messenger.start();

}

// when leaving this election instance, discard the votes still left in recvqueue

private void leaveInstance(Vote v) {

if(LOG.isDebugEnabled()){

LOG.debug("About to leave FLE instance: leader={}, zxid=0x{}, my id={}, my state={}",

v.getId(), Long.toHexString(v.getZxid()), self.getId(), self.getPeerState());

}

recvqueue.clear();

}

public QuorumCnxManager getCnxManager(){

return manager;

}

// stop flag of this election instance (false by default)

volatile boolean stop;

// shut down fast leader election

public void shutdown(){

stop = true;

proposedLeader = -1;

proposedZxid = -1;

LOG.debug("Shutting down connection manager");

manager.halt();

LOG.debug("Shutting down messenger");

messenger.halt();

LOG.debug("FLE is down");

}

/**

* Send notifications to all peers upon a change in our vote

*/

// i.e. to every voting member of the current and the next configuration

private void sendNotifications() {

// loop over the voters and queue our vote for each of them

for (long sid : self.getCurrentAndNextConfigVoters()) {

// current quorum configuration

QuorumVerifier qv = self.getQuorumVerifier();

// the leader we currently propose

ToSend notmsg = new ToSend(ToSend.mType.notification,

proposedLeader,

proposedZxid,

logicalclock.get(),

QuorumPeer.ServerState.LOOKING,

sid,

proposedEpoch, qv.toString().getBytes());

if(LOG.isDebugEnabled()){

LOG.debug("Sending Notification: " + proposedLeader + " (n.leader), 0x" +

Long.toHexString(proposedZxid) + " (n.zxid), 0x" + Long.toHexString(logicalclock.get()) +

" (n.round), " + sid + " (recipient), " + self.getId() +

" (myid), 0x" + Long.toHexString(proposedEpoch) + " (n.peerEpoch)");

}

// queue it; WorkerSender sends it asynchronously

sendqueue.offer(notmsg);

}

}

/**

* Logs the contents of a Notification.

* @param n

*/

private void printNotification(Notification n){

LOG.info("Notification: "

+ Long.toHexString(n.version) + " (message format version), "

+ n.leader + " (n.leader), 0x"

+ Long.toHexString(n.zxid) + " (n.zxid), 0x"

+ Long.toHexString(n.electionEpoch) + " (n.round), " + n.state

+ " (n.state), " + n.sid + " (n.sid), 0x"

+ Long.toHexString(n.peerEpoch) + " (n.peerEPoch), "

+ self.getPeerState() + " (my state)"

+ (n.qv!=null ? (Long.toHexString(n.qv.getVersion()) + " (n.config version)"):""));

}

/**

* Check if a pair (server id, zxid) succeeds our

* current vote.

*

* @param id Server identifier

* @param zxid Last zxid observed by the issuer of this vote

*/

/**

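* Compares a newly received vote with the current one to decide whether the newly proposed server is a better leader.

* It compares, in order, the leader epoch, the zxid and the server id (myid).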

* @return

*/

protected boolean totalOrderPredicate(long newId, long newZxid, long newEpoch, long curId, long curZxid, long curEpoch) {

LOG.debug("id: " + newId + ", proposed id: " + curId + ", zxid: 0x" +

Long.toHexString(newZxid) + ", proposed zxid: 0x" + Long.toHexString(curZxid));

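// a server with weight 0 is never proposed as leader; weights come from the QuorumVerifier implementation:

// QuorumMaj gives every server the same weight (1)

// QuorumHierarchical lets servers or groups (e.g. data centers) have different weights; weight 0 means the node should not become leader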

if(self.getQuorumVerifier().getWeight(newId) == 0){

return false;

}

/*

* We return true if one of the following three cases hold:

* 1- New epoch is higher

* 2- New epoch is the same as current epoch, but new zxid is higher

* 3- New epoch is the same as current epoch, new zxid is the same

* as current zxid, but server id is higher.

*/

return ((newEpoch > curEpoch) ||

((newEpoch == curEpoch) &&

((newZxid > curZxid) || ((newZxid == curZxid) && (newId > curId)))));

}

/**

* Termination predicate. Given a set of votes, determines if have

* sufficient to declare the end of the election round.

*

* @param votes

* Set of votes

* @param vote

* Identifier of the vote received last

*/

/**

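* Decides whether a set of votes is enough (more than half) to end the election round.

* In essence: the senders whose vote equals the given vote are collected; if they form a quorum, the election is over.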

*

* @return

*/

protected boolean termPredicate(Map<Long, Vote> votes, Vote vote) {

// tracks acks against each quorum configuration

SyncedLearnerTracker voteSet = new SyncedLearnerTracker();

// add the current configuration

voteSet.addQuorumVerifier(self.getQuorumVerifier());

// during a reconfiguration, the newer (last seen) configuration must reach a quorum too

if (self.getLastSeenQuorumVerifier() != null

&& self.getLastSeenQuorumVerifier().getVersion() > self

.getQuorumVerifier().getVersion()) {

voteSet.addQuorumVerifier(self.getLastSeenQuorumVerifier());

}

/*

* First make the views consistent. Sometimes peers will have different

* zxids for a server depending on timing.

*/

// count every sender whose vote matches the given vote

for (Map.Entry<Long, Vote> entry : votes.entrySet()) {

if (vote.equals(entry.getValue())) {

voteSet.addAck(entry.getKey());

}

}

return voteSet.hasAllQuorums();

}

/**

* In the case there is a leader elected, and a quorum supporting

* this leader, we have to check if the leader has voted and acked

* that it is leading. We need this check to avoid that peers keep

* electing over and over a peer that has crashed and it is no

* longer leading.

*

* @param votes set of votes

* @param leader leader id

* @param electionEpoch epoch id

*/

/**

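* If a server has been elected by a quorum,

* we must check that this leader has voted and acknowledged that it is leading,

* so that the cluster does not keep electing a server that has crashed or is no longer leading.

* If the elected leader is another server, its own vote must report LEADING.

* @param votes set of votes

* @param leader id of the elected leader

* @param electionEpoch election epoch (round) of the votes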

* @return

*/

protected boolean checkLeader(

Map<Long, Vote> votes,

long leader,

long electionEpoch){

// true: the leader is confirmed; false: keep electing

boolean predicate = true;

/*

* If everyone else thinks I'm the leader, I must be the leader.

* The other two checks are just for the case in which I'm not the

* leader. If I'm not the leader and I haven't received a message

* from leader stating that it is leading, then predicate is false.

*/

// the votes must include one sent by the leader itself (usually the reply its WorkerReceiver sent to our notification),

// which shows that the leader is alive and can talk to this server

if(leader != self.getId()){ // the leader is another server

if(votes.get(leader) == null) predicate = false; // no vote from the leader itself yet: keep electing

else if(votes.get(leader).getState() != ServerState.LEADING) predicate = false; // the leader has not (yet) declared itself LEADING

} else if(logicalclock.get() != electionEpoch) { // we are the leader: the quorum must come from our own round

predicate = false;

}

return predicate;

}

/**

* Updates the proposed leader (synchronized).

* @param leader

* @param zxid

* @param epoch

*/

synchronized void updateProposal(long leader, long zxid, long epoch){

if(LOG.isDebugEnabled()){

LOG.debug("Updating proposal: " + leader + " (newleader), 0x"

+ Long.toHexString(zxid) + " (newzxid), " + proposedLeader

+ " (oldleader), 0x" + Long.toHexString(proposedZxid) + " (oldzxid)");

}

proposedLeader = leader;

proposedZxid = zxid;

proposedEpoch = epoch;

}

/**

* Returns a new Vote built from the currently proposed leader.

* @return

*/

synchronized public Vote getVote(){

return new Vote(proposedLeader, proposedZxid, proposedEpoch);

}

/**

* A learning state can be either FOLLOWING or OBSERVING.

* This method simply decides which one depending on the

* role of the server.

*

* @return ServerState

*/

// learner state: FOLLOWING for participants, OBSERVING for observers

private ServerState learningState(){

if(self.getLearnerType() == LearnerType.PARTICIPANT){

LOG.debug("I'm a participant: " + self.getId());

return ServerState.FOLLOWING;

}

else{

LOG.debug("I'm an observer: " + self.getId());

return ServerState.OBSERVING;

}

}

/**

* Returns the initial vote value of server identifier.

*

* @return long

*/

private long getInitId(){

if(self.getQuorumVerifier().getVotingMembers().containsKey(self.getId()))

return self.getId();

else return Long.MIN_VALUE;

}

/**

* Returns initial last logged zxid.

* @return long

*/

private long getInitLastLoggedZxid(){

if(self.getLearnerType() == LearnerType.PARTICIPANT)

return self.getLastLoggedZxid();

else return Long.MIN_VALUE;

}

/**

* Returns the initial vote value of the peer epoch.

* (this server's current epoch; Long.MIN_VALUE for non-participants)

* @return long

*/

private long getPeerEpoch(){

if(self.getLearnerType() == LearnerType.PARTICIPANT)

try {

return self.getCurrentEpoch();

} catch(IOException e) {

RuntimeException re = new RuntimeException(e.getMessage());

re.setStackTrace(e.getStackTrace());

throw re;

}

else return Long.MIN_VALUE;

}

/**

* Starts a new round of leader election. Whenever our QuorumPeer

* changes its state to LOOKING, this method is invoked, and it

* sends notifications to all other peers.

*/

public Vote lookForLeader() throws InterruptedException {

try {

// register the JMX bean

self.jmxLeaderElectionBean = new LeaderElectionBean();

MBeanRegistry.getInstance().register(

self.jmxLeaderElectionBean, self.jmxLocalPeerBean);

} catch (Exception e) {

LOG.warn("Failed to register with JMX", e);

self.jmxLeaderElectionBean = null;

}

// record when fast leader election first started

if (self.start_fle == 0) {

self.start_fle = Time.currentElapsedTime();

}

try {

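// latest vote received from each server in this round: sid -> Vote

HashMap<Long, Vote> recvset = new HashMap<Long, Vote>();

// votes from servers that are no longer LOOKING (FOLLOWING or LEADING)

HashMap<Long, Vote> outofelection = new HashMap<Long, Vote>();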

// current poll timeout, starting at finalizeWait

int notTimeout = finalizeWait;

synchronized(this){

//选举周期+1

logicalclock.incrementAndGet();

//初始化 被选举的leader信息

updateProposal(getInitId(), getInitLastLoggedZxid(), getPeerEpoch());

}

LOG.info("New election. My id = " + self.getId() +

", proposed zxid=0x" + Long.toHexString(proposedZxid));

//广播发送选票信息

sendNotifications();

        /*
         * Loop in which we exchange notifications until we find a leader
         */
        while ((self.getPeerState() == ServerState.LOOKING) &&
                (!stop)){
            /*
             * Remove next notification from queue. poll() returns null
             * if nothing arrives within notTimeout milliseconds.
             */
            Notification n = recvqueue.poll(notTimeout,
                    TimeUnit.MILLISECONDS);

            /*
             * Sends more notifications if haven't received enough.
             * Otherwise processes new notification.
             */
            if(n == null){
                // All queued messages have been delivered: broadcast our vote again
                if(manager.haveDelivered()){
                    sendNotifications();
                } else {
                    // Messages are still pending, probably because connections
                    // to other servers were lost: try to reconnect to all peers
                    manager.connectAll();
                }

                /*
                 * Exponential backoff: double the poll timeout,
                 * capped at maxNotificationInterval
                 */
                int tmpTimeOut = notTimeout*2;
                notTimeout = (tmpTimeOut < maxNotificationInterval?
                        tmpTimeOut : maxNotificationInterval);
                LOG.info("Notification time out: " + notTimeout);
            }

            // Validate the notification: both the sender and its proposed leader
            // must be voting members
            else if (validVoter(n.sid) && validVoter(n.leader)) {
                /*
                 * Only proceed if the vote comes from a replica in the current or next
                 * voting view for a replica in the current or next voting view.
                 */
                // Dispatch on the sender's state
                switch (n.state) {
                case LOOKING:
                    // If notification > current, replace and send messages out:
                    // the sender is in a newer election round than we are
                    if (n.electionEpoch > logicalclock.get()) {
                        // Adopt the newer election epoch
                        logicalclock.set(n.electionEpoch);
                        // Discard the votes collected in the older round
                        recvset.clear();
                        // Our current proposal belongs to the old round, so compare the
                        // notification's candidate against this server's initial vote
                        if(totalOrderPredicate(n.leader, n.zxid, n.peerEpoch,
                                getInitId(), getInitLastLoggedZxid(), getPeerEpoch())) {
                            // The notification's candidate is better: adopt it
                            updateProposal(n.leader, n.zxid, n.peerEpoch);
                        } else {
                            // Otherwise fall back to our own initial vote
                            updateProposal(getInitId(),
                                    getInitLastLoggedZxid(),
                                    getPeerEpoch());
                        }
                        // Broadcast the updated vote (the same vote may be sent more than once)
                        sendNotifications();
                    } else if (n.electionEpoch < logicalclock.get()) { // Stale round: ignore it
                        if(LOG.isDebugEnabled()){
                            LOG.debug("Notification election epoch is smaller than logicalclock. n.electionEpoch = 0x"
                                    + Long.toHexString(n.electionEpoch)
                                    + ", logicalclock=0x" + Long.toHexString(logicalclock.get()));
                        }
                        break;
                    } else if (totalOrderPredicate(n.leader, n.zxid, n.peerEpoch,
                            proposedLeader, proposedZxid, proposedEpoch)) { // Same round: compare the notification's candidate with our current proposal
                        // The notification's candidate is better: adopt it
                        updateProposal(n.leader, n.zxid, n.peerEpoch);
                        // Broadcast the changed vote
                        sendNotifications();
                    }

                    // Debug logging
                    if(LOG.isDebugEnabled()){
                        LOG.debug("Adding vote: from=" + n.sid +
                                ", proposed leader=" + n.leader +
                                ", proposed zxid=0x" + Long.toHexString(n.zxid) +
                                ", proposed election epoch=0x" + Long.toHexString(n.electionEpoch));
                    }
                    // don't care about the version if it's in LOOKING state:
                    // record the sender's latest vote
                    recvset.put(n.sid, new Vote(n.leader, n.zxid, n.electionEpoch, n.peerEpoch));

                    // Check whether our current proposal is backed by a quorum
                    // (more than half) of the votes in recvset
                    if (termPredicate(recvset,
                            new Vote(proposedLeader, proposedZxid,
                                    logicalclock.get(), proposedEpoch))) {
                        // Verify if there is any change in the proposed leader.
                        // Drain the remaining notifications, waiting up to finalizeWait
                        // for each one. A notification that proposes a better leader
                        // (larger epoch, then larger zxid, then larger sid) is put back
                        // and vote counting continues in the next loop iteration.
                        // Notifications that are not better are discarded. If nothing
                        // arrives in time, the leader is settled and we change state.
                        while((n = recvqueue.poll(finalizeWait,
                                TimeUnit.MILLISECONDS)) != null){
                            // A better candidate arrived: requeue it and stop finalizing
                            if(totalOrderPredicate(n.leader, n.zxid, n.peerEpoch,
                                    proposedLeader, proposedZxid, proposedEpoch)){
                                recvqueue.put(n);
                                break;
                            }
                        }

                        /*
                         * This predicate is true once we don't read any new
                         * relevant message from the reception queue
                         */
                        // No better proposal arrived: become LEADING if we are the
                        // proposed leader, otherwise FOLLOWING or OBSERVING depending
                        // on our learner type, and return the final vote
                        if (n == null) {
                            self.setPeerState((proposedLeader == self.getId()) ?
                                    ServerState.LEADING: learningState());
                            Vote endVote = new Vote(proposedLeader,
                                    proposedZxid, logicalclock.get(),
                                    proposedEpoch);
                            // Clear the receive queue
                            leaveInstance(endVote);
                            return endVote;
                        }
                    }
                    break;

                case OBSERVING:
                    // Notifications from observers are ignored (only logged)
                    LOG.debug("Notification from observer: " + n.sid);
                    break;
                // Notification from a server that is already FOLLOWING or LEADING
                case FOLLOWING:
                case LEADING:

/*