Binder (1): mmap and the Single-Copy Principle

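The Binder mechanism

- An inter-process communication (IPC) mechanism

- A means of remote procedure call (RPC)

- Four roles work together: Client, Server, Service Manager, and the Binder driver

- A whole transaction needs only one data copy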

Binder Driver: a misc device

/dev/binder

// No read/write handlers; data exchange is implemented through ioctl

static struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

static struct miscdevice binder_miscdev = {

.minor = MISC_DYNAMIC_MINOR,

.name = "binder",

.fops = &binder_fops

};

static int __init binder_init(void)

{

int ret;

binder_proc_dir_entry_root = proc_mkdir("binder", NULL);

if (binder_proc_dir_entry_root)

binder_proc_dir_entry_proc = proc_mkdir("proc", binder_proc_dir_entry_root);

ret = misc_register(&binder_miscdev);

if (binder_proc_dir_entry_root) {

create_proc_read_entry("state", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_state, NULL);

create_proc_read_entry("stats", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_stats, NULL);

create_proc_read_entry("transactions", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transactions, NULL);

create_proc_read_entry("transaction_log", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transaction_log, &binder_transaction_log);

create_proc_read_entry("failed_transaction_log", S_IRUGO, binder_proc_dir_entry_root, binder_read_proc_transaction_log, &binder_transaction_log_failed);

}

return ret;

}

device_initcall(binder_init);
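Since binder_fops leaves read and write unset, every request to the driver has to arrive through ioctl. The following is a user-space model of that dispatch, not kernel code: struct demo_fops, demo_ioctl and the demo_call_* helpers are hypothetical names made up for illustration, and the error codes are the ones this sketch assumes for a missing handler.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for the kernel's struct file_operations. */
struct demo_fops {
    long (*read)(void *buf, size_t len);
    long (*write)(const void *buf, size_t len);
    long (*ioctl)(unsigned int cmd, unsigned long arg);
};

/* Toy ioctl handler: command 1 echoes its argument, anything else is rejected. */
static long demo_ioctl(unsigned int cmd, unsigned long arg)
{
    if (cmd == 1)
        return (long)arg;
    return -EINVAL;
}

/* Like binder_fops: ioctl is set, read and write stay NULL. */
static const struct demo_fops demo_binder_fops = {
    .ioctl = demo_ioctl,
};

/* A missing read handler is reported as an error instead of being called. */
static long demo_call_read(const struct demo_fops *f, void *buf, size_t len)
{
    assert(f != NULL);
    return f->read ? f->read(buf, len) : -EINVAL;
}

/* ioctl is forwarded to the handler when one is installed. */
static long demo_call_ioctl(const struct demo_fops *f, unsigned int cmd,
                            unsigned long arg)
{
    assert(f != NULL);
    return f->ioctl ? f->ioctl(cmd, arg) : -ENOTTY;
}
```

With this table, demo_call_read fails immediately while demo_call_ioctl reaches the handler, which is the shape of the real interface: user space talks to /dev/binder with open, mmap and ioctl only.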

binder_open

Creates a struct binder_proc to hold the context of the process that opens the device file /dev/binder,

and stores that context in the private_data member of the open file's struct file.

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc; // per-process Binder context

if (binder_debug_mask & BINDER_DEBUG_OPEN_CLOSE)

printk(KERN_INFO "binder_open: %d:%d\n", current->group_leader->pid, current->pid);

proc = kzalloc(sizeof(*proc), GFP_KERNEL);

if (proc == NULL)

return -ENOMEM;

get_task_struct(current);

INIT_LIST_HEAD(&proc->todo);

init_waitqueue_head(&proc->wait);

proc->default_priority = task_nice(current);

mutex_lock(&binder_lock);

binder_stats.obj_created[BINDER_STAT_PROC]++;

hlist_add_head(&proc->proc_node, &binder_procs);

proc->pid = current->group_leader->pid;

INIT_LIST_HEAD(&proc->delivered_death);

filp->private_data = proc; // keep the context for later mmap/ioctl calls on this file

mutex_unlock(&binder_lock);

...

}
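The private_data pattern used by binder_open can be modeled in plain user-space C. This is only a sketch under stated assumptions: struct demo_file and struct demo_proc are hypothetical, heavily trimmed stand-ins for struct file and struct binder_proc, and demo_release is a simplification of what the driver does on close.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for struct file: only the private_data slot. */
struct demo_file {
    void *private_data;
};

/* Hypothetical stand-in for struct binder_proc. */
struct demo_proc {
    int pid;
    size_t buffer_size;
};

/* Model of binder_open: allocate a zeroed context and attach it to the file. */
static int demo_open(struct demo_file *filp, int pid)
{
    struct demo_proc *proc = calloc(1, sizeof(*proc));
    if (proc == NULL)
        return -1;
    proc->pid = pid;
    filp->private_data = proc;
    return 0;
}

/* Model of a later handler (mmap, ioctl): recover the context from the file. */
static struct demo_proc *demo_proc_of(struct demo_file *filp)
{
    assert(filp != NULL);
    return filp->private_data;
}

/* Simplified model of release: free the context when the file is closed. */
static void demo_release(struct demo_file *filp)
{
    free(filp->private_data);
    filp->private_data = NULL;
}
```

Every later operation on the same open file starts by reading private_data, which is exactly how binder_mmap finds the binder_proc that binder_open created.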

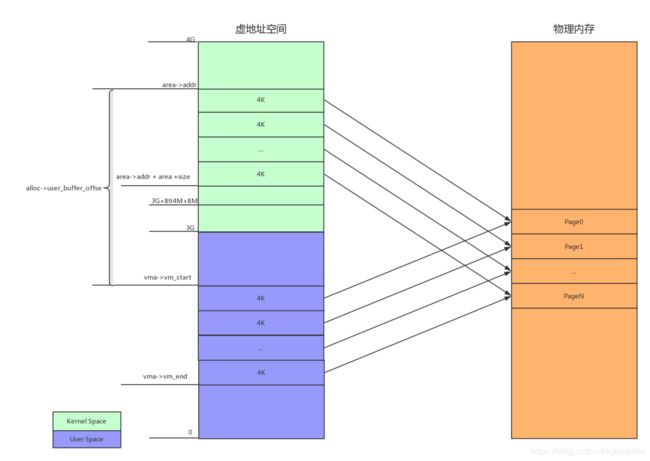

binder_mmap

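Performs the memory-mapping operation (mmap) on the opened device file.

Key point: for the same physical page, if the kernel virtual address is kernel_addr, then the process (user-space) virtual address is proc_addr = kernel_addr + user_buffer_offset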

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
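The address relation proc_addr = kernel_addr + user_buffer_offset can be tried out in user space by mapping the same pages twice. The sketch below is an analogy, not the driver's mechanism: it assumes a POSIX host with a writable /tmp, uses a temporary file as the shared backing store, and all demo_* names are hypothetical. The writable mapping plays the kernel's view, the read-only mapping plays the receiving process's view.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

struct demo_dual_map {
    char *kernel_view;   /* plays the role of proc->buffer */
    char *user_view;     /* plays the role of vma->vm_start */
    intptr_t offset;     /* plays the role of proc->user_buffer_offset */
    size_t size;
};

/* Map the same backing pages twice; returns 0 on success, -1 on failure. */
static int demo_dual_map_init(struct demo_dual_map *m, size_t size)
{
    char path[] = "/tmp/demo_binder_XXXXXX";
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                 /* the pages live on while they are mapped */
    if (ftruncate(fd, (off_t)size) != 0) {
        close(fd);
        return -1;
    }
    void *k = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    void *u = mmap(NULL, size, PROT_READ, MAP_SHARED, fd, 0);
    close(fd);
    if (k == MAP_FAILED || u == MAP_FAILED) {
        if (k != MAP_FAILED)
            munmap(k, size);
        if (u != MAP_FAILED)
            munmap(u, size);
        return -1;
    }
    m->kernel_view = k;
    m->user_view = u;
    m->offset = (intptr_t)((uintptr_t)u - (uintptr_t)k);
    m->size = size;
    return 0;
}

/* The single copy: write through the kernel view, then translate the
 * kernel-side address into the address the receiver would use. */
static const char *demo_copy_in(struct demo_dual_map *m, const char *msg)
{
    size_t len = strlen(msg) + 1;
    assert(len <= m->size);
    memcpy(m->kernel_view, msg, len);
    return (const char *)((uintptr_t)m->kernel_view + (uintptr_t)m->offset);
}

static void demo_dual_map_destroy(struct demo_dual_map *m)
{
    munmap(m->kernel_view, m->size);
    munmap(m->user_view, m->size);
}
```

After demo_copy_in, the returned pointer lies inside the read-only mapping and already shows the message, although nothing was copied into that mapping explicitly.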

static int binder_mmap(struct file *filp, struct vm_area_struct *vma)

{

int ret;

struct vm_struct *area;

// private_data yields the struct binder_proc created when /dev/binder was opened

struct binder_proc *proc = filp->private_data;

const char *failure_string;

struct binder_buffer *buffer;

// struct vm_area_struct describes the user-space mapping: a contiguous range of virtual addresses

// the mapping size is capped at 4 MB

if ((vma->vm_end - vma->vm_start) > SZ_4M)

vma->vm_end = vma->vm_start + SZ_4M;

binder_debug(BINDER_DEBUG_OPEN_CLOSE,

"binder_mmap: %d %lx-%lx (%ld K) vma %lx pagep %lx\n",

proc->pid, vma->vm_start, vma->vm_end,

(vma->vm_end - vma->vm_start) / SZ_1K, vma->vm_flags,

(unsigned long)pgprot_val(vma->vm_page_prot));

if (vma->vm_flags & FORBIDDEN_MMAP_FLAGS) {

ret = -EPERM;

failure_string = "bad vm_flags";

goto err_bad_arg;

}

vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;

mutex_lock(&binder_mmap_lock);

if (proc->buffer) {

ret = -EBUSY;

failure_string = "already mapped";

goto err_already_mapped;

}

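// How the single copy works

// Reserve a contiguous range of kernel virtual address space, the same size as the process's virtual range

// struct vm_struct describes a kernel-space mapping; on a typical 32-bit 3G/1G layout it lies between (3G + 896M + 8M) and 4G (about 120M)

// The same physical page is mapped both into the process's virtual address space and into the kernel's virtual address space

// The Client's data only has to be copied once into kernel space; the Server then shares that data with the kernel

// get_vm_area reserves size bytes of kernel virtual address space; physical pages are allocated and mapped only when needed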

area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);

if (area == NULL) {

ret = -ENOMEM;

failure_string = "get_vm_area";

goto err_get_vm_area_failed;

}

// proc->buffer points to the start of the kernel virtual range; its length is buffer_size

proc->buffer = area->addr;

// address offset = user virtual address - kernel virtual address

proc->user_buffer_offset = vma->vm_start - (uintptr_t)proc->buffer;

mutex_unlock(&binder_mmap_lock);

#ifdef CONFIG_CPU_CACHE_VIPT

if (cache_is_vipt_aliasing()) {

while (CACHE_COLOUR((vma->vm_start ^ (uint32_t)proc->buffer))) {

printk(KERN_INFO "binder_mmap: %d %lx-%lx maps %p bad alignment\n", proc->pid, vma->vm_start, vma->vm_end, proc->buffer);

vma->vm_start += PAGE_SIZE;

}

}

#endif

// allocate the array of physical-page pointers, one entry per page covered by the vma

proc->pages = kzalloc(sizeof(proc->pages[0]) * ((vma->vm_end - vma->vm_start) / PAGE_SIZE), GFP_KERNEL);

if (proc->pages == NULL) {

ret = -ENOMEM;

failure_string = "alloc page array";

goto err_alloc_pages_failed;

}

proc->buffer_size = vma->vm_end - vma->vm_start;

vma->vm_ops = &binder_vm_ops;

vma->vm_private_data = proc;

// allocate one physical page (PAGE_SIZE) up front and map it at both the kernel virtual address and the user virtual address

if (binder_update_page_range(proc, 1, proc->buffer, proc->buffer + PAGE_SIZE, vma)) {

ret = -ENOMEM;

failure_string = "alloc small buf";

goto err_alloc_small_buf_failed;

}

// the first binder_buffer object sits at the start of proc->buffer

buffer = proc->buffer;

// initialize the process's binder_buffer list head; binder_buffers are used later during transactions

INIT_LIST_HEAD(&proc->buffers);

// add buffer as the first node of proc->buffers

list_add(&buffer->entry, &proc->buffers);

buffer->free = 1;

// put the free buffer into proc->free_buffers

binder_insert_free_buffer(proc, buffer);

proc->free_async_space = proc->buffer_size / 2;

barrier();

proc->files = get_files_struct(proc->tsk);

proc->vma = vma;

proc->vma_vm_mm = vma->vm_mm;

/*printk(KERN_INFO "binder_mmap: %d %lx-%lx maps %p\n",

proc->pid, vma->vm_start, vma->vm_end, proc->buffer);*/

return 0;

err_alloc_small_buf_failed:

kfree(proc->pages);

proc->pages = NULL;

err_alloc_pages_failed:

mutex_lock(&binder_mmap_lock);

vfree(proc->buffer);

proc->buffer = NULL;

err_get_vm_area_failed:

err_already_mapped:

mutex_unlock(&binder_mmap_lock);

err_bad_arg:

printk(KERN_ERR "binder_mmap: %d %lx-%lx %s failed %d\n",

proc->pid, vma->vm_start, vma->vm_end, failure_string, ret);

return ret;

}
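The size bookkeeping in binder_mmap is simple arithmetic and can be checked in isolation. The sketch below assumes a 4 KB page size (DEMO_PAGE_SIZE is an assumption, the real value is the kernel's PAGE_SIZE), and the demo_* helpers are hypothetical names that only mirror the expressions in the listing above.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_PAGE_SIZE 4096UL                 /* assumed page size */
#define DEMO_SZ_4M     (4UL * 1024 * 1024)    /* same value as SZ_4M */

/* Mirrors the clamp at the top of binder_mmap: at most 4 MB is mapped. */
static unsigned long demo_clamped_end(unsigned long vm_start,
                                      unsigned long vm_end)
{
    assert(vm_end >= vm_start);
    if (vm_end - vm_start > DEMO_SZ_4M)
        vm_end = vm_start + DEMO_SZ_4M;
    return vm_end;
}

/* Number of entries binder_mmap allocates for the proc->pages array. */
static size_t demo_page_count(unsigned long vm_start, unsigned long vm_end)
{
    return (demo_clamped_end(vm_start, vm_end) - vm_start) / DEMO_PAGE_SIZE;
}

/* Mirrors proc->free_async_space = proc->buffer_size / 2. */
static size_t demo_free_async_space(unsigned long vm_start,
                                    unsigned long vm_end)
{
    return (demo_clamped_end(vm_start, vm_end) - vm_start) / 2;
}
```

For the 128 KB mapping requested by servicemanager this gives 32 page slots and a 64 KB asynchronous budget; an 8 MB request would be clamped to 4 MB, that is 1024 page slots.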

static int binder_update_page_range(struct binder_proc *proc, int allocate,

void *start, void *end, struct vm_area_struct *vma)

{

void *page_addr;

unsigned long user_page_addr;

struct vm_struct tmp_area;

struct page **page;

struct mm_struct *mm;

if (binder_debug_mask & BINDER_DEBUG_BUFFER_ALLOC)

printk(KERN_INFO "binder: %d: %s pages %p-%p\n",

proc->pid, allocate ? "allocate" : "free", start, end);

if (end <= start)

return 0;

if (vma)

mm = NULL;

else

mm = get_task_mm(proc->tsk);

if (mm) {

down_write(&mm->mmap_sem);

vma = proc->vma;

}

// allocate: non-zero allocates pages, zero frees them

if (allocate == 0)

goto free_range;

if (vma == NULL) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed to "

"map pages in userspace, no vma\n", proc->pid);

goto err_no_vma;

}

// loop over the range, allocating physical pages one at a time

for (page_addr = start; page_addr < end; page_addr += PAGE_SIZE) {

int ret;

struct page **page_array_ptr;

page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

BUG_ON(*page);

// allocate one page

*page = alloc_page(GFP_KERNEL | __GFP_ZERO);

if (*page == NULL) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"for page at %p\n", proc->pid, page_addr);

goto err_alloc_page_failed;

}

tmp_area.addr = page_addr;

tmp_area.size = PAGE_SIZE + PAGE_SIZE /* guard page? */;

page_array_ptr = page;

// map the page into kernel space

ret = map_vm_area(&tmp_area, PAGE_KERNEL, &page_array_ptr);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %p in kernel\n",

proc->pid, page_addr);

goto err_map_kernel_failed;

}

// map the same page into user space

user_page_addr =

(uintptr_t)page_addr + proc->user_buffer_offset;

ret = vm_insert_page(vma, user_page_addr, page[0]);

if (ret) {

printk(KERN_ERR "binder: %d: binder_alloc_buf failed "

"to map page at %lx in userspace\n",

proc->pid, user_page_addr);

goto err_vm_insert_page_failed;

}

/* vm_insert_page does not seem to increment the refcount */

}

if (mm) {

up_write(&mm->mmap_sem);

mmput(mm);

}

return 0;

free_range:

for (page_addr = end - PAGE_SIZE; page_addr >= start;

page_addr -= PAGE_SIZE) {

page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

if (vma)

zap_page_range(vma, (uintptr_t)page_addr +

proc->user_buffer_offset, PAGE_SIZE, NULL);

err_vm_insert_page_failed:

unmap_kernel_range((unsigned long)page_addr, PAGE_SIZE);

err_map_kernel_failed:

__free_page(*page);

*page = NULL;

err_alloc_page_failed:

;

}

err_no_vma:

if (mm) {

up_write(&mm->mmap_sem);

mmput(mm);

}

return -ENOMEM;

}
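The control flow of binder_update_page_range (index each page by its offset from proc->buffer, back it on demand, release in reverse order) can be modeled without any kernel APIs. This is a rough user-space sketch under stated assumptions: addresses are plain numbers, calloc stands in for alloc_page, the two mapping steps are omitted, and unlike the driver the free path returns 0. All demo_* names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

#define DEMO_PAGE_SIZE 4096u   /* assumed page size */
#define DEMO_NPAGES    8u      /* assumed size of the reserved range, in pages */

/* Hypothetical stand-in for binder_proc: a reserved range plus a page array. */
struct demo_range {
    size_t base;                 /* plays the role of proc->buffer */
    void *pages[DEMO_NPAGES];    /* plays the role of proc->pages */
};

/* Same index computation as the driver:
 * (page_addr - proc->buffer) / PAGE_SIZE. */
static size_t demo_page_index(const struct demo_range *r, size_t page_addr)
{
    return (page_addr - r->base) / DEMO_PAGE_SIZE;
}

/* Release [start, end), walking backwards like the free_range loop. */
static void demo_free_pages(struct demo_range *r, size_t start, size_t end)
{
    for (size_t a = end; a > start; a -= DEMO_PAGE_SIZE) {
        size_t i = demo_page_index(r, a - DEMO_PAGE_SIZE);
        free(r->pages[i]);
        r->pages[i] = NULL;
    }
}

/* allocate != 0 backs [start, end) with zeroed pages, 0 releases them.
 * Returns 0 on success and -1 if an allocation fails. */
static int demo_update_page_range(struct demo_range *r, int allocate,
                                  size_t start, size_t end)
{
    if (end <= start)
        return 0;
    if (!allocate) {
        demo_free_pages(r, start, end);
        return 0;
    }
    for (size_t a = start; a < end; a += DEMO_PAGE_SIZE) {
        size_t i = demo_page_index(r, a);
        assert(i < DEMO_NPAGES && r->pages[i] == NULL); /* like BUG_ON(*page) */
        r->pages[i] = calloc(1, DEMO_PAGE_SIZE);
        if (r->pages[i] == NULL) {
            demo_free_pages(r, start, a);  /* undo the pages allocated so far */
            return -1;
        }
    }
    return 0;
}
```

The driver achieves the same unwinding by jumping into the middle of its free_range loop with goto; the helper call here is just an easier-to-read equivalent for a model.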

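// allocates 2^order physically contiguous pages

// struct page represents one physical page of memory

struct page * alloc_pages(unsigned int gfp_mask, unsigned int order);

// vm_area_struct describes a region of user-space virtual memory: a contiguous range of virtual addresses within the 0~3G user space

// the address returned by the mmap system call is vm_start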

struct vm_area_struct

{

struct mm_struct *vm_mm; // the owning process's mm_struct

unsigned long vm_start; // start address of the virtual memory region

unsigned long vm_end; // end address of the virtual memory region

//....

} ;

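// maps the physical page referenced by a page pointer into user virtual address space

// vma: the user virtual memory area

// addr: the user-space virtual address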

int vm_insert_page(struct vm_area_struct *vma, unsigned long addr, struct page *page);

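// a contiguous region of kernel virtual memory. Excluding the 896M that directly maps contiguous physical memory, the remaining 1G - 896M - 8M (guard gap) = 120M can map non-contiguous physical pages; the regions are linked through next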

struct vm_struct {

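struct vm_struct *next; // next vm region; all regions form a linked list

void *addr; // start address of the virtual memory region

unsigned long size; // size of the region

struct page **pages; // pages mapped by this region

phys_addr_t phys_addr; // starting physical address corresponding to addr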

//...

};

// reserves a region of kernel virtual address space

struct vm_struct *get_vm_area(unsigned long size, unsigned long flags);

// maps physical pages into a kernel virtual address region

int map_vm_area(struct vm_struct *area, pgprot_t prot, struct page ***pages);

struct binder_buffer {

struct list_head entry; /* free and allocated entries by address */

struct rb_node rb_node; /* free entry by size or allocated entry */

/* by address */

unsigned free:1;

unsigned allow_user_free:1;

unsigned async_transaction:1;

unsigned debug_id:29;

struct binder_transaction *transaction;

struct binder_node *target_node;

size_t data_size;

size_t offsets_size;

uint8_t data[0]; // zero-length array: the payload follows the header directly

};
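The trailing data[0] member means a binder_buffer header and its payload occupy one contiguous block. A minimal user-space sketch of the same layout follows; struct demo_buffer and demo_buffer_new are hypothetical, and the sketch uses the C99 flexible array member spelling data[] rather than the older GNU data[0] form seen in the driver.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical, trimmed-down analogue of struct binder_buffer. */
struct demo_buffer {
    size_t data_size;
    uint8_t data[];   /* payload starts right after the header */
};

/* Allocate header and payload as one block and copy the payload in.
 * Returns NULL if the allocation fails. */
static struct demo_buffer *demo_buffer_new(const void *payload, size_t len)
{
    assert(payload != NULL || len == 0);
    struct demo_buffer *b = malloc(sizeof(*b) + len);
    if (b == NULL)
        return NULL;
    b->data_size = len;
    if (len != 0)
        memcpy(b->data, payload, len);
    return b;
}
```

A single free(b) releases both header and payload. In the driver the block is not heap-allocated per buffer: the headers are laid out inside the mapped region itself, starting at proc->buffer.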

shell

ps | grep system_server

cat /proc/xxxx/maps | grep "/dev/binder"

b3d42000-b3e40000 r--p 00000000 00:0c 2210 /dev/binder
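The size of the mapping can be read straight off the maps line. As a small worked example, the hypothetical helper demo_maps_size below parses the start-end prefix and returns the length in bytes; it is a sketch for checking the arithmetic, not part of any Android tool.

```c
#include <assert.h>
#include <stdio.h>

/* Parses the "start-end" prefix of a /proc/<pid>/maps line.
 * Returns the mapping size in bytes, or 0 if the line cannot be parsed. */
static unsigned long demo_maps_size(const char *line)
{
    unsigned long start, end;

    assert(line != NULL);
    if (sscanf(line, "%lx-%lx", &start, &end) != 2 || end < start)
        return 0;
    return end - start;
}
```

For the line shown, 0xb3e40000 - 0xb3d42000 = 0xfe000 = 1,040,384 bytes, which is 1016 KB or 254 pages of 4 KB (1 MB minus two pages), well below the 4 MB cap enforced in binder_mmap. The r--p flags match the PROT_READ and MAP_PRIVATE arguments of the mmap call.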

Application side: open and mmap

The code lives under frameworks/base/cmds/servicemanager and consists mainly of binder.h, binder.c and service_manager.c

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

if (binder_become_context_manager(bs)) {

LOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

return 0;

}

struct binder_state *binder_open(unsigned mapsize)

{

struct binder_state *bs;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return 0;

}

bs->fd = open("/dev/binder", O_RDWR);

if (bs->fd < 0) {

fprintf(stderr,"binder: cannot open device (%s)\n",

strerror(errno));

goto fail_open;

}

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

/* TODO: check version */

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return 0;

}