Audio/Video Development (2): Decoding Audio with FFmpeg on Android and Playing Raw PCM Audio


1. Create the project

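- 1. Put the compiled FFmpeg shared libraries and the header files from `include` into the project

- 2. Configure CMakeLists.txt to import and link the libraries

- 3. Add the ABI settings to the Gradle file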

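Project structure: put the FFmpeg `include` directory under `src/main/cpp`, create a `src/main/jniLibs` directory and copy the compiled FFmpeg shared libraries for arm and x86 into it (one subfolder per ABI), and put CMakeLists.txt in the module's root directory.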

Write the CMakeLists.txt file:

```cmake
# native-lib and ${log-lib} are assumed to be defined earlier, as in the default
# Android Studio template: add_library(native-lib SHARED ...) and find_library(log-lib log)

# Step 1: add the FFmpeg header directory
include_directories(src/main/cpp/include)

# Step 2: import the prebuilt FFmpeg .so files
add_library(avcodec-57 SHARED IMPORTED)
set_target_properties(avcodec-57 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavcodec-57.so)

add_library(avdevice-57 SHARED IMPORTED)
set_target_properties(avdevice-57 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavdevice-57.so)

add_library(avfilter-6 SHARED IMPORTED)
set_target_properties(avfilter-6 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavfilter-6.so)

add_library(avformat-57 SHARED IMPORTED)
set_target_properties(avformat-57 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavformat-57.so)

add_library(avutil-55 SHARED IMPORTED)
set_target_properties(avutil-55 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libavutil-55.so)

add_library(postproc-54 SHARED IMPORTED)
set_target_properties(postproc-54 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libpostproc-54.so)

add_library(swresample-2 SHARED IMPORTED)
set_target_properties(swresample-2 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libswresample-2.so)

add_library(swscale-4 SHARED IMPORTED)
set_target_properties(swscale-4 PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/jniLibs/${ANDROID_ABI}/libswscale-4.so)

# Step 3: link the libraries
target_link_libraries( # Specifies the target library.
        native-lib
        avcodec-57
        avdevice-57
        avfilter-6
        avformat-57
        avutil-55
        postproc-54
        swresample-2
        swscale-4
        OpenSLES
        # Links the target library to the log library
        # included in the NDK.
        ${log-lib})
```

Configure the Gradle file:

```groovy
android {
    compileSdkVersion 29
    buildToolsVersion "29.0.2"

    defaultConfig {
        minSdkVersion 16
        targetSdkVersion 29
        versionCode 1
        versionName "1.0"

        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
        consumerProguardFiles 'consumer-rules.pro'

        // Added ------------------------------------------
        externalNativeBuild {
            cmake {
                cppFlags "-frtti -fexceptions"
                // Must match the jniLibs/<abi> folders that contain the FFmpeg .so files
                abiFilters "armeabi", "x86"
            }
        }
        // ------------------------------------------------
    }

    // Added ------------------------------------------
    sourceSets {
        main {
            jniLibs.srcDirs = ['src/main/jniLibs']
        }
    }
    // ------------------------------------------------

    buildTypes {
        release {
            minifyEnabled false
            proguardFiles getDefaultProguardFile('proguard-android-optimize.txt'), 'proguard-rules.pro'
        }
    }

    // Added ------------------------------------------
    externalNativeBuild {
        cmake {
            path "CMakeLists.txt"
        }
    }
    // ------------------------------------------------
}
```

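`abiFilters "armeabi","x86"` restricts the native build to the ABIs for which FFmpeg was compiled: ARM for most phones, and x86 mainly for the emulator. Every ABI listed must have a matching `jniLibs/<abi>/` folder containing the FFmpeg .so files. NDK r17 and later no longer support `armeabi`. With a newer NDK, build FFmpeg for `armeabi-v7a` (and `arm64-v8a`, the ABI of most current phones) and list those ABIs instead.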
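A mismatch between the ABI that native code was built for and the `jniLibs/<abi>/` folders is a common cause of load failures. To check which ABI a build targets, a small sketch like the following maps the compiler's predefined architecture macros to Android ABI names. The constant `kAbiName` and the function `printAbi()` are hypothetical names for illustration. On a device you would log the value with `__android_log_print` rather than `printf`.

```cpp
#include <cstdio>

// Android ABI name (the jniLibs/<abi>/ folder name) for the architecture this
// file is compiled for, chosen from compiler-predefined architecture macros.
#if defined(__aarch64__)
constexpr char kAbiName[] = "arm64-v8a";
#elif defined(__arm__) && defined(__ARM_ARCH_7A__)
constexpr char kAbiName[] = "armeabi-v7a";
#elif defined(__arm__)
constexpr char kAbiName[] = "armeabi";
#elif defined(__x86_64__)
constexpr char kAbiName[] = "x86_64";
#elif defined(__i386__)
constexpr char kAbiName[] = "x86";
#else
constexpr char kAbiName[] = "unknown";
#endif

// Prints the ABI so it can be compared with abiFilters and the jniLibs folders.
void printAbi() {
    std::printf("native code built for ABI: %s\n", kAbiName);
}
```

Because the choice happens at compile time, each ABI's `libnative-lib.so` carries its own name. Loading the library on a device therefore shows which `jniLibs` folder was actually used.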

Load the shared libraries in the Java class that calls the native code:

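```java
public class WLPlayer {

    static {
        // Load the FFmpeg libraries before native-lib, dependencies first:
        // on older Android versions the dynamic linker does not look up a
        // library's dependencies in the app's own native library directory.
        System.loadLibrary("avutil-55");
        System.loadLibrary("swresample-2");
        System.loadLibrary("swscale-4");
        System.loadLibrary("postproc-54");
        System.loadLibrary("avcodec-57");
        System.loadLibrary("avformat-57");
        System.loadLibrary("avfilter-6");
        System.loadLibrary("avdevice-57");
        System.loadLibrary("native-lib");
    }
}
```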

2. Decode the audio

Before decoding the audio, a few things need to be prepared:

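- 1. Callbacks from C++ to Java, from both the main thread and worker threads

- 2. A multithreaded queue in C++ that implements the producer-consumer model (decoding and playing at the same time)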

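First, write a C++ class, WlCallJava, that handles calls between C++ and Java. To call back into Java, the C++ side needs the Java virtual machine object (`JavaVM`) and some information about the Java object and its methods. A `JNIEnv` is only valid on the thread it was obtained on. A callback made from a worker thread must therefore first attach that thread to the JavaVM (`AttachCurrentThread`) to get its own `JNIEnv`. This is why main-thread and worker-thread callbacks are handled differently.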

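WlCallJava header file (WlCallJava.h):

```cpp
#ifndef WY_MUSIC_WLCALLJAVA_H
#define WY_MUSIC_WLCALLJAVA_H

#include
```

Next, write WlQueue.cpp, which uses a queue together with a mutex (thread lock) and a semaphore to implement the producer-consumer model.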
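The following minimal sketch illustrates the producer-consumer idea. It is not the author's WlQueue. The queue is guarded by a pthread mutex, and the sketch uses a condition variable (rather than a semaphore) to wake the consumer. The class name `BlockingQueue`, its `stop()` method and the generic element type are hypothetical. In the player the element would presumably be a demuxed packet such as `AVPacket*`, pushed by the decoding thread and popped by the playback thread.

```cpp
#include <cassert>
#include <cstddef>
#include <pthread.h>
#include <queue>

// Hypothetical producer-consumer queue: the decoding thread push()es,
// the playback thread pop()s. In the player, T would be e.g. AVPacket*.
template <typename T>
class BlockingQueue {
public:
    BlockingQueue() {
        int rc = pthread_mutex_init(&mutex_, nullptr);
        assert(rc == 0);
        rc = pthread_cond_init(&cond_, nullptr);
        assert(rc == 0);
        (void)rc;  // keeps the compiler quiet when NDEBUG removes the asserts
    }
    ~BlockingQueue() {
        pthread_cond_destroy(&cond_);
        pthread_mutex_destroy(&mutex_);
    }
    BlockingQueue(const BlockingQueue&) = delete;  // pthread objects must not be copied
    BlockingQueue& operator=(const BlockingQueue&) = delete;

    // Producer: append one item and wake one waiting consumer.
    void push(const T& item) {
        pthread_mutex_lock(&mutex_);
        items_.push(item);
        pthread_cond_signal(&cond_);
        pthread_mutex_unlock(&mutex_);
    }

    // Consumer: wait until an item is available or stop() has been called.
    // Returns false only when the queue is stopped and empty.
    bool pop(T& out) {
        pthread_mutex_lock(&mutex_);
        while (items_.empty() && !stopped_) {
            pthread_cond_wait(&cond_, &mutex_);  // unlocks mutex_ while waiting
        }
        const bool ok = !items_.empty();
        if (ok) {
            out = items_.front();
            items_.pop();
        }
        pthread_mutex_unlock(&mutex_);
        return ok;
    }

    // Wake all blocked consumers, e.g. when playback is being shut down.
    void stop() {
        pthread_mutex_lock(&mutex_);
        stopped_ = true;
        pthread_cond_broadcast(&cond_);
        pthread_mutex_unlock(&mutex_);
    }

    std::size_t size() {
        pthread_mutex_lock(&mutex_);
        const std::size_t n = items_.size();
        pthread_mutex_unlock(&mutex_);
        return n;
    }

private:
    std::queue<T> items_;
    pthread_mutex_t mutex_;
    pthread_cond_t cond_;
    bool stopped_ = false;
};
```

The `while` loop around `pthread_cond_wait` guards against spurious wakeups. `stop()` lets a blocked playback thread return when playback ends, instead of waiting forever on an empty queue. Items still queued at that point are drained first.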

WlQueue header file (WlQueue.h):

```cpp
#ifndef WY_MUSIC_WLQUEUE_H
#define WY_MUSIC_WLQUEUE_H

extern "C"{
#include
```

Then write WlFFmpeg.cpp, which decodes the audio:

```cpp
#include "WlCallJava.h"
#include <pthread.h>
#include "WlAudio.h"

extern "C"{
#include
```

3. Play the decoded, resampled PCM data with OpenSL ES

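Link the OpenSL ES shared library (`OpenSLES` in `target_link_libraries` above), then:

- 1. Create the engine

- 2. Create the output mix

- 3. Configure the PCM data format

- 4. Set up the buffer queue interface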
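OpenSL ES plays interleaved PCM in the format declared with `SLDataFormat_PCM`, typically signed 16-bit samples. Many FFmpeg decoders instead output planar float samples; for example, FFmpeg's native AAC decoder produces `AV_SAMPLE_FMT_FLTP`. That is why decoded frames are resampled (in FFmpeg, with libswresample's `swr_convert`) before playback. The hand-written sketch below shows only the sample-format and channel-layout part of that conversion, with no sample-rate change, plus the buffer-size arithmetic. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Bytes needed for nbSamples samples per channel of interleaved PCM
// (plain multiplication, no alignment padding).
std::size_t pcmBufferBytes(int nbSamples, int channels, int bytesPerSample) {
    assert(nbSamples >= 0 && channels > 0 && bytesPerSample > 0);
    return static_cast<std::size_t>(nbSamples) * static_cast<std::size_t>(channels) *
           static_cast<std::size_t>(bytesPerSample);
}

// Converts planar float audio (one vector per channel, nominal range [-1, 1])
// into interleaved signed 16-bit samples (L R L R ... for stereo).
// Only the sample format and layout change; the sample rate stays the same.
std::vector<std::int16_t> planarFloatToS16(const std::vector<std::vector<float>>& planes) {
    assert(!planes.empty());
    const std::size_t channels = planes.size();
    const std::size_t nbSamples = planes[0].size();
    std::vector<std::int16_t> out(nbSamples * channels);
    for (std::size_t ch = 0; ch < channels; ++ch) {
        assert(planes[ch].size() == nbSamples);  // every plane holds the same number of samples
        for (std::size_t i = 0; i < nbSamples; ++i) {
            // Clamp first so out-of-range input cannot overflow int16_t.
            const float s = std::max(-1.0f, std::min(1.0f, planes[ch][i]));
            out[i * channels + ch] = static_cast<std::int16_t>(std::lrint(s * 32767.0f));
        }
    }
    return out;
}
```

For example, one 1024-sample AAC frame in 16-bit stereo needs `pcmBufferBytes(1024, 2, 2)` = 4096 bytes. 44100 Hz 16-bit stereo audio amounts to 44100 × 2 × 2 = 176400 bytes per second.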

```cpp
#include "WlQueue.h"
#include "WlPlaystatus.h"
```

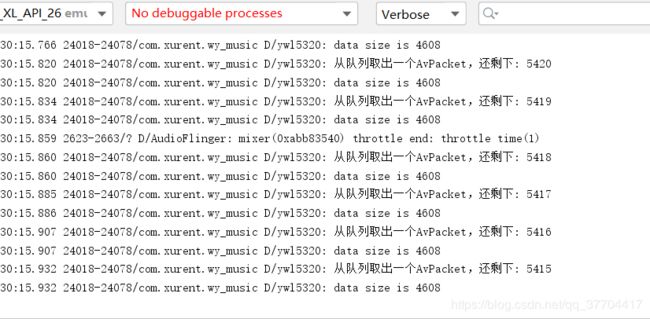

Demo results

2. Video demo

Link to the demo video