spark 环境搭建及几种模式测试

spark安装部署

标签(空格分隔): spark

hadoop,spark,kafka交流群:224209501

1,spark环境的安装

创建四个目录

sudo mkdir /opt/modules

sudo mkdir /opt/softwares

sudo mkdir /opt/tools

sudo mkdir /opt/datas

sudo chmod 777 -R /opt/1,安装jdk1.7

先卸载自带的jdk

rpm –qa | grep java

sudo rpm -e --nodeps (自带java包)安装jdk1.7

export JAVA_HOME=/opt/modules/jdk1.7.0_67

export PATH=$PATH:$JAVA_HOME/bin2,spark编译

安装mvn

export MAVEN_HOME=/usr/local/apache-maven-3.0.5

export PATH=$PATH:$MAVEN_HOME/bin3,安装scala

export SCALA_HOME=/opt/modules/scala-2.10.4

export PATH=$PATH:$SCALA_HOME/bin4,修改mvn镜像源

编译之前先配置镜像及域名服务器,来提高下载速度,进而提高编译速度,用nodepad++打开/opt/compileHadoop/apache-maven-3.0.5/conf/setting.xml。(nodepad已经通过sftp链接到了机器)

<mirror>

<id>nexus-springid>

<mirrorOf>cdh.repomirrorOf>

<name>springname>

<url>http://repo.spring.io/repo/url>

mirror>

<mirror>

<id>nexus-spring2id>

<mirrorOf>cdh.releases.repomirrorOf>

<name>spring2name>

<url>http://repo.spring.io/repo/url>

mirror>5,配置域名解析服务器

sudo vi /etc/resolv.conf

添加内容:

nameserver 8.8.8.8

nameserver 8.8.4.46,编译spark

为了提高编译速度,修改如下内容

VERSION=1.3.0

SPARK_HADOOP_VERSION=2.6.0-cdh5.4.0

SPARK_HIVE=1

#VERSION=$("$MVN" help:evaluate -Dexpression=project.version 2>/dev/null | grep -v "INFO" | tail -n 1)

#SPARK_HADOOP_VERSION=$("$MVN" help:evaluate -Dexpression=hadoop.version $@ 2>/dev/null\

# | grep -v "INFO"\

# | tail -n 1)

#SPARK_HIVE=$("$MVN" help:evaluate -Dexpression=project.activeProfiles -pl sql/hive $@ 2>/dev/null\

# | grep -v "INFO"\

# | fgrep --count "hive ";\

# # Reset exit status to 0, otherwise the script stops here if the last grep finds nothing\

# # because we use "set -o pipefail"

# echo -n)执行编译指令:

./make-distribution.sh --tgz -Pyarn -Phadoop-2.4 -Dhadoop.version=2.6.0-cdh5.4.0 -Phive-0.13.1 -Phive-thriftserver

去掉下面编译会很快,即使编译失败也不会每次都清除

-DskipTests clean package4 安装hadoop2.6

1,添加java主目录位置

hadoop-env.sh

mapred-env.sh

yarn-env.sh

添加如下:

export JAVA_HOME=/opt/modules/jdk1.7.0_672,core-site.xml配置

<property>

<name>hadoop.tmp.dirname>

<value>/opt/modules/hadoop-2.5.0/data/tmpvalue>

property>

<property>

<name>fs.defaultFSname>

<value>hdfs://spark.learn.com:8020value>

property>3,hdfs-site.xml配置

<property>

<name>dfs.replicationname>

<value>1value>

property>4,mapred-site.xml配置

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>5,yarn-site.xml配置

<property>

<name>yarn.resourcemanager.hostnamename>

<value>miaodonghua.hostvalue>

property>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>6,slaves配置

miaodonghua.host//主机名:nodemanager和datanode地址7,格式化namenode

bin/hdfs namenode -format3,spark几种模式的安装部署

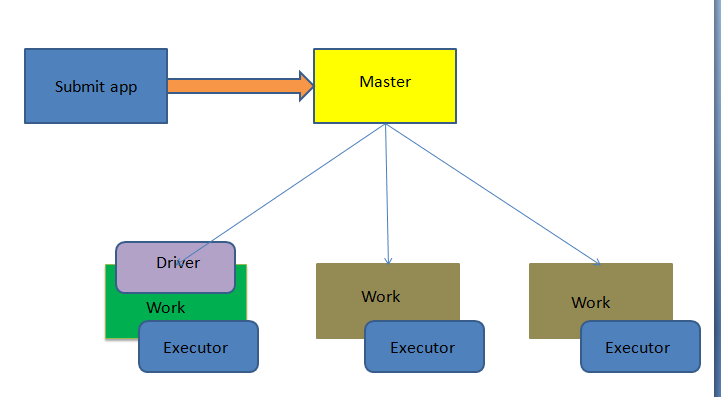

1 spark本地模式的安装

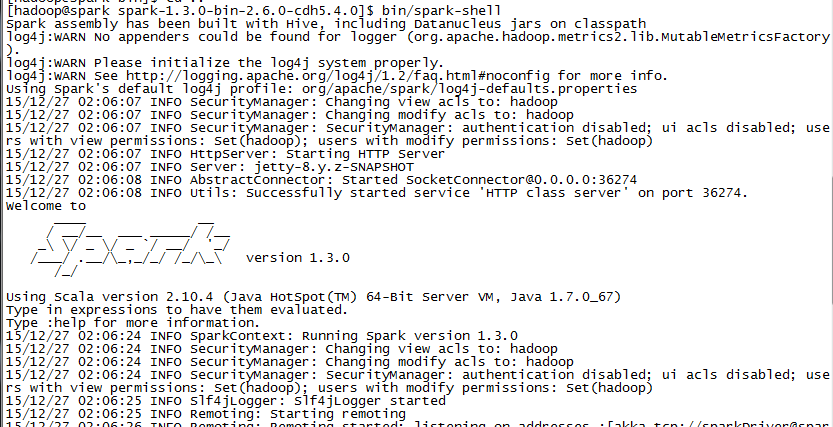

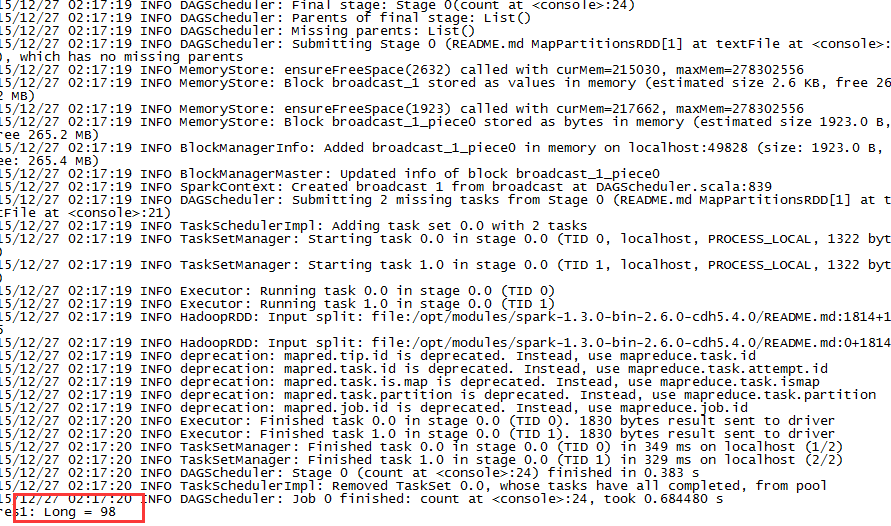

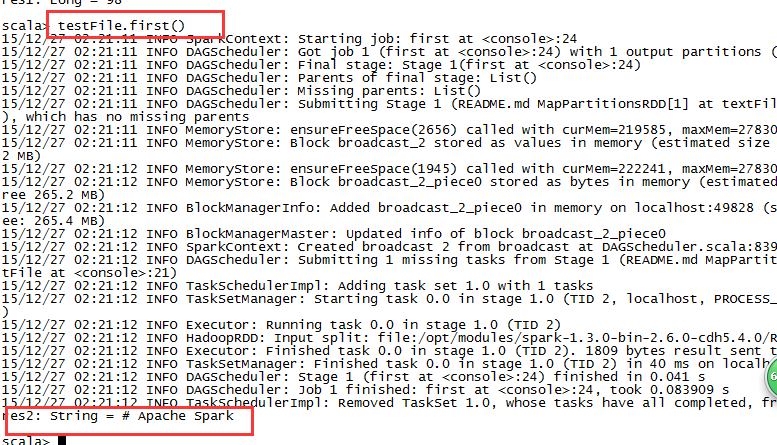

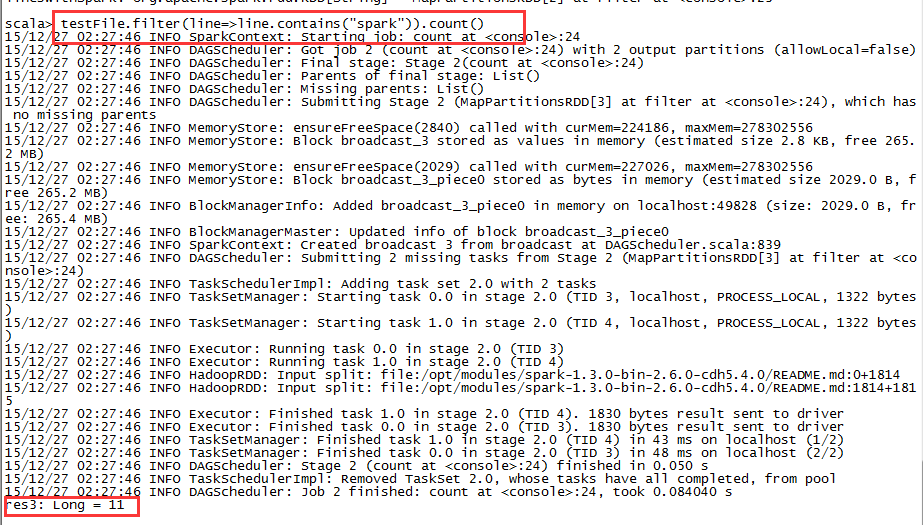

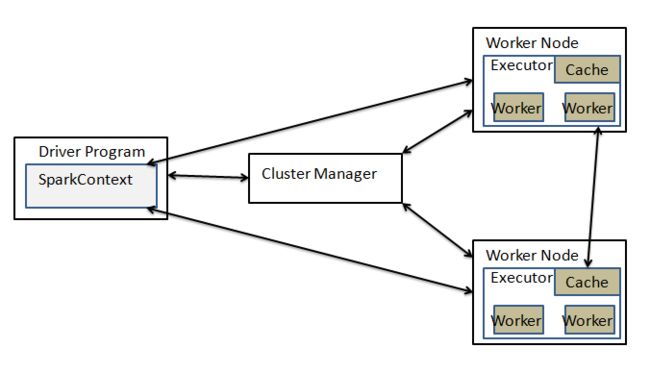

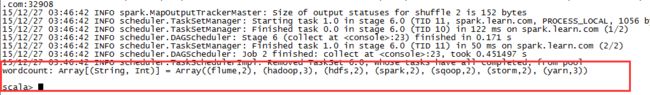

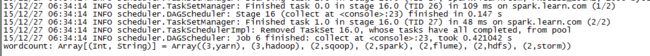

本地模式基础语法测试

1,直接运行

bin/spark-shellhttp://spark.learn.com:4040val textFile = sc.textFile("README.md")testFile.count()testFile.first()val linesWithSpark = testFile.filter(line => line.contains("Spark"))testFile.filter(line => line.contains("Spark")).count()testFile.map(line => line.split(" ").size).reduce((a, b) => if (a > b) a else b)import java.lang.Math

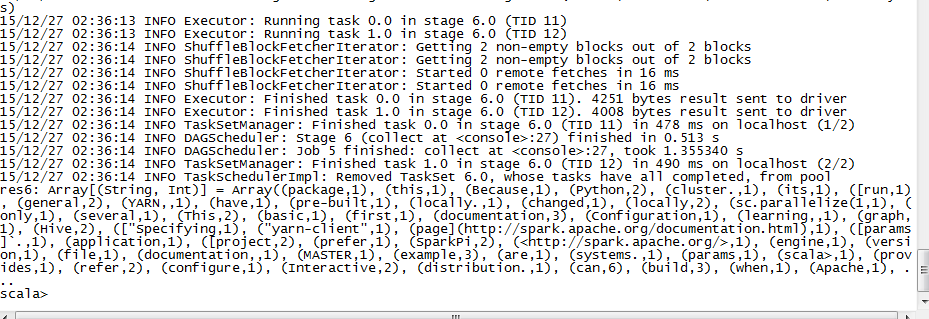

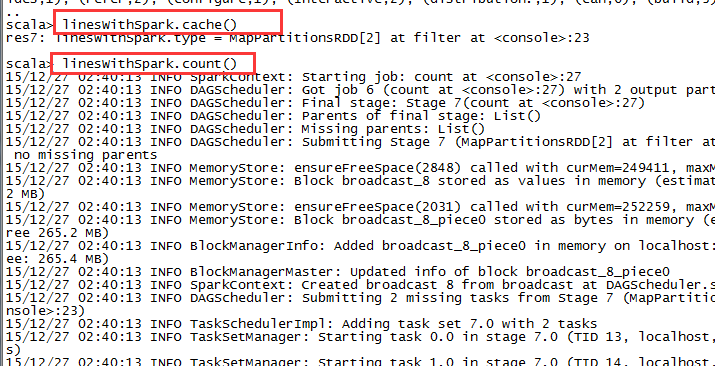

testFile.map(line => line.split(" ").size).reduce((a, b) => Math.max(a, b))val wordCounts = testFile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey((a, b) => a + b) wordCounts.collect()cache

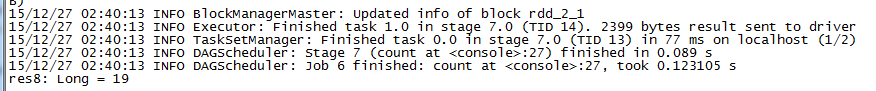

scala> linesWithSpark.cache()scala> linesWithSpark.count()2 spark standalone模式的安装

HADOOP_CONF_DIR=/opt/modules/hadoop-2.6.0-cdh5.4.0/etc/hadoop

JAVA_HOME=/opt/modules/jdk1.7.0_67

SCALA_HOME=/opt/modules/scala-2.10.4

SPARK_MASTER_IP=spark.learn.com

SPARK_MASTER_PORT=7077

SPARK_MASTER_WEBUI_PORT=8080

SPARK_WORKER_CORES=1

SPARK_WORKER_MEMORY=1000m

SPARK_WORKER_PORT=7078

SPARK_WORKER_WEBUI_PORT=8081

SPARK_WORKER_INSTANCES=12,配置spark-defaults.conf

spark.master spark://hadoop-spark.com:70773,配置slaves

spark.learn.com4,启动spark

sbin/start-master.sh

sbin/start-slaves.sh5,命令测试

读取本地的需要注释掉

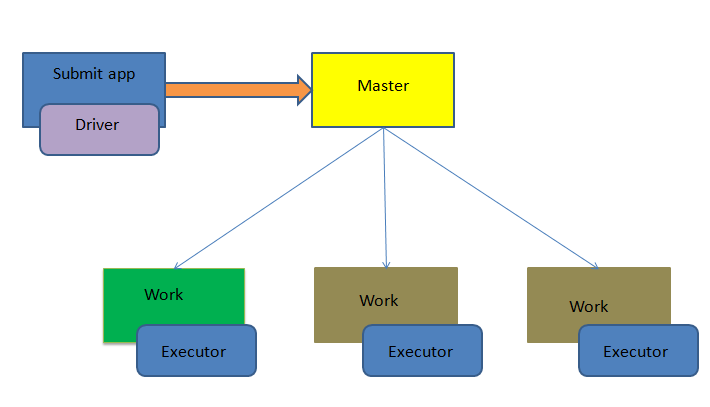

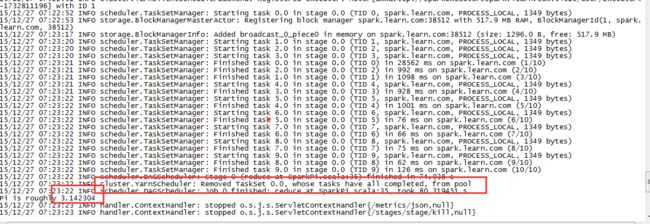

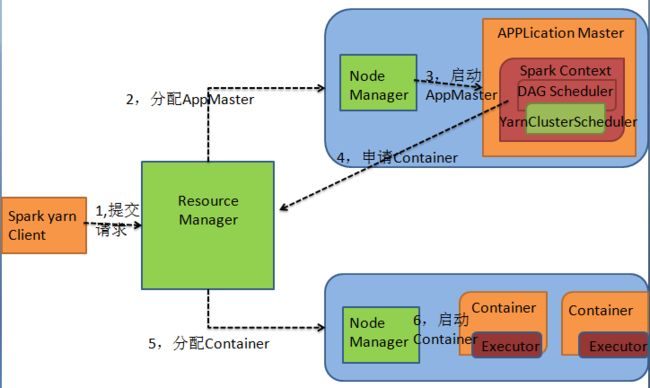

HADOOP_CONF_DIR=/opt/modules/hadoop-2.6.0-cdh5.4.0/etc/hadoopval textFile = sc.textFile("hdfs://spark.learn.com:8020/user/hadoop/spark")val wordcount = textFile.flatMap(x=>x.split(" ")).map(x=>(x,1)).reduceByKey((a,b)=>a+b).collect()val wordcount = textFile.flatMap(x=>x.split(" ")).map(x=>(x,1)).reduceByKey((a,b)=>a+b).sortByKey(true).collect()val wordcount = textFile.flatMap(x=>x.split(" ")).map(x=>(x,1)).reduceByKey((a,b)=>a+b).map(x=>(x._2,x._1)).sortByKey(false).collect()sc.textFile("hdfs://spark.learn.com:8020/user/cyhp/spark/wc.input").flatMap(_.split(" ")).map((_,1)).reduceByKey(_ + _).collect3 spark on yarn

查看spark-submit参数

[hadoop@spark spark-1.3.0-bin-2.6.0-cdh5.4.0]$ bin/spark-submit --helpSpark assembly has been built with Hive, including Datanucleus jars on classpath

Usage: spark-submit [options] [app arguments]

Usage: spark-submit --kill [submission ID] --master [spark://...]

Usage: spark-submit --status [submission ID] --master [spark://...]

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or

on one of the worker machines inside the cluster ("cluster")

(Default: client).

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver

and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include

on the driver and executor classpaths. Will search the local

maven repo, then maven central and any additional remote

repositories given by --repositories. The format for the

coordinates should be groupId:artifactId:version.

--repositories Comma-separated list of additional remote repositories to

search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place

on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working

directory of each executor.

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not

specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 512M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that

jars added with --jars are automatically included in the

classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application.

--help, -h Show this help message and exit

--verbose, -v Print additional debug output

--version, Print the version of current Spark

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode

(Default: 1).

--executor-cores NUM Number of cores per executor (Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2).

--archives ARCHIVES Comma separated list of archives to be extracted into the

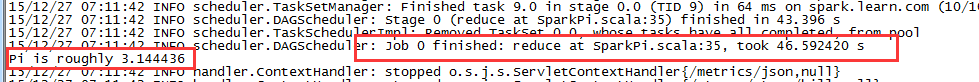

working directory of each executor. bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

lib/spark-examples-1.3.0-hadoop2.6.0-cdh5.4.0.jar \

10 bin/spark-submit \

--deploy-mode cluster \

--class org.apache.spark.examples.SparkPi \

lib/spark-examples-1.3.0-hadoop2.6.0-cdh5.4.0.jar \

103,submit提交到yarn上

yarn Cluster mode

yarn Client mode

bin/spark-submit \

--master yarn \

--class org.apache.spark.examples.SparkPi \

lib/spark-examples-1.3.0-hadoop2.6.0-cdh5.4.0.jar \

10 4,spark监控界面

1,启动spark监控服务

执行下面指令启动spark historyserver

./sbin/start-history-server.sh查看方式

http://:18080 2,spark监控的配置项介绍

1,整体配置项

我们可以对historyserver进行如下配置:

| Environment Variable | Meaning |

|---|---|

| SPARK_DAEMON_MEMORY | Memory to allocate to the history server (default: 512m). |

| SPARK_DAEMON_JAVA_OPTS | JVM options for the history server (default: none). |

| SPARK_PUBLIC_DNS | The public address for the history server. If this is not set, links to application history may use the internal address of the server, resulting in broken links (default: none). |

| SPARK_HISTORY_OPTS | spark.history.* configuration options for the history server (default: none). |

2,SPARK_HISTORY_OPTS配置

| Property Name | Default | Meaning |

|---|---|---|

| spark.history.provider | org.apache.spark.deploy.history.FsHistoryProvider | Name of the class implementing the application history backend. Currently there is only one implementation, provided by Spark, which looks for application logs stored in the file system. |

| spark.history.fs.logDirectory | file:/tmp/spark-events | Directory that contains application event logs to be loaded by the history server |

| spark.history.fs.updateInterval | 10 | The period, in seconds, at which information displayed by this history server is updated. Each update checks for any changes made to the event logs in persisted storage. |

| spark.history.retainedApplications | 50 | The number of application UIs to retain. If this cap is exceeded, then the oldest applications will be removed. |

| spark.history.ui.port | 18080 | |

| spark.history.kerberos.enabled | false | Indicates whether the history server should use kerberos to login. This is useful if the history server is accessing HDFS files on a secure Hadoop cluster. If this is true, it uses the configs spark.history.kerberos.principal and spark.history.kerberos.keytab. |

| spark.history.kerberos.principal | (none) | Kerberos principal name for the History Server. |

| spark.history.kerberos.keytab | (none) | Location of the kerberos keytab file for the History Server. |

| spark.history.ui.acls.enable | false | Specifies whether acls should be checked to authorize users viewing the applications. If enabled, access control checks are made regardless of what the individual application had set for spark.ui.acls.enable when the application was run. The application owner will always have authorization to view their own application and any users specified via spark.ui.view.acls when the application was run will also have authorization to view that application. If disabled, no access control checks are made. |

3,标记一个任务是否完成

要注意的是historyserver仅仅显示完成了的spark任务。标记任务完成的一种方式是直接调用sc.stop()。

4,spark-defaults.conf中的配置

| Property Name | Default Meaning |

|---|---|

| spark.eventLog.compress | false |

| spark.eventLog.dir | file:///tmp/spark-events |

| spark.eventLog.enabled | false |

2,具体配置

1,在spark-env.sh

SPARK_HISTORY_OPTS="-Dspark.history.fs.logDirectory=hdfs://spark.learn.com:8020/user/hadoop/spark/history"2,在spark-defaults.conf

spark.eventLog.enabled true

spark.eventLog.dir hdfs://spark.learn.com:8020/user/hadoop/spark/history

spark.eventLog.compress true3,测试

启动相关服务

sbin/start-master.sh

sbin/start-slaves.sh

sbin/start-history-server.sh

bin/spark-shell执行spark应用

val textFile = sc.textFile("hdfs://spark.learn.com:8020/user/hadoop/spark/input/")

textFile.count

sc.stop