[ffmpeg] A collection of commonly used structures

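After reading the blog of the expert Lei Xiaohua, I have once again condensed some of what is worth knowing when learning ffmpeg.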

This post briefly summarizes several structures that are commonly used in ffmpeg, as a memo.

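FFmpeg source version used here: ffmpeg-2.8.3. Note that in other versions some structures, as well as the files and locations where they are defined, may differ.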

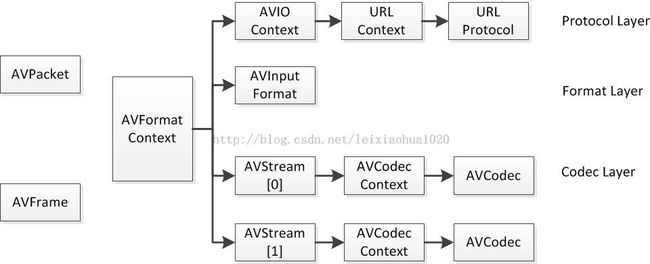

AVFormatContext structure

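This structure is defined in avformat.h. AVFormatContext stores the information contained in an audio/video container format, including rich information about the encoded streams. It is the top-level structure that governs everything else and is mainly used to handle container formats (FLV/MKV/RMVB, etc.). It is allocated by avformat_alloc_context() and freed by avformat_free_context(). The more important members are commented in the definition.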
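Because the duration and bit_rate members carry fixed units (microseconds and bits per second, as the comments in the definition that follows say), turning them into readable values is plain arithmetic. The sketch below does not call FFmpeg; HmsDuration, split_duration(), print_duration() and bit_rate_kbps() are hypothetical names, and the two macros only copy the values of AV_TIME_BASE (1000000) and AV_NOPTS_VALUE (INT64_MIN, meaning "unknown") so that the snippet compiles on its own.

```c
#include <assert.h>
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>

/* Stand-ins for FFmpeg constants, with the values libavutil defines. */
#define MY_TIME_BASE 1000000      /* AV_TIME_BASE: durations are in microseconds */
#define MY_NOPTS_VALUE INT64_MIN  /* AV_NOPTS_VALUE: "no value / unknown" */

/* Hypothetical result type for a split-up duration. */
typedef struct {
    int64_t hours;
    int minutes, seconds, millis;
} HmsDuration;

/* Split an AVFormatContext-style duration (microseconds) into parts.
 * Returns 0 on success, -1 if the duration is unknown or negative. */
int split_duration(int64_t duration_us, HmsDuration *out)
{
    assert(out != NULL);
    if (duration_us == MY_NOPTS_VALUE || duration_us < 0)
        return -1;
    int64_t total_secs = duration_us / MY_TIME_BASE;
    out->hours = total_secs / 3600;
    out->minutes = (int)(total_secs / 60 % 60);
    out->seconds = (int)(total_secs % 60);
    out->millis = (int)(duration_us / 1000 % 1000);
    return 0;
}

/* Print the duration as HH:MM:SS.mmm, or N/A when it is unknown. */
void print_duration(int64_t duration_us)
{
    HmsDuration d;
    if (split_duration(duration_us, &d) < 0) {
        printf("Duration: N/A\n");
        return;
    }
    printf("Duration: %02" PRId64 ":%02d:%02d.%03d\n",
           d.hours, d.minutes, d.seconds, d.millis);
}

/* bit_rate is in bits per second; divide by 1000 for kbps. */
double bit_rate_kbps(int64_t bit_rate_bps)
{
    return bit_rate_bps / 1000.0;
}
```

After opening an input, one might call print_duration(fmt_ctx->duration), where fmt_ctx is a hypothetical AVFormatContext pointer; a duration of 3723456789 prints "Duration: 01:02:03.456".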

typedef struct AVFormatContext {

const AVClass *av_class;

struct AVInputFormat *iformat; //container format of the input

struct AVOutputFormat *oformat; //container format of the output

void *priv_data;

AVIOContext *pb; //I/O context (including the buffer) for the data

int ctx_flags;

unsigned int nb_streams;

AVStream **streams; //audio/video streams

char filename[1024]; //file name

int64_t start_time;

int64_t duration; //duration (unit: microseconds; divide by 1000000 to get seconds)

int bit_rate; //bit rate (unit: bps; divide by 1000 to get kbps)

unsigned int packet_size;

int max_delay;

int flags;

#if FF_API_PROBESIZE_32

unsigned int probesize;

attribute_deprecated

int max_analyze_duration;

#endif

const uint8_t *key;

int keylen;

unsigned int nb_programs;

AVProgram **programs;

enum AVCodecID video_codec_id;

enum AVCodecID audio_codec_id;

enum AVCodecID subtitle_codec_id;

unsigned int max_index_size;

unsigned int max_picture_buffer;

unsigned int nb_chapters;

AVChapter **chapters;

AVDictionary *metadata; //metadata

int64_t start_time_realtime;

int fps_probe_size;

int error_recognition;

AVIOInterruptCB interrupt_callback;

int debug;

#define FF_FDEBUG_TS 0x0001

int64_t max_interleave_delta;

int strict_std_compliance;

int event_flags;

int max_ts_probe;

int avoid_negative_ts;

int ts_id;

int audio_preload;

int max_chunk_duration;

int max_chunk_size;

int use_wallclock_as_timestamps;

int avio_flags;

enum AVDurationEstimationMethod duration_estimation_method;

int64_t skip_initial_bytes;

unsigned int correct_ts_overflow;

int seek2any;

int flush_packets;

int probe_score;

int format_probesize;

char *codec_whitelist;

char *format_whitelist;

AVFormatInternal *internal;

int io_repositioned;

AVCodec *video_codec;

AVCodec *audio_codec;

AVCodec *subtitle_codec;

AVCodec *data_codec;

int metadata_header_padding;

void *opaque;

av_format_control_message control_message_cb;

int64_t output_ts_offset;

#if FF_API_PROBESIZE_32

int64_t max_analyze_duration2;

#else

int64_t max_analyze_duration;

#endif

#if FF_API_PROBESIZE_32

int64_t probesize2;

#else

int64_t probesize;

#endif

uint8_t *dump_separator;

enum AVCodecID data_codec_id;

int (*open_cb)(struct AVFormatContext *s, AVIOContext **p, const char *url, int flags, const AVIOInterruptCB *int_cb, AVDictionary **options);

} AVFormatContext;

AVFrame structure

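This structure is defined in frame.h. AVFrame generally stores raw (uncompressed) data, e.g. YUV or RGB for video and PCM for audio, together with some related information. For example, when decoding it stores the macroblock type table, the QP table, the motion vector table and so on, and when encoding it stores related data as well. AVFrame is therefore a very important structure when using FFmpeg for bitstream analysis. An AVFrame is allocated with av_frame_alloc() (av_image_fill_arrays() can point its data and linesize arrays at an existing image buffer) and freed with av_frame_free(). The more important members are commented in the definition.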
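To make the role of data[] and linesize[] concrete, here is a minimal sketch of what av_image_fill_arrays() does for YUV420P when align is 1: three plane pointers are set into one contiguous buffer and the line sizes are filled in. yuv420p_fill_planes() is a hypothetical helper, not an FFmpeg function; real decoders usually use larger, aligned line sizes, which is why linesize can exceed the width.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper mimicking av_image_fill_arrays() for YUV420P with
 * align = 1. Fills linesize[], and if buf is not NULL also points data[]
 * into buf. Returns the total buffer size in bytes. */
size_t yuv420p_fill_planes(uint8_t *buf, int width, int height,
                           uint8_t *data[3], int linesize[3])
{
    assert(width > 0 && height > 0);
    int chroma_w = (width + 1) / 2;  /* U and V are subsampled 2x horizontally */
    int chroma_h = (height + 1) / 2; /* ...and 2x vertically */
    size_t y_size = (size_t)width * height;
    size_t c_size = (size_t)chroma_w * chroma_h;

    linesize[0] = width;    /* no padding because align = 1 */
    linesize[1] = chroma_w;
    linesize[2] = chroma_w;
    if (buf) {
        data[0] = buf;                   /* Y plane */
        data[1] = buf + y_size;          /* U plane follows Y */
        data[2] = buf + y_size + c_size; /* V plane follows U */
    }
    return y_size + 2 * c_size;
}
```

For 1920x1080 this gives 3110400 bytes, i.e. width * height * 3 / 2.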

typedef struct AVFrame {

#define AV_NUM_DATA_POINTERS 8

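uint8_t *data[AV_NUM_DATA_POINTERS]; //decoded raw data (YUV or RGB for video, PCM for audio)

int linesize[AV_NUM_DATA_POINTERS]; //size in bytes of one "line" of data in data[]. Note: not necessarily equal to the image width; it is often larger.

uint8_t **extended_data;

int width, height; //video frame width and height (1920x1080, 1280x720...)

int nb_samples; //number of audio samples (per channel) contained in this frame

int format; //format of the decoded raw data (YUV420, YUV422, RGB24... for video; a sample format for audio)

int key_frame; //1 if this is a key frame

enum AVPictureType pict_type; //picture type (I, B, P...)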

#if FF_API_AVFRAME_LAVC

attribute_deprecated

uint8_t *base[AV_NUM_DATA_POINTERS];

#endif

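AVRational sample_aspect_ratio; //sample (pixel) aspect ratio; the display aspect ratio (16:9, 4:3...) follows from it together with width and height

int64_t pts; //presentation timestamp

int64_t pkt_pts;

int64_t pkt_dts;

int coded_picture_number; //picture number in bitstream (coding) order

int display_picture_number; //picture number in display order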

int quality;

#if FF_API_AVFRAME_LAVC

attribute_deprecated

int reference;

attribute_deprecated

int8_t *qscale_table; //QP table

attribute_deprecated

int qstride;

attribute_deprecated

int qscale_type;

attribute_deprecated

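uint8_t *mbskip_table; //macroblock skip table

int16_t (*motion_val[2])[2]; //motion vector table

attribute_deprecated

uint32_t *mb_type; //macroblock type table

attribute_deprecated

short *dct_coeff; //DCT coefficients

attribute_deprecated

int8_t *ref_index[2]; //motion estimation reference index lists (it seems only newer standards such as H.264 involve multiple reference frames)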

#endif

void *opaque;

uint64_t error[AV_NUM_DATA_POINTERS];

#if FF_API_AVFRAME_LAVC

attribute_deprecated

int type;

#endif

int repeat_pict;

int interlaced_frame; //1 if the picture is interlaced

int top_field_first;

int palette_has_changed;

#if FF_API_AVFRAME_LAVC

attribute_deprecated

int buffer_hints;

attribute_deprecated

struct AVPanScan *pan_scan;

#endif

int64_t reordered_opaque;

#if FF_API_AVFRAME_LAVC

attribute_deprecated void *hwaccel_picture_private;

attribute_deprecated

struct AVCodecContext *owner;

attribute_deprecated

void *thread_opaque;

uint8_t motion_subsample_log2; //log2 of the size of the block that one motion vector in motion_val represents

#endif

int sample_rate;

uint64_t channel_layout;

AVBufferRef *buf[AV_NUM_DATA_POINTERS];

AVBufferRef **extended_buf;

int nb_extended_buf;

AVFrameSideData **side_data;

int nb_side_data;

#define AV_FRAME_FLAG_CORRUPT (1 << 0)

int flags;

enum AVColorRange color_range;

enum AVColorPrimaries color_primaries;

enum AVColorTransferCharacteristic color_trc;

enum AVColorSpace colorspace;

enum AVChromaLocation chroma_location;

int64_t best_effort_timestamp;

int64_t pkt_pos;

int64_t pkt_duration;

AVDictionary *metadata;

int decode_error_flags;

#define FF_DECODE_ERROR_INVALID_BITSTREAM 1

#define FF_DECODE_ERROR_MISSING_REFERENCE 2

int channels;

int pkt_size;

AVBufferRef *qp_table_buf;

} AVFrame;

data[]

For packed formats (e.g. RGB24), all of the data is stored in data[0].
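In a packed frame the components are interleaved, so a pixel is addressed through data[0] and linesize[0] alone. The following is a minimal sketch for RGB24, assuming 3 bytes per pixel in R, G, B order; Rgb and rgb24_get_pixel() are hypothetical names, and rows are stepped by linesize rather than 3 * width because of possible padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One RGB24 pixel (hypothetical helper type). */
typedef struct { uint8_t r, g, b; } Rgb;

/* Read pixel (x, y) from a packed RGB24 image whose only plane is data[0].
 * Each pixel occupies 3 consecutive bytes (R, G, B); each row starts
 * linesize[0] bytes after the previous one, and linesize[0] may be larger
 * than 3 * width because of alignment padding. */
Rgb rgb24_get_pixel(const uint8_t *data0, int linesize0, int x, int y)
{
    assert(data0 != NULL && x >= 0 && y >= 0);
    const uint8_t *p = data0 + (ptrdiff_t)y * linesize0 + (ptrdiff_t)x * 3;
    Rgb px = { p[0], p[1], p[2] };
    return px;
}
```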

For planar formats (e.g. YUV420P), the planes are stored separately in data[0], data[1], data[2]... (in YUV420P, data[0] holds Y, data[1] holds U and data[2] holds V).

qscale_table

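The QP table points to a block of memory that stores the QP value of each macroblock. Macroblocks are numbered from left to right, one row after another, and each macroblock has one QP.

qscale_table[0] is the QP of the macroblock in row 1, column 1; qscale_table[1] is the QP of the macroblock in row 1, column 2; qscale_table[2] is the QP of the macroblock in row 1, column 3; and so on.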

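The table dimensions are calculated as follows (note: a macroblock is 16x16 pixels).

Macroblocks per row and per column (rounded up):

int mb_width = (pCodecCtx->width + 15) / 16;

int mb_height = (pCodecCtx->height + 15) / 16;

Row stride of the table (in FFmpeg's MPEG-family decoders each row carries one extra padding entry, and the stride is also stored in qstride; the QP of the macroblock in row y, column x is therefore qscale_table[y * mb_stride + x]):

int mb_stride = mb_width + 1;

Total number of entries in the table:

int mb_sum = mb_height * mb_stride;

For an explanation of other important members, see http://blog.csdn.net/leixiaohua1020/article/details/14214577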
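The indexing rule above can be sketched as a function that averages the QP over all macroblocks of a frame while skipping the padding entry at the end of each row. average_qp() is a hypothetical helper; it assumes a table laid out like the deprecated qscale_table, with the row stride passed in (qstride in AVFrame).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: average QP of a frame from a qscale_table-style
 * array stored row by row with a row stride of mb_stride entries. */
double average_qp(const int8_t *qscale_table, int mb_stride,
                  int width, int height)
{
    assert(qscale_table != NULL);
    int mb_width = (width + 15) / 16;   /* 16x16 macroblocks per row */
    int mb_height = (height + 15) / 16; /* number of macroblock rows */
    assert(mb_stride >= mb_width);
    long sum = 0;
    for (int y = 0; y < mb_height; y++)
        for (int x = 0; x < mb_width; x++)
            sum += qscale_table[y * mb_stride + x]; /* padding entries are never read */
    return (double)sum / ((long)mb_width * mb_height);
}
```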

AVCodecContext structure

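This structure is defined in avcodec.h and has an enormous number of members. Many of the parameters in AVCodecContext are used only when encoding, not when decoding. It is allocated by avcodec_alloc_context3(). The more important members are commented: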

typedef struct AVCodecContext {

const AVClass *av_class;

int log_level_offset;

enum AVMediaType codec_type; //type of codec (video, audio...)

const struct AVCodec *codec; //the AVCodec in use (H.264, MPEG2...)

#if FF_API_CODEC_NAME

attribute_deprecated

char codec_name[32];

#endif

enum AVCodecID codec_id; /* see AV_CODEC_ID_xxx */

unsigned int codec_tag;

#if FF_API_STREAM_CODEC_TAG

attribute_deprecated

unsigned int stream_codec_tag;

#endif

void *priv_data;

struct AVCodecInternal *internal;

void *opaque;

int bit_rate; //average bit rate

int bit_rate_tolerance;

int global_quality;

int compression_level;

int flags;

int flags2;

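uint8_t *extradata; //codec-specific extra data (e.g. for the H.264 decoder, it stores the SPS, PPS, etc.)

int extradata_size; //size of extradata in bytes

AVRational time_base; //with this value, a PTS can be converted to real time (in seconds)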

int ticks_per_frame;

int delay;

int width, height; //width and height (video only)

int coded_width, coded_height;

int gop_size;

enum AVPixelFormat pix_fmt;

#if FF_API_MOTION_EST

attribute_deprecated int me_method;

#endif

void (*draw_horiz_band)(struct AVCodecContext *s,

const AVFrame *src, int offset[AV_NUM_DATA_POINTERS],

int y, int type, int height);

enum AVPixelFormat (*get_format)(struct AVCodecContext *s, const enum AVPixelFormat * fmt);

int max_b_frames;

float b_quant_factor;

#if FF_API_RC_STRATEGY

attribute_deprecated int rc_strategy;

#define FF_RC_STRATEGY_XVID 1

#endif

int b_frame_strategy;

float b_quant_offset;

int has_b_frames;

int mpeg_quant;

float i_quant_factor;

float i_quant_offset;

float lumi_masking;

float temporal_cplx_masking;

float spatial_cplx_masking;

float p_masking;

float dark_masking;

int slice_count;

int prediction_method;

int *slice_offset;

AVRational sample_aspect_ratio;

int me_cmp;

int me_sub_cmp;

int mb_cmp;

int ildct_cmp;

int dia_size;

int last_predictor_count;

int pre_me;

int me_pre_cmp;

int pre_dia_size;

int me_subpel_quality;

#if FF_API_AFD

attribute_deprecated int dtg_active_format;

#endif

int me_range;

#if FF_API_QUANT_BIAS

attribute_deprecated int intra_quant_bias;

attribute_deprecated int inter_quant_bias;

#endif

int slice_flags;

#if FF_API_XVMC

attribute_deprecated int xvmc_acceleration;

#endif /* FF_API_XVMC */

int mb_decision;

uint16_t *intra_matrix;

uint16_t *inter_matrix;

int scenechange_threshold;

int noise_reduction;

#if FF_API_MPV_OPT

attribute_deprecated

int me_threshold;

attribute_deprecated

int mb_threshold;

#endif

int intra_dc_precision;

int skip_top;

int skip_bottom;

#if FF_API_MPV_OPT

attribute_deprecated

float border_masking;

#endif

int mb_lmin;

int mb_lmax;

int me_penalty_compensation;

int bidir_refine;

int brd_scale;

int keyint_min;

int refs; //number of reference frames for motion estimation (H.264 can use several; MPEG-2 and similar standards generally do not)

int chromaoffset;

#if FF_API_UNUSED_MEMBERS

attribute_deprecated int scenechange_factor;

#endif

int mv0_threshold;

int b_sensitivity;

enum AVColorPrimaries color_primaries;

enum AVColorTransferCharacteristic color_trc;

enum AVColorSpace colorspace;

enum AVColorRange color_range;

enum AVChromaLocation chroma_sample_location;

int slices;

enum AVFieldOrder field_order;

int sample_rate; //sample rate (audio)

int channels; //number of channels (audio)

enum AVSampleFormat sample_fmt; //sample format (audio)

int frame_size;

int frame_number;

int block_align;

int cutoff;

#if FF_API_REQUEST_CHANNELS

attribute_deprecated int request_channels;

#endif

uint64_t channel_layout;

uint64_t request_channel_layout;

enum AVAudioServiceType audio_service_type;

enum AVSampleFormat request_sample_fmt;

#if FF_API_GET_BUFFER

attribute_deprecated

int (*get_buffer)(struct AVCodecContext *c, AVFrame *pic);

attribute_deprecated

void (*release_buffer)(struct AVCodecContext *c, AVFrame *pic);

attribute_deprecated

int (*reget_buffer)(struct AVCodecContext *c, AVFrame *pic);

#endif

int (*get_buffer2)(struct AVCodecContext *s, AVFrame *frame, int flags);

int refcounted_frames;

float qcompress; ///< amount of qscale change between easy & hard scenes (0.0-1.0)

float qblur; ///< amount of qscale smoothing over time (0.0-1.0)

int qmin;

int qmax;

int max_qdiff;

#if FF_API_MPV_OPT

attribute_deprecated

float rc_qsquish;

attribute_deprecated

float rc_qmod_amp;

attribute_deprecated

int rc_qmod_freq;

#endif

int rc_buffer_size;

int rc_override_count;

RcOverride *rc_override;

#if FF_API_MPV_OPT

attribute_deprecated

const char *rc_eq;

#endif

int rc_max_rate;

int rc_min_rate;

#if FF_API_MPV_OPT

attribute_deprecated

float rc_buffer_aggressivity;

attribute_deprecated

float rc_initial_cplx;

#endif

float rc_max_available_vbv_use;

float rc_min_vbv_overflow_use;

int rc_initial_buffer_occupancy;

int coder_type;

int context_model;

#if FF_API_MPV_OPT

attribute_deprecated

int lmin;

attribute_deprecated

int lmax;

#endif

int frame_skip_threshold;

int frame_skip_factor;

int frame_skip_exp;

int frame_skip_cmp;

int trellis;

int min_prediction_order;

int max_prediction_order;

int64_t timecode_frame_start;

void (*rtp_callback)(struct AVCodecContext *avctx, void *data, int size, int mb_nb);

int rtp_payload_size;

int mv_bits;

int header_bits;

int i_tex_bits;

int p_tex_bits;

int i_count;

int p_count;

int skip_count;

int misc_bits;

int frame_bits;

char *stats_out;

char *stats_in;

int workaround_bugs;

int strict_std_compliance;

int error_concealment;

int debug;

#if FF_API_DEBUG_MV

int debug_mv;

#endif

int err_recognition;

int64_t reordered_opaque;

struct AVHWAccel *hwaccel;

void *hwaccel_context;

uint64_t error[AV_NUM_DATA_POINTERS];

int dct_algo;

int idct_algo;

int bits_per_coded_sample;

int bits_per_raw_sample;

#if FF_API_LOWRES

int lowres;

#endif

#if FF_API_CODED_FRAME

attribute_deprecated AVFrame *coded_frame;

#endif

int thread_count;

int thread_type;

int active_thread_type;

int thread_safe_callbacks;

int (*execute)(struct AVCodecContext *c, int (*func)(struct AVCodecContext *c2, void *arg), void *arg2, int *ret, int count, int size);

int (*execute2)(struct AVCodecContext *c, int (*func)(struct AVCodecContext *c2, void *arg, int jobnr, int threadnr), void *arg2, int *ret, int count);

#if FF_API_THREAD_OPAQUE

attribute_deprecated

void *thread_opaque;

#endif

int nsse_weight;

int profile; //profile (H.264 has them, and other coding standards probably do too)

int level; //level (similar in role to profile)

enum AVDiscard skip_loop_filter;

enum AVDiscard skip_idct;

enum AVDiscard skip_frame;

uint8_t *subtitle_header;

int subtitle_header_size;

#if FF_API_ERROR_RATE

attribute_deprecated

int error_rate;

#endif

#if FF_API_CODEC_PKT

attribute_deprecated

AVPacket *pkt;

#endif

uint64_t vbv_delay;

int side_data_only_packets;

int initial_padding;

AVRational framerate;

enum AVPixelFormat sw_pix_fmt;

AVRational pkt_timebase;

const AVCodecDescriptor *codec_descriptor;

#if !FF_API_LOWRES

int lowres;

#endif

int64_t pts_correction_num_faulty_pts; /// Number of incorrect PTS values so far

int64_t pts_correction_num_faulty_dts; /// Number of incorrect DTS values so far

int64_t pts_correction_last_pts; /// PTS of the last frame

int64_t pts_correction_last_dts; /// DTS of the last frame

char *sub_charenc;

int sub_charenc_mode;

int skip_alpha;

int seek_preroll;

#if !FF_API_DEBUG_MV

int debug_mv;

#endif

uint16_t *chroma_intra_matrix;

uint8_t *dump_separator;

char *codec_whitelist;

unsigned properties;

} AVCodecContext;
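The time_base member commented above turns a timestamp into seconds: seconds = pts * time_base.num / time_base.den. FFmpeg's own helper for the rational part is av_q2d(); the sketch below avoids FFmpeg headers, so Rational is a hypothetical stand-in for AVRational and pts_to_seconds() is a hypothetical name. Multiplying before dividing keeps simple cases such as 50 ticks at 1/25 exact.

```c
#include <assert.h>
#include <stdint.h>

/* Minimal stand-in for FFmpeg's AVRational (numerator/denominator). */
typedef struct { int num, den; } Rational;

/* Convert a timestamp expressed in time_base units to seconds. */
double pts_to_seconds(int64_t pts, Rational time_base)
{
    assert(time_base.den != 0);
    return (double)pts * time_base.num / time_base.den;
}
```

For a 25 fps stream with a time base of 1/25, a PTS of 50 corresponds to 2.0 seconds.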

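AVIOContext structure

This structure is defined in avio.h. AVIOContext is the structure FFmpeg uses to manage input and output data, i.e. for I/O such as reading and writing files or the RTMP protocol. It is allocated by avio_alloc_context(). Some members are commented: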

typedef struct AVIOContext {

const AVClass *av_class;

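unsigned char *buffer; //start of the buffer

int buffer_size; //buffer size (32768 by default)

unsigned char *buf_ptr; //current read position in the buffer

unsigned char *buf_end; //end of the data in the buffer

void *opaque; //private data passed to the callbacks (a URLContext when the built-in protocols are used)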

int (*read_packet)(void *opaque, uint8_t *buf, int buf_size);

int (*write_packet)(void *opaque, uint8_t *buf, int buf_size);

int64_t (*seek)(void *opaque, int64_t offset, int whence);

int64_t pos; /**< position in the file of the current buffer */

int must_flush; /**< true if the next seek should flush */

int eof_reached; /**< true if eof reached */

int write_flag; /**< true if open for writing */

int max_packet_size;

unsigned long checksum;

unsigned char *checksum_ptr;

unsigned long (*update_checksum)(unsigned long checksum, const uint8_t *buf, unsigned int size);

int error;

int (*read_pause)(void *opaque, int pause);

int64_t (*read_seek)(void *opaque, int stream_index,

int64_t timestamp, int flags);

int seekable;

int64_t maxsize;

int direct;

int64_t bytes_read;

int seek_count;

int writeout_count;

int orig_buffer_size;

int short_seek_threshold;

} AVIOContext;

For an explanation of the important members, see http://blog.csdn.net/leixiaohua1020/article/details/14215369
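AVIOContext pulls data through its read_packet callback, which is also how custom input is plugged in: avio_alloc_context() takes a buffer, its size, a write flag, an opaque pointer and read_packet/write_packet/seek callbacks. Below is a minimal sketch of a read_packet callback that serves data from memory. MemSource, mem_read_packet() and MEM_SOURCE_EOF are hypothetical names, and MEM_SOURCE_EOF stands in for AVERROR_EOF so that the snippet compiles without FFmpeg headers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical opaque state for reading from an in-memory buffer. */
typedef struct {
    const uint8_t *data;
    size_t size;
    size_t pos;
} MemSource;

/* Stand-in for AVERROR_EOF; real code would return AVERROR_EOF. */
#define MEM_SOURCE_EOF (-1)

/* Same signature as AVIOContext.read_packet: copy up to buf_size bytes
 * into buf and return how many were copied, or MEM_SOURCE_EOF at the end. */
int mem_read_packet(void *opaque, uint8_t *buf, int buf_size)
{
    MemSource *src = opaque;
    assert(src != NULL && buf != NULL && buf_size >= 0);
    size_t remaining = src->size - src->pos;
    if (remaining == 0)
        return MEM_SOURCE_EOF;
    size_t n = (size_t)buf_size < remaining ? (size_t)buf_size : remaining;
    memcpy(buf, src->data + src->pos, n);
    src->pos += n;
    return (int)n;
}
```

In real code, a MemSource pointer would be passed as opaque and mem_read_packet as the read_packet argument of avio_alloc_context(), and the resulting context assigned to AVFormatContext.pb before calling avformat_open_input().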

AVCodec structure

This structure is defined in avcodec.h:

typedef struct AVCodec {

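const char *name; //short name of the codec

const char *long_name; //full, longer descriptive name of the codec

enum AVMediaType type; //media type: video, audio or subtitle

enum AVCodecID id; //codec ID (several AVCodecs, e.g. an encoder and a decoder, may share the same ID)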

int capabilities;

const AVRational *supported_framerates; //supported frame rates (video only)

const enum AVPixelFormat *pix_fmts; //supported pixel formats (video only)

const int *supported_samplerates; //supported sample rates (audio only)

const enum AVSampleFormat *sample_fmts; //supported sample formats (audio only)

const uint64_t *channel_layouts; //supported channel layouts (audio only)

uint8_t max_lowres; ///< maximum value for lowres supported by the decoder, no direct access, use av_codec_get_max_lowres()

const AVClass *priv_class; ///< AVClass for the private context

const AVProfile *profiles; ///< array of recognized profiles, or NULL if unknown, array is terminated by {FF_PROFILE_UNKNOWN}

int priv_data_size; //size of the private data

struct AVCodec *next;

int (*init_thread_copy)(AVCodecContext *);

int (*update_thread_context)(AVCodecContext *dst, const AVCodecContext *src);

const AVCodecDefault *defaults;

void (*init_static_data)(struct AVCodec *codec);

int (*init)(AVCodecContext *);

int (*encode_sub)(AVCodecContext *, uint8_t *buf, int buf_size,

const struct AVSubtitle *sub);

int (*encode2)(AVCodecContext *avctx, AVPacket *avpkt, const AVFrame *frame,

int *got_packet_ptr);

int (*decode)(AVCodecContext *, void *outdata, int *outdata_size, AVPacket *avpkt);

int (*close)(AVCodecContext *);

void (*flush)(AVCodecContext *);

int caps_internal;

} AVCodec;

Every codec corresponds to one AVCodec structure; for example, the H.264 decoder is defined as AVCodec ff_h264_decoder.
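Registered codecs are chained through the next member, and capability lists such as supported_samplerates are terminated arrays (0 for sample rates, NULL meaning "unknown"). A minimal sketch of both ideas, using MiniCodec, a hypothetical and heavily trimmed stand-in for AVCodec; the lookup is only similar in spirit to avcodec_find_decoder_by_name(), which additionally checks whether each codec is a decoder.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, trimmed stand-in for AVCodec. */
typedef struct MiniCodec {
    const char *name;                 /* short name, e.g. "h264" */
    const int *supported_samplerates; /* 0-terminated; NULL = unknown */
    struct MiniCodec *next;           /* next registered codec */
} MiniCodec;

/* Walk the singly linked list of codecs and match by short name. */
const MiniCodec *find_codec_by_name(const MiniCodec *first, const char *name)
{
    for (const MiniCodec *c = first; c; c = c->next)
        if (strcmp(c->name, name) == 0)
            return c;
    return NULL;
}

/* Returns 1 if rate is listed, 0 if not, -1 if the list is NULL (unknown). */
int supports_samplerate(const MiniCodec *c, int rate)
{
    assert(c != NULL);
    if (!c->supported_samplerates)
        return -1;
    for (const int *p = c->supported_samplerates; *p != 0; p++)
        if (*p == rate)
            return 1;
    return 0;
}
```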

AVStream structure

This structure is defined in avformat.h. AVStream stores the information of one video/audio stream. It is created by avformat_new_stream():

typedef struct AVStream {

int index; //index identifying this video/audio stream

int id;

AVCodecContext *codec; //the AVCodecContext of this video/audio stream (the two correspond one to one)

void *priv_data;

#if FF_API_LAVF_FRAC

attribute_deprecated

struct AVFrac pts;

#endif

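AVRational time_base; //time base. With this value, PTS and DTS can be converted to real time. Other FFmpeg structures also have this field, but in my experience only the time_base in AVStream is usable. PTS * time_base = real time (in seconds)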

int64_t start_time;

int64_t duration; //duration of this video/audio stream (in time_base units)

int64_t nb_frames; ///< number of frames in this stream if known or 0

int disposition; /**< AV_DISPOSITION_* bit field */

enum AVDiscard discard; ///< Selects which packets can be discarded at will and do not need to be demuxed.

AVRational sample_aspect_ratio;

AVDictionary *metadata; //metadata

AVRational avg_frame_rate; //average frame rate (for video, this one matters a lot)

AVPacket attached_pic; //attached picture, e.g. the album cover embedded in some MP3 or AAC files

AVPacketSideData *side_data;

int nb_side_data;

int event_flags;

struct {

int64_t last_dts;

int64_t duration_gcd;

int duration_count;

int64_t rfps_duration_sum;

double (*duration_error)[2][MAX_STD_TIMEBASES];

int64_t codec_info_duration;

int64_t codec_info_duration_fields;

int found_decoder;

int64_t last_duration;

int64_t fps_first_dts;

int fps_first_dts_idx;

int64_t fps_last_dts;

int fps_last_dts_idx;

} *info;

int pts_wrap_bits; /**< number of bits in pts (used for wrapping control) */

int64_t first_dts;

int64_t cur_dts;

int64_t last_IP_pts;

int last_IP_duration;

int probe_packets;

int codec_info_nb_frames;

enum AVStreamParseType need_parsing;

struct AVCodecParserContext *parser;

struct AVPacketList *last_in_packet_buffer;

AVProbeData probe_data;

int64_t pts_buffer[MAX_REORDER_DELAY+1];

AVIndexEntry *index_entries;

int nb_index_entries;

unsigned int index_entries_allocated_size;

AVRational r_frame_rate;

int stream_identifier;

int64_t interleaver_chunk_size;

int64_t interleaver_chunk_duration;

int request_probe;

int skip_to_keyframe;

int skip_samples;

int64_t start_skip_samples;

int64_t first_discard_sample;

int64_t last_discard_sample;

int nb_decoded_frames;

int64_t mux_ts_offset;

int64_t pts_wrap_reference;

int pts_wrap_behavior;

int update_initial_durations_done;

int64_t pts_reorder_error[MAX_REORDER_DELAY+1];

uint8_t pts_reorder_error_count[MAX_REORDER_DELAY+1];

int64_t last_dts_for_order_check;

uint8_t dts_ordered;

uint8_t dts_misordered;

int inject_global_side_data;

char *recommended_encoder_configuration;

AVRational display_aspect_ratio;

struct FFFrac *priv_pts;

} AVStream;
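Because every AVStream carries its own time_base, timestamps often have to be moved from one time base to another, for instance from a 1/90000 stream clock to milliseconds; FFmpeg provides av_rescale_q() for this. The sketch below reimplements the idea with rounding to nearest and halfway cases away from zero (the default rounding of av_rescale_q()), plus a frame-rate helper for avg_frame_rate. Rational, rescale_ts() and frame_rate() are hypothetical names, and unlike av_rescale_q() this version does not guard against 64-bit overflow, so it suits only moderate values.

```c
#include <assert.h>
#include <stdint.h>

typedef struct { int num, den; } Rational; /* stand-in for AVRational */

/* Rescale ts from time base `from` to time base `to`, rounding to nearest
 * with halfway cases away from zero. No overflow protection. */
int64_t rescale_ts(int64_t ts, Rational from, Rational to)
{
    assert(from.den != 0 && to.num != 0);
    int64_t num = ts * from.num * to.den;
    int64_t den = (int64_t)from.den * to.num;
    if (den < 0) { num = -num; den = -den; }
    /* C division truncates toward zero, so adding/subtracting den/2 first
     * rounds halves away from zero. */
    return (num >= 0 ? num + den / 2 : num - den / 2) / den;
}

/* Frame rate as a double, e.g. from avg_frame_rate; 0/0 means unknown. */
double frame_rate(Rational avg_frame_rate)
{
    return avg_frame_rate.den ? (double)avg_frame_rate.num / avg_frame_rate.den : 0.0;
}
```

For example, a PTS of 3600 in a 1/90000 time base becomes 40 in a 1/1000 (millisecond) time base.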

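AVPacket structure

This structure is defined in avcodec.h. AVPacket stores compressed data (for video, bitstream data such as H.264; for audio, bitstream data such as AAC/MP3). It is initialized by av_init_packet() or av_new_packet() and released by av_free_packet().

Neither av_init_packet() nor av_new_packet() allocates the AVPacket structure itself. av_init_packet() only sets the optional fields to default values and does not touch data or size; av_new_packet() allocates a buffer of the requested size for the data member to point to, which is where the payload is stored. Likewise, av_free_packet() frees only the buffer that the AVPacket's data member points to, not the structure.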
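The ownership rules above can be sketched with a trimmed stand-in type: the packet struct lives wherever the caller puts it (here, on the stack), and only the payload is heap-allocated and freed. MiniPacket and the mini_* functions are hypothetical and only mirror the documented behavior; the real av_new_packet() also appends zeroed padding bytes, which the extra byte here merely imitates.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical, trimmed stand-in for AVPacket. */
typedef struct {
    uint8_t *data;
    int size;
    int64_t pts, dts;
    int stream_index;
} MiniPacket;

#define MINI_NOPTS INT64_MIN /* same value as AV_NOPTS_VALUE */

/* Like av_init_packet(): set optional fields, leave data and size alone. */
void mini_init_packet(MiniPacket *pkt)
{
    pkt->pts = MINI_NOPTS;
    pkt->dts = MINI_NOPTS;
    pkt->stream_index = 0;
}

/* Like av_new_packet(): initialize, then allocate only the payload. */
int mini_new_packet(MiniPacket *pkt, int size)
{
    assert(size >= 0);
    mini_init_packet(pkt);
    /* +1 imitates padding and keeps calloc() from being asked for 0 bytes */
    pkt->data = calloc((size_t)size + 1, 1);
    if (!pkt->data)
        return -1;
    pkt->size = size;
    return 0;
}

/* Like av_free_packet(): free the payload, not the struct itself. */
void mini_free_packet(MiniPacket *pkt)
{
    free(pkt->data);
    pkt->data = NULL;
    pkt->size = 0;
}
```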

The important members are commented:

typedef struct AVPacket {

AVBufferRef *buf;

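int64_t pts; //presentation timestamp

int64_t dts; //decoding timestamp

uint8_t *data; //compressed data

//For H.264, for example, the data of one AVPacket usually corresponds to one compressed frame, i.e. one or more NAL units.

//Note: "corresponds" here does not mean "is identical"; there are small differences between the two (see the article "Using the FFmpeg libraries to extract the H.264 bitstream from a multimedia file").

//This is why, when processing audio/video with FFmpeg, the data of the AVPacket obtained can often be written directly to a file to get the audio/video bitstream file.

int size; //size of data in bytes

int stream_index; //index of the video/audio stream this AVPacket belongs to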

int flags;

AVPacketSideData *side_data;

int side_data_elems;

int duration;

#if FF_API_DESTRUCT_PACKET

attribute_deprecated

void (*destruct)(struct AVPacket *);

attribute_deprecated

void *priv;

#endif

int64_t pos; ///< byte position in stream, -1 if unknown

int64_t convergence_duration;

} AVPacket;
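Writing packet data straight to a file, as described in the data comment, amounts to filtering packets by stream_index and appending their payloads. A minimal sketch with a hypothetical MiniPacket type and dump_stream() helper follows. One caveat, hedged: H.264 packets demuxed from MP4, FLV or MKV are usually length-prefixed rather than Annex B, so such a dump is typically not a playable .h264 file unless the packets first pass through the h264_mp4toannexb bitstream filter.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

typedef struct {            /* hypothetical stand-in for AVPacket */
    const uint8_t *data;
    int size;
    int stream_index;
} MiniPacket;

/* Append the payload of every packet belonging to stream `wanted` to out.
 * Returns the number of bytes written, or -1 on a write error. */
long dump_stream(const MiniPacket *pkts, int n, int wanted, FILE *out)
{
    assert(out != NULL && (pkts != NULL || n == 0));
    long total = 0;
    for (int i = 0; i < n; i++) {
        if (pkts[i].stream_index != wanted)
            continue; /* packet belongs to another stream, e.g. audio */
        if (fwrite(pkts[i].data, 1, (size_t)pkts[i].size, out) != (size_t)pkts[i].size)
            return -1;
        total += pkts[i].size;
    }
    return total;
}
```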