Rancher 2.4.4 High-Availability (HA) Cluster Deployment: Offline Installation

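Table of Contents

- Planning

- Preparation

- Deployment

- I. Install the load balancer

- 1. Configure the official Nginx Yum repository

- 2. Install the latest Nginx

- 3. Configure Nginx

- 4. Start Nginx

- II. Install the Harbor image registry

- 1. Download the package

- 2. Configure

- 3. Install

- III. Sync images to the private registry

- 1. Find the assets required for your Rancher version

- 2. Collect the cert-manager images

- IV. Install Kubernetes with RKE

- 1. Install rke, helm, and kubectl (only needed on rancher1)

- 2. Create the `rancher` user on each host and distribute SSH keys

- 3. Create the RKE configuration file

- 4. After configuring rancher-cluster.yml, bring up the Kubernetes cluster

- 5. Test the cluster and check its status

- V. Install Rancher

- 1. Add the Helm chart repository

- 2. Fetch the `cert-manager Chart` on an Internet-connected host (when using Rancher's default self-signed certificate)

- 3. Render the chart templates with the desired parameters

- 4. Download the CRD file required by cert-manager

- 5. Render the Rancher templates

- 6. Install cert-manager

- 7. Install Rancher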

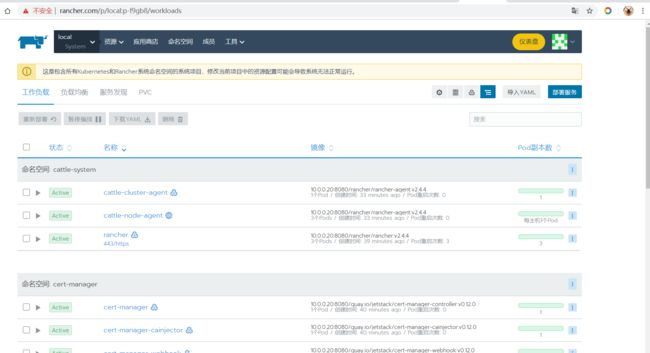

- 8. Check the status after Rancher is created

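Planning:

The VMs in this setup have two NICs, so each host has two IP addresses. The 10.0.0.x addresses can largely be ignored; the exception is the load balancer, whose 10.0.0.x address serves as the public entry point.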

| IP1 | IP2 | Description |
|---|---|---|
| 10.0.0.20 | 172.16.1.20 | Nginx load balancer, Harbor |
| 10.0.0.21 | 172.16.1.21 | rancher1 |
| 10.0.0.22 | 172.16.1.22 | rancher2 |
| 10.0.0.23 | 172.16.1.23 | rancher3 |

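Architecture recommended by the official documentation:

- The DNS name for Rancher should resolve to a layer-4 load balancer.

- The load balancer should forward TCP/80 and TCP/443 traffic to all 3 nodes of the Kubernetes cluster.

- The Ingress controller redirects HTTP to HTTPS and terminates SSL/TLS on TCP/443.

- The Ingress controller forwards traffic to TCP/80 on the pods of the Rancher deployment.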

Preparation

1. Host OS tuning

echo "

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv4.ip_forward=1

net.ipv4.conf.all.forwarding=1

net.ipv4.neigh.default.gc_thresh1=4096

net.ipv4.neigh.default.gc_thresh2=6144

net.ipv4.neigh.default.gc_thresh3=8192

net.ipv4.neigh.default.gc_interval=60

net.ipv4.neigh.default.gc_stale_time=120

# Reference: https://github.com/prometheus/node_exporter#disabled-by-default

kernel.perf_event_paranoid=-1

#sysctls for k8s node config

net.ipv4.tcp_slow_start_after_idle=0

net.core.rmem_max=16777216

fs.inotify.max_user_watches=524288

kernel.softlockup_all_cpu_backtrace=1

kernel.softlockup_panic=0

kernel.watchdog_thresh=30

fs.file-max=2097152

fs.inotify.max_user_instances=8192

fs.inotify.max_queued_events=16384

vm.max_map_count=262144

fs.may_detach_mounts=1

net.core.netdev_max_backlog=16384

net.ipv4.tcp_wmem=4096 12582912 16777216

net.core.wmem_max=16777216

net.core.somaxconn=32768

net.ipv4.tcp_max_syn_backlog=8096

net.ipv4.tcp_rmem=4096 12582912 16777216

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

net.ipv6.conf.lo.disable_ipv6=1

kernel.yama.ptrace_scope=0

vm.swappiness=0

# Append the PID to core dump file names.

kernel.core_uses_pid=1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route=0

net.ipv4.conf.all.accept_source_route=0

# Promote secondary addresses when the primary address is removed

net.ipv4.conf.default.promote_secondaries=1

net.ipv4.conf.all.promote_secondaries=1

# Enable hard and soft link protection

fs.protected_hardlinks=1

fs.protected_symlinks=1

# Source address (reverse-path) validation

# see details in https://help.aliyun.com/knowledge_detail/39428.html

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce=2

net.ipv4.conf.all.arp_announce=2

# see details in https://help.aliyun.com/knowledge_detail/41334.html

net.ipv4.tcp_max_tw_buckets=5000

net.ipv4.tcp_syncookies=1

net.ipv4.tcp_fin_timeout=30

net.ipv4.tcp_synack_retries=2

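kernel.sysrq=1

" >> /etc/sysctl.conf

# Load br_netfilter (the net.bridge.* keys only exist once it is loaded), then apply the settings

modprobe br_netfilter && sysctl -p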

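Because the block above appends with `>>`, running it more than once (or appending keys that /etc/sysctl.conf already sets) leaves duplicate keys, and `sysctl -p` simply applies whichever occurrence comes last. The sketch below lists the keys assigned more than once in a sysctl file; `sysctl_dup_keys` is a hypothetical helper, not part of any tool.

```shell
#!/bin/sh
# sysctl_dup_keys FILE  (hypothetical helper)
# Prints, sorted, every sysctl key that is assigned more than once in FILE.
# Blank lines and comment lines (starting with # or ;) are ignored, and
# whitespace around "=" is tolerated, e.g. "net.ipv4.conf.default.arp_announce = 2".
sysctl_dup_keys() {
  awk -F'=' '
    /^[ \t]*$/    { next }                 # blank line
    /^[ \t]*[#;]/ { next }                 # comment line
    {
      key = $1
      gsub(/[ \t]/, "", key)               # trim spaces around the key
      if (key != "") count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1" | LC_ALL=C sort
}
```

For example, `sysctl_dup_keys /etc/sysctl.conf` prints nothing when every key is set exactly once.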
2. Install Docker on all machines

# 1) Install required system utilities

yum install -y yum-utils device-mapper-persistent-data lvm2

# 2) Add the Docker CE repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 3) Refresh the cache and install Docker CE

yum makecache fast

yum -y install docker-ce

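# 4) Switch to a domestic (China) registry mirror

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF
{
"registry-mirrors": ["https://7kmehv9e.mirror.aliyuncs.com"]
}
EOF

# 5) Start Docker and enable it at boot

systemctl start docker && systemctl enable docker

3. Install helm on the Nginx machine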

[root@rancher0 ~]# wget https://docs.rancher.cn/download/helm/helm-v3.0.3-linux-amd64.tar.gz \
&& tar xf helm-v3.0.3-linux-amd64.tar.gz \
&& cd linux-amd64 \
&& mv helm /usr/sbin/

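Deployment:

I. Install the load balancer

1. Configure the official Nginx Yum repository

# Load balancing needs the stream module, so install the latest Nginx (1.18 at the time of writing)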

vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=0

enabled=1

2. Install the latest Nginx

yum -y install nginx

3. Configure Nginx

[root@nginx ~]# cd /etc/nginx && rm -rf conf.d && mv nginx.conf nginx.conf.bak

[root@nginx ~]# vim /etc/nginx/nginx.conf

worker_processes 4;

worker_rlimit_nofile 40000;

events {

worker_connections 8192;

}

stream {

upstream rancher_servers_http {

least_conn;

server 172.16.1.21:80 max_fails=3 fail_timeout=5s;

server 172.16.1.22:80 max_fails=3 fail_timeout=5s;

server 172.16.1.23:80 max_fails=3 fail_timeout=5s;

}

server {

listen 80;

proxy_pass rancher_servers_http;

}

upstream rancher_servers_https {

least_conn;

server 172.16.1.21:443 max_fails=3 fail_timeout=5s;

server 172.16.1.22:443 max_fails=3 fail_timeout=5s;

server 172.16.1.23:443 max_fails=3 fail_timeout=5s;

}

server {

listen 443;

proxy_pass rancher_servers_https;

}

}
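The two upstream blocks differ only in the port, so when nodes are added or replaced it can be less error-prone to generate the stream section than to edit it by hand. A minimal sketch (the function name `render_rancher_stream_conf` is hypothetical) that reproduces the layout above for any list of node IPs:

```shell
#!/bin/sh
# render_rancher_stream_conf NODE_IP...  (hypothetical helper)
# Prints a stream{} section equivalent to the one above: for each of the
# ports 80 and 443, a least_conn upstream listing every node, followed by a
# server{} that listens on that port and proxies to the upstream.
render_rancher_stream_conf() {
  local port name ip
  echo "stream {"
  for port in 80 443; do
    if [ "$port" = 80 ]; then name=rancher_servers_http; else name=rancher_servers_https; fi
    echo "    upstream ${name} {"
    echo "        least_conn;"
    for ip in "$@"; do
      echo "        server ${ip}:${port} max_fails=3 fail_timeout=5s;"
    done
    echo "    }"
    echo "    server {"
    echo "        listen ${port};"
    echo "        proxy_pass ${name};"
    echo "    }"
  done
  echo "}"
}
```

For example, `render_rancher_stream_conf 172.16.1.21 172.16.1.22 172.16.1.23` prints the three-node section; after placing it in nginx.conf, `nginx -t` checks the syntax before `systemctl reload nginx`.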

4. Start Nginx

systemctl start nginx && systemctl enable nginx

II. Install the Harbor image registry

1. Download the package

wget https://docs.rancher.cn/download/harbor/harbor-online-installer-v2.0.0.tgz

tar xf harbor-online-installer-v2.0.0.tgz

mv harbor /opt/ && cd /opt/harbor

2. Configure

## The https (port 443) section is commented out in this setup, so SSL is not used.

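# Harbor 2.0 ships a template; create harbor.yml from it, then edit it

[root@nginx harbor]# cp harbor.yml.tmpl harbor.yml

[root@nginx harbor]# vim harbor.yml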

[root@nginx harbor]# grep "^\s*[^# \t].*$" harbor.yml

hostname: 10.0.0.20
http:
  port: 8080
harbor_admin_password: Harbor12345
database:
  password: root123
  max_idle_conns: 50
  max_open_conns: 100
data_volume: /data
clair:
  updaters_interval: 12
trivy:
  ignore_unfixed: false
  skip_update: false
  insecure: false
jobservice:
  max_job_workers: 10
notification:
  webhook_job_max_retry: 10
chart:
  absolute_url: disabled
log:
  level: info
  local:
    rotate_count: 50
    rotate_size: 200M
    location: /var/log/harbor
_version: 2.0.0
proxy:
  http_proxy:
  https_proxy:
  no_proxy:
  components:
    - core
    - jobservice
    - clair
    - trivy

3. Install

# 1. install.sh calls docker-compose, so install docker-compose first (on CentOS 7 the package comes from EPEL: yum install -y epel-release)

[root@nginx harbor]# yum install docker-compose -y

# 2. Run the Harbor installer

[root@nginx harbor]# sh install.sh

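After installation, install.sh generates a docker-compose.yml in the installer directory; use docker-compose commands there to manage Harbor's lifecycle.

Once installed, log in at http://10.0.0.20:8080 with the default username admin and password Harbor12345.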

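III. Sync images to the private registry

1. Find the assets required for your Rancher version

https://github.com/rancher/rancher/releases

Open the release matching your Rancher version and download the following files: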

| Release file | Description |
|---|---|
| rancher-images.txt | The list of images needed to install Rancher, create clusters, and run Rancher tools. |
| rancher-save-images.sh | Pulls every image listed in rancher-images.txt from Docker Hub and saves them as rancher-images.tar.gz. |
| rancher-load-images.sh | Loads the images from rancher-images.tar.gz and pushes them to your own private registry. |

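The image list I compiled after completing this offline installation is rancher_images.txt; you can try installing with it. (The list downloaded from GitHub by following the official steps seemed to be missing some images.)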

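2. Collect the cert-manager images

For an HA install that uses Rancher's default self-signed TLS certificate, the cert-manager images must also be added to rancher-images.txt. If you use your own certificate, skip this step.

2.1 Fetch the cert-manager Helm chart (v0.12.0 here), render the templates, and extract the image names: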

helm repo add jetstack https://charts.jetstack.io

helm repo update

helm fetch jetstack/cert-manager --version v0.12.0

helm template ./cert-manager-v0.12.0.tgz | grep -oP '(?<=image: ").*(?=")' >> ./rancher-images.txt

2.2 Sort the image list and remove duplicate entries:

sort -u rancher-images.txt -o rancher-images.txt

2.3 Save the images to your workstation

# 1. Make rancher-save-images.sh executable:

chmod +x rancher-save-images.sh

# 2. Run rancher-save-images.sh with --image-list ./rancher-images.txt to build an archive of all required images

./rancher-save-images.sh --image-list ./rancher-images.txt

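Result:

Docker starts pulling the images needed for the offline installation, which takes a few minutes. When it finishes, an archive named rancher-images.tar.gz is written to the current directory; confirm that it exists.

2.4 Push the images to the private registry

rancher-images.txt and rancher-images.tar.gz must be in the same directory on the workstation as the rancher-load-images.sh script you run.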
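Harbor, to my knowledge, only accepts pushes into projects that already exist, so the target projects (for example `rancher`, and `quay.io` for the cert-manager images) should be created in the Harbor web UI before the load script runs. A small sketch that derives the needed project names from rancher-images.txt; `harbor_projects_needed` is a hypothetical helper, and reporting images without a `/` as `library` is an assumption about how your load script names them.

```shell
#!/bin/sh
# harbor_projects_needed LIST_FILE  (hypothetical helper)
# Prints the distinct first path segments of the images in LIST_FILE, e.g.
# "rancher" for rancher/rancher:v2.4.4 and "quay.io" for
# quay.io/jetstack/cert-manager-controller:v0.12.0. Each of them has to exist
# as a Harbor project before images can be pushed under it.
# ASSUMPTION: an image without any "/" (e.g. "busybox") is reported as "library".
harbor_projects_needed() {
  awk '
    /^[ \t\r]*$/ { next }                   # skip blank lines
    {
      slash = index($1, "/")
      if (slash > 0) print substr($1, 1, slash - 1)
      else print "library"
    }
  ' "$1" | LC_ALL=C sort -u
}
```

For example, `harbor_projects_needed rancher-images.txt` prints one project name per line.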

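First configure /etc/docker/daemon.json. Docker talks to registries over HTTPS by default, while this Harbor instance serves plain HTTP on port 8080, so without the insecure-registries entry Docker reports: Error response from daemon: Get https://10.0.0.20:8080/v2/: http: server gave HTTP response to HTTPS client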

vim /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.20:8080"],

"registry-mirrors": ["https://7kmehv9e.mirror.aliyuncs.com"]

}

After editing, restart Docker: systemctl restart docker
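The same daemon.json is needed again on every host before `rke up`, so it can help to produce it from two parameters rather than retyping it. A minimal sketch; `render_daemon_json` is a hypothetical name, and redirecting its output replaces the whole file, so any other settings already in daemon.json would have to be merged by hand.

```shell
#!/bin/sh
# render_daemon_json REGISTRY MIRROR  (hypothetical helper)
# Prints a daemon.json that marks REGISTRY (the plain-HTTP Harbor) as an
# insecure registry and uses MIRROR as the registry mirror, like the file above.
render_daemon_json() {
  local registry="$1" mirror="$2"
  cat <<EOF
{
  "insecure-registries": ["${registry}"],
  "registry-mirrors": ["${mirror}"]
}
EOF
}
```

For example: `render_daemon_json 10.0.0.20:8080 https://7kmehv9e.mirror.aliyuncs.com > /etc/docker/daemon.json && systemctl restart docker`.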

# 1. Log in to the private registry:

[root@nginx images]# docker login 10.0.0.20:8080

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

# 2. Make rancher-load-images.sh executable:

chmod +x rancher-load-images.sh

# 3. Use rancher-load-images.sh to extract the images from rancher-images.tar.gz, re-tag them according to the list in rancher-images.txt, and push them to your private registry

./rancher-load-images.sh --image-list ./rancher-images.txt --registry 10.0.0.20:8080
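To spot-check the push, it helps to know the name each image should have in Harbor. Judging by the references used later in this guide (for example 10.0.0.20:8080/quay.io/jetstack/cert-manager-controller), the registry address is simply prefixed to the original image name; the sketch below assumes exactly that, so compare it with rancher-load-images.sh for your release. `private_image_refs` is a hypothetical name.

```shell
#!/bin/sh
# private_image_refs REGISTRY LIST_FILE  (hypothetical helper)
# Prints "REGISTRY/<image>" for every non-empty line of LIST_FILE.
# ASSUMPTION: the load script keeps the original image path under the registry.
private_image_refs() {
  local registry="$1" img
  while IFS= read -r img || [ -n "$img" ]; do
    img=$(printf '%s' "$img" | tr -d '[:space:]')   # drop stray spaces and CR characters
    [ -z "$img" ] && continue
    printf '%s/%s\n' "$registry" "$img"
  done < "$2"
}
```

For example, `private_image_refs 10.0.0.20:8080 rancher-images.txt | head -n 3 | xargs -n 1 docker pull` pulls the first three images back from Harbor; if those pulls succeed, at least those images were pushed correctly.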

IV. Install Kubernetes with RKE

1. Install rke, helm, and kubectl (only needed on rancher1)

[rancher@rancher1 ~]$ su - root

# 1. Download the rke binary and move it to /usr/bin

[root@rancher1 ~]# wget https://github.com/rancher/rke/releases/download/v1.1.2/rke_linux-amd64 \
&& chmod +x rke_linux-amd64 \
&& mv rke_linux-amd64 /usr/bin/rke

# 2. Install kubectl

[root@rancher1 ~]# wget https://docs.rancher.cn/download/kubernetes/linux-amd64-v1.18.3-kubectl \
&& chmod +x linux-amd64-v1.18.3-kubectl \
&& mv linux-amd64-v1.18.3-kubectl /usr/bin/kubectl

# 3. Install helm

[root@rancher1 ~]# wget https://docs.rancher.cn/download/helm/helm-v3.0.3-linux-amd64.tar.gz \
&& tar xf helm-v3.0.3-linux-amd64.tar.gz \
&& cd linux-amd64 \
&& mv helm /usr/sbin/ \
&& cd \
&& rm -rf helm-v3.0.3-linux-amd64.tar.gz linux-amd64

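2. Create the rancher user on each host and distribute SSH keys

# 1. On every machine, create the user rancher in the docker group (-f: succeed even if docker-ce already created the group)

groupadd -f docker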

useradd rancher -G docker

echo "123456" | passwd --stdin rancher

# 2. On every machine, grant rancher passwordless sudo

[root@rancher1 ~]# vim /etc/sudoers +100

rancher ALL=(ALL) NOPASSWD: ALL

# 3. The following only needs to run on rancher1

su - rancher

ssh-keygen

ssh-copy-id rancher@10.0.0.21

ssh-copy-id rancher@10.0.0.22

ssh-copy-id rancher@10.0.0.23

3. Create the RKE configuration file

[rancher@rancher1 opt]$ cd ~

[rancher@rancher1 ~]$ vim rancher-cluster.yml

nodes:
  - address: 10.0.0.21
    internal_address: 172.16.1.21
    user: rancher
    role: ["controlplane", "etcd", "worker"]
    ssh_key_path: /home/rancher/.ssh/id_rsa
  - address: 10.0.0.22
    internal_address: 172.16.1.22
    user: rancher
    role: ["controlplane", "etcd", "worker"]
    ssh_key_path: /home/rancher/.ssh/id_rsa
  - address: 10.0.0.23
    internal_address: 172.16.1.23 # internal IP of the node
    user: rancher
    role: ["controlplane", "etcd", "worker"]
    ssh_key_path: /home/rancher/.ssh/id_rsa

private_registries:
  - url: 10.0.0.20:8080
    user: admin
    password: Harbor12345
    is_default: true
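Every node in this file has the same user, key and three roles, so the nodes section can be generated from address pairs. A sketch in which the function name and the `PUBLIC_IP:INTERNAL_IP` argument format are hypothetical choices of mine; it prints only the nodes: block in the layout above, so the private_registries block still has to be appended.

```shell
#!/bin/sh
# render_rke_nodes SSH_USER KEY_PATH PUBLIC_IP:INTERNAL_IP...  (hypothetical helper)
# Prints the nodes: section of rancher-cluster.yml, giving every node the
# controlplane, etcd and worker roles as in the file above.
render_rke_nodes() {
  local user="$1" key="$2" pair
  shift 2
  echo "nodes:"
  for pair in "$@"; do
    echo "  - address: ${pair%%:*}"          # part before the first ":"
    echo "    internal_address: ${pair#*:}"  # part after the first ":"
    echo "    user: ${user}"
    echo '    role: ["controlplane", "etcd", "worker"]'
    echo "    ssh_key_path: ${key}"
  done
}
```

For example, `render_rke_nodes rancher /home/rancher/.ssh/id_rsa 10.0.0.21:172.16.1.21 10.0.0.22:172.16.1.22 10.0.0.23:172.16.1.23` prints the three node entries.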

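Common RKE node options

| Option | Required | Description |
|---|---|---|
| address | Yes | Public DNS name or IP address |
| user | Yes | A user that can run docker commands |
| role | Yes | List of Kubernetes roles assigned to the node |
| internal_address | No | Private DNS name or IP address for internal cluster traffic (defaults to address) |
| ssh_key_path | No | Path to the SSH private key used to authenticate to the node (defaults to ~/.ssh/id_rsa) |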

4. After configuring rancher-cluster.yml, bring up the Kubernetes cluster

# 1. Before starting, configure /etc/docker/daemon.json on every host to add the registry address

vim /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.20:8080"],

"registry-mirrors": ["https://7kmehv9e.mirror.aliyuncs.com"]

}

systemctl restart docker

# 2. Start the Kubernetes cluster

[root@rancher1 ~]# su - rancher

rke up --config ./rancher-cluster.yml

If RKE reports a missing image, such as rancher/hyperkube:v1.17.5-rancher1, pull it on a host with Internet access and push it to the private registry.
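The pull, tag and push sequence for such an image can be wrapped in a small function. This sketch assumes it runs on a host that has Internet access and can also reach Harbor after `docker login`; `mirror_image` is a hypothetical name. If no single host can do both, `docker save` and `docker load` with a file copy in between is the usual alternative.

```shell
#!/bin/sh
# mirror_image IMAGE REGISTRY  (hypothetical helper)
# Pulls IMAGE from its public registry, re-tags it as REGISTRY/IMAGE and
# pushes it, stopping at the first step that fails.
mirror_image() {
  local image="$1" target="$2/$1"
  docker pull "$image" &&
    docker tag "$image" "$target" &&
    docker push "$target"
}
```

For example, `mirror_image rancher/hyperkube:v1.17.5-rancher1 10.0.0.20:8080`, then re-run `rke up --config ./rancher-cluster.yml`.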

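When the run completes, the output ends with INFO[0220] Finished building Kubernetes cluster successfully, and two new files appear next to rancher-cluster.yml: kube_config_rancher-cluster.yml (the kubeconfig for the new cluster) and rancher-cluster.rkestate (the cluster state). Keep both; RKE needs them for later maintenance and upgrades.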

5. Test the cluster and check its status

[rancher@rancher1 ~]$ mkdir -p /home/rancher/.kube

[rancher@rancher1 ~]$ cp kube_config_rancher-cluster.yml $HOME/.kube/config

[rancher@rancher1 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.0.21 Ready controlplane,etcd,worker 3m39s v1.17.5

10.0.0.22 Ready controlplane,etcd,worker 3m40s v1.17.5

10.0.0.23 Ready controlplane,etcd,worker 3m39s v1.17.5

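Expected pod state:

- Pods are in the Running or Completed state.

- For pods whose STATUS is Running, READY shows all containers running (for example, 3/3).

- Pods whose STATUS is Completed are one-off jobs; for these pods READY should be 0/1.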

[rancher@rancher1 ~]$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

ingress-nginx default-http-backend-74858d6d44-fr9g2 1/1 Running 0 3m42s

ingress-nginx nginx-ingress-controller-dq29w 1/1 Running 0 3m42s

ingress-nginx nginx-ingress-controller-mwnfx 1/1 Running 0 3m42s

ingress-nginx nginx-ingress-controller-zzl5v 1/1 Running 0 3m42s

kube-system canal-44lzq 2/2 Running 0 4m17s

kube-system canal-c6drc 2/2 Running 0 4m17s

kube-system canal-mz9bh 2/2 Running 0 4m17s

kube-system coredns-7c7966fdb8-b4445 1/1 Running 0 3m5s

kube-system coredns-7c7966fdb8-sjgtl 1/1 Running 0 4m2s

kube-system coredns-autoscaler-57879bf9b8-krxqx 1/1 Running 0 4m1s

kube-system metrics-server-59db96dbdd-fwlc8 1/1 Running 0 3m52s

kube-system rke-coredns-addon-deploy-job-vsz7d 0/1 Completed 0 4m7s

kube-system rke-ingress-controller-deploy-job-7g8pt 0/1 Completed 0 3m46s

kube-system rke-metrics-addon-deploy-job-hkwlj 0/1 Completed 0 3m57s

kube-system rke-network-plugin-deploy-job-hvfnb 0/1 Completed 0 4m19s
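The rules above can be checked mechanically instead of by eye. A minimal sketch, assuming the column layout of `kubectl get pods --all-namespaces --no-headers` (NAMESPACE, NAME, READY, STATUS, ...); `unhealthy_pods` is a hypothetical name.

```shell
#!/bin/sh
# unhealthy_pods  (hypothetical helper)
# Reads `kubectl get pods --all-namespaces --no-headers` output on stdin and
# prints NAMESPACE/NAME for every pod that breaks the rules above:
#   - STATUS must be Running or Completed;
#   - a Running pod must have all of its containers ready (READY n/n).
unhealthy_pods() {
  awk '
    {
      ns = $1; name = $2; ready = $3; status = $4
      split(ready, r, "/")
      if (status == "Completed") next
      if (status == "Running" && r[1] == r[2]) next
      print ns "/" name
    }
  '
}
```

For example, `kubectl get pods --all-namespaces --no-headers | unhealthy_pods` prints nothing when every pod satisfies the rules.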

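V. Install Rancher

1. Add the Helm chart repository

Run this step on a host with Internet access; it is only needed to obtain the chart .tgz file.

# 1. Add the repository with helm repo add. Each repository URL corresponds to a Rancher release channel: latest, stable, or alpha (stable is used here).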

[rancher@rancher1 ~]$ helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

"rancher-stable" has been added to your repositories

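# 2. Fetch the Rancher chart; the .tgz file is downloaded to the current directory. --version pins it to 2.4.4 so that the file name matches the rancher-2.4.4.tgz used below.

[rancher@rancher1 ~]$ helm fetch rancher-stable/rancher --version 2.4.4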

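# 3. Copy the .tgz file to the rancher user's home directory on rancher1 (internal network)

2. (When using Rancher's default self-signed certificate) fetch the cert-manager chart on an Internet-connected host

# 1. On a system with Internet access, add the cert-manager repository.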

helm repo add jetstack https://charts.jetstack.io

helm repo update

# 2. Fetch the cert-manager chart (v0.12.0, the version used in this guide) from the [Helm Chart repository](https://hub.helm.sh/charts/jetstack/cert-manager).

helm fetch jetstack/cert-manager --version v0.12.0

Copy the resulting cert-manager-v0.12.0.tgz to the internal host rancher1 (replace INTERNET_HOST with the machine the chart was fetched on):

[rancher@rancher1 ~]$ scp root@INTERNET_HOST:/root/install/cert-manager-v0.12.0.tgz .

3. Render the chart templates with the desired parameters

[rancher@rancher1 ~]$ helm template cert-manager ./cert-manager-v0.12.0.tgz --output-dir . \
    --namespace cert-manager \
    --set image.repository=10.0.0.20:8080/quay.io/jetstack/cert-manager-controller \
    --set webhook.image.repository=10.0.0.20:8080/quay.io/jetstack/cert-manager-webhook \
    --set cainjector.image.repository=10.0.0.20:8080/quay.io/jetstack/cert-manager-cainjector

This produces a cert-manager directory containing the rendered YAML files:

[rancher@rancher1 ~]$ tree -L 3 cert-manager

cert-manager

└── templates

├── cainjector-deployment.yaml

├── cainjector-rbac.yaml

├── cainjector-serviceaccount.yaml

├── deployment.yaml

├── rbac.yaml

├── serviceaccount.yaml

├── service.yaml

├── webhook-deployment.yaml

├── webhook-mutating-webhook.yaml

├── webhook-rbac.yaml

├── webhook-serviceaccount.yaml

├── webhook-service.yaml

└── webhook-validating-webhook.yaml
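Since the cluster cannot reach public registries, every image reference in the rendered manifests should point at 10.0.0.20:8080. A sketch that lists the ones that do not; `foreign_images` is a hypothetical name, and it only looks at lines of the form `image: ...`.

```shell
#!/bin/sh
# foreign_images DIR REGISTRY  (hypothetical helper)
# Lists, sorted and de-duplicated, every "image:" value in files under DIR
# that does not start with "REGISTRY/". In an offline install such images
# cannot be pulled, so the output should be empty.
foreign_images() {
  grep -rhoE 'image:[[:space:]]*"?[^"[:space:]]+' "$1" \
    | sed -E 's/^image:[[:space:]]*"?//' \
    | awk -v prefix="$2/" 'index($0, prefix) != 1' \
    | LC_ALL=C sort -u
}
```

For example, `foreign_images ./cert-manager 10.0.0.20:8080` should print nothing; the same check can be run on the ./rancher directory rendered in the next step.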

4. Download the CRD file required by cert-manager

curl -L -o cert-manager/cert-manager-crd.yaml https://raw.githubusercontent.com/jetstack/cert-manager/release-0.12/deploy/manifests/00-crds.yaml

# The download may fail; in that case the file is also available from my server:

http://img.ljcccc.com/Ranchercert-manager-crd.yaml.txt

5. Render the Rancher templates

Set the options you need. Rancher must be configured to use the private registry whenever it launches Kubernetes clusters or Rancher tools.

[rancher@rancher1 ~]$ helm template rancher ./rancher-2.4.4.tgz --output-dir . \
    --namespace cattle-system \
    --set hostname=rancher.com \
    --set certmanager.version=v0.12.0 \
    --set rancherImage=10.0.0.20:8080/rancher/rancher \
    --set systemDefaultRegistry=10.0.0.20:8080 \
    --set useBundledSystemChart=true

# The command prints the following:

wrote ./rancher/templates/serviceAccount.yaml

wrote ./rancher/templates/clusterRoleBinding.yaml

wrote ./rancher/templates/service.yaml

wrote ./rancher/templates/deployment.yaml

wrote ./rancher/templates/ingress.yaml

wrote ./rancher/templates/issuer-rancher.yaml

6. Install cert-manager

(Only when using Rancher's default self-signed certificate)

# 1. Create the namespace for cert-manager.

[rancher@rancher1 ~]$ kubectl create namespace cert-manager

namespace/cert-manager created

# 2. Create the cert-manager CRDs

[rancher@rancher1 ~]$ kubectl apply -f cert-manager/cert-manager-crd.yaml

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io created

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io created

# 3. Start cert-manager.

[rancher@rancher1 ~]$ kubectl apply -R -f ./cert-manager

customresourcedefinition.apiextensions.k8s.io/certificaterequests.cert-manager.io unchanged

customresourcedefinition.apiextensions.k8s.io/certificates.cert-manager.io unchanged

customresourcedefinition.apiextensions.k8s.io/challenges.acme.cert-manager.io unchanged

customresourcedefinition.apiextensions.k8s.io/clusterissuers.cert-manager.io unchanged

customresourcedefinition.apiextensions.k8s.io/issuers.cert-manager.io unchanged

customresourcedefinition.apiextensions.k8s.io/orders.acme.cert-manager.io unchanged

deployment.apps/cert-manager-cainjector created

clusterrole.rbac.authorization.k8s.io/cert-manager-cainjector created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-cainjector created

role.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection created

rolebinding.rbac.authorization.k8s.io/cert-manager-cainjector:leaderelection created

serviceaccount/cert-manager-cainjector created

deployment.apps/cert-manager created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-orders created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-issuers created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-certificates created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim created

clusterrole.rbac.authorization.k8s.io/cert-manager-view created

clusterrole.rbac.authorization.k8s.io/cert-manager-edit created

clusterrole.rbac.authorization.k8s.io/cert-manager-controller-challenges created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-challenges created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-issuers created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-orders created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-ingress-shim created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-certificates created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-controller-clusterissuers created

role.rbac.authorization.k8s.io/cert-manager:leaderelection created

rolebinding.rbac.authorization.k8s.io/cert-manager:leaderelection created

service/cert-manager created

serviceaccount/cert-manager created

deployment.apps/cert-manager-webhook created

mutatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook created

clusterrole.rbac.authorization.k8s.io/cert-manager-webhook:webhook-requester created

clusterrolebinding.rbac.authorization.k8s.io/cert-manager-webhook:auth-delegator created

rolebinding.rbac.authorization.k8s.io/cert-manager-webhook:webhook-authentication-reader created

service/cert-manager-webhook created

serviceaccount/cert-manager-webhook created

validatingwebhookconfiguration.admissionregistration.k8s.io/cert-manager-webhook created
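`kubectl apply` returns as soon as the objects are created, not when the pods are ready. Waiting for the three cert-manager deployments before creating Rancher's Issuer in the next step may avoid errors caused by the cert-manager webhook not answering yet. A sketch built on `kubectl rollout status`; the function name `wait_for_deployments` is hypothetical.

```shell
#!/bin/sh
# wait_for_deployments NAMESPACE TIMEOUT DEPLOYMENT...  (hypothetical helper)
# Waits until each deployment has finished rolling out; returns non-zero as
# soon as one of them does not become ready within TIMEOUT (e.g. 180s).
wait_for_deployments() {
  local ns="$1" timeout="$2" d
  shift 2
  for d in "$@"; do
    kubectl -n "$ns" rollout status "deployment/${d}" --timeout="$timeout" || return 1
  done
}
```

For example, `wait_for_deployments cert-manager 180s cert-manager cert-manager-cainjector cert-manager-webhook` (deployment names as created above).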

7. Install Rancher

[rancher@rancher1 ~]$ kubectl create namespace cattle-system

namespace/cattle-system created

[rancher@rancher1 ~]$ kubectl -n cattle-system apply -R -f ./rancher

clusterrolebinding.rbac.authorization.k8s.io/rancher created

deployment.apps/rancher created

ingress.extensions/rancher created

service/rancher created

serviceaccount/rancher created

issuer.cert-manager.io/rancher created

8. Check the status after Rancher is created

[rancher@rancher1 templates]$ kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

cattle-system rancher-657756bbb6-fgjgz 1/1 Running 0 3m49s

cattle-system rancher-657756bbb6-l4gcs 1/1 Running 0 3m49s

cattle-system rancher-657756bbb6-m4x5v 1/1 Running 3 3m49s

cert-manager cert-manager-6b89685c5f-zfw9v 1/1 Running 0 4m42s

cert-manager cert-manager-cainjector-64bdfd6596-ck2k8 1/1 Running 0 4m43s

cert-manager cert-manager-webhook-7c49498d4f-98j98 1/1 Running 0 4m40s

ingress-nginx default-http-backend-74858d6d44-fr9g2 1/1 Running 0 39m

ingress-nginx nginx-ingress-controller-dq29w 1/1 Running 0 39m

ingress-nginx nginx-ingress-controller-mwnfx 1/1 Running 0 39m

ingress-nginx nginx-ingress-controller-zzl5v 1/1 Running 0 39m

kube-system canal-44lzq 2/2 Running 0 40m

kube-system canal-c6drc 2/2 Running 0 40m

kube-system canal-mz9bh 2/2 Running 0 40m

kube-system coredns-7c7966fdb8-b4445 1/1 Running 0 39m

kube-system coredns-7c7966fdb8-sjgtl 1/1 Running 0 40m

kube-system coredns-autoscaler-57879bf9b8-krxqx 1/1 Running 0 40m

kube-system metrics-server-59db96dbdd-fwlc8 1/1 Running 0 40m

kube-system rke-coredns-addon-deploy-job-vsz7d 0/1 Completed 0 40m

kube-system rke-ingress-controller-deploy-job-7g8pt 0/1 Completed 0 39m

kube-system rke-metrics-addon-deploy-job-hkwlj 0/1 Completed 0 40m

kube-system rke-network-plugin-deploy-job-hvfnb 0/1 Completed 0 40m

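After mapping rancher.com to the load balancer address 10.0.0.20 in the hosts file of your local computer, open https://rancher.com in a browser.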
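On Linux or macOS the hosts entry can be added idempotently. The sketch below (hypothetical function name `add_hosts_entry`; writing /etc/hosts needs a root shell) appends `IP HOSTNAME` only if the hostname is not mapped yet. On Windows the file is C:\Windows\System32\drivers\etc\hosts and is edited by hand.

```shell
#!/bin/sh
# add_hosts_entry FILE IP HOSTNAME  (hypothetical helper)
# Appends "IP HOSTNAME" to FILE unless a non-comment line already maps
# HOSTNAME, so running it twice does not create duplicate entries.
add_hosts_entry() {
  local file="$1" ip="$2" host="$3"
  if awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }
     ' "$file"; then
    return 0                               # already mapped: nothing to do
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}
```

For example, `add_hosts_entry /etc/hosts 10.0.0.20 rancher.com`.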

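Set the admin password.

Click Save.

After logging in, the cluster status shows that a pod has a problem.

On the host, one pod is in the Error state.

rancher.com resolves only through hosts-file entries (there is no DNS record for it), so the agent pod cannot resolve the Rancher URL. Add a host alias (an /etc/hosts entry) to the agent pod: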

[rancher@rancher1 templates]$ kubectl -n cattle-system patch deployments cattle-cluster-agent --patch '{

"spec": {

"template": {

"spec": {

"hostAliases": [

{

"hostnames":

[

"rancher.com"

],

"ip": "10.0.0.20"

}

]

}

}

}

}'
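As far as I recall, Rancher's documentation on host aliases applies the same patch to the `cattle-node-agent` DaemonSet as well, since the node agents also need to reach the Rancher URL. A sketch that builds the patch for any hostname/IP pair and applies it to both workloads; the function names are hypothetical, and the second patch fails if that DaemonSet does not exist in your cluster.

```shell
#!/bin/sh
# host_alias_patch HOSTNAME IP  (hypothetical helper)
# Prints the same hostAliases patch as above, on one line.
host_alias_patch() {
  printf '{"spec":{"template":{"spec":{"hostAliases":[{"hostnames":["%s"],"ip":"%s"}]}}}}' "$1" "$2"
}

# patch_agent_host_aliases HOSTNAME IP  (hypothetical helper)
# Applies the patch to the cattle-cluster-agent Deployment, then to the
# cattle-node-agent DaemonSet, both in the cattle-system namespace.
patch_agent_host_aliases() {
  local patch
  patch="$(host_alias_patch "$1" "$2")"
  kubectl -n cattle-system patch deployments cattle-cluster-agent --patch "$patch" &&
    kubectl -n cattle-system patch daemonsets cattle-node-agent --patch "$patch"
}
```

For example, `patch_agent_host_aliases rancher.com 10.0.0.20`.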

After the patch, the alias shows up in the pod spec:

[rancher@rancher1 templates]$ kubectl edit pod cattle-cluster-agent-5598c6557c-9ttkw -n cattle-system

The cluster status is now normal.

Reference: official documentation, Rancher2.docs