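Kubernetes (k8s): Deploying a Highly Available Cluster

Table of contents

- Background: two high-availability cluster architectures

- 1. Lab environment

- 1.1 Load balancer deployment: configure haproxy and keepalived on the three hosts

- 1.2 Deploy Docker and Kubernetes on each host

- 1.3 Set up all three hosts as masters

- 1.4 Install the flannel network add-on

- 1.5 Bring up one more host, server5, as a worker

- 1.6 Testing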

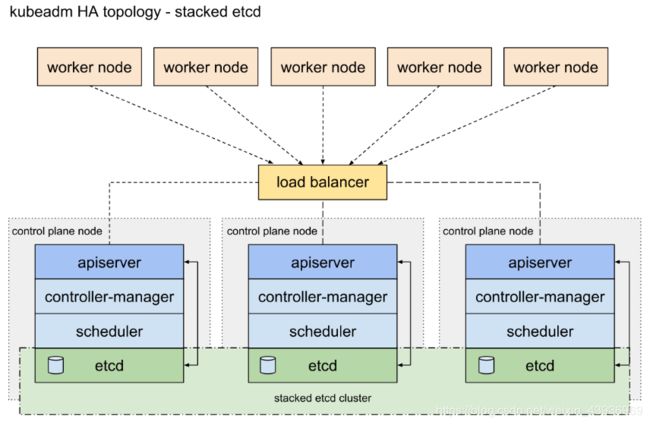

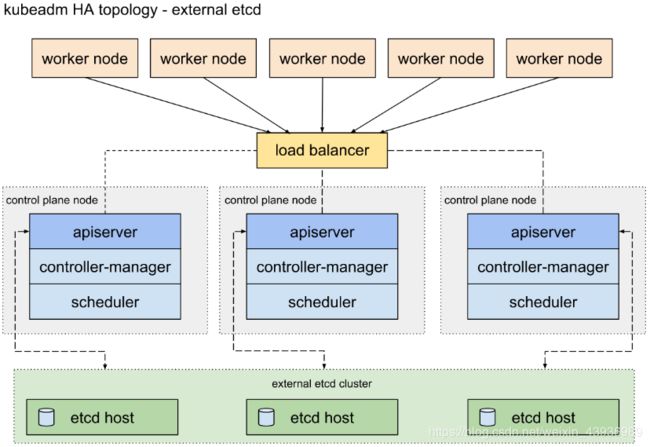

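In production, a Kubernetes cluster must be highly available:

The master nodes and their core components have to be made highly available; if the only master fails, the whole cluster loses its control plane.

How it works:

- etcd cluster: three master nodes are deployed, and the etcd instances on them together form one etcd cluster

- API server: a load balancer sits in front of the kube-apiserver instances on the three masters; worker nodes and clients talk to the API server through this load balancer

- pod-master ensures that only the primary master is active: of the several scheduler and controller-manager instances in the cluster, only one does work at any time and the others stand by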
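The three-member etcd cluster described above only accepts writes while a majority of its members is reachable. As a minimal sketch of that arithmetic (the function names `quorum` and `tolerated_failures` are illustrative assumptions, not part of etcd or kubeadm):

```shell
#!/bin/bash
# Illustrative helpers; the names are assumptions, not part of etcd or kubeadm.

# Smallest majority of n members: floor(n/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Members that may fail while a majority survives: floor((n-1)/2)
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Print the figures for a few typical cluster sizes.
for n in 1 3 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

With three masters one failure is tolerated. A fourth member would raise the quorum to three without tolerating any additional failure, which is why odd member counts are the usual choice.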

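Background: two high-availability cluster architectures

1. Lab environment

- Prepare three fresh virtual machines, server2, server3 and server4, and install haproxy and keepalived on each

Install keepalived and haproxy: keepalived monitors the state of the service nodes in the cluster, and haproxy is an open-source TCP/HTTP reverse proxy and load balancer suited to high-availability environments.

1.1 Load balancer deployment: configure haproxy and keepalived on the three hosts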

[root@server2 ~]# yum install -y haproxy keepalived

[root@server3 ~]# yum install -y haproxy keepalived

[root@server4 ~]# yum install -y haproxy keepalived

[root@server2 haproxy]# pwd

/etc/haproxy

[root@server2 haproxy]# vim haproxy.cfg

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend main *:8443 # kube-apiserver listens on the TLS port 6443, so the frontend port must not collide with it

mode tcp # forward in TCP mode

default_backend apiserver # the backend is the k8s API server

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend apiserver

mode tcp # TCP mode

balance roundrobin

server app1 172.25.60.2:6443 check # the backend servers are the master nodes of the k8s cluster

server app2 172.25.60.3:6443 check

server app3 172.25.60.4:6443 check

[root@server2 ~]# systemctl start haproxy

[root@server2 ~]# systemctl enable haproxy # start at boot

[root@server2 ~]# netstat -antlpe|grep haproxy

tcp 0 0 0.0.0.0:8443 0.0.0.0:* LISTEN 0 31732 3668/haproxy

[root@server2 keepalived]# pwd

/etc/keepalived

[root@server2 keepalived]# cat check_haproxy.sh # check_haproxy.sh checks the haproxy state and ties haproxy to keepalived

#!/bin/bash

systemctl status haproxy &> /dev/null

if [ $? != 0 ];then

systemctl stop keepalived

fi

[root@server2 keepalived]# chmod +x check_haproxy.sh # make it executable

[root@server2 keepalived]# ./check_haproxy.sh # check that it runs cleanly
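The script above stops keepalived whenever haproxy is down, so keepalived has to be started again by hand afterwards (as is done later in this section). An alternative, sketched here under assumptions, is to have the script only report health through its exit status: with the default `weight` of 0, keepalived moves the VRRP instance into the FAULT state while a tracked script fails, which releases the VIP, and resumes once the script succeeds again. The `HEALTH_CMD` override is a hypothetical hook added only so the function can be exercised on a machine without systemd.

```shell
#!/bin/bash
# Sketch of an exit-status-only health check for use as a keepalived vrrp_script.
# HEALTH_CMD is a hypothetical override for testing; by default systemd is queried.

check_haproxy() {
  # Left unquoted on purpose so the command string is split into words.
  if ${HEALTH_CMD:-systemctl is-active --quiet haproxy}; then
    return 0   # haproxy is running: this host may keep or take the VIP
  fi
  return 1     # haproxy is down: keepalived sees the tracked script fail
}
```

Saved as a script whose last line calls `check_haproxy`, this could be referenced from `vrrp_script` in place of check_haproxy.sh. Either way, the transcript only copies haproxy.cfg and keepalived.conf to server3 and server4, so the check script itself also has to be present under /etc/keepalived/ on those hosts.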

[root@server2 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh" # the health-check script

interval 5 # run it every 5 seconds

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100 # VRRP priority (a larger value means higher priority); the backups use lower values

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_haproxy

}

virtual_ipaddress {

172.25.60.100 # VIP

}

}

[root@server2 keepalived]# systemctl stop haproxy # stop haproxy

[root@server2 keepalived]# systemctl status keepalived # keepalived has been stopped as well, by the check script

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Thu 2020-05-14 17:06:47 CST; 8s ago

[root@server2 keepalived]# systemctl start haproxy

[root@server2 keepalived]# systemctl start keepalived

[root@server2 keepalived]# ssh-keygen # set up passwordless SSH between the three master hosts

[root@server2 keepalived]# ssh-copy-id server3

[root@server2 keepalived]# ssh-copy-id server4

[root@server2 keepalived]# scp /etc/haproxy/haproxy.cfg server3:/etc/haproxy/

haproxy.cfg 100% 2658 3.0MB/s 00:00

[root@server2 keepalived]# scp /etc/haproxy/haproxy.cfg server4:/etc/haproxy/

haproxy.cfg 100% 2658 3.1MB/s 00:00

[root@server2 keepalived]# scp /etc/keepalived/keepalived.conf server3:/etc/keepalived/

keepalived.conf 100% 607 913.9KB/s 00:00

[root@server2 keepalived]# scp /etc/keepalived/keepalived.conf server4:/etc/keepalived/

keepalived.conf

[root@server3 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 5

}

vrrp_instance VI_1 {

state BACKUP # the standby hosts are set to BACKUP

interface eth0

virtual_router_id 51

priority 90 # lower priority than server2

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_haproxy

}

virtual_ipaddress {

172.25.60.100

}

}

[root@server3 ~]# systemctl start haproxy

[root@server3 ~]# systemctl enable haproxy

[root@server3 ~]# systemctl start keepalived

[root@server3 ~]# systemctl enable keepalived

[root@server4 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 5

}

vrrp_instance VI_1 {

state BACKUP # set to BACKUP

interface eth0

virtual_router_id 51

priority 80 # lowest priority of the three

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_haproxy

}

virtual_ipaddress {

172.25.60.100

}

}

[root@server4 ~]# systemctl start haproxy

[root@server4 ~]# systemctl enable haproxy

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@server4 ~]# systemctl start keepalived

[root@server4 ~]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

1.2 Deploy Docker and Kubernetes on each host

(Do this on every node.)

Time synchronization (omitted here)

[root@server2 ~]# cat /etc/yum.repos.d/docker-ce.repo # yum repository for installing Docker

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable

enabled=1

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[root@server2 ~]# yum install docker-ce container-selinux-2.77-1.el7.noarch.rpm -y # container-selinux-2.77-1.el7.noarch.rpm is needed to satisfy dependencies; download it yourself

[root@server2 ~]# scp /etc/yum.repos.d/docker-ce.repo root@172.25.60.3:/etc/yum.repos.d/

docker-ce.repo 100% 213 145.9KB/s 00:00

[root@server2 ~]# scp /etc/yum.repos.d/docker-ce.repo root@172.25.60.4:/etc/yum.repos.d/

docker-ce.repo

[root@server2 ~]# scp container-selinux-2.77-1.el7.noarch.rpm server3:/root/

container-selinux-2.77-1.el7.noarch.rpm 100% 37KB 12.4MB/s 00:00

[root@server2 ~]# scp container-selinux-2.77-1.el7.noarch.rpm server4:/root/

container-selinux-2.77-1.el7.noarch.rpm

[root@server3 ~]# yum install docker-ce container-selinux-2.77-1.el7.noarch.rpm -y

[root@server4 ~]# yum install docker-ce container-selinux-2.77-1.el7.noarch.rpm -y

[root@server2 ~]# systemctl start docker

[root@server2 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@server2 ~]# docker info

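# If docker info reports errors, follow the troubleshooting steps below

Troubleshooting:

For reference, see this blog post on deploying k8s: https://blog.csdn.net/weixin_43936969/article/details/105773756#3_Kubernetes_57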

[root@server2 sysctl.d]# pwd

/etc/sysctl.d

[root@server2 sysctl.d]# vim k8s.conf

[root@server2 sysctl.d]# cat k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@server2 sysctl.d]# sysctl --system # apply the settings

Change the Docker cgroup driver to systemd:

[root@server2 docker]# pwd

/etc/docker

[root@server2 docker]# cat daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

[root@server2 docker]# systemctl restart docker

[root@server2 docker]# scp /etc/sysctl.d/k8s.conf server3:/etc/sysctl.d/

k8s.conf 100% 79 77.0KB/s 00:00

[root@server2 docker]# scp /etc/sysctl.d/k8s.conf server4:/etc/sysctl.d/

k8s.conf

[root@server3 ~]# systemctl start docker

[root@server3 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@server4 ~]# systemctl start docker

[root@server4 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@server2 docker]# scp /etc/docker/daemon.json server3:/etc/docker/

daemon.json 100% 224 18.0KB/s 00:00

[root@server2 docker]# scp /etc/docker/daemon.json server4:/etc/docker/

daemon.json

[root@server3 ~]# systemctl restart docker

[root@server4 ~]# systemctl restart docker

Disable swap:

[root@server2 ~]# swapoff -a # turn swap off

[root@server2 ~]# cat /etc/fstab

# /dev/mapper/rhel-swap swap swap defaults 0 0

[root@server3 ~]# swapoff -a

[root@server3 ~]# vim /etc/fstab

# /dev/mapper/rhel-swap swap swap defaults 0 0

[root@server4 ~]# swapoff -a

[root@server4 ~]# vim /etc/fstab

# /dev/mapper/rhel-swap swap swap defaults 0 0
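`swapoff -a` only lasts until the next reboot, which is why the swap entry in /etc/fstab is also commented out by hand on each node above. A minimal sketch of making the same edit non-interactively follows; the function name `comment_out_swap` is an assumption, and the pattern assumes swap entries carry `swap` as a whitespace-delimited field, as in the fstab line shown here.

```shell
#!/bin/bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# A backup copy is kept next to the file with a .bak suffix.
comment_out_swap() {
  local fstab=${1:-/etc/fstab}
  # Match uncommented lines that contain "swap" as its own field and prefix them with "# ".
  sed -E -i.bak 's/^([^#].*[[:space:]]swap[[:space:]].*)$/# \1/' "$fstab"
}
```

Run as root on each node after `swapoff -a`, for example `comment_out_swap /etc/fstab`. Lines that are already commented out are left unchanged.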

[root@server2 ~]# cat /etc/yum.repos.d/k8s.repo # yum repository for Kubernetes

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@server2 ~]# scp /etc/yum.repos.d/k8s.repo server3:/etc/yum.repos.d/

k8s.repo 100% 129 18.6KB/s 00:00

[root@server2 ~]# scp /etc/yum.repos.d/k8s.repo server4:/etc/yum.repos.d/

k8s.repo

[root@server2 ~]# yum install -y kubelet kubeadm kubectl

[root@server3 ~]# yum install -y kubelet kubeadm kubectl

[root@server4 ~]# yum install -y kubelet kubeadm kubectl

[root@server2 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@server3 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@server4 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@server2 ~]# kubeadm config images list # list the images kubeadm needs; by default they are pulled from k8s.gcr.io

W0514 22:34:13.033279 16384 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.18.2

k8s.gcr.io/kube-controller-manager:v1.18.2

k8s.gcr.io/kube-scheduler:v1.18.2

k8s.gcr.io/kube-proxy:v1.18.2

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.7

[root@server2 ~]# kubeadm config print init-defaults > kubeadm.yaml # dump the default init configuration into kubeadm.yaml as a starting point

[root@server2 ~]# vim kubeadm.yaml # open the init configuration kubeadm.yaml; the relevant parts are:

localAPIEndpoint:
  advertiseAddress: 172.25.60.2
  bindPort: 6443

clusterName: kubernetes
controlPlaneEndpoint: "172.25.60.100:8443"
controllerManager: {}
imageRepository: registry.aliyuncs.com/google_containers # use the Aliyun mirror so the images are easy to pull
networking: # network settings
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

[root@server2 ~]# modprobe ip_vs # load the IPVS kernel modules

[root@server2 ~]# modprobe ip_vs_rr

[root@server2 ~]# modprobe ip_vs_wrr

[root@server2 ~]# modprobe ip_vs_sh

[root@server2 ~]# modprobe nf_conntrack_ipv4

[root@server2 ~]# lsmod |grep ip_vs # check that the modules are loaded

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack
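Modules loaded with `modprobe` are not loaded again after a reboot. On a systemd-based host they can be loaded at boot by listing them in a file under /etc/modules-load.d/. A minimal sketch, assuming the file name ipvs.conf and a helper function `write_ipvs_modules`, both introduced here only for illustration:

```shell
#!/bin/bash
# Hypothetical helper: write the IPVS-related module names, one per line,
# to a modules-load.d style file (the default path is an assumed name).
write_ipvs_modules() {
  local target=${1:-/etc/modules-load.d/ipvs.conf}
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 > "$target"
}
```

Calling `write_ipvs_modules` as root on each node writes the same five module names that are loaded by hand in this section. On newer kernels, where `nf_conntrack_ipv4` has been merged into `nf_conntrack`, the last name would need to be adjusted.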

[root@server3 ~]# modprobe ip_vs

[root@server3 ~]# modprobe ip_vs_rr

[root@server3 ~]# modprobe ip_vs_wrr

[root@server3 ~]# modprobe ip_vs_sh

[root@server3 ~]# modprobe nf_conntrack_ipv4

[root@server3 ~]# lsmod |grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

[root@server4 ~]# modprobe ip_vs

[root@server4 ~]# modprobe ip_vs_rr

[root@server4 ~]# modprobe ip_vs_wrr

[root@server4 ~]# modprobe ip_vs_sh

[root@server4 ~]# modprobe nf_conntrack_ipv4

[root@server4 ~]# lsmod |grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

[root@server2 ~]# kubeadm config images pull --config kubeadm.yaml # pull the images in advance

[root@server2 ~]# docker images # list the images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.18.2 0d40868643c6 4 weeks ago 117MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.18.2 a3099161e137 4 weeks ago 95.3MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.18.2 6ed75ad404bd 4 weeks ago 173MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.18.2 ace0a8c17ba9 4 weeks ago 162MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 2 months ago 683kB

registry.aliyuncs.com/google_containers/coredns 1.6.7 67da37a9a360 3 months ago 43.8MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 6 months ago 288MB

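[root@server2 ~]# docker save -o k8s.tar `docker images|grep -v TAG|awk '{print $1":"$2}'` # grep -v inverts the match and drops the header line containing TAG; the pulled k8s images are bundled into one archive

1.3 Set up all three hosts as masters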

[root@server2 ~]# scp k8s.tar server3:/root/

k8s.tar 100% 693MB 12.3MB/s 00:56

[root@server2 ~]# scp k8s.tar server4:/root/

k8s.tar

[root@server3 ~]# docker load -i k8s.tar # load the image archive

[root@server4 ~]# docker load -i k8s.tar

[root@server2 ~]# kubeadm init --config kubeadm.yaml --upload-certs # initialize the cluster; --upload-certs uploads the control-plane certificates so that the other masters can fetch them when they join

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root: # join command for master nodes:

kubeadm join 172.25.60.100:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8f7d2ab1a5e98aadbee8caba2426c79a48b17ce3a725d1bb56bc48624725e5d2 \

--control-plane --certificate-key 245fba0c8185c4b06578dce61ab6c693bb058fbd551c9be2dc214333e8f06bfc

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root: # join command for worker nodes:

kubeadm join 172.25.60.100:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8f7d2ab1a5e98aadbee8caba2426c79a48b17ce3a725d1bb56bc48624725e5d2
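The join commands printed above embed a bootstrap token, and the uploaded certificates are deleted after two hours, as the output itself notes. When a master is added later, both can be regenerated on an existing master with `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs`. The sketch below only shows how the two results combine; `control_plane_join_cmd` is a hypothetical helper, and taking the certificate key from the last output line is an assumption about that command's output layout.

```shell
#!/bin/bash
# Hypothetical helper: turn a worker join command into a control-plane join command
# by appending the two flags that kubeadm init printed for master nodes.
control_plane_join_cmd() {
  local worker_join=$1 cert_key=$2
  printf '%s --control-plane --certificate-key %s\n' "$worker_join" "$cert_key"
}

# Intended use on an existing master (not executed here):
#   worker_join=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join_cmd "$worker_join" "$cert_key"
```

The printed line has the same shape as the master join command shown above and is meant to be run as root on the new master.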

[root@server2 ~]# mkdir -p $HOME/.kube # no regular user was created here; root is used directly

[root@server2 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@server2 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 NotReady master 2m30s v1.18.2

[root@server3 ~]# kubeadm join 172.25.60.100:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8f7d2ab1a5e98aadbee8caba2426c79a48b17ce3a725d1bb56bc48624725e5d2 \

--control-plane --certificate-key 245fba0c8185c4b06578dce61ab6c693bb058fbd551c9be2dc214333e8f06bfc

# join server3 to the cluster as a master node

[root@server3 ~]# mkdir -p $HOME/.kube

[root@server3 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# any host that has this config file can operate the cluster

[root@server3 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 NotReady master 7m30s v1.18.2

server3 NotReady master 119s v1.18.2

[root@server4 ~]# kubeadm join 172.25.60.100:8443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:8f7d2ab1a5e98aadbee8caba2426c79a48b17ce3a725d1bb56bc48624725e5d2 \

> --control-plane --certificate-key 245fba0c8185c4b06578dce61ab6c693bb058fbd551c9be2dc214333e8f06bfc

# join server4 to the cluster as a master node

[root@server4 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 NotReady master 13m v1.18.2

server3 NotReady master 7m53s v1.18.2

server4 NotReady master 3m57s v1.18.2

1.4 Install the flannel network add-on

Load the flannel image archive flanneld-v0.12.0-amd64.docker:

[root@server2 ~]# docker load -i flanneld-v0.12.0-amd64.docker

[root@server2 ~]# scp flanneld-v0.12.0-amd64.docker server3:/root/

flanneld-v0.12.0-amd64.docker 100% 51MB 8.1MB/s 00:06

[root@server2 ~]# scp flanneld-v0.12.0-amd64.docker server4:/root/

flanneld-v0.12.0-amd64.docker

[root@server3 ~]# docker load -i flanneld-v0.12.0-amd64.docker

[root@server4 ~]# docker load -i flanneld-v0.12.0-amd64.docker

[root@server2 ~]# vim kube-flannel.yml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- arm64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-arm64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-arm64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- arm

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-arm

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-arm

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- ppc64le

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-ppc64le

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-ppc64le

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- s390x

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

[root@server2 ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@server2 ~]# kubectl get pod -n kube-system # check the system pods

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-2nndl 0/1 Running 0 29m

coredns-7ff77c879f-6cwlc 1/1 Running 0 29m

etcd-server2 1/1 Running 0 29m

etcd-server3 1/1 Running 0 24m

etcd-server4 1/1 Running 0 20m

kube-apiserver-server2 1/1 Running 0 29m

kube-apiserver-server3 1/1 Running 0 24m

kube-apiserver-server4 1/1 Running 0 20m

kube-controller-manager-server2 1/1 Running 2 29m

kube-controller-manager-server3 1/1 Running 1 24m

kube-controller-manager-server4 1/1 Running 0 20m

kube-flannel-ds-amd64-6jjvw 1/1 Running 0 112s

kube-flannel-ds-amd64-h9sgg 1/1 Running 1 111s

kube-flannel-ds-amd64-jg7rk 1/1 Running 0 111s

kube-proxy-24vrj 1/1 Running 0 29m

kube-proxy-9mthn 1/1 Running 0 20m

kube-proxy-fjk78 1/1 Running 0 24m

kube-scheduler-server2 1/1 Running 2 29m

kube-scheduler-server3 1/1 Running 0 24m

kube-scheduler-server4 1/1 Running 1 20m

Command completion

[root@server2 ~]# yum install -y bash-completion

[root@server2 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@server2 ~]# source .bashrc

kubectl can also be run from a host outside the cluster. Here server1 gets kubectl and a copy of the kubeconfig directory from server2:

[root@server1 yum.repos.d]# cat k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@server1 yum.repos.d]# yum install kubectl -y

[root@server2 ~]# scp -r .kube/ server1:/root/

[root@server1 yum.repos.d]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 Ready master 38m v1.18.2

server3 Ready master 33m v1.18.2

server4 Ready master 29m v1.18.2

1.5 Bring up one more host, server5, as a worker

[root@server2 ~]# ssh-copy-id server5 # passwordless SSH

[root@server2 ~]# scp /etc/yum.repos.d/docker-ce.repo server5:/etc/yum.repos.d/

docker-ce.repo 100% 213 237.3KB/s 00:00

[root@server2 ~]# scp /etc/yum.repos.d/k8s.repo server5:/etc/yum.repos.d/

k8s.repo

[root@server2 ~]# scp container-selinux-2.77-1.el7.noarch.rpm server5:~

container-selinux-2.77-1.el7.noarch.rpm 100% 37KB 7.9MB/s 00:00

[root@server5 ~]# yum install docker-ce container-selinux-2.77-1.el7.noarch.rpm -y # install Docker

[root@server5 ~]# yum install -y kubelet kubeadm

[root@server5 ~]# systemctl start docker

[root@server5 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@server2 ~]# scp /etc/docker/daemon.json server5:/etc/docker/

daemon.json

[root@server2 ~]# scp /etc/sysctl.d/k8s.conf server5:/etc/sysctl.d/

k8s.conf

[root@server5 ~]# sysctl --system

[root@server5 ~]# systemctl restart docker

[root@server5 ~]# systemctl start kubelet

[root@server5 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@server2 ~]# scp flanneld-v0.12.0-amd64.docker server5:~

flanneld-v0.12.0-amd64.docker 100% 51MB 12.2MB/s 00:04

[root@server2 ~]# scp k8s.tar server5:~

k8s.tar 100% 693MB 6.3MB/s 01:50

[root@server5 ~]# docker load -i flanneld-v0.12.0-amd64.docker # load the flannel network plugin image

[root@server5 ~]# docker load -i k8s.tar # load the images k8s needs

[root@server5 ~]# modprobe ip_vs # load the IPVS kernel modules

[root@server5 ~]# modprobe ip_vs_rr

[root@server5 ~]# modprobe ip_vs_wrr

[root@server5 ~]# modprobe ip_vs_sh

[root@server5 ~]# modprobe nf_conntrack_ipv4

[root@server5 ~]# lsmod |grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145497 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133095 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

[root@server5 ~]# swapoff -a # turn swap off

[root@server5 ~]# vim /etc/fstab

# /dev/mapper/rhel-swap swap swap defaults 0 0

[root@server5 ~]# kubeadm join 172.25.60.100:8443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:8f7d2ab1a5e98aadbee8caba2426c79a48b17ce3a725d1bb56bc48624725e5d2

# join server5 to the Kubernetes cluster as a worker node

[root@server1 yum.repos.d]# kubectl get node

NAME STATUS ROLES AGE VERSION

server2 Ready master 77m v1.18.2

server3 Ready master 72m v1.18.2

server4 Ready master 68m v1.18.2

server5 Ready <none> 76s v1.18.2

[root@server1 yum.repos.d]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7ff77c879f-2nndl 1/1 Running 0 78m 10.244.2.2 server4 <none> <none>

coredns-7ff77c879f-6cwlc 1/1 Running 0 78m 10.244.1.2 server3 <none> <none>

etcd-server2 1/1 Running 2 78m 172.25.60.2 server2 <none> <none>

etcd-server3 1/1 Running 1 73m 172.25.60.3 server3 <none> <none>

etcd-server4 1/1 Running 2 69m 172.25.60.4 server4 <none> <none>

kube-apiserver-server2 1/1 Running 2 78m 172.25.60.2 server2 <none> <none>

kube-apiserver-server3 1/1 Running 1 73m 172.25.60.3 server3 <none> <none>

kube-apiserver-server4 1/1 Running 1 69m 172.25.60.4 server4 <none> <none>

kube-controller-manager-server2 1/1 Running 7 78m 172.25.60.2 server2 <none> <none>

kube-controller-manager-server3 1/1 Running 2 73m 172.25.60.3 server3 <none> <none>

kube-controller-manager-server4 1/1 Running 3 69m 172.25.60.4 server4 <none> <none>

kube-flannel-ds-amd64-6jjvw 1/1 Running 0 50m 172.25.60.3 server3 <none> <none>

kube-flannel-ds-amd64-9vmcj 1/1 Running 0 2m29s 172.25.60.5 server5 <none> <none>

kube-flannel-ds-amd64-h9sgg 1/1 Running 1 50m 172.25.60.2 server2 <none> <none>

kube-flannel-ds-amd64-jg7rk 1/1 Running 0 50m 172.25.60.4 server4 <none> <none>

kube-proxy-24vrj 1/1 Running 0 78m 172.25.60.2 server2 <none> <none>

kube-proxy-9mthn 1/1 Running 0 69m 172.25.60.4 server4 <none> <none>

kube-proxy-fjk78 1/1 Running 0 73m 172.25.60.3 server3 <none> <none>

kube-proxy-lw4z8 1/1 Running 0 2m30s 172.25.60.5 server5 <none> <none>

kube-scheduler-server2 1/1 Running 8 78m 172.25.60.2 server2 <none> <none>

kube-scheduler-server3 1/1 Running 2 73m 172.25.60.3 server3 <none> <none>

kube-scheduler-server4 1/1 Running 6 69m 172.25.60.4 server4 <none> <none>

[root@server1 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@server1 ~]# source .bashrc

1.6 Testing

Check that a test pod can run on server5:

[root@server1 ~]# kubectl run test --image=busybox -it --restart=Never

If you don't see a command prompt, try pressing enter.

/ # [root@server1 ~]#

[root@server1 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test 0/1 Completed 0 97s 10.244.3.2 server5 <none> <none>

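Test: shut down server2 and check that the load balancer fails over:

[root@server3 ~]# ip addr show # server3 has taken over the VIP (tolerated master failures: (n-1)/2, so (3-1)/2 = 1; with the current three masters only one may be down)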

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:59:12:9d brd ff:ff:ff:ff:ff:ff

inet 172.25.60.3/24 brd 172.25.60.255 scope global eth0

valid_lft forever preferred_lft forever

inet 172.25.60.100/32 scope global eth0

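The VIP has moved to server3, so server3 is now the keepalived MASTER in front of the k8s API servers. When server2 comes back up, it takes the VIP back, because its priority is higher than that of the other hosts.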
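To see which host currently holds the VIP without reading the full `ip addr show` output, the address can be searched for directly. A minimal sketch, assuming a hypothetical helper `holds_vip` that reads `ip addr` style output on standard input:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given address appears as an inet entry
# in `ip addr` style output read from stdin.
holds_vip() {
  # The trailing slash keeps 172.25.60.10 from matching 172.25.60.100.
  grep -qF "inet $1/"
}

# Intended use on each load-balancer host (not executed here):
#   ip -4 addr show eth0 | holds_vip 172.25.60.100 && echo "VIP is here"
```

Run on server2, server3 and server4 in turn, the check should succeed on exactly one host at a time.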