SQ car obstacle avoidance with Intel Realsense D435: depth-value filtering based on a linear gradient

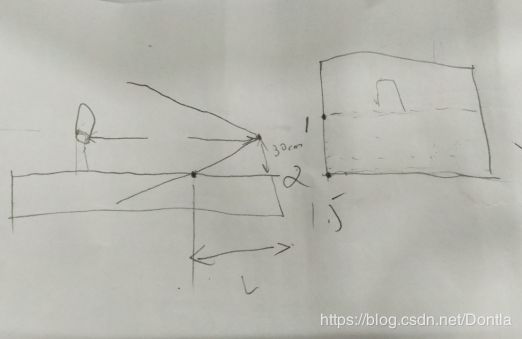

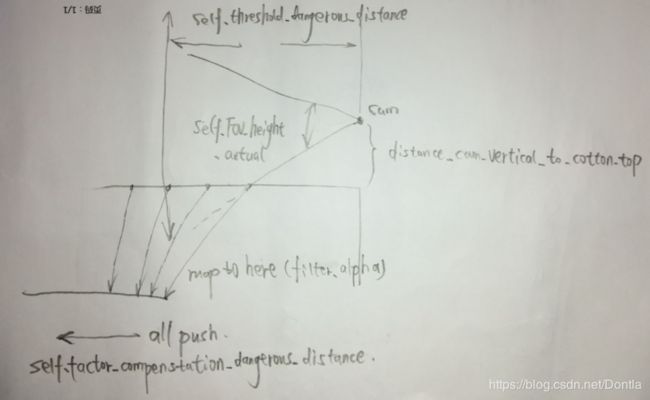

Schematic diagram

Related code

# -*- coding: utf-8 -*-
"""
@File    : 191224_obstacle_detection_建立梯度.py
@Time    : 2019/12/24 14:51
@Author  : Dontla
@Email   : [email protected]
@Software: PyCharm
"""
import time
import numpy as np
import pyrealsense2 as rs
import cv2
import sys
from numba import jit

# @jit
# jit does not seem to work here for some unknown reason, and enabling it gave no visible speedup anyway
def filter_alpha(depth_image, filter_alpha):
    if filter_alpha > 1:
        # Get the height and width of depth_image
        h, w = depth_image.shape[0], depth_image.shape[1]  # 360, 640
        # Build the upper and lower alpha arrays (several approaches work)
        # filter_upper = np.array([1] * int(h / 2))
        filter_upper = np.full(h // 2, 1)
        # np.linspace needs an integer sample count; h - h // 2 also covers odd heights
        filter_lower = np.linspace(1, filter_alpha, h - h // 2)
        # Concatenate filter_upper and filter_lower
        filter = np.r_[filter_upper, filter_lower]
        # print(filter)
        # print(filter.shape)  # (360,)
        # print(filter_alpha_upper)
        # print(filter_alpha_upper.shape)  # (180,)
        # print(filter_alpha_lower)
        # print(filter_alpha_lower.shape)  # (180,)
        # Scale each image row by its own factor
        return (depth_image.T * filter).T
    else:
        return depth_image

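# To keep cotton that is too close at the bottom of the image from being falsely detected,
# a gradient filter could be set up with two nested for loops.
# But it seems the image would still have to be split in half, discarding the lower half and
# judging only by the upper half, because even with a gradient on the lower half...
# (a gradient is still needed after all...)
@jit
def traversing_pixels(depth_image, threshold_dangerous_distance):
    num_dangerous = 0
    num_all_pixels = 0
    depth_image_ravel = depth_image.ravel()
    # depth_image_segmentation is the segmented image (two colors: red and blue)
    depth_image_segmentation_ravel = []
    for pixel in depth_image_ravel:
        num_all_pixels += 1
        # The first condition works somewhat better (a depth of 0 is skipped)
        if pixel < threshold_dangerous_distance and pixel != 0:
            # if pixel < threshold_dangerous_distance:
            num_dangerous += 1
            depth_image_segmentation_ravel.append(0)
        else:
            depth_image_segmentation_ravel.append(6000)
    depth_image_segmentation = np.array(depth_image_segmentation_ravel).reshape(depth_image.shape)
    return num_all_pixels, num_dangerous, depth_image_segmentation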

class ObstacleDetection(object):
    def __init__(self):
        # self.cam_serials = ['838212073161', '827312071726']
        # self.cam_serials = ['838212073161', '827312071726', '838212073249', '827312070790', '836612072369',
        #                     '826212070395']
        self.cam_serials = ['838212073161']
        self.cam_width, self.cam_height = 640, 360
        # [Dangerous distance, in mm]
        self.threshold_dangerous_distance = 3000
        # [Vertical distance from the camera to the cotton plane, in mm]
        self.distance_cam_vertical_to_cotton_top = 260
        # [Dangerous-distance compensation factor] Pushes the depth at the bottom of the image
        # away from the threshold to avoid false detections
        self.factor_compensation_dangerous_distance = 1.5
        # [Threshold on the fraction of dangerous pixels]
        self.threshold_dangerous_scale = 0.05
        # [Camera field of view, in degrees]
        self.FOV_width = 69.4
        self.FOV_height = 42.5
        self.FOV_scale = self.FOV_height / self.FOV_width  # 0.6123919308357348
        # [Actual vertical field of view after the resolution change]
        if self.cam_height / self.cam_width < self.FOV_scale:
            self.FOV_height_actual = self.FOV_width * self.cam_height / self.cam_width
        else:
            self.FOV_height_actual = self.FOV_height
        # [Compute the filter alpha (distance_min is the depth at the very bottom of the image,
        # i.e. the distance to the nearest visible cotton)]
        # With a vertical camera-to-cotton-top distance of 800, the minimum distance is 2256;
        # with a dangerous distance of 2000 the alpha value is 0.88
        # With a vertical camera-to-cotton-top distance of 800, the minimum distance is 2256;
        # with a dangerous distance of 3000 the alpha value is 1.32
        # So self.filter_alpha must be checked against 1 before filtering
        # (this check is already done inside filter_alpha())
        self.distance_min = self.distance_cam_vertical_to_cotton_top / (
            np.tan(self.FOV_height_actual / 2 * np.pi / 180))
        self.filter_alpha = self.threshold_dangerous_distance / self.distance_min * self.factor_compensation_dangerous_distance

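    def obstacle_detection(self):
        # print(self.distance_min)  # 2256.7829632201597
        # print(self.filter_alpha)  # 0.8862172537611853
        # Number of cameras (set the total number of cameras to use here)
        cam_num = 6
        ctx = rs.context()

        '''Continuous verification mechanism'''
        # D·C 1911202: create max_veri_times (maximum number of verification attempts) and
        # continuous_stable_value (required number of consecutive stable readings), used to
        # judge whether the devices are in a stable state after a reset
        max_veri_times = 100
        continuous_stable_value = 5
        print('\n', end='')
        print('Starting continuous verification, stable value: {}, maximum attempts: {}:'.format(
            continuous_stable_value, max_veri_times))
        continuous_value = 0
        veri_times = 0
        while True:
            devices = ctx.query_devices()
            connected_cam_num = len(devices)
            print('Number of cameras: {}'.format(connected_cam_num))
            if connected_cam_num == cam_num:
                continuous_value += 1
                if continuous_value == continuous_stable_value:
                    break
            else:
                continuous_value = 0
            veri_times += 1
            if veri_times == max_veri_times:
                print("Detection timed out, please check the camera connections!")
                sys.exit()

        '''Reset the cameras in a loop'''
        # Should there be a delay after hardware_reset()? Without one an error is raised
        print('\n', end='')
        print('Starting camera initialization:')
        for dev in ctx.query_devices():
            # Store the device serial number in a variable first, to avoid querying the device
            # info too often in the for loop below (though I don't know whether it is re-queried each time)
            dev_serial = dev.get_info(rs.camera_info.serial_number)
            # Match serial numbers and reset only the specific cameras we need to reset (note the
            # order of the two for loops: which one is outer and which is inner matters, otherwise
            # a camera that was just reset gets accessed again and raises an error)
            for serial in self.cam_serials:
                if serial == dev_serial:
                    dev.hardware_reset()
                    # Surprisingly, a statement like the one below does not raise an error. Wasn't dev
                    # just reset? Maybe the difference is that it is not accessed by looping over ctx.query_devices()?
                    # Perhaps right after a reset the device is still visible through ctx.query_devices()
                    # but its address is not stored? If so, that would also explain why
                    # len(ctx.query_devices()) can return the device count while accessing info such as
                    # the serial number raises an error
                    print('Camera {} initialized successfully'.format(dev.get_info(rs.camera_info.serial_number)))

        '''Continuous verification mechanism'''
        # D·C 1911202: create max_veri_times (maximum number of verification attempts) and
        # continuous_stable_value (required number of consecutive stable readings), used to
        # judge whether the devices are in a stable state after a reset
        print('\n', end='')
        print('Starting continuous verification, stable value: {}, maximum attempts: {}:'.format(
            continuous_stable_value, max_veri_times))
        continuous_value = 0
        veri_times = 0
        while True:
            devices = ctx.query_devices()
            connected_cam_num = len(devices)
            print('Number of cameras: {}'.format(connected_cam_num))
            if connected_cam_num == cam_num:
                continuous_value += 1
                if continuous_value == continuous_stable_value:
                    break
            else:
                continuous_value = 0
            veri_times += 1
            if veri_times == max_veri_times:
                print("Detection timed out, please check the camera connections!")
                sys.exit()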

        '''Configure the basic objects for each camera'''
        for i in range(len(self.cam_serials)):
            locals()['pipeline' + str(i)] = rs.pipeline(ctx)
            locals()['config' + str(i)] = rs.config()
            locals()['config' + str(i)].enable_device(self.cam_serials[i])
            # Why does setting 1280×720 raise an error? The Intel Realsense USB port already shows up as 3.0
            # locals()['config' + str(i)].enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
            # locals()['config' + str(i)].enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)
            locals()['config' + str(i)].enable_stream(rs.stream.depth, self.cam_width, self.cam_height,
                                                      rs.format.z16, 30)
            locals()['config' + str(i)].enable_stream(rs.stream.color, self.cam_width, self.cam_height,
                                                      rs.format.bgr8, 30)
            locals()['pipeline' + str(i)].start(locals()['config' + str(i)])
            # Create the align object (align depth to color)
            locals()['align' + str(i)] = rs.align(rs.stream.color)

        '''Run the cameras'''
        try:
            while True:
                start_time = time.time()
                for i in range(len(self.cam_serials)):
                    locals()['frames' + str(i)] = locals()['pipeline' + str(i)].wait_for_frames()
                    # Get the aligned frameset
                    locals()['aligned_frames' + str(i)] = locals()['align' + str(i)].process(
                        locals()['frames' + str(i)])
                    # Get the aligned depth frame and color frame
                    locals()['aligned_depth_frame' + str(i)] = locals()[
                        'aligned_frames' + str(i)].get_depth_frame()
                    locals()['color_frame' + str(i)] = locals()['aligned_frames' + str(i)].get_color_frame()
                    if not locals()['aligned_depth_frame' + str(i)] or not locals()['color_frame' + str(i)]:
                        continue
                    # Get the color frame intrinsics
                    locals()['color_profile' + str(i)] = locals()['color_frame' + str(i)].get_profile()
                    locals()['cvsprofile' + str(i)] = rs.video_stream_profile(
                        locals()['color_profile' + str(i)])
                    locals()['color_intrin' + str(i)] = locals()['cvsprofile' + str(i)].get_intrinsics()
                    locals()['color_intrin_part' + str(i)] = [locals()['color_intrin' + str(i)].ppx,
                                                              locals()['color_intrin' + str(i)].ppy,
                                                              locals()['color_intrin' + str(i)].fx,
                                                              locals()['color_intrin' + str(i)].fy]
                    locals()['color_image' + str(i)] = np.asanyarray(
                        locals()['color_frame' + str(i)].get_data())
                    locals()['depth_image' + str(i)] = np.asanyarray(
                        locals()['aligned_depth_frame' + str(i)].get_data())
                    # [Alpha filtering]
                    locals()['depth_image_alpha_filter' + str(i)] = filter_alpha(locals()['depth_image' + str(i)],
                                                                                 self.filter_alpha)
                    # [Traverse the depth image pixels; if the fraction of pixels closer than the
                    # dangerous distance exceeds the threshold, raise a warning]
                    locals()['num_all_pixels' + str(i)], locals()['num_dangerous' + str(i)], locals()[
                        'depth_image_segmentation' + str(i)] = traversing_pixels(
                        locals()['depth_image_alpha_filter' + str(i)], self.threshold_dangerous_distance)
                    print('num_all_pixels:{}'.format(locals()['num_all_pixels' + str(i)]))
                    print('num_dangerous:{}'.format(locals()['num_dangerous' + str(i)]))
                    locals()['dangerous_scale' + str(i)] = locals()['num_dangerous' + str(i)] / locals()[
                        'num_all_pixels' + str(i)]
                    print('Dangerous ratio: {}'.format(locals()['dangerous_scale' + str(i)]))
                    if locals()['dangerous_scale' + str(i)] > self.threshold_dangerous_scale:
                        print("Distance warning!")
                    locals()['depth_colormap' + str(i)] = cv2.applyColorMap(
                        cv2.convertScaleAbs(locals()['depth_image_segmentation' + str(i)], alpha=0.0425),
                        cv2.COLORMAP_JET)
                    locals()['image' + str(i)] = np.hstack(
                        (locals()['color_image' + str(i)], locals()['depth_colormap' + str(i)]))
                    # Note: do not use Chinese characters in window names, they may come out garbled
                    cv2.imshow('win{}:{}'.format(i, self.cam_serials[i]), locals()['image' + str(i)])
                    # cv2.imshow('colorWin{}: {}'.format(i, self.cam_serials[i]), locals()['color_image' + str(i)])
                    # cv2.imshow('depthWin{}: {}'.format(i, self.cam_serials[i]), locals()['depth_colormap' + str(i)])
                cv2.waitKey(1)
                end_time = time.time()
                # print('Single-frame run time: {}'.format(end_time - start_time))
        # On an exception, start the detection function again; if needed, the continuous
        # verification and the camera reset can all be moved in here as well
        except:
            print('\nAn exception occurred, please check the camera connections again!\n')
            for i in range(len(self.cam_serials)):
                cv2.destroyAllWindows()
                locals()['pipeline' + str(i)].stop()
            ObstacleDetection().obstacle_detection()
        finally:
            for i in range(len(self.cam_serials)):
                locals()['pipeline' + str(i)].stop()

if __name__ == '__main__':
    ObstacleDetection().obstacle_detection()
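The row gradient and the pixel count above can also be written without an explicit Python loop. Below is a minimal sketch on a tiny synthetic depth image, assuming plain NumPy is acceptable in place of the `@jit` loop. The helper names `row_gradient` and `count_dangerous` are hypothetical and not part of the original script; the 0 / 6000 segmentation values and the "skip zero depth" rule are taken from `traversing_pixels`.

```python
import numpy as np


def row_gradient(height, alpha):
    """Per-row multipliers: 1 for the upper half, rising linearly from 1 to alpha in the lower half."""
    upper = np.ones(height // 2)
    lower = np.linspace(1.0, alpha, height - height // 2)
    return np.concatenate([upper, lower])


def count_dangerous(depth_image, threshold):
    """Vectorized counterpart of traversing_pixels (hypothetical helper)."""
    # A pixel counts as dangerous if it is closer than the threshold and not 0 (0 = no depth reading)
    dangerous = (depth_image < threshold) & (depth_image != 0)
    # Same two-value segmentation as the loop version: 0 for dangerous pixels, 6000 otherwise
    segmentation = np.where(dangerous, 0, 6000)
    return depth_image.size, int(dangerous.sum()), segmentation


# Tiny synthetic 4x2 "depth image" in mm, standing in for a real 360x640 frame
depth = np.array([[0, 5000],
                  [2000, 4000],
                  [1000, 1000],
                  [1000, 1000]], dtype=np.uint16)

# Scale each row by its own factor; the bottom row is multiplied by alpha = 4.0
filtered = depth * row_gradient(depth.shape[0], 4.0)[:, np.newaxis]
total, dangerous, seg = count_dangerous(filtered, 3000)
print(total, dangerous)
print(seg)
```

In this toy frame the bottom row reads 1000 mm, which would be flagged without the gradient; after scaling it is pushed past the 3000 mm threshold, while the 1000 mm readings one row higher are still counted. Whether this is faster than the numba loop on real frames is something I have not measured.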

Reference article: Why shouldn't an Intel Realsense D435 depth camera be mounted too high in a depth-based horizontal obstacle detection (obstacle avoidance) setup?
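The mounting-height question can be checked numerically with the same formulas used in `__init__`. The sketch below is only an illustration under the script's own assumptions (640×360 stream, horizontal FOV of 69.4°, vertical FOV scaled linearly with the aspect ratio). The function names `min_visible_distance` and `gradient_alpha` are hypothetical.

```python
import math


def min_visible_distance(cam_height_mm, fov_vertical_deg):
    """Depth at the bottom image row, where the lowest ray meets the cotton plane."""
    return cam_height_mm / math.tan(math.radians(fov_vertical_deg / 2))


def gradient_alpha(danger_mm, cam_height_mm, fov_vertical_deg, compensation=1.0):
    """Filter alpha as computed in the script: danger distance / minimum distance * compensation."""
    return danger_mm / min_visible_distance(cam_height_mm, fov_vertical_deg) * compensation


# Effective vertical FOV the script uses for a 640x360 stream
fov_v = 69.4 * 360 / 640

for height in (260, 800):
    d_min = min_visible_distance(height, fov_v)
    alpha = gradient_alpha(3000, height, fov_v, compensation=1.5)
    print('height={} mm  distance_min={:.1f} mm  alpha={:.2f}'.format(height, d_min, alpha))
```

For a vertical distance of 800 mm the script's comments give a minimum distance of about 2256 mm, and with a dangerous distance of 2000 mm the alpha drops to about 0.88. Since `filter_alpha()` returns the image unchanged whenever alpha is not greater than 1, the gradient has no effect in that configuration.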