Building a YARN High-Availability Cluster on Hadoop 2.8.5 (YARN HA)

See also: Building an HDFS High-Availability Cluster on Hadoop 2.8.5 (HDFS HA)

https://blog.csdn.net/u014635374/article/details/104997451

Once HDFS HA is in place, you only need to modify yarn-site.xml as described below.

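YARN HA setup

Like HDFS, a YARN cluster can be configured for high availability. This section explains the YARN HA architecture and then walks through the concrete setup steps.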

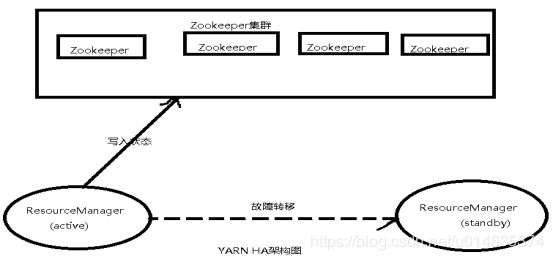

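7.1 Architecture

In a Hadoop YARN cluster, the ResourceManager tracks the resources in the cluster and schedules applications (for example, MapReduce jobs). Before Hadoop 2.4 a cluster had only one ResourceManager, so it was a single point of failure: when it went down, the whole cluster was affected. The high-availability feature adds redundancy in the form of an active/standby ResourceManager pair, so that failover becomes possible.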

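The YARN HA architecture is as shown in the figure:

As with HDFS HA, only one ResourceManager is active at any given time. When automatic failover is not enabled, you must manually transition one of the ResourceManagers to the active state. Automatic failover can be implemented with ZooKeeper: when the active ResourceManager stops responding or fails, the other ResourceManager is automatically elected as active through ZooKeeper. Unlike HDFS HA, the ZKFC in the ResourceManager runs only as a thread inside the ResourceManager, not as a separate process.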
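For the manual case described above, the transition can be sketched with `yarn rmadmin`. This is a minimal sketch, not the author's procedure: it assumes automatic failover is disabled, that the two ResourceManagers are registered as `rm1` and `rm2` (the ids used later in this post), and that it is run from the Hadoop install directory. The function name `manual_failover` and the `YARN_CMD` variable are hypothetical helpers.

```shell
# Path to the yarn launcher; override YARN_CMD if it lives elsewhere (assumption).
YARN_CMD="${YARN_CMD:-bin/yarn}"

# manual_failover <id-to-demote> <id-to-promote>
# Demotes the first ResourceManager, then promotes the second one.
manual_failover() {
  $YARN_CMD rmadmin -transitionToStandby "$1" || return 1
  $YARN_CMD rmadmin -transitionToActive "$2"
}
```

A call such as `manual_failover rm1 rm2` would hand the active role from rm1 to rm2. When automatic failover is enabled, rmadmin refuses these manual transitions unless `--forcemanual` is passed, which should be used with caution.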

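7.2 Building the YARN cluster

The overall approach: finish the configuration on centoshadoop1 first, then distribute it to centoshadoop2, centoshadoop3, and centoshadoop4. The roles of the nodes in the cluster are assigned as shown in the table below: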

Role assignment for the YARN HA cluster with ZooKeeper

| Node | Roles |
| --- | --- |
| centoshadoop1 | ResourceManager, NodeManager, QuorumPeerMain (ZooKeeper process) |
| centoshadoop2 | ResourceManager, NodeManager, QuorumPeerMain (ZooKeeper process) |
| centoshadoop3 | NodeManager, QuorumPeerMain (ZooKeeper process) |
| centoshadoop4 | NodeManager, QuorumPeerMain (ZooKeeper process) |

YARN HA configuration steps:

7.2.1 Configuring yarn-site.xml

YARN HA is configured by adding further properties to the Hadoop configuration file yarn-site.xml. The complete content of yarn-site.xml is as follows:
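For orientation, the HA-related properties in yarn-site.xml typically look like the fragment printed by the sketch below. It is not the author's file: the cluster id `yarn-ha-cluster` and the ZooKeeper client port 2181 are assumptions, the rm1/rm2 host mapping is inferred from the state checks later in this post, and the helper name `print_yarn_ha_fragment` is hypothetical. The function only prints to stdout so the output can be reviewed and merged by hand.

```shell
# print_yarn_ha_fragment [rm-id]
# Prints an illustrative set of HA properties; rm-id defaults to rm1.
print_yarn_ha_fragment() {
  ha_id="${1:-rm1}"
  cat <<EOF
<!-- Illustrative only. Values marked ASSUMED are not from the original post. -->
<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>
<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>yarn-ha-cluster</value> <!-- ASSUMED name -->
</property>
<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>centoshadoop1</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>centoshadoop2</value>
</property>
<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>${ha_id}</value> <!-- optional; differs per ResourceManager node -->
</property>
<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>centoshadoop1:2181,centoshadoop2:2181,centoshadoop3:2181,centoshadoop4:2181</value> <!-- ASSUMED port -->
</property>
<property>
  <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<property>
  <name>yarn.resourcemanager.recovery.enabled</name>
  <value>true</value>
</property>
<property>
  <name>yarn.resourcemanager.store.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
</property>
EOF
}
```

Calling `print_yarn_ha_fragment rm2` prints the same fragment with `yarn.resourcemanager.ha.id` set to rm2, which is the kind of per-node difference one would expect between the two ResourceManager hosts.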

Create the following two directories on both centoshadoop1 and centoshadoop2:

mkdir -p /home/hadoop/yarn/local

mkdir -p /home/hadoop/yarn/logs

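centoshadoop2 reports the following error on startup:

WARN org.apache.hadoop.ipc.Client: Failed to connect to server: centoshadoop1/192.168.227.140:8031: retries get failed due to exceeded maximum allowed retries number: 0

Port 8031 on centoshadoop1 is not reachable, so open the ResourceManager ports in the firewall: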

firewall-cmd --zone=public --add-port=8030/tcp --permanent

firewall-cmd --zone=public --add-port=8031/tcp --permanent

firewall-cmd --zone=public --add-port=8032/tcp --permanent

firewall-cmd --zone=public --add-port=8033/tcp --permanent

firewall-cmd --zone=public --add-port=23142/tcp --permanent

firewall-cmd --reload
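The same firewall steps can be wrapped in a small loop. This is a minimal sketch assuming firewalld with the `public` zone, as in the commands above; the function name `open_tcp_ports` and the `FIREWALL_CMD` variable are hypothetical helpers, and the commands need root privileges.

```shell
# firewalld client; override FIREWALL_CMD for a dry run (assumption).
FIREWALL_CMD="${FIREWALL_CMD:-firewall-cmd}"

# open_tcp_ports <port>...
# Permanently opens each TCP port in the public zone, then reloads the rules.
open_tcp_ports() {
  for fw_port in "$@"; do
    $FIREWALL_CMD --zone=public --add-port="${fw_port}/tcp" --permanent || return 1
  done
  $FIREWALL_CMD --reload
}
```

For the ports listed above this would be `open_tcp_ports 8030 8031 8032 8033 23142`. Afterwards, `firewall-cmd --zone=public --list-ports` shows which ports are actually open.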

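Distribute yarn-site.xml to the other three machines (shown here for centoshadoop2; repeat for centoshadoop3 and centoshadoop4):

scp -r yarn-site.xml hadoop@centoshadoop2:/home/hadoop/hadoop-ha/hadoop/hadoop-2.8.5/etc/hadoop/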
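Copying the file to all three machines can be sketched as a loop. It assumes the same install path on every node and SSH access for the `hadoop` user, both taken from the scp command above; `distribute_yarn_site`, `SCP_CMD`, and `CONF_DIR` are hypothetical names.

```shell
# scp client and config directory; both can be overridden (assumptions).
SCP_CMD="${SCP_CMD:-scp}"
CONF_DIR="${CONF_DIR:-/home/hadoop/hadoop-ha/hadoop/hadoop-2.8.5/etc/hadoop}"

# distribute_yarn_site <host>...
# Copies the local yarn-site.xml into the same directory on each host.
distribute_yarn_site() {
  for dst_host in "$@"; do
    $SCP_CMD "${CONF_DIR}/yarn-site.xml" "hadoop@${dst_host}:${CONF_DIR}/" || return 1
  done
}
```

Run on centoshadoop1, `distribute_yarn_site centoshadoop2 centoshadoop3 centoshadoop4` covers the other three nodes and stops at the first failed copy.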

Modify the yarn-site.xml on centoshadoop2 as follows:

Stop the resourcemanager process on each machine:

cd /home/hadoop/hadoop-ha/hadoop/hadoop-2.8.5/

sbin/yarn-daemon.sh stop resourcemanager

Start the resourcemanager process on centoshadoop1 and centoshadoop2:

sbin/yarn-daemon.sh start resourcemanager

Monitor the resourcemanager logs on centoshadoop1 and centoshadoop2:

cd /home/hadoop/hadoop-ha/hadoop/hadoop-2.8.5/logs

tail -f yarn-hadoop-resourcemanager-centoshadoop1.log

tail -f yarn-hadoop-resourcemanager-centoshadoop2.log

Start the nodemanager on each of the four nodes:

sbin/yarn-daemon.sh start nodemanager
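Instead of logging in to each node, the start command can be issued over SSH from one machine. This is a sketch under assumptions: passwordless SSH for the `hadoop` user and the same install path on every node. The names `start_nodemanagers`, `SSH_CMD`, and `HADOOP_DIR` are hypothetical.

```shell
# ssh client and Hadoop install directory; both can be overridden (assumptions).
SSH_CMD="${SSH_CMD:-ssh}"
HADOOP_DIR="${HADOOP_DIR:-/home/hadoop/hadoop-ha/hadoop/hadoop-2.8.5}"

# start_nodemanagers <host>...
# Starts the NodeManager daemon on each host and reports hosts that failed.
start_nodemanagers() {
  for nm_host in "$@"; do
    echo "starting nodemanager on ${nm_host}"
    $SSH_CMD "hadoop@${nm_host}" "cd ${HADOOP_DIR} && sbin/yarn-daemon.sh start nodemanager" \
      || echo "failed to start nodemanager on ${nm_host}" >&2
  done
}
```

For this cluster the call would be `start_nodemanagers centoshadoop1 centoshadoop2 centoshadoop3 centoshadoop4`. The loop continues past a failed host so that one unreachable node does not block the others.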

Monitor the nodemanager log on each of the four machines:

tail -f yarn-hadoop-nodemanager-centoshadoop1.log

tail -f yarn-hadoop-nodemanager-centoshadoop2.log

tail -f yarn-hadoop-nodemanager-centoshadoop3.log

tail -f yarn-hadoop-nodemanager-centoshadoop4.log

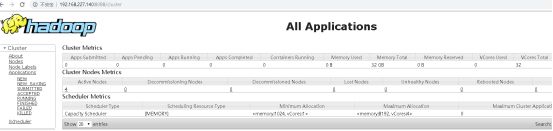

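Open http://centoshadoop1:8088/cluster

If you open the standby ResourceManager at http://centoshadoop2:8088, you are automatically redirected to the active ResourceManager at http://centoshadoop1:8088. This is because the active ResourceManager is running on centoshadoop1, and requests to the standby ResourceManager are redirected to the active one.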

- Check the state of the two ResourceManagers

Check the state of the ResourceManager on centoshadoop1:

bin/yarn rmadmin -getServiceState rm1

active

Then check the state of the ResourceManager on centoshadoop2:

bin/yarn rmadmin -getServiceState rm2

standby
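The two checks above can be folded into one helper that reports which ResourceManager is currently active. A minimal sketch, assuming the ids `rm1` and `rm2` and that it is run from the Hadoop install directory; `find_active_rm` and `YARN_CMD` are hypothetical names.

```shell
# Path to the yarn launcher; override YARN_CMD if it lives elsewhere (assumption).
YARN_CMD="${YARN_CMD:-bin/yarn}"

# find_active_rm <rm-id>...
# Prints the first id whose state is "active"; returns 1 if none is active.
find_active_rm() {
  for rm_id in "$@"; do
    rm_state="$($YARN_CMD rmadmin -getServiceState "$rm_id" 2>/dev/null)"
    if [ "$rm_state" = "active" ]; then
      echo "$rm_id"
      return 0
    fi
  done
  return 1
}
```

`find_active_rm rm1 rm2` prints the id of the active ResourceManager. A non-zero exit status means neither one reported `active`, which is worth checking in the resourcemanager logs.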

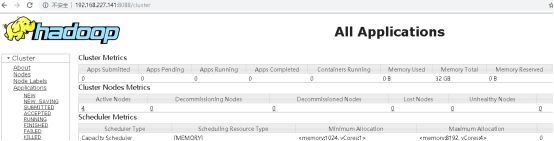

YARN HA failover test

Stop the resourcemanager process on centoshadoop1:

sbin/yarn-daemon.sh stop resourcemanager

Check the state of the ResourceManager on centoshadoop2:

bin/yarn rmadmin -getServiceState rm2

active
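Failover is not instantaneous, so a scripted version of this test needs to poll rather than check once. The sketch below is one way to do that, assuming the id `rm2` for centoshadoop2; the function name `wait_for_active`, its retry count, and its pause length are hypothetical choices.

```shell
# Path to the yarn launcher; override YARN_CMD if it lives elsewhere (assumption).
YARN_CMD="${YARN_CMD:-bin/yarn}"

# wait_for_active <rm-id> [tries] [pause-seconds]
# Polls the given ResourceManager until it reports "active" or tries run out.
wait_for_active() {
  wait_id="$1"
  wait_tries="${2:-30}"
  wait_pause="${3:-1}"
  while [ "$wait_tries" -gt 0 ]; do
    if [ "$($YARN_CMD rmadmin -getServiceState "$wait_id" 2>/dev/null)" = "active" ]; then
      return 0
    fi
    wait_tries=$((wait_tries - 1))
    sleep "$wait_pause"
  done
  return 1
}
```

After stopping the ResourceManager on centoshadoop1, `wait_for_active rm2 30 1 && echo "failover ok"` waits up to roughly 30 seconds for rm2 to take over.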

Open http://centoshadoop2:8088/cluster

Note: during setup, monitor the logs on every node until the logs on all nodes are free of errors.