datax从hive导数据到mysql时hadoop版本不兼容异常

异常原因

写在前面:解决了一些异常情况但是最后没彻底打通,换个方向继续搞,参考的朋友直接跳过

java.lang.NoSuchMethodError: org.apache.hadoop.tracing.SpanReceiverHost.get(Lorg/apache/hadoop/conf/Configuration;Ljava/lang/String;)Lorg/apache/hadoop/tracing/SpanReceiverHost;

at org.apache.hadoop.hdfs.DFSClient.(DFSClient.java:634)

at org.apache.hadoop.hdfs.DFSClient.(DFSClient.java:619)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2596)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:91)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2630)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2612)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:169)

at com.alibaba.datax.plugin.reader.hdfsreader.DFSUtil.getHDFSAllFiles(DFSUtil.java:123)

at com.alibaba.datax.plugin.reader.hdfsreader.DFSUtil.getAllFiles(DFSUtil.java:112)

at com.alibaba.datax.plugin.reader.hdfsreader.HdfsReader$Job.prepare(HdfsReader.java:169)

at com.alibaba.datax.core.job.JobContainer.prepareJobReader(JobContainer.java:715)

at com.alibaba.datax.core.job.JobContainer.prepare(JobContainer.java:308)

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:115)

at com.alibaba.datax.core.Engine.start(Engine.java:92)

at com.alibaba.datax.core.Engine.entry(Engine.java:171)

at com.daqsoft.bdsp.service.impl.PushServiceImpl.test(PushServiceImpl.java:32)

at com.daqsoft.bdsp.controller.PushController.test(PushController.java:32)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:209)

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:136)

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:102)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:877)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:783)

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:87)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:991)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:925)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:974)

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:877)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:661)

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:851)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:742)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:231)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.CorsFilter.doFilterInternal(CorsFilter.java:96)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.boot.actuate.metrics.web.servlet.WebMvcMetricsFilter.filterAndRecordMetrics(WebMvcMetricsFilter.java:158)

at org.springframework.boot.actuate.metrics.web.servlet.WebMvcMetricsFilter.filterAndRecordMetrics(WebMvcMetricsFilter.java:126)

at org.springframework.boot.actuate.metrics.web.servlet.WebMvcMetricsFilter.doFilterInternal(WebMvcMetricsFilter.java:111)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.boot.actuate.web.trace.servlet.HttpTraceFilter.doFilterInternal(HttpTraceFilter.java:90)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.RequestContextFilter.doFilterInternal(RequestContextFilter.java:99)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.HttpPutFormContentFilter.doFilterInternal(HttpPutFormContentFilter.java:109)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.HiddenHttpMethodFilter.doFilterInternal(HiddenHttpMethodFilter.java:93)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:200)

at org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:193)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:198)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:96)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:496)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:140)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:81)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:87)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:342)

at org.apache.coyote.http11.Http11Processor.service(Http11Processor.java:803)

at org.apache.coyote.AbstractProcessorLight.process(AbstractProcessorLight.java:66)

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:790)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1468)

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61)

at java.lang.Thread.run(Thread.java:748)

报错的原因是nosuchmethod异常,查看datax的源码,发现hdfsreader使用的hadoop集群版本是2.7.1版本的.而我们的集群版本是2.6.0;

下载datax源码

下载源码后,导入idea,找到hdfsreader

查看代码得知对hdfs文件的读取发生在DFSUtil方法中,正是这个方法里面调用了pom文件中的hadoop相关依赖.

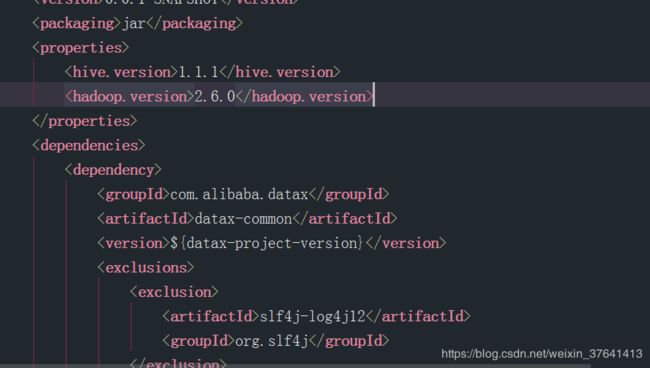

修改pom文件

修改为2.6版本后重新打包,由于我这里只是先做一个能否实现的初步验证.因此直接到datax的根目录下,进入plugins文件夹.

用打包后的文件替换此文件.

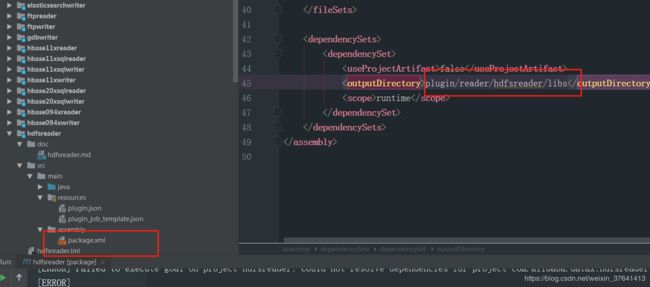

这里有个地方需要注意一下,打包之后没有target目录,是assembly目录下的package.xml中进行了设置,到相应的地方去找打包好的文件.

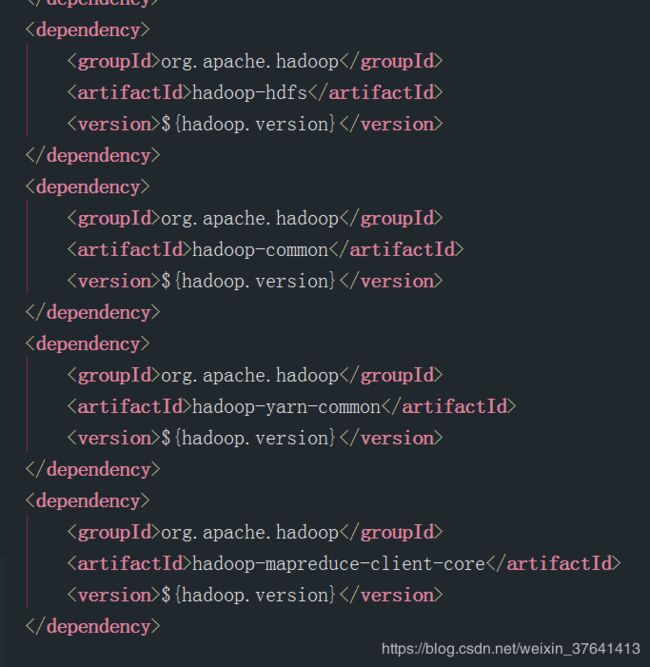

修改libs中的依赖

将这里面的所有的依赖换成需要的版本的依赖.到相应的lib目录底下去替换

进入datax的libs目录下

替换掉hadoop这个jar包的版本,我这里用2.6替换掉了2.7.1这个版本的jar包.

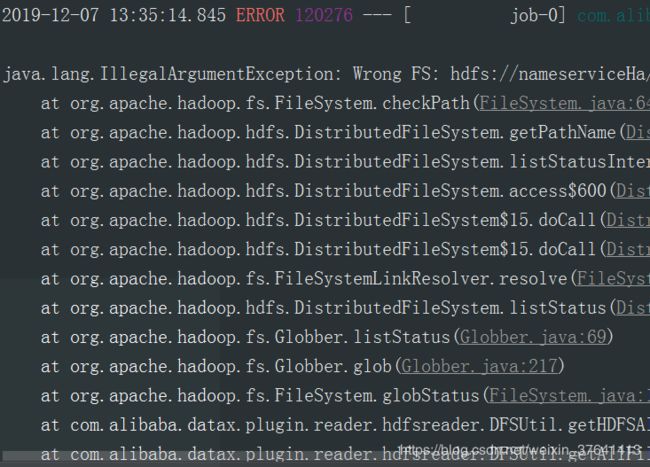

然后再次运行:

java.lang.IllegalArgumentException: Wrong FS: hdfs://nameserviceHa/user/hive/warehouse/spider_test.db/region_test23, expected: hdfs://192.168.100.226:8889

at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:645)

at org.apache.hadoop.hdfs.DistributedFileSystem.getPathName(DistributedFileSystem.java:193)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatusInternal(DistributedFileSystem.java:690)

at org.apache.hadoop.hdfs.DistributedFileSystem.access$600(DistributedFileSystem.java:105)

at org.apache.hadoop.hdfs.DistributedFileSystem$15.doCall(DistributedFileSystem.java:755)

at org.apache.hadoop.hdfs.DistributedFileSystem$15.doCall(DistributedFileSystem.java:751)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.listStatus(DistributedFileSystem.java:751)

at org.apache.hadoop.fs.Globber.listStatus(Globber.java:69)

at org.apache.hadoop.fs.Globber.glob(Globber.java:217)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:1625)

at com.alibaba.datax.plugin.reader.hdfsreader.DFSUtil.getHDFSAllFiles(DFSUtil.java:127)

at com.alibaba.datax.plugin.reader.hdfsreader.DFSUtil.getAllFiles(DFSUtil.java:112)

at com.alibaba.datax.plugin.reader.hdfsreader.HdfsReader$Job.prepare(HdfsReader.java:169)

at com.alibaba.datax.core.job.JobContainer.prepareJobReader(JobContainer.java:715)

at com.alibaba.datax.core.job.JobContainer.prepare(JobContainer.java:308)

at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:115)

at com.alibaba.datax.core.Engine.start(Engine.java:92)

at com.alibaba.datax.core.Engine.entry(Engine.java:171)

at com.daqsoft.bdsp.service.impl.PushServiceImpl.test(PushServiceImpl.java:32)

at com.daqsoft.bdsp.controller.PushController.test(PushController.java:32)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.web.method.support.InvocableHandlerMethod.doInvoke(InvocableHandlerMethod.java:209)

at org.springframework.web.method.support.InvocableHandlerMethod.invokeForRequest(InvocableHandlerMethod.java:136)

at org.springframework.web.servlet.mvc.method.annotation.ServletInvocableHandlerMethod.invokeAndHandle(ServletInvocableHandlerMethod.java:102)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.invokeHandlerMethod(RequestMappingHandlerAdapter.java:877)

at org.springframework.web.servlet.mvc.method.annotation.RequestMappingHandlerAdapter.handleInternal(RequestMappingHandlerAdapter.java:783)

at org.springframework.web.servlet.mvc.method.AbstractHandlerMethodAdapter.handle(AbstractHandlerMethodAdapter.java:87)

at org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:991)

at org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:925)

at org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:974)

at org.springframework.web.servlet.FrameworkServlet.doPost(FrameworkServlet.java:877)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:661)

at org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:851)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:742)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:231)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:166)

at org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

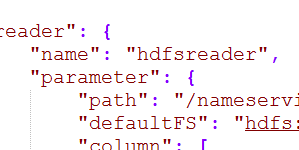

部分异常如以上代码所示,应该是json文件写,在hdfsreader中的path中应该去掉hdfs的有问题.修改后的json文件如图所示

这个依然是不正常的,显示报错提示path路径配置错误,在仔细康康吧.

后面解决了路径问题后报了这个错误,网上找了一圈资料说还是集群版本的问题,需要在hdfsreader中放两个文件.

放上了也没啥用,继续报错.一脸懵逼,问题解决到这里,我准备用sqoop开发了.如果有知道的大神还望不吝赐教.