VINS-Mono代码学习记录(二)---feature_tracker

一、feature_tracker总体流程图解

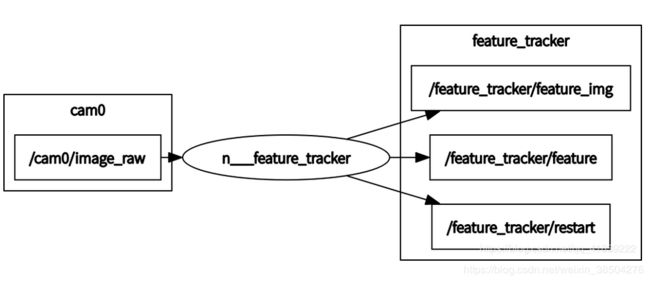

先给出一张feature_tracker的总体流程图,让自己有一个全局意识。这张图真好,感谢原作者。基本这就是feature_tracker这个node搞的事情啦!重点关注它,对! 就是它!

图片转自:https://blog.csdn.net/qq_41839222/article/details/85797156

图片转自:https://blog.csdn.net/qq_41839222/article/details/85797156

二、main()函数分析

开始进入feature_tracker–>src文件夹可以看到,里面的文件共6个,先看feature_tracker_node.cpp中的主函数main()

int main(int argc, char **argv)

{

//【1】初始化,设置句柄,设置logger级别

ros::init(argc, argv, "feature_tracker");

ros::NodeHandle n("~");

ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Info);

//【2】从config-->euroc-->euroc_config.yaml文件中读取参数,包括话题,图像宽高,追踪的特征点数量等等

readParameters(n);

//【3】循环读取每个相机的内参

for (int i = 0; i < NUM_OF_CAM; i++) //NUM_OF_CAM=1

trackerData[i].readIntrinsicParameter(CAM_NAMES[i]);

//【4】如果是鱼眼相机,添加mask去除边缘噪声

if(FISHEYE)

{

for (int i = 0; i < NUM_OF_CAM; i++)

{

trackerData[i].fisheye_mask = cv::imread(FISHEYE_MASK, 0);

if(!trackerData[i].fisheye_mask.data)

{

ROS_INFO("load mask fail");

ROS_BREAK();

}

else

ROS_INFO("load mask success");

}

}

//【5】订阅话题IMAGE_TOPIC(/cam0/image_raw),执行回调函数img_callback

ros::Subscriber sub_img = n.subscribe(IMAGE_TOPIC, 100, img_callback);//当订阅的topic有新的信息时,激活回调函数

//【6】发布feature,实例feature_points,跟踪的特征点,给后端优化用

//发布feature_img,实例ptr,跟踪的特征点图,给RVIZ用和调试用

//发布restart

pub_img = n.advertise("feature", 1000);

pub_match = n.advertise("feature_img",1000);

pub_restart = n.advertise("restart",1000);

/*

if (SHOW_TRACK)

cv::namedWindow("vis", cv::WINDOW_NORMAL);

*/

ros::spin();//ros::spin() 在调用后不会再返回,也就是主程序到这儿就不往下执行了

return 0;

}

上面每一步都给出了详细的注释,其中步骤【2】读取参数的函数readParameters()如下:

void readParameters(ros::NodeHandle &n)

{

std::string config_file;

config_file = readParam(n, "config_file");//ans-->config_file

std::cout<<"config_file"<(n, "vins_folder");

fsSettings["image_topic"] >> IMAGE_TOPIC; //image_topic: "/cam0/image_raw"

fsSettings["imu_topic"] >> IMU_TOPIC; //imu_topic: "/imu0"

MAX_CNT = fsSettings["max_cnt"];//tracking最大特征点数量150

MIN_DIST = fsSettings["min_dist"];//两个特征点之间的最小距离30

ROW = fsSettings["image_height"];//480

COL = fsSettings["image_width"];//752

FREQ = fsSettings["freq"];//publish tracking result 的频率,默认设置为10hz

F_THRESHOLD = fsSettings["F_threshold"];//ransac threshold (pixel)值为1

SHOW_TRACK = fsSettings["show_track"];//发布image topic 值为1

EQUALIZE = fsSettings["equalize"];//if image is too dark or light, trun on equalize to find enough features,默认为1

FISHEYE = fsSettings["fisheye"];//默认不用fisheye 默认值为0

if (FISHEYE == 1)

FISHEYE_MASK = VINS_FOLDER_PATH + "config/fisheye_mask.jpg";

CAM_NAMES.push_back(config_file);//string类型的config_file push到CAM_NAMES vector中

WINDOW_SIZE = 20;

STEREO_TRACK = false;//如果双目 把这个参数改为true

FOCAL_LENGTH = 460;

PUB_THIS_FRAME = false;

if (FREQ == 0)

FREQ = 100;

fsSettings.release();

}

代码中涉及到的变量基本根据变量名理解即可。

步骤【3】读取相机内参,根据config_file文件中model_type的值决定采用何种相机模型,并创建相应模型的对象指针,读取文件在camera_models–>CameraFctory.cc文件中。

步骤【5】和【6】发布和订阅的topic图形表示如下:

在上一节给出的是整个算法中的所有nodes和topics,这里就单独把feature_tracker这个node和相关的topic拿出来了。

在上一节给出的是整个算法中的所有nodes和topics,这里就单独把feature_tracker这个node和相关的topic拿出来了。

三、img_callback()回调函数

接下来看img_callback()回调函数:

void img_callback(const sensor_msgs::ImageConstPtr &img_msg)

{

//[1]判断是否是第一帧

if(first_image_flag)//首先是true

{

first_image_flag = false;

first_image_time = img_msg->header.stamp.toSec();

last_image_time = img_msg->header.stamp.toSec();

return;

}

//[2] 判断时间间隔是否正确,有问题则restart

if (img_msg->header.stamp.toSec() - last_image_time > 1.0 || img_msg->header.stamp.toSec() < last_image_time)

{

ROS_WARN("image discontinue! reset the feature tracker!");

first_image_flag = true;

last_image_time = 0;

pub_count = 1;

std_msgs::Bool restart_flag;

restart_flag.data = true;

pub_restart.publish(restart_flag);

return;

}

last_image_time = img_msg->header.stamp.toSec();

//[3] 发布频率控制,并不是每读入一帧图像,就要发布特征点,通过判断间隔时间内的发布次数

if (round(1.0 * pub_count / (img_msg->header.stamp.toSec() - first_image_time)) <= FREQ)//FREQ=10hz

{

PUB_THIS_FRAME = true;

// reset the frequency control,pub_count=1 时间间隔内的发布频率十分接近设定频率时,更新时间间隔起始时刻,并将数据发布次数置0

if (abs(1.0 * pub_count / (img_msg->header.stamp.toSec() - first_image_time) - FREQ) < 0.01 * FREQ)

{

first_image_time = img_msg->header.stamp.toSec();

pub_count = 0;

}

}

else

PUB_THIS_FRAME = false;

//[4]将图像编码8UC1转换为mono8

cv_bridge::CvImageConstPtr ptr;

if (img_msg->encoding == "8UC1")

{

sensor_msgs::Image img;

img.header = img_msg->header;

img.height = img_msg->height;

img.width = img_msg->width;

img.is_bigendian = img_msg->is_bigendian;

img.step = img_msg->step;

img.data = img_msg->data;

img.encoding = "mono8";

ptr = cv_bridge::toCvCopy(img, sensor_msgs::image_encodings::MONO8);

}

else

ptr = cv_bridge::toCvCopy(img_msg, sensor_msgs::image_encodings::MONO8);

cv::Mat show_img = ptr->image;

TicToc t_r;

for (int i = 0; i < NUM_OF_CAM; i++) //NUM_OF_CAM默认为1

{

ROS_DEBUG("processing camera %d", i);

//[5]单目时:FeatureTracker::readImage() 函数读取图像数据进行处理

if (i != 1 || !STEREO_TRACK) //STEREO_TRACK默认为false

trackerData[i].readImage(ptr->image.rowRange(ROW * i, ROW * (i + 1)), img_msg->header.stamp.toSec());//ROW为图像的height

else

{

if (EQUALIZE)//默认为1,均衡化

{

cv::Ptr clahe = cv::createCLAHE();//直方图均衡化

clahe->apply(ptr->image.rowRange(ROW * i, ROW * (i + 1)), trackerData[i].cur_img);//cur_img是光流跟踪的前一帧的图像数据

}

else

trackerData[i].cur_img = ptr->image.rowRange(ROW * i, ROW * (i + 1));

}

#if SHOW_UNDISTORTION

trackerData[i].showUndistortion("undistrotion_" + std::to_string(i));

#endif

}

//【6】更新全局ID

for (unsigned int i = 0;; i++)

{

bool completed = false;

for (int j = 0; j < NUM_OF_CAM; j++)

if (j != 1 || !STEREO_TRACK)

completed |= trackerData[j].updateID(i);

if (!completed)

break;

}

//[7]如果PUB_THIS_FRAME=1则进行发布

if (PUB_THIS_FRAME)

{

//将特征点id,矫正后归一化平面的3D点(x,y,z=1),像素2D点(u,v),像素的速度(vx,vy),封装成sensor_msgs::PointCloudPtr类型的feature_points实例中,发布到pub_img;

pub_count++;

sensor_msgs::PointCloudPtr feature_points(new sensor_msgs::PointCloud);

sensor_msgs::ChannelFloat32 id_of_point;

sensor_msgs::ChannelFloat32 u_of_point;

sensor_msgs::ChannelFloat32 v_of_point;

sensor_msgs::ChannelFloat32 velocity_x_of_point;

sensor_msgs::ChannelFloat32 velocity_y_of_point;

feature_points->header = img_msg->header;

feature_points->header.frame_id = "world";

vector> hash_ids(NUM_OF_CAM);

for (int i = 0; i < NUM_OF_CAM; i++)

{

auto &un_pts = trackerData[i].cur_un_pts;

auto &cur_pts = trackerData[i].cur_pts;

auto &ids = trackerData[i].ids;

auto &pts_velocity = trackerData[i].pts_velocity;

for (unsigned int j = 0; j < ids.size(); j++)

{

if (trackerData[i].track_cnt[j] > 1)

{

int p_id = ids[j];

hash_ids[i].insert(p_id);

geometry_msgs::Point32 p;

p.x = un_pts[j].x;

p.y = un_pts[j].y;

p.z = 1;

feature_points->points.push_back(p);

id_of_point.values.push_back(p_id * NUM_OF_CAM + i);

u_of_point.values.push_back(cur_pts[j].x);

v_of_point.values.push_back(cur_pts[j].y);

velocity_x_of_point.values.push_back(pts_velocity[j].x);

velocity_y_of_point.values.push_back(pts_velocity[j].y);

}

}

}

feature_points->channels.push_back(id_of_point);

feature_points->channels.push_back(u_of_point);

feature_points->channels.push_back(v_of_point);

feature_points->channels.push_back(velocity_x_of_point);

feature_points->channels.push_back(velocity_y_of_point);

ROS_DEBUG("publish %f, at %f", feature_points->header.stamp.toSec(), ros::Time::now().toSec());

// skip the first image; since no optical speed on frist image

if (!init_pub)

{

init_pub = 1;

}

else

pub_img.publish(feature_points);

//将图像封装到cv_bridge::cvtColor类型的ptr实例中发布到pub_match;

if (SHOW_TRACK)

{

ptr = cv_bridge::cvtColor(ptr, sensor_msgs::image_encodings::BGR8);

//cv::Mat stereo_img(ROW * NUM_OF_CAM, COL, CV_8UC3);

cv::Mat stereo_img = ptr->image;

for (int i = 0; i < NUM_OF_CAM; i++)

{

cv::Mat tmp_img = stereo_img.rowRange(i * ROW, (i + 1) * ROW);//Mat的rowRange和colRange函数可以获取某些范围内行或列的指针

cv::cvtColor(show_img, tmp_img, CV_GRAY2RGB);

for (unsigned int j = 0; j < trackerData[i].cur_pts.size(); j++)

{

double len = std::min(1.0, 1.0 * trackerData[i].track_cnt[j] / WINDOW_SIZE);

cv::circle(tmp_img, trackerData[i].cur_pts[j], 2, cv::Scalar(255 * (1 - len), 0, 255 * len), 2);

//draw speed line

/*

Vector2d tmp_cur_un_pts (trackerData[i].cur_un_pts[j].x, trackerData[i].cur_un_pts[j].y);

Vector2d tmp_pts_velocity (trackerData[i].pts_velocity[j].x, trackerData[i].pts_velocity[j].y);

Vector3d tmp_prev_un_pts;

tmp_prev_un_pts.head(2) = tmp_cur_un_pts - 0.10 * tmp_pts_velocity;

tmp_prev_un_pts.z() = 1;

Vector2d tmp_prev_uv;

trackerData[i].m_camera->spaceToPlane(tmp_prev_un_pts, tmp_prev_uv);

cv::line(tmp_img, trackerData[i].cur_pts[j], cv::Point2f(tmp_prev_uv.x(), tmp_prev_uv.y()), cv::Scalar(255 , 0, 0), 1 , 8, 0);

*/

//char name[10];

//sprintf(name, "%d", trackerData[i].ids[j]);

//cv::putText(tmp_img, name, trackerData[i].cur_pts[j], cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));

}

}

//cv::imshow("vis", stereo_img);

//cv::waitKey(5);

pub_match.publish(ptr->toImageMsg());

}

}

ROS_INFO("whole feature tracker processing costs: %f", t_r.toc());

}

接下来剩下的内容就是feature_track.h变量的认识以及feature_track.cpp中几个主要函数的介绍,包括

void readImage(const cv::Mat &_img,double _cur_time),void rejectWithF()等函数。下一节再见吧,朋友们!!!