Implementing a Hadoop-Based Cloud Drive

Environment: CentOS 6.6, Tomcat 7.0, Eclipse, MySQL

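First, what this simple cloud drive does: users can register and log in, then upload, download, and delete files.

The files themselves are stored on a distributed Hadoop HDFS cluster.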

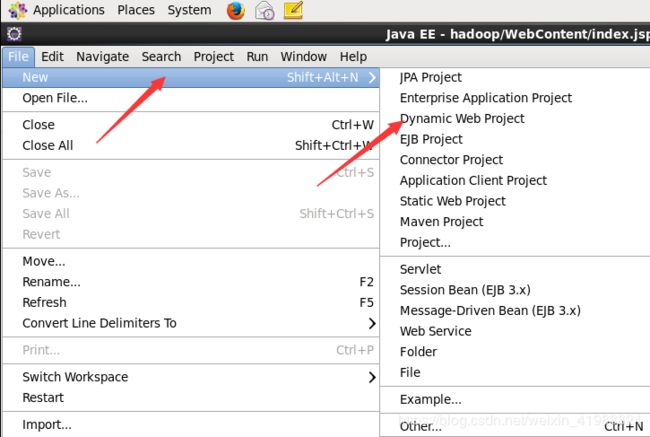

Start CentOS, open the Eclipse you installed, and choose File --> New --> Dynamic Web Project.

Note: if that project type is not offered, install the Java EE tooling for Eclipse.

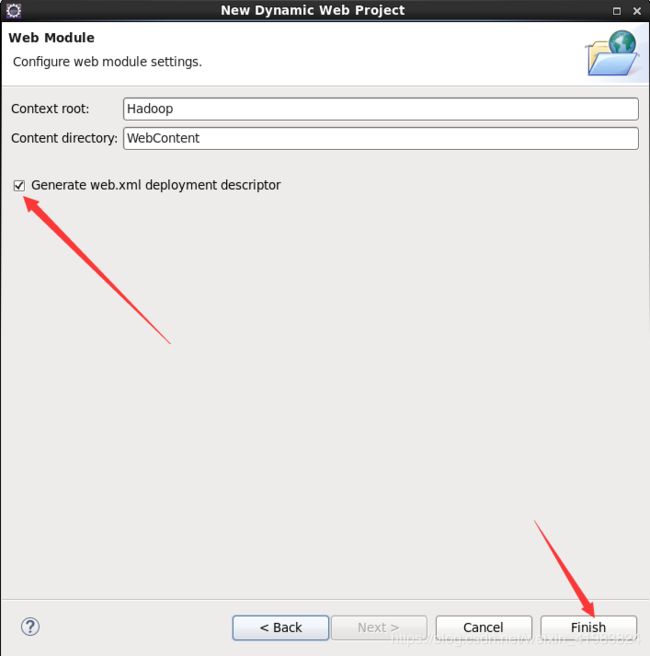

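Pick any project name and keep clicking Next. On the last page, it is best to check the option that generates web.xml; if you skip it, create an XML file named web.xml under WEB-INF yourself later.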

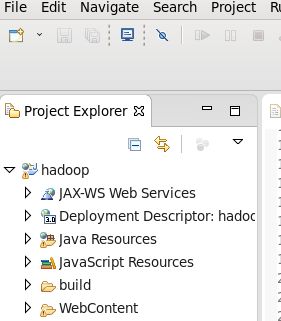

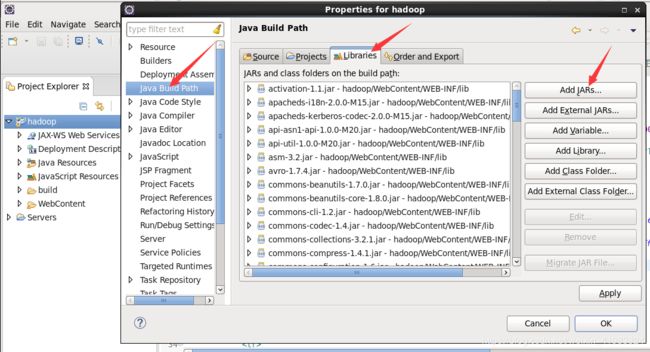

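Once the project exists, import the Hadoop jars. They are in the share directory of your Hadoop installation; copy them into the lib folder under WEB-INF. UploadServlet below also imports org.apache.commons.fileupload, so make sure that jar is in lib as well.

In Eclipse, select all the jars, then right-click --> Build Path --> Add to Build Path. Alternatively, select the project, press Alt+Enter to open its properties, choose Java Build Path on the left, open Libraries on the right, and click Add JARs.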

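Now the real work starts. Select Java Resources, right-click New --> Package, and create three packages. The names are up to you; mine are com.bean, com.model, and com.controller.

Right-click com.controller and create a class file named UploadServlet.java, which handles file uploads.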

package com.controller;

import java.io.File;
import java.io.IOException;
import java.util.List;

import javax.servlet.ServletContext;
import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.apache.commons.fileupload.FileItem;
import org.apache.commons.fileupload.disk.DiskFileItemFactory;
import org.apache.commons.fileupload.servlet.ServletFileUpload;
import org.apache.hadoop.fs.FileStatus;

import com.model.HdfsDAO;

public class UploadServlet extends HttpServlet {

    private static final long serialVersionUID = 1L;

    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        this.doPost(request, response);
    }

    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        request.setCharacterEncoding("utf-8");
        int maxFileSize = 50 * 1024 * 1024; // 50 MB
        int maxMemSize = 50 * 1024 * 1024; // 50 MB
        ServletContext context = getServletContext();
        // Staging directory on the Tomcat host, read from the file-upload parameter in web.xml
        String filePath = context.getInitParameter("file-upload");
        System.out.println("source file path: " + filePath);

        // Check the content type: only multipart/form-data requests carry a file
        String contentType = request.getContentType();
        if (contentType == null || contentType.indexOf("multipart/form-data") < 0) {
            System.out.println("No file uploaded");
            return;
        }

        DiskFileItemFactory factory = new DiskFileItemFactory();
        // Items up to this size are kept in memory
        factory.setSizeThreshold(maxMemSize);
        // Items larger than maxMemSize are spooled to this directory
        factory.setRepository(new File("/soft/file-directory"));
        // Create a new file upload handler
        ServletFileUpload upload = new ServletFileUpload(factory);
        // Set the maximum upload size
        upload.setSizeMax(maxFileSize);

        try {
            // Parse the request into its parts
            List<?> fileItems = upload.parseRequest(request);
            System.out.println("begin to upload file to tomcat server");
            HdfsDAO hdfs = new HdfsDAO();
            for (Object item : fileItems) {
                FileItem fi = (FileItem) item;
                if (fi.isFormField()) {
                    continue;
                }
                // Keep only the base name; some browsers send a full client-side path
                String fileName = fi.getName();
                String fn = fileName.substring(Math.max(fileName.lastIndexOf('/'), fileName.lastIndexOf('\\')) + 1);
                System.out.println(fn);
                // Write the file to the staging directory on the Tomcat host
                File file = new File(filePath, fn);
                fi.write(file);
                System.out.println("upload file to tomcat server success!");
                // Copy the staged file from Tomcat to Hadoop
                System.out.println("begin to upload file to hadoop hdfs");
                hdfs.copyFile(file.getPath());
                System.out.println("upload file to hadoop hdfs success!");
            }
            // Forward once, after every uploaded file has been handled
            FileStatus[] documentList = hdfs.getDirectoryFromHdfs();
            request.setAttribute("documentList", documentList);
            System.out.println("got list data " + documentList);
            request.getRequestDispatcher("index.jsp").forward(request, response);
        } catch (Exception ex) {
            ex.printStackTrace();
        }
    }
}

Next, still in com.controller, create a class file named DownloadServlet.java:

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.apache.hadoop.fs.FileStatus;

import com.model.HdfsDAO;

public class DownloadServlet extends HttpServlet {

    private static final long serialVersionUID = 1L;

    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        // Pick a directory on your CentOS machine to receive downloaded files
        String local = "/soft/download-directory";
        // Tomcat 7 decodes query parameters as ISO-8859-1 by default; the pages are UTF-8, so re-decode as UTF-8
        String filePath = new String(request.getParameter("filePath").getBytes("ISO-8859-1"), "UTF-8");
        HdfsDAO hdfs = new HdfsDAO();
        hdfs.download(filePath, local);
        FileStatus[] documentList = hdfs.getDirectoryFromHdfs();
        request.setAttribute("documentList", documentList);
        System.out.println("got list data " + documentList);
        request.getRequestDispatcher("index.jsp").forward(request, response);
    }

    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        this.doGet(request, response);
    }
}

Next, in com.controller, create a class file named RegisterServlet.java, which handles user registration:

package com.controller;

import java.io.IOException;
import java.sql.SQLException;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.servlet.http.HttpSession;

import org.apache.hadoop.fs.FileStatus;

import com.model.HdfsDAO;
import com.model.UserDAO;

public class RegisterServlet extends HttpServlet {

    private static final long serialVersionUID = 1L;

    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        this.doPost(request, response);
    }

    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        String username = request.getParameter("username");
        String password = request.getParameter("password");
        // Remember the user name for the rest of the session
        HttpSession session = request.getSession();
        session.setAttribute("username", username);
        UserDAO user = new UserDAO();
        try {
            user.insert(username, password);
        } catch (SQLException e) {
            e.printStackTrace();
        }
        HdfsDAO hdfs = new HdfsDAO();
        FileStatus[] documentList = hdfs.getDirectoryFromHdfs();
        request.setAttribute("documentList", documentList);
        request.getRequestDispatcher("index.jsp").forward(request, response);
    }
}

Next, in com.controller, create a class file named LoginServlet.java, which handles user login:

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.servlet.http.HttpSession;

import org.apache.hadoop.fs.FileStatus;

import com.model.HdfsDAO;
import com.model.UserDAO;

public class LoginServlet extends HttpServlet {

    private static final long serialVersionUID = 1L;

    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        this.doPost(request, response);
    }

    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        String username = request.getParameter("username");
        String password = request.getParameter("password");
        UserDAO user = new UserDAO();
        if (user.checkUser(username, password)) {
            // Valid user: go to the main page
            HttpSession session = request.getSession();
            session.setAttribute("username", username);
            HdfsDAO hdfs = new HdfsDAO();
            FileStatus[] documentList = hdfs.getDirectoryFromHdfs();
            request.setAttribute("documentList", documentList);
            System.out.println("got list data " + documentList);
            request.getRequestDispatcher("index.jsp").forward(request, response);
        } else {
            // Invalid user: go back to the login page
            request.getRequestDispatcher("login.jsp").forward(request, response);
        }
    }
}

Next, in com.controller, create a class file named DeleteFileServlet.java, which handles file deletion:

package com.controller;

import java.io.IOException;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.apache.hadoop.fs.FileStatus;

import com.model.HdfsDAO;

public class DeleteFileServlet extends HttpServlet {

    private static final long serialVersionUID = 1L;

    protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        // Tomcat 7 decodes query parameters as ISO-8859-1 by default; the pages are UTF-8, so re-decode as UTF-8
        String filePath = new String(request.getParameter("filePath").getBytes("ISO-8859-1"), "UTF-8");
        HdfsDAO hdfs = new HdfsDAO();
        hdfs.deleteFromHdfs(filePath);
        System.out.println("====" + filePath + "====");
        FileStatus[] documentList = hdfs.getDirectoryFromHdfs();
        request.setAttribute("documentList", documentList);
        System.out.println("got list data " + documentList);
        request.getRequestDispatcher("index.jsp").forward(request, response);
    }

    protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
        this.doGet(request, response);
    }
}

Next, right-click com.bean, choose New --> Class, and name the class UserBean:

package com.bean;

public class UserBean {

    private int id;
    private String name;
    private String password;

    public UserBean() {
        super();
    }

    public UserBean(int id, String name, String password) {
        super();
        this.id = id;
        this.name = name;
        this.password = password;
    }

    public int getID() {
        return id;
    }

    public void setID(int id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    // The setter needs a parameter; without one it would assign the field to itself
    public void setName(String name) {
        this.name = name;
    }

    public String getPassword() {
        return password;
    }

    public void setPassword(String password) {
        this.password = password;
    }

    @Override
    public String toString() {
        return "UserBean [id=" + id + ", name=" + name + ", password=" + password + "]";
    }
}

Right-click com.model and create three files in turn: ConnDB.java, HdfsDAO.java, and UserDAO.java.

package com.model;

import java.sql.Connection;
import java.sql.DriverManager;

public class ConnDB {

    private Connection ct = null;

    public Connection getConn() {
        try {
            // Load the driver
            Class.forName("com.mysql.jdbc.Driver");
            // Open the connection. The IP is the machine where MySQL runs (in my cluster, one of the
            // DataNodes), and 3306 is the MySQL port. user and password are those of your MySQL
            // account: usually root, with whatever password you set.
            ct = DriverManager.getConnection("jdbc:mysql://192.168.100.12:3306/hadoop?user=root&password=*****&useSSL=false");
        } catch (Exception e) {
            // TODO Auto-generated catch block
            e.printStackTrace();
        }
        return ct;
    }
}

package com.model;

import java.io.FileNotFoundException;
import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class HdfsDAO {

    // The IP and port are the NameNode address from your cluster configuration (I use port 8020),
    // followed by a directory on HDFS. Run "hadoop fs -ls /" in a terminal to see the directories
    // you have; uploads, downloads, and listings below all use this directory.
    private static String hdfsPath = "hdfs://192.168.100.10:8020/aa";

    Configuration conf = new Configuration();

    /** Copies a local file into the HDFS directory. */
    public void copyFile(String local) throws IOException {
        FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
        // remote: /user/<files or folders under that user>
        fs.copyFromLocalFile(new Path(local), new Path(hdfsPath));
        fs.close();
    }

    /** Downloads data from HDFS. */
    public void download(String remote, String local) throws IOException {
        FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
        fs.copyToLocalFile(false, new Path(remote), new Path(local), true);
        System.out.println("download: from " + remote + " to " + local);
        fs.close();
    }

    /** Deletes a file from HDFS. */
    public void deleteFromHdfs(String deletePath) throws FileNotFoundException, IOException {
        FileSystem fs = FileSystem.get(URI.create(deletePath), conf);
        // delete() removes the path right away; deleteOnExit() only schedules it for when the FileSystem closes
        fs.delete(new Path(deletePath), true);
        fs.close();
    }

    /** Lists the files and directories in the HDFS directory. */
    public static FileStatus[] getDirectoryFromHdfs() throws FileNotFoundException, IOException {
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
        FileStatus[] list = fs.listStatus(new Path(hdfsPath));
        if (list != null) {
            for (FileStatus f : list) {
                System.out.printf("name: %s, folder: %s, size: %d\n", f.getPath().getName(), f.isDir(), f.getLen());
            }
        }
        fs.close();
        return list;
    }
}

package com.model;

import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class UserDAO {

    private Statement sm = null;
    private Connection ct = null;
    private ResultSet rs = null;

    // Close in reverse order of creation: result set, statement, then connection
    public void close() {
        try {
            if (rs != null) {
                rs.close();
                rs = null;
            }
            if (sm != null) {
                sm.close();
                sm = null;
            }
            if (ct != null) {
                ct.close();
                ct = null;
            }
        } catch (SQLException e) {
            // TODO Auto-generated catch block
            e.printStackTrace();
        }
    }

    // Check whether the user is valid
    public boolean checkUser(String user, String password) {
        boolean b = false;
        try {
            // Get a connection
            ct = new ConnDB().getConn();
            // Create a statement
            sm = ct.createStatement();
            String sql = "select * from student where name=\"" + user + "\"";
            rs = sm.executeQuery(sql);
            if (rs.next()) {
                // The user exists; the third column holds the password
                String pwd = rs.getString(3);
                // The login is valid only if the password matches
                b = password.equals(pwd);
            }
        } catch (SQLException e) {
            e.printStackTrace();
        } finally {
            this.close();
        }
        return b;
    }

    public void insert(String name, String password) throws SQLException {
        try {
            // Get a connection
            ct = new ConnDB().getConn();
            // Create a statement
            sm = ct.createStatement();
            String sql = "insert into student (name,password) values ('" + name + "','" + password + "')";
            System.out.println(sql);
            sm.executeUpdate(sql);
        } finally {
            // Release the statement and connection even if the insert fails
            this.close();
        }
    }

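}

Now configure web.xml. The file lives under WEB-INF; if it is missing, right-click and create a new one. The elements below go inside the root <web-app> element, and each servlet-class must match the package and class name used above:

<display-name>hadoop</display-name>
<welcome-file-list>
    <welcome-file>login.html</welcome-file>
    <welcome-file>login.htm</welcome-file>
    <welcome-file>login.jsp</welcome-file>
    <welcome-file>default.html</welcome-file>
    <welcome-file>default.htm</welcome-file>
    <welcome-file>default.jsp</welcome-file>
</welcome-file-list>

<servlet>
    <display-name>UploadServlet</display-name>
    <servlet-name>UploadServlet</servlet-name>
    <servlet-class>com.controller.UploadServlet</servlet-class>
</servlet>
<context-param>
    <description>Location to store uploaded file</description>
    <param-name>file-upload</param-name>
    <!-- A directory on your CentOS machine; choose your own and make sure it exists -->
    <param-value>/soft/file-directory</param-value>
</context-param>
<servlet-mapping>
    <servlet-name>UploadServlet</servlet-name>
    <url-pattern>/UploadServlet</url-pattern>
</servlet-mapping>

<servlet>
    <display-name>DeleteFileServlet</display-name>
    <servlet-name>DeleteFileServlet</servlet-name>
    <servlet-class>com.controller.DeleteFileServlet</servlet-class>
</servlet>
<servlet-mapping>
    <servlet-name>DeleteFileServlet</servlet-name>
    <url-pattern>/DeleteFileServlet</url-pattern>
</servlet-mapping>

<servlet>
    <display-name>DownloadServlet</display-name>
    <servlet-name>DownloadServlet</servlet-name>
    <servlet-class>com.controller.DownloadServlet</servlet-class>
</servlet>
<servlet-mapping>
    <servlet-name>DownloadServlet</servlet-name>
    <url-pattern>/DownloadServlet</url-pattern>
</servlet-mapping>

<servlet>
    <display-name>LoginServlet</display-name>
    <servlet-name>LoginServlet</servlet-name>
    <servlet-class>com.controller.LoginServlet</servlet-class>
</servlet>
<servlet-mapping>
    <servlet-name>LoginServlet</servlet-name>
    <url-pattern>/LoginServlet</url-pattern>
</servlet-mapping>

<servlet>
    <display-name>RegisterServlet</display-name>
    <servlet-name>RegisterServlet</servlet-name>
    <servlet-class>com.controller.RegisterServlet</servlet-class>
</servlet>
<servlet-mapping>
    <servlet-name>RegisterServlet</servlet-name>
    <url-pattern>/RegisterServlet</url-pattern>
</servlet-mapping>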

If anything in web.xml is unclear, look it up until it makes sense; the elements used here are all fairly easy to understand.
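One detail of this configuration is easy to miss: the file-upload parameter is the directory that UploadServlet joins with a file name supplied by the browser. The sketch below, using only JDK classes, shows one way to reduce such a name to its last path segment before joining it to the staging directory. `UploadNames`, `baseName`, and `stagedFile` are hypothetical names for illustration and are not part of the project.

```java
import java.io.File;

// Hypothetical helper, not part of the original project.
final class UploadNames {

    // Returns the part of a client-supplied name after the last '/' or '\'.
    static String baseName(String clientName) {
        int cut = Math.max(clientName.lastIndexOf('/'), clientName.lastIndexOf('\\'));
        return clientName.substring(cut + 1);
    }

    // Joins the staging directory (the file-upload value) with the base name only.
    static File stagedFile(String stagingDir, String clientName) {
        return new File(stagingDir, baseName(clientName));
    }

    public static void main(String[] args) {
        System.out.println(baseName("report.txt"));
        System.out.println(baseName("C:\\Users\\me\\report.txt"));
        System.out.println(stagedFile("/soft/file-directory", "docs/report.txt").getName());
    }
}
```

Handling both separators matters because some older browsers send the full client-side path, which uses backslashes on Windows.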

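Next come the pages, which are implemented as JSP files. What follows is the simplest possible example; if you want a nicer interface, adjust it yourself. Start with the login page, login.jsp: select WebContent --> right-click --> New --> JSP File (if that option is missing, choose Other and search for JSP). The pages belong directly under WebContent rather than WEB-INF, because the servlets forward to index.jsp and login.jsp by relative path and login.jsp is also a welcome file.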

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

Data Cloud Drive

Supported browsers: 360, Firefox, Chrome, Opera, Maxthon, Sogou, TheWorld. IE8 and earlier are not supported.

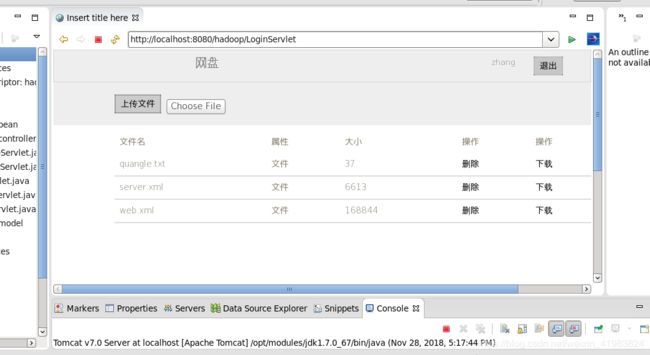

Create the main page, index.jsp, the same way:

<%@ page language="java" contentType="text/html; charset=UTF-8"
    pageEncoding="UTF-8"%>
<%@ include file="head.jsp" %>
<%@page import="org.apache.hadoop.fs.FileStatus" %>

Data Cloud Drive

File name
Type
Size
Action
Action

<%
FileStatus[] list = (FileStatus[])request.getAttribute("documentList");
// The servlets store the user name in the session, not in the request
String name = (String)session.getAttribute("username");
if(list != null)
for(int i=0;i<list.length;i++){
%>
<%
if(list[i].isDir())//DocumentServlet
{
out.print(""+list[i].getPath().getName()+"> ");
}
else{
out.print(""+list[i].getPath().getName()+" ");
}
%>
<%= (list[i].isDir()?"Directory":"File")%>
<%= (list[i].getLen()) %>
">Delete
">Download
<%
}
%>

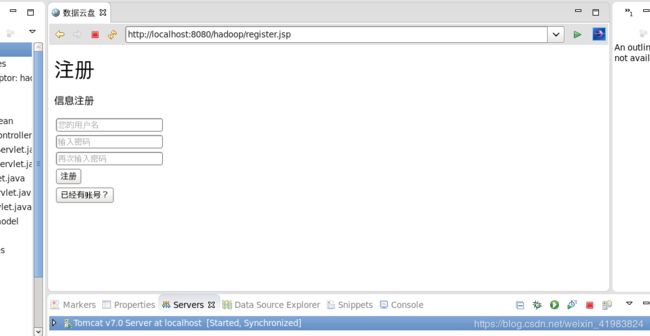
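The delete and download links on this page send the file path in a `filePath` request parameter, and the servlets re-decode it with `new String(value.getBytes("ISO-8859-1"), ...)`. The sketch below illustrates why that round trip can work, assuming the container decoded UTF-8 bytes as ISO-8859-1 (as far as I know, the default for query strings in Tomcat 7). `ParamDecoding` and its methods are hypothetical names; the charset your deployment actually needs depends on how the Tomcat connector is configured.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical demonstration class, not part of the original project.
final class ParamDecoding {

    // Simulates a container that decodes UTF-8 bytes as ISO-8859-1.
    static String misdecode(String original) {
        return new String(original.getBytes(StandardCharsets.UTF_8), StandardCharsets.ISO_8859_1);
    }

    // ISO-8859-1 maps every byte to exactly one char, so getBytes() returns
    // the raw bytes unchanged and they can be decoded again as UTF-8.
    static String repair(String misdecoded) {
        return new String(misdecoded.getBytes(StandardCharsets.ISO_8859_1), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // "/aa/" followed by two Chinese characters, written as escapes
        String original = "/aa/\u62a5\u544a.txt";
        String garbled = misdecode(original);
        System.out.println(garbled.equals(original));         // false
        System.out.println(repair(garbled).equals(original)); // true
    }
}
```

Paths that contain only ASCII characters survive either way, which is why a wrong target charset can go unnoticed until a file with a Chinese name is uploaded.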

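Create the registration page, register.jsp, the same way; its form should post the username and password parameters that RegisterServlet reads to /RegisterServlet.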

<%@ page language="java" contentType="text/html; charset=UTF-8"
    pageEncoding="UTF-8"%>
<%@ include file="head.jsp" %>
<%@page import="org.apache.hadoop.fs.FileStatus" %>

Data Cloud Drive

File name
Type
Size
Action
Action

<%
FileStatus[] list = (FileStatus[])request.getAttribute("documentList");
// The servlets store the user name in the session, not in the request
String name = (String)session.getAttribute("username");
if(list != null)
for(int i=0;i<list.length;i++){
%>
<%
if(list[i].isDir())//DocumentServlet
{
out.print(""+list[i].getPath().getName()+"> ");
}
else{
out.print(""+list[i].getPath().getName()+" ");
}
%>
<%= (list[i].isDir()?"Directory":"File")%>
<%= (list[i].getLen()) %>
">Delete
">Download
<%
}
%>

Create head.jsp the same way; it pulls in the CSS and other shared front-end resources.

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

Insert title here

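Download the bootmetro-master framework (a web search will find it) and copy the folders under its assets directory into the project's WebContent folder to style the user interface.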

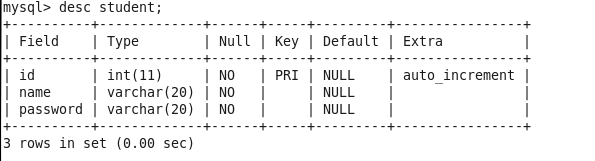

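Copy the MySQL JDBC driver jar into the lib folder (it can be downloaded online), then right-click the jar and choose "Build Path" → "Add to Build Path" to add it to the project. In MySQL, create a table named student with a CREATE TABLE statement and define its columns. UserDAO inserts into the name and password columns and reads the password as the third column.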
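The table definition itself is not listed here, so the following is only a sketch of one that should fit what UserDAO expects: it inserts into `name` and `password` and reads the password as column 3. The column types, the lengths, and the `StudentSchema` class are assumptions of mine, not the schema actually used. `createTable` only needs an open `java.sql.Connection`, for example the one returned by `ConnDB.getConn()`.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper; column types and sizes are assumptions.
final class StudentSchema {

    // id, name, password in this order, so that column 3 is the password.
    static final String CREATE_STUDENT =
        "CREATE TABLE IF NOT EXISTS student ("
        + "id INT AUTO_INCREMENT PRIMARY KEY, "
        + "name VARCHAR(64) NOT NULL, "
        + "password VARCHAR(64) NOT NULL)";

    // Runs the DDL on an already-open connection and closes the statement afterwards.
    static void createTable(Connection conn) throws SQLException {
        try (Statement st = conn.createStatement()) {
            st.executeUpdate(CREATE_STUDENT);
        }
    }

    public static void main(String[] args) {
        // Print the statement so it can also be pasted into the mysql client.
        System.out.println(CREATE_STUDENT);
    }
}
```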

This only takes a few basic MySQL statements.
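UserDAO builds its SQL by concatenating the user name into the query text, so a crafted name can change the query itself. As an alternative sketch (not the code used in this project), the same lookup and insert can be written with `PreparedStatement` placeholders from the standard `java.sql` API. `SafeUserQueries` and its method names are hypothetical; the table and column names follow UserDAO.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

// Hypothetical alternative to the string-concatenated queries in UserDAO.
final class SafeUserQueries {

    static final String SELECT_PASSWORD = "SELECT password FROM student WHERE name = ?";
    static final String INSERT_USER = "INSERT INTO student (name, password) VALUES (?, ?)";

    // The concatenation style, reproduced only to show what goes wrong.
    static String concatenatedQuery(String user) {
        return "select * from student where name=\"" + user + "\"";
    }

    // The user name is bound as data, so quotes inside it cannot alter the SQL.
    static boolean checkUser(Connection conn, String user, String password) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(SELECT_PASSWORD)) {
            ps.setString(1, user);
            try (ResultSet rs = ps.executeQuery()) {
                return rs.next() && password.equals(rs.getString(1));
            }
        }
    }

    // Returns the number of inserted rows.
    static int insert(Connection conn, String user, String password) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(INSERT_USER)) {
            ps.setString(1, user);
            ps.setString(2, password);
            return ps.executeUpdate();
        }
    }

    public static void main(String[] args) {
        // A crafted name turns the concatenated WHERE clause into one that matches every row.
        System.out.println(concatenatedQuery("x\" or \"1\"=\"1"));
        System.out.println(SELECT_PASSWORD);
    }
}
```

The try-with-resources blocks also close the statement and result set automatically, which replaces the manual close() bookkeeping.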

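Now everything is ready to run. Make sure Tomcat is installed and MySQL is running; most importantly, run start-dfs.sh and start-yarn.sh in a terminal.

Then run the jps command to check that the cluster daemons started. Once they have, you can run the project.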
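Once the daemons are up, the address in `HdfsDAO.hdfsPath` has to match the running NameNode. As a quick sanity check, the sketch below uses only `java.net.URI` to split that string into the host, port, and directory the HDFS client will use. `HdfsAddress` is a hypothetical helper name; the URI is the one from HdfsDAO.

```java
import java.net.URI;

// Hypothetical helper for inspecting the HDFS address; it does not contact the cluster.
final class HdfsAddress {

    // Breaks an hdfs:// URI into the parts that must match the cluster configuration.
    static String describe(String hdfsPath) {
        URI uri = URI.create(hdfsPath);
        return uri.getScheme() + " host=" + uri.getHost()
            + " port=" + uri.getPort() + " dir=" + uri.getPath();
    }

    public static void main(String[] args) {
        // prints "hdfs host=192.168.100.10 port=8020 dir=/aa"
        System.out.println(describe("hdfs://192.168.100.10:8020/aa"));
    }
}
```

If the host or port printed here differs from the NameNode address in your Hadoop configuration, every HdfsDAO call will fail with a connection error.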

In Eclipse, select your project, then right-click --> Run As --> Run on Server --> choose the Tomcat server.

The pages should then come up.

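Make sure MySQL is installed properly. To lighten the load on my first machine, I installed MySQL on the second one. web.xml must also be configured correctly: it connects your front end to your back end, so a single misspelled name can cause errors.

If you run into errors, leave a comment so we can work through them together. I am still learning this myself.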