Setting Up a Fully Distributed Hadoop Cluster (Hands-On)

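Table of Contents

- 1. Environment Preparation
  - 1.1 Configure a Static IP
  - 1.2 Change the Hostname
  - 1.3 Configure Host Mappings
- 2. Install the JDK
- 3. Install Hadoop
- 4. Fully Distributed Mode
  - 4.1 Prepare the Cluster
  - 4.2 Set Up Passwordless SSH Login
  - 4.3 Edit the Configuration Files
- 5. Start the Cluster
- 6. Time Synchronization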

1. Environment Preparation

Start with a clean CentOS 7 virtual machine. Configure its static IP address, its hostname, and the host mappings.

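1.1 Configure a Static IP

Edit the configuration file of the network interface (`ens33` on this VM):

```bash
vi /etc/sysconfig/network-scripts/ifcfg-ens33
```

The file should look like the listing below. The settings that matter for a static address are `BOOTPROTO="static"`, `ONBOOT="yes"`, and the `IPADDR`, `NETMASK`, `GATEWAY`, and `DNS1` lines. The `UUID` is specific to each machine, so keep the value already in your file.

```bash
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="d1baf787-d970-475b-a869-6a6cf56a3c85"
DEVICE="ens33"
ONBOOT="yes"
IPADDR="192.168.78.130"
NETMASK="255.255.255.0"
GATEWAY="192.168.78.2"
DNS1="8.8.8.8"
```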
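You can catch a forgotten key before restarting the network. The sketch below does this with `check_ifcfg`, a hypothetical helper and not a CentOS command. It only greps for the keys used in the file above and assumes one `KEY=value` entry per line.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of CentOS): sanity-check an ifcfg file before
# restarting the network. Prints each problem it finds; returns 1 if any.
# Usage: check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens33
check_ifcfg() {
  local file="$1" status=0 key
  # Keys a static IPv4 setup needs, following the listing above.
  for key in BOOTPROTO ONBOOT IPADDR NETMASK GATEWAY; do
    if ! grep -q "^${key}=" "$file"; then
      echo "missing: ${key}"
      status=1
    fi
  done
  # Values may be written with or without double quotes.
  grep -Eq '^BOOTPROTO="?static"?$' "$file" || { echo "BOOTPROTO is not static"; status=1; }
  grep -Eq '^ONBOOT="?yes"?$' "$file" || { echo "ONBOOT is not yes"; status=1; }
  return "$status"
}
```

If the check prints nothing, apply the new address with `systemctl restart network`. Then confirm it with `ip addr show ens33`.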

1.2 Change the Hostname

```bash
hostnamectl set-hostname hadoop1
```

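1.3 Configure Host Mappings

Add the IP-to-hostname mappings for all three cluster nodes to `/etc/hosts`:

```bash
vi /etc/hosts
```

```
192.168.78.130 hadoop1
192.168.78.131 hadoop2
192.168.78.132 hadoop3
```

Also create a subdirectory `soft` under `/opt`, for example with `mkdir -p /opt/soft`. The JDK and Hadoop are installed there later.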
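Editing `/etc/hosts` by hand on every node can leave duplicate or missing entries. The following is a minimal sketch of an idempotent alternative. `add_host` is a hypothetical helper: it appends an `IP hostname` line only when no non-comment line already maps that hostname.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file unless the hostname
# already appears as a field on a non-comment line.
# Usage: add_host /etc/hosts 192.168.78.130 hadoop1
add_host() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 when the name is found in columns 2..NF of an uncommented line.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    echo "skip: ${name} already in ${file}"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Run it three times on each node, once each for hadoop1, hadoop2, and hadoop3, with the addresses listed above. Running it again later changes nothing.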

2. Install the JDK

**Extract**

```bash
tar -zxf jdk-8u171-linux-x64.tar.gz
```

**Move**

```bash
mv jdk1.8........ /opt/soft/jdk180
```

**Configure environment variables**

```bash
vi /etc/profile
```

Append these lines:

```bash
export JAVA_HOME=/opt/soft/jdk180
export PATH=$JAVA_HOME/bin:$PATH
```

**Reload the environment variables**

```bash
source /etc/profile
```

**Verify**

```bash
java -version
```

Expected output:

```
java version "1.8.0_171"
Java(TM) SE Runtime Environment (build 1.8.0_171-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)
```
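Appending to `/etc/profile` by hand tends to pile up duplicate `export` lines when a step is repeated. A minimal sketch that avoids this is below. `ensure_line` is a hypothetical helper that adds a line only if the exact same line is not already in the file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is absent.
# Usage: ensure_line FILE LINE
ensure_line() {
  local file="$1" line="$2"
  # -x: match the whole line, -F: fixed string (so "$" and "/" are not regex syntax).
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage for this step. Single quotes keep $JAVA_HOME and $PATH literal in the
# file, so they are expanded when /etc/profile is sourced, not now:
#   ensure_line /etc/profile 'export JAVA_HOME=/opt/soft/jdk180'
#   ensure_line /etc/profile 'export PATH=$JAVA_HOME/bin:$PATH'
#   source /etc/profile
```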

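3. Install Hadoop

Hadoop can be installed in three modes: standalone (local) mode, pseudo-distributed mode, and fully distributed (cluster) mode. This guide sets up the fully distributed mode.

**Extract**

```bash
tar zxvf hadoop-2.6.0-cdh5.14.2.tar.gz
```

**Move**

```bash
mv hadoop-......... /opt/soft/hadoop260
```

**Configure environment variables**

```bash
vi /etc/profile
```

Append the following lines. Put `$HADOOP_HOME/sbin` on `PATH` as well as `bin`. The cluster start scripts `start-dfs.sh` and `start-yarn.sh` live in `sbin`, while `bin` holds commands such as `hadoop` and `hdfs`.

```bash
export HADOOP_HOME=/opt/soft/hadoop260
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
```

**Reload the environment variables** with `source /etc/profile`.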
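After reloading, you can check that every command used later in this guide resolves. `check_on_path` is a hypothetical helper that wraps the shell builtin `command -v`. A missing `start-dfs.sh` usually means `sbin` is not on `PATH`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which commands are (not) found on PATH.
# Returns 1 if any command is missing.
check_on_path() {
  local cmd status=0
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok:      ${cmd} -> $(command -v "$cmd")"
    else
      echo "missing: ${cmd}"
      status=1
    fi
  done
  return "$status"
}

# Usage after `source /etc/profile`:
#   check_on_path java hadoop hdfs start-dfs.sh start-yarn.sh
```

Running `hadoop version` afterwards also confirms that the Hadoop binaries can find the JDK.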

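4. Fully Distributed Mode

4.1 Prepare the Cluster

Make two full clones of hadoop1 and name them hadoop2 and hadoop3. On each clone, change `IPADDR` in `ifcfg-ens33` to the clone's own address: 192.168.78.131 for hadoop2 and 192.168.78.132 for hadoop3. The clones inherit hadoop1's hostname, so also run `hostnamectl set-hostname hadoop2` or `hostnamectl set-hostname hadoop3` on the matching clone.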
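On each clone, you can rewrite the address line with `sed` instead of an editor. `set_ipaddr` is a hypothetical helper. It assumes the file already contains an `IPADDR=` line, as the hadoop1 file it was cloned from does.

```bash
#!/usr/bin/env bash
# Hypothetical helper for the cloned VMs: replace the IPADDR line of an ifcfg file.
# Usage on hadoop2: set_ipaddr /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.78.131
set_ipaddr() {
  local file="$1" ip="$2"
  if ! grep -q '^IPADDR=' "$file"; then
    echo "no IPADDR line in ${file}" >&2
    return 1
  fi
  # GNU sed edits in place; the value is written in double quotes like the original.
  sed -i "s/^IPADDR=.*/IPADDR=\"${ip}\"/" "$file"
}
```

Afterwards, set the hostname with `hostnamectl set-hostname hadoop2` (or `hadoop3`). Then run `systemctl restart network` so the new address takes effect.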

4.2 设置免密登录

**生成本机密钥:**

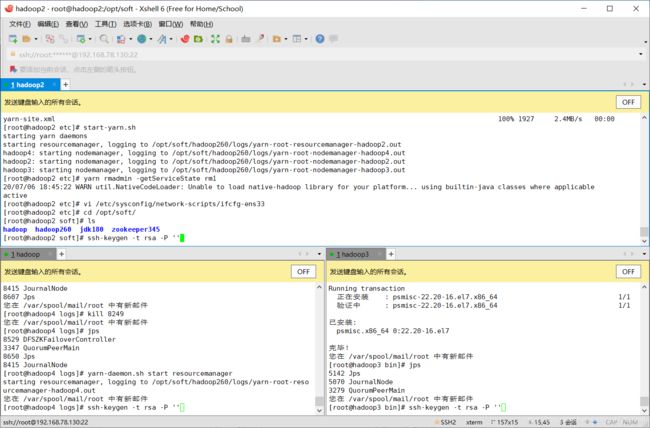

**下达命令,先简单设置一下,见图**

ssh-keygen -t rsa -P ''

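**Copy the keys**

`start-dfs.sh` and `start-yarn.sh` log in over SSH to every node they manage. Each node's public key must therefore be authorized on all three hosts, not only on itself. Run the following in each of the hadoop1, hadoop2, and hadoop3 windows:

```bash
ssh-copy-id hadoop1
ssh-copy-id hadoop2
ssh-copy-id hadoop3
```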
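The same step can be scripted as a loop, followed by a check that logins really work without a password. This is a sketch under stated assumptions. The `HOSTS` array and the `SSH_COPY_ID`/`SSH` variables are additions of this sketch, and the variables exist only so the commands can be swapped out for a dry run. `ssh-copy-id` and `ssh -o BatchMode=yes` are the standard OpenSSH tools.

```bash
#!/usr/bin/env bash
# Sketch: authorize this node's key on every cluster host, then confirm that a
# non-interactive login works. Run it once on each of hadoop1, hadoop2, hadoop3.
# HOSTS, SSH_COPY_ID and SSH are assumptions of this sketch (overridable for dry runs).
HOSTS=(hadoop1 hadoop2 hadoop3)
SSH_COPY_ID="${SSH_COPY_ID:-ssh-copy-id}"
SSH="${SSH:-ssh}"

distribute_key() {
  local host
  for host in "${HOSTS[@]}"; do
    # Asks for $host's password once, then appends the key to its authorized_keys.
    "$SSH_COPY_ID" "$host" || return 1
  done
}

verify_login() {
  local host
  for host in "${HOSTS[@]}"; do
    # BatchMode=yes makes ssh fail instead of falling back to a password prompt.
    if ! "$SSH" -o BatchMode=yes "$host" hostname; then
      echo "no passwordless login to ${host}"
      return 1
    fi
  done
}

# Usage: distribute_key && verify_login
```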

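4.3 Edit the Configuration Files

The files below are in `$HADOOP_HOME/etc/hadoop`, which is `/opt/soft/hadoop260/etc/hadoop` here. In each `*-site.xml` file, the `<property>` blocks go inside the existing `<configuration>` element.

hadoop-env.sh

Set `JAVA_HOME` explicitly to your own JDK path (`/opt/soft/jdk180` from step 2). The daemons are started over SSH in non-interactive shells that typically do not read `/etc/profile`, so they do not pick it up from there.

```bash
export JAVA_HOME=/opt/soft/jdk180
```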

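core-site.xml

`fs.defaultFS` points clients at the NameNode on hadoop2. `hadoop.tmp.dir` is the base directory under which NameNode metadata and DataNode blocks are stored by default; here it is placed under the Hadoop install directory.

```xml
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop2:9000</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/opt/soft/hadoop260/data/tmp</value>
</property>
```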

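hdfs-site.xml

`dfs.replication` is 3, so each block gets one copy on each of the three DataNodes. The SecondaryNameNode runs on hadoop1, the node that hosts neither the NameNode nor the ResourceManager.

```xml
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<property>
  <name>dfs.namenode.secondary.http-address</name>
  <value>hadoop1:50090</value>
</property>
```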
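If `dfs.replication` is larger than the number of DataNodes, blocks stay under-replicated. DataNodes are the hosts listed in the slaves file. The following sketch compares the two. `check_replication` is a hypothetical helper, and it assumes one hostname per line with blank lines and `#` comments ignored.

```bash
#!/usr/bin/env bash
# Hypothetical helper: warn when dfs.replication exceeds the number of DataNodes
# listed in a slaves file (one hostname per line; blank lines and # comments ignored).
# Usage: check_replication "$HADOOP_HOME/etc/hadoop/slaves" 3
check_replication() {
  local slaves_file="$1" replication="$2" nodes
  # Count lines that are neither blank nor comments.
  nodes=$(grep -Ecv '^[[:space:]]*(#|$)' "$slaves_file")
  if (( replication > nodes )); then
    echo "dfs.replication=${replication} but only ${nodes} DataNode(s) listed"
    return 1
  fi
  echo "ok: ${nodes} DataNode(s) for replication ${replication}"
}
```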

yarn-env.sh

```bash
export JAVA_HOME=/opt/soft/jdk180
```

yarn-site.xml

```xml
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>hadoop3</value>
</property>
```
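You can also generate the `*-site.xml` files from name/value pairs instead of typing the XML. `write_site_xml` below is a hypothetical helper and only a sketch. It overwrites the whole target file, including the license comment in the stock file, and it does not XML-escape values, which is fine for the plain values used here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a Hadoop *-site.xml file from NAME VALUE pairs,
# using the <configuration><property>... layout shown above.
# Note: overwrites FILE and does not escape &, < or > in values.
# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
write_site_xml() {
  local file="$1"; shift
  if (( $# == 0 || $# % 2 != 0 )); then
    echo "write_site_xml: expected NAME VALUE pairs" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while (( $# > 0 )); do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Usage for yarn-site.xml:
#   write_site_xml "$HADOOP_HOME/etc/hadoop/yarn-site.xml" \
#     yarn.nodemanager.aux-services mapreduce_shuffle \
#     yarn.resourcemanager.hostname hadoop3
```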

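mapred-site.xml

If the directory only contains `mapred-site.xml.template`, copy it first with `cp mapred-site.xml.template mapred-site.xml`. The setting below runs MapReduce jobs on YARN.

```xml
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
```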

slaves

List the hosts that run a DataNode and a NodeManager, one per line:

```
hadoop1
hadoop2
hadoop3
```
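The three nodes were cloned before these files were edited, so every node needs the same configuration directory. The sketch below copies it with `scp -r`, relying on the passwordless SSH set up in 4.2. `push_conf`, `HADOOP_CONF_SRC`, and the `SCP` override are assumptions of this sketch.

```bash
#!/usr/bin/env bash
# Sketch: copy the edited configuration directory from this node to other nodes.
# HADOOP_CONF_SRC and SCP are assumptions of this sketch (SCP is overridable for dry runs).
HADOOP_CONF_SRC="${HADOOP_CONF_SRC:-/opt/soft/hadoop260/etc/hadoop}"
SCP="${SCP:-scp}"

push_conf() {
  local host
  for host in "$@"; do
    # Copies .../etc/hadoop into the same parent directory on the remote host.
    "$SCP" -r "$HADOOP_CONF_SRC" "${host}:$(dirname "$HADOOP_CONF_SRC")/" || return 1
  done
}

# Usage, assuming the files were edited on hadoop1:
#   push_conf hadoop2 hadoop3
```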

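5. Start the Cluster

Format HDFS on the NameNode host hadoop2, and do it only once. Formatting again generates a new cluster ID, and the existing DataNodes will then refuse to register.

```bash
hdfs namenode -format
```

Start HDFS on hadoop2:

```bash
start-dfs.sh
```

Start YARN on hadoop3. `start-yarn.sh` starts the ResourceManager on the machine it is run on, so run it on hadoop3:

```bash
start-yarn.sh
```

Check the running daemons with `jps` on each node, then open the web UIs. The HDFS NameNode is at http://hadoop2:50070 and the YARN ResourceManager is at http://hadoop3:8088.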
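Reading `jps` output on three machines is easy to get wrong. The following is a minimal sketch of a checker. `check_daemons` is a hypothetical helper that reads `jps` output on stdin and reports which expected daemon names are absent.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and report which of the
# expected daemon names are missing. Returns 1 if any is missing.
# Usage on hadoop2: jps | check_daemons NameNode DataNode NodeManager
check_daemons() {
  local running name status=0
  # jps prints "<pid> <main class>"; keep only the class-name column.
  running=$(awk '{print $2}')
  for name in "$@"; do
    if grep -qx -- "$name" <<<"$running"; then
      echo "running: ${name}"
    else
      echo "MISSING: ${name}"
      status=1
    fi
  done
  return "$status"
}
```

For the web UIs, `curl -s -o /dev/null -w '%{http_code}\n' http://hadoop2:50070` prints the HTTP status code without opening a browser.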

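6. Time Synchronization

Install ntp on hadoop2, which acts as the time server for the cluster. First check whether it is already installed, then install it:

```bash
rpm -qa | grep ntp
yum -y install ntp
```

Edit the ntp configuration:

```bash
vi /etc/ntp.conf
```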

Make three changes in the file.

Change 1 lets hosts on the local network synchronize with this server; `nomodify` and `notrap` still stop them from changing its configuration. Replace

```
#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap
```

with the line below. It uses the cluster subnet (192.168.78.0 here) instead of the example 192.168.1.0:

```
restrict 192.168.78.0 mask 255.255.255.0 nomodify notrap
```

Change 2 stops using the public servers. Comment out the four pool lines:

```
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
#server 2.centos.pool.ntp.org iburst
#server 3.centos.pool.ntp.org iburst
```

Change 3 adds the local clock as a time source, so hadoop2 can serve time to the LAN even when no upstream server is reachable:

```
server 127.127.1.0
fudge 127.127.1.0 stratum 10
```
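The three edits can also be applied with `sed`. `configure_ntp_server` below is a hypothetical helper and only a sketch. It assumes the stock CentOS 7 lines (`#restrict 192.168.1.0 ...` and the four `server N.centos.pool.ntp.org` lines) and leaves other layouts untouched. It keeps a one-time backup next to the file.

```bash
#!/usr/bin/env bash
# Sketch: apply the three ntp.conf changes with sed instead of editing by hand.
# Assumes the stock CentOS 7 lines; anything else is left as it is.
# Usage on hadoop2: configure_ntp_server /etc/ntp.conf 192.168.78.0
configure_ntp_server() {
  local conf="$1" subnet="$2"
  # Keep the first backup only, so re-running does not overwrite the original copy.
  [ -e "$conf.bak" ] || cp -- "$conf" "$conf.bak"
  # Change 1: enable the local-network restrict line for the cluster subnet.
  sed -i -E "s/^#?restrict 192\.168\.1\.0 mask/restrict ${subnet} mask/" "$conf"
  # Change 2: comment out the public pool servers.
  sed -i -E 's/^server ([0-3])\.centos\.pool\.ntp\.org/#server \1.centos.pool.ntp.org/' "$conf"
  # Change 3: add the local clock once, so re-running does not duplicate it.
  if ! grep -q '^server 127\.127\.1\.0' "$conf"; then
    printf '%s\n' 'server 127.127.1.0' 'fudge 127.127.1.0 stratum 10' >> "$conf"
  fi
}
```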

Edit `/etc/sysconfig/ntpd`:

```bash
vim /etc/sysconfig/ntpd
```

Add the following line so that the hardware clock is synchronized along with the system time:

```bash
SYNC_HWCLOCK=yes
```

Check the status of ntpd, start it, and enable it at boot:

```bash
service ntpd status
service ntpd start
chkconfig ntpd on
```

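Configuration on the other machines (hadoop1 and hadoop3)

Add a cron job that synchronizes the clock with hadoop2 every 10 minutes. `ntpdate` must be installed on these nodes; if it is missing, run `yum -y install ntpdate`.

```bash
crontab -e
```

```
*/10 * * * * /usr/sbin/ntpdate hadoop2
```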
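You can install the cron entry without opening an editor, and without adding it twice if the step is repeated. In the sketch below, `add_cron_line` is a hypothetical helper. It reads the current crontab on stdin and prints it with the entry appended if missing; the result is then piped to `crontab -`, which installs a crontab from stdin.

```bash
#!/usr/bin/env bash
# Sketch: install the ntpdate cron entry idempotently. add_cron_line is hypothetical.
NTP_CRON_LINE='*/10 * * * * /usr/sbin/ntpdate hadoop2'

# Reads the current crontab on stdin and prints it with "$1" appended if missing.
add_cron_line() {
  local line="$1" current
  current=$(cat)
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  if ! grep -qxF -- "$line" <<<"$current"; then
    printf '%s\n' "$line"
  fi
}

# Usage on hadoop1 and hadoop3 (crontab -l fails harmlessly when no crontab exists yet):
#   crontab -l 2>/dev/null | add_cron_line "$NTP_CRON_LINE" | crontab -
```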