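The binary package currently offered on the official site is built for 32-bit systems. After installing it on 64-bit Linux, Hadoop keeps printing the warning "WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable". The official site does not offer a 64-bit package, so you have to compile and package a 64-bit build yourself.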

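How do you tell whether your Hadoop is 32-bit or 64-bit? My Hadoop is installed in /opt/hadoop-2.7.1/, so I inspect libhadoop.so.1.0.0 in /opt/hadoop-2.7.1/lib/native (for example with the file command), which reports the word size of the native library. I had already compiled Hadoop myself, so mine is 64-bit, as the screenshot below shows: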
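
The check described above can be sketched in shell. This is a minimal sketch, assuming the file utility is installed and that the library lives at the path used in this article; native_bits is a hypothetical helper name, not something Hadoop provides.

```shell
#!/bin/sh
# Classify one line of `file` output as a 32-bit or 64-bit ELF object.
# native_bits is a hypothetical helper name used only for this sketch.
native_bits() {
    case "$1" in
        *"ELF 64-bit"*) echo 64 ;;
        *"ELF 32-bit"*) echo 32 ;;
        *)              echo unknown ;;
    esac
}

# Path taken from this article; adjust it to your own installation.
lib=/opt/hadoop-2.7.1/lib/native/libhadoop.so.1.0.0
if [ -e "$lib" ]; then
    native_bits "$(file "$lib")"
fi
```

If the library is missing the script prints nothing, which is itself a hint that the native code was never built or installed.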

If that is fixed and the warning "WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable" still appears, add the following line to /etc/profile so that it applies to all users:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_HOME/lib/native:/usr/local/lib

Alternatively, put the same line in /home/hadoop/.bashrc so that it applies only to the hadoop user. Either setting resolves the problem.
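
One detail of that export line is worth a sketch: when LD_LIBRARY_PATH starts out empty, the result begins with a colon (an empty entry, which the dynamic loader treats as the current directory), and sourcing the profile repeatedly appends the same directories again. The snippet below is one possible way to avoid both, assuming HADOOP_HOME falls back to the install path used in this article; append_path is a hypothetical helper, not a standard command.

```shell
#!/bin/sh
# append_path CURRENT DIR: print CURRENT with DIR appended, unless DIR is
# already one of its colon-separated entries. Hypothetical helper.
append_path() {
    case ":$1:" in
        *":$2:"*) printf '%s\n' "$1" ;;
        *)        printf '%s\n' "${1:+$1:}$2" ;;
    esac
}

# Assumption: fall back to the install location used in this article.
HADOOP_HOME=${HADOOP_HOME:-/opt/hadoop-2.7.1}

LD_LIBRARY_PATH=$(append_path "${LD_LIBRARY_PATH:-}" "$HADOOP_HOME/lib/native")
LD_LIBRARY_PATH=$(append_path "$LD_LIBRARY_PATH" /usr/local/lib)
export LD_LIBRARY_PATH
```

After opening a new shell, running hadoop checknative -a should report whether the native library was actually picked up.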

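2. The correct way to build Hadoop 2.7.1 (from the official documentation)

There are plenty of articles online about compiling Hadoop, and their preparation steps vary widely. Few explain why each step is needed, so beginners can only follow along passively.

When your operating system is 64-bit Linux but the hadoop2.7.1 download from the official site is 32-bit, you have to consider compiling and packaging it yourself to get a Hadoop 2.7.1 package for 64-bit systems. There are many online guides to compiling hadoop2.7.1, but do you know why they take the steps they do?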

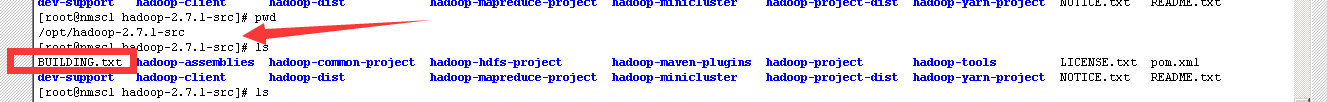

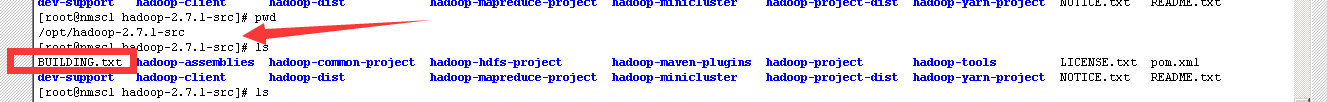

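When you run into the problem above, the most reliable reference is the official build instructions, found in BUILDING.txt at the root of the Hadoop 2.7.1 source tree. My source tree is in /opt/hadoop-2.7.1-src/, as the screenshot shows:

The important parts of BUILDING.txt are analyzed below:

1) Build prerequisites

From BUILDING.txt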

Requirements:

* Unix System

* JDK 1.7+

* Maven 3.0 or later

* Findbugs 1.3.9 (if running findbugs)

* ProtocolBuffer 2.5.0

* CMake 2.6 or newer (if compiling native code), must be 3.0 or newer on Mac

* Zlib devel (if compiling native code)

* openssl devel ( if compiling native hadoop-pipes and to get the best HDFS encryption performance )

* Jansson C XML parsing library ( if compiling libwebhdfs )

* Linux FUSE (Filesystem in Userspace) version 2.6 or above ( if compiling fuse_dfs )

* Internet connection for first build (to fetch all Maven and Hadoop dependencies)
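
Before starting a long build it can help to confirm that the command-line tools from this list are actually on PATH. The following is a rough sketch, not part of BUILDING.txt; missing_tools is a hypothetical helper, and the tool names passed to it are the usual executable names for the JDK, Maven, ProtocolBuffer, CMake and a C toolchain.

```shell
#!/bin/sh
# Print every command from the argument list that cannot be found on PATH.
# missing_tools is a hypothetical helper name used only for this sketch.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Executables assumed to correspond to the requirements listed above.
missing_tools java mvn protoc cmake gcc make
```

An empty result means every listed tool was found. Header packages such as zlib devel and openssl devel are not executables, so this check does not cover them.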

2) Overview of the Hadoop Maven modules

- hadoop-project (Parent POM for all Hadoop Maven modules.All plugins & dependencies versions are defined here.)

- hadoop-project-dist (Parent POM for modules that generate distributions.)

- hadoop-annotations (Generates the Hadoop doclet used to generated the Javadocs)

- hadoop-assemblies (Maven assemblies used by the different modules)

- hadoop-common-project (Hadoop Common)

- hadoop-hdfs-project (Hadoop HDFS)

- hadoop-mapreduce-project (Hadoop MapReduce)

- hadoop-tools (Hadoop tools like Streaming, Distcp, etc.)

- hadoop-dist (Hadoop distribution assembler)

3) Where to run Maven from

From BUILDING.txt

Where to run Maven from?

It can be run from any module. The only catch is that if not run from utrunk

all modules that are not part of the build run must be installed in the local

Maven cache or available in a Maven repository.

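You can build a single module, or build all modules from the top-level module. The difference is that building a single module (with mvn install) only places that module's compiled jar in the local Maven repository, whereas building from the top-level module does the same for every module and, with -Pdist -Dtar, also packages a Hadoop tar.gz distribution for this machine.

4) About Snappy

From BUILDING.txt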

Snappy build options:

Snappy is a compression library that can be utilized by the native code.

It is currently an optional component, meaning that Hadoop can be built with

or without this dependency.

* Use -Drequire.snappy to fail the build if libsnappy.so is not found.

If this option is not specified and the snappy library is missing,

we silently build a version of libhadoop.so that cannot make use of snappy.

This option is recommended if you plan on making use of snappy and want

to get more repeatable builds.

* Use -Dsnappy.prefix to specify a nonstandard location for the libsnappy

header files and library files. You do not need this option if you have

installed snappy using a package manager.

* Use -Dsnappy.lib to specify a nonstandard location for the libsnappy library

files. Similarly to snappy.prefix, you do not need this option if you have

installed snappy using a package manager.

* Use -Dbundle.snappy to copy the contents of the snappy.lib directory into

the final tar file. This option requires that -Dsnappy.lib is also given,

and it ignores the -Dsnappy.prefix option.

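Hadoop can compress stored files with a chosen compression codec and transparently decompress them when a client reads them, returning the data in its original form. Supported codecs include LZO and Snappy. Snappy support is not enabled by default: to use it, first install the Snappy library on Linux, then compile Hadoop to produce the distribution.

Snappy is a widely used codec and a common first choice for HBase, the distributed column-oriented database. HBase depends on a Hadoop environment, so if you plan to run HBase with Snappy compression later, it is worth compiling Snappy support in now.

5) Choosing a build mode

From BUILDING.txt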
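
To make the Snappy options quoted above concrete, here is a small sketch that assembles the Maven arguments for a native build. It only prints the command rather than running it. snappy_build_args is a hypothetical helper, and the choice of -Pdist,native (without docs) is an assumption made for this example; the -D options themselves are the ones documented in BUILDING.txt.

```shell
#!/bin/sh
# Print mvn arguments for a native build with optional Snappy settings.
#   $1: "yes" to fail the build when libsnappy.so is not found
#   $2: optional nonstandard directory holding the libsnappy library files;
#       when given, the library is also bundled into the final tar file
# snappy_build_args is a hypothetical helper name used only for this sketch.
snappy_build_args() {
    args="package -Pdist,native -DskipTests -Dtar"
    if [ "${1:-}" = "yes" ]; then
        args="$args -Drequire.snappy"
    fi
    if [ -n "${2:-}" ]; then
        args="$args -Dsnappy.lib=$2 -Dbundle.snappy"
    fi
    printf '%s\n' "$args"
}

# Show the command for a build that must find Snappy in a standard location.
echo "mvn $(snappy_build_args yes)"
```

Passing -Drequire.snappy is what turns a silently Snappy-less libhadoop.so into a visible build failure, which is usually preferable when HBase will depend on it.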

----------------------------------------------------------------------------------

Building distributions:

Create binary distribution without native code and without documentation:

$ mvn package -Pdist -DskipTests -Dtar

Create binary distribution with native code and with documentation:

$ mvn package -Pdist,native,docs -DskipTests -Dtar

Create source distribution:

$ mvn package -Psrc -DskipTests

Create source and binary distributions with native code and documentation:

$ mvn package -Pdist,native,docs,src -DskipTests -Dtar

Create a local staging version of the website (in /tmp/hadoop-site)

$ mvn clean site; mvn site:stage -DstagingDirectory=/tmp/hadoop-site

----------------------------------------------------------------------------------

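Roughly speaking, the commands above cover, in order: a binary distribution without native code or documentation, a binary distribution with both, a source distribution, source and binary distributions together, and a local staging copy of the website.

6) Single-node and cluster installation

From BUILDING.txt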

----------------------------------------------------------------------------------

Installing Hadoop

Look for these HTML files after you build the document by the above commands.

* Single Node Setup:

hadoop-project-dist/hadoop-common/SingleCluster.html

* Cluster Setup:

hadoop-project-dist/hadoop-common/ClusterSetup.html

----------------------------------------------------------------------------------

7) Maven memory settings when building Hadoop

From BUILDING.txt

----------------------------------------------------------------------------------

If the build process fails with an out of memory error, you should be able to fix

it by increasing the memory used by maven -which can be done via the environment

variable MAVEN_OPTS.

Here is an example setting to allocate between 256 and 512 MB of heap space to

Maven

export MAVEN_OPTS="-Xms256m -Xmx512m"

----------------------------------------------------------------------------------

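In short, if the Maven build fails with an out-of-memory error, set Maven's memory options first; on Linux, for example, run export MAVEN_OPTS="-Xms256m -Xmx512m". The same setting also helps later when building the Spark source.

3. Preparing the Hadoop build prerequisites

1) Unix System (Linux here; install the operating system yourself, or use a virtual machine if you have no spare hardware)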

2)JDK 1.7+

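#1. OpenJDK is not recommended; use the JDK from Oracle's official site

#2. First uninstall any older JDK or OpenJDK that shipped with the system

#Check the Java version currently installed with java -version

#Then list the installed packages with rpm -qa | grep gcj

#Finally remove the package with rpm -e --nodeps java-1.5.0-gcj-1.5.0.0-29.1.el6.x86_64

#3. Install jdk-7u65-linux-x64.gz

#Download jdk-7u65-linux-x64.gz to /opt/java/jdk-7u65-linux-x64.gz and extract it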

cd /opt/java/

tar -zxvf jdk-7u65-linux-x64.gz

#Configure the system environment variables

vi /etc/profile

#Append the following to the end of the file

export JAVA_HOME=/opt/java/jdk1.7.0_65

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

#Apply the configuration

source /etc/profile

#4. Check that the JDK environment is configured correctly

java -version

3)Maven 3.0 or later

#1. Download apache-maven-3.3.3.tar.gz to /opt/ and extract it

cd /opt

tar zxvf apache-maven-3.3.3.tar.gz

#2. Configure environment variables

vi /etc/profile

#Add the following

MAVEN_HOME=/opt/apache-maven-3.3.3

export MAVEN_HOME

export PATH=${PATH}:${MAVEN_HOME}/bin

#3. Apply the configuration

source /etc/profile

#4. Check that Maven is installed

mvn -version

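#5. Configure a Maven mirror. By default Maven downloads dependency jars and plugins from the central repository, which is hosted abroad. Mirrors of the central repository are available within China; I originally used the oschina mirror.

#If you are not familiar with Maven, read up on it first. Preferably do not edit /opt/apache-maven-3.3.3/conf/settings.xml, because that file applies to all users. Edit the file under the current user's home directory instead; for the hadoop user that is /home/hadoop/.m2/settings.xml.

#Add the following to that file. The oschina Maven repository is no longer available, so use the Aliyun repository instead:

<localRepository>/opt/maven-localRepository</localRepository>
<mirrors>
  <mirror>
    <id>alimaven</id>
    <name>aliyun maven</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    <mirrorOf>central</mirrorOf>
  </mirror>
</mirrors>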

4)Findbugs 3.0.1 (if running findbugs)

#1. Install

tar zxvf findbugs-3.0.1.tar.gz

#2. Configure environment variables

vi /etc/profile

#Add the following:

export FINDBUGS_HOME=/opt/findbugs-3.0.1

export PATH=$PATH:$FINDBUGS_HOME/bin

#3. Apply the configuration

source /etc/profile

#4. Run findbugs to check the installation

findbugs

5)ProtocolBuffer 2.5.0

#1. Install (cmake must be installed first, so complete item 6 before this step)

tar zxvf protobuf-2.5.0.tar.gz

cd protobuf-2.5.0

./configure --prefix=/usr/local/protobuf

make

make check

make install

#2. Configure environment variables

vi /etc/profile

#Add the following:

export PATH=$PATH:/usr/local/protobuf/bin

export PKG_CONFIG_PATH=/usr/local/protobuf/lib/pkgconfig/

#3. Apply the configuration by running source /etc/profile

#4. Run protoc --version to check the installation

protoc --version
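
BUILDING.txt names ProtocolBuffer 2.5.0 specifically, so it is worth checking the exact version string rather than only that protoc runs. A minimal sketch follows, assuming protoc 2.5.0 reports itself as "libprotoc 2.5.0"; protoc_is_250 is a hypothetical helper name.

```shell
#!/bin/sh
# Succeed only when the given string is exactly the version line that
# protoc 2.5.0 is assumed to print. Hypothetical helper for this sketch.
protoc_is_250() {
    [ "$1" = "libprotoc 2.5.0" ]
}

if command -v protoc >/dev/null 2>&1; then
    if protoc_is_250 "$(protoc --version)"; then
        echo "protoc 2.5.0 found"
    else
        echo "unexpected protoc version: $(protoc --version)"
    fi
else
    echo "protoc is not on PATH"
fi
```

If a different version is reported, check that /usr/local/protobuf/bin comes before any system-wide protoc in PATH.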

6)CMake 2.6 or newer (if compiling native code), must be 3.0 or newer on Mac

#1. Prerequisites

yum install gcc-c++

yum install ncurses-devel

#2. Install

#Option 1: install directly with yum install cmake

#Option 2: download the tar.gz and build from source

#Download cmake-3.3.2.tar.gz, then build and install it

tar -zxv -f cmake-3.3.2.tar.gz

cd cmake-3.3.2

./bootstrap

make

make install

#3. Run cmake to check the installation

cmake

7)Zlib devel (if compiling native code)

yum -y install gcc gcc-c++ make autoconf automake libtool cmake pkgconfig zlib-devel openssl-devel

8)openssl devel ( if compiling native hadoop-pipes and to get the best HDFS encryption performance )

yum install openssl-devel

9)Jansson C XML parsing library ( if compiling libwebhdfs )

10)Linux FUSE (Filesystem in Userspace) version 2.6 or above ( if compiling fuse_dfs )

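11) Internet connection for first build (to fetch all Maven and Hadoop dependencies)

The last three items depend on your situation and are not mandatory.

While setting up my environment I installed a lot of things, including some missing libraries. The command below uses the CentOS/RHEL package names; build-essential, zlib1g-dev and libssl-dev are Debian/Ubuntu names and are not available through yum:

yum -y install gcc gcc-c++ make autoconf automake libtool zlib-devel pkgconfig openssl-devel

4. Building the Hadoop source

Change to the source root /opt/hadoop-2.7.1-src and run the build: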

cd /opt/hadoop-2.7.1-src

export MAVEN_OPTS="-Xms256m -Xmx512m"

mvn package -Pdist,native,docs -DskipTests -Dtar

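The build can take half an hour or more and downloads the dependency jars from the internet, so wait patiently for it to finish. On success it prints a summary like this: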

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Apache Hadoop Common ............................... SUCCESS [02:27 min]

[INFO] Apache Hadoop NFS .................................. SUCCESS [ 4.841 s]

[INFO] Apache Hadoop KMS .................................. SUCCESS [ 15.176 s]

[INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.055 s]

[INFO] Apache Hadoop HDFS ................................. SUCCESS [03:36 min]

[INFO] Apache Hadoop HttpFS ............................... SUCCESS [ 21.601 s]

[INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 4.182 s]

[INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 3.577 s]

[INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.036 s]

[INFO] hadoop-yarn ........................................ SUCCESS [ 0.033 s]

[INFO] hadoop-yarn-api .................................... SUCCESS [01:53 min]

[INFO] hadoop-yarn-common ................................. SUCCESS [ 23.525 s]

[INFO] hadoop-yarn-server ................................. SUCCESS [ 0.042 s]

[INFO] hadoop-yarn-server-common .......................... SUCCESS [ 8.896 s]

[INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 11.562 s]

[INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 3.324 s]

[INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 6.115 s]

[INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 14.149 s]

[INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 3.887 s]

[INFO] hadoop-yarn-client ................................. SUCCESS [ 5.333 s]

[INFO] hadoop-yarn-server-sharedcachemanager .............. SUCCESS [ 2.249 s]

[INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.032 s]

[INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 1.915 s]

[INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 1.450 s]

[INFO] hadoop-yarn-site ................................... SUCCESS [ 0.049 s]

[INFO] hadoop-yarn-registry ............................... SUCCESS [ 4.165 s]

[INFO] hadoop-yarn-project ................................ SUCCESS [ 4.168 s]

[INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.077 s]

[INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 15.869 s]

[INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 15.401 s]

[INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 2.696 s]

[INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 5.780 s]

[INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 4.528 s]

[INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 3.592 s]

[INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 1.262 s]

[INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 3.969 s]

[INFO] hadoop-mapreduce ................................... SUCCESS [ 3.829 s]

[INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 2.999 s]

[INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 7.995 s]

[INFO] Apache Hadoop Archives ............................. SUCCESS [ 1.425 s]

[INFO] Apache Hadoop Rumen ................................ SUCCESS [ 4.508 s]

[INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 3.023 s]

[INFO] Apache Hadoop Data Join ............................ SUCCESS [ 1.896 s]

[INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 1.633 s]

[INFO] Apache Hadoop Extras ............................... SUCCESS [ 2.256 s]

[INFO] Apache Hadoop Pipes ................................ SUCCESS [ 1.738 s]

[INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 3.198 s]

[INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [ 8.421 s]

[INFO] Apache Hadoop Azure support ........................ SUCCESS [ 2.808 s]

[INFO] Apache Hadoop Client ............................... SUCCESS [ 10.124 s]

[INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 0.097 s]

[INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 3.395 s]

[INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 10.150 s]

[INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.035 s]

[INFO] Apache Hadoop Distribution ......................... SUCCESS [01:48 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 14:12 min

[INFO] Finished at: 2015-11-04T16:08:14+08:00

[INFO] Final Memory: 139M/1077M

[INFO] ------------------------------------------------------------------------

The built Hadoop distribution is located here:

cd /opt/hadoop-2.7.1-src/hadoop-dist/target

ls

antrun hadoop-2.7.1.tar.gz maven-archiver

dist-layout-stitching.sh hadoop-dist-2.7.1.jar test-dir

dist-tar-stitching.sh hadoop-dist-2.7.1-javadoc.jar

hadoop-2.7.1 javadoc-bundle-options

5. Sharing the dependency packages for the Hadoop build environment