FastDFS Cluster Setup with Keepalived + Nginx High Availability

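This guide uses my own ready-built virtual machines, running a highly available, load-balanced FastDFS cluster, as the example. Download: cluster node images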

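1. Environment Preparation

CentOS 6.6, VMware

Eight nodes: node22, node23, and node25 through node30. node22 and node23 run keepalived + nginx for high-availability load balancing (see the architecture diagram for the overall idea). node25 and node26 are trackers; node27 through node30 are storage nodes. node27 and node28 form one group and node29 and node30 form another; after a file is uploaded to one node in a group, FastDFS's internal mechanism synchronizes it to the other nodes in the same group.

Architecture diagram

How it works: keepalived presents a single virtual IP (VIP). A request to the VIP reaches one of the two load-balancing nginx nodes, which balances across the nginx instances on the two trackers; the nginx on the tracker then fetches the file from one of the storage groups.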

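Notes on FastDFS:

Nodes in the same group (volume) back each other up and hold identical data, so the usable capacity of a group is that of its smallest node. The more nodes a group has, the better its read throughput, because reads are effectively load-balanced within the group. Different groups in a cluster hold different content and provide horizontal scaling, so to expand capacity you either add a group or enlarge the nodes of an existing group.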
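The capacity rule above can be worked through with a small sketch. The function names and the 10000 MB figure are made up for illustration; 12669 MB is the disk size reported later by fdfs_monitor.

```shell
#!/bin/bash
# Usable capacity of one group = capacity of its smallest node (all values in MB).
group_capacity() {
  local min=$1 n
  for n in "$@"; do
    if [ "$n" -lt "$min" ]; then
      min=$n
    fi
  done
  echo "$min"
}

# Capacity of the cluster = sum of the group capacities.
cluster_capacity() {
  local total=0 c
  for c in "$@"; do
    total=$((total + c))
  done
  echo "$total"
}

# Hypothetical layout: group1 has one smaller node, group2 has two equal nodes.
g1=$(group_capacity 12669 10000)
g2=$(group_capacity 12669 12669)
echo "group1=${g1}MB group2=${g2}MB cluster=$(cluster_capacity "$g1" "$g2")MB"
```

Adding a third node to group1 would not raise its capacity unless the smallest node is also enlarged; adding a third group would.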

Resources:

Configuration file downloads

fastdfs_client.zip, fastdfs_client_java._v1.25.tar.gz, fastdfs_client_v1.24.jar, FastDFS_v5.05.tar.gz, fastdfs-nginx-module_v1.16.tar.gz, libfastcommon-1.0

Installation package downloads

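2. Installation

Step 1: Install libfastcommon on all nodes

Copy the installation packages to each of the six FastDFS nodes.

Install the build dependencies on every node. Xshell's "to all sessions" feature runs the command on all nodes at once.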

yum install make cmake gcc gcc-c++

For now, keep all the packages in the /root directory. Then install libfastcommon on every node:

tar -zxvf libfastcommon-1.0.7.tar.gz

cd libfastcommon-1.0.7

./make.sh

./make.sh install

By default libfastcommon is installed to

/usr/lib64/libfastcommon.so

/usr/lib64/libfdfsclient.so

(On a 32-bit system, copy the library to /usr/lib; this is not needed on 64-bit: cp /usr/lib64/libfastcommon.so /usr/lib/)

Step 2: Install FastDFS on all nodes

cd

tar -zxvf FastDFS_v5.05.tar.gz

cd FastDFS

Make sure libfastcommon has been installed successfully before compiling.

./make.sh

./make.sh install

Note: the service scripts are installed under /etc/init.d/ by default:

/etc/init.d/fdfs_storaged

/etc/init.d/fdfs_trackerd

Note: the command-line tools are installed under /usr/bin/:

fdfs_appender_test

fdfs_appender_test1

fdfs_append_file

fdfs_crc32

fdfs_delete_file

fdfs_download_file

fdfs_file_info

fdfs_monitor

fdfs_storaged

fdfs_test

fdfs_test1

fdfs_trackerd

fdfs_upload_appender

fdfs_upload_file

stop.sh

restart.sh

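The service scripts must point to the command-line tools in /usr/bin/, but by default they reference /usr/local/bin.

So on the two tracker nodes, node25 and node26, edit the script and replace the path:

vi /etc/init.d/fdfs_trackerd

:%s+/usr/local/bin+/usr/bin

Save and exit.

On the four storage nodes, make the same change:

vi /etc/init.d/fdfs_storaged

:%s+/usr/local/bin+/usr/bin

The steps above are required for both tracker and storage nodes; the two roles differ mainly in how they are configured after FastDFS is installed. This completes installation on all nodes; configuration follows.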
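The same substitution can be done without opening vi. The following is a minimal sketch of the idea using sed; the function name and the sample line are illustrative only and are not taken from the actual init script.

```shell
#!/bin/bash
# Rewrite every /usr/local/bin reference on stdin to /usr/bin,
# mirroring the vi command :%s+/usr/local/bin+/usr/bin
fix_bin_path() {
  sed 's+/usr/local/bin+/usr/bin+g'
}

# Illustrative input line; the real script may be worded differently.
echo 'PRG=/usr/local/bin/fdfs_trackerd' | fix_bin_path

# To edit a script in place on a node (GNU sed), one could run:
#   sed -i 's+/usr/local/bin+/usr/bin+g' /etc/init.d/fdfs_trackerd
```

Using + as the sed delimiter avoids having to escape the slashes in the paths.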

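3. Configuration

Step 1: Copy the configuration files on all nodes

The sample configuration files are at:

/etc/fdfs/client.conf.sample

/etc/fdfs/storage.conf.sample

/etc/fdfs/tracker.conf.sample

Return to the /root directory:

cd

cd FastDFS/conf/

Using "to all sessions", run the following on all nodes: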

[root@node30 conf]# cp * /etc/fdfs/


cd /etc/fdfs

cd /home

mkdir fastdfs

cd fastdfs

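Create the directories. Create everything that will be needed on all nodes in one go using "to all sessions"; every all-node operation in this guide is done this way.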

mkdir client

mkdir storage

mkdir tracker
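The directory creation above can be collapsed into one idempotent command with mkdir -p. This is a small sketch; the function name is hypothetical, and the demo uses a temporary directory instead of /home so that it is safe to run anywhere.

```shell
#!/bin/bash
# Create the client, storage and tracker directories under <base>/fastdfs.
# mkdir -p creates missing parents and does not fail if a directory already exists.
make_fastdfs_dirs() {
  local base=$1
  mkdir -p "$base/fastdfs/client" "$base/fastdfs/storage" "$base/fastdfs/tracker"
}

# Demo against a throwaway directory; on a real node the base would be /home.
tmp=$(mktemp -d)
make_fastdfs_dirs "$tmp"
ls "$tmp/fastdfs"
rm -rf "$tmp"
```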

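Step 2: Configure the trackers on the two tracker nodes, 25 and 26 (only tracker.conf is needed for now); "to all sessions" is not needed here.

vi /etc/fdfs/tracker.conf

Only base_path needs to change:

base_path=/home/fastdfs/tracker

Open the tracker port (22122 by default) in the firewall on both tracker nodes: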

vi /etc/sysconfig/iptables

-A INPUT -m state --state NEW -m tcp -p tcp --dport 22122 -j ACCEPT

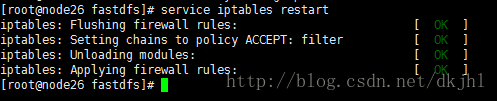

Restart the firewall on nodes 25 and 26:

service iptables restart
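The same rule shape is reused for every port this guide opens (22122, 23000, 8888, 8000 and 88). As a sketch, a small helper (a hypothetical name, not part of iptables) can print the line to paste into /etc/sysconfig/iptables:

```shell
#!/bin/bash
# Print the iptables rule line that accepts new TCP connections on one port.
iptables_accept_rule() {
  local port=$1
  echo "-A INPUT -m state --state NEW -m tcp -p tcp --dport ${port} -j ACCEPT"
}

# Ports used in this guide: tracker, storage, storage nginx, tracker nginx, front nginx.
for port in 22122 23000 8888 8000 88; do
  iptables_accept_rule "$port"
done
```

The helper only prints text; the rule still has to be added to the file on the right nodes and the firewall restarted.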

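Before the tracker is started for the first time, /home/fastdfs/tracker is empty.

Start the tracker on nodes 25 and 26:

/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf

Stop it:

/usr/bin/stop.sh /usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf

The first start creates the data and logs directories under /home/fastdfs/tracker. There are two ways to check that the tracker started successfully:

(1) Check that port 22122 is listening: netstat -unltp|grep fdfs

(2) Check the tracker startup log for errors: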

tail -100f /home/fastdfs/tracker/logs/trackerd.log
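Instead of reading the log by eye, the error lines can be counted. This sketch assumes the log format "[timestamp] LEVEL - message", which matches the DEBUG line printed by fdfs_monitor later in this guide; the function name and the sample lines are illustrative.

```shell
#!/bin/bash
# Count lines logged at ERROR level in a FastDFS log file.
# grep -c prints the count; "|| true" keeps a count of zero from being
# reported as a command failure.
count_log_errors() {
  grep -c ' ERROR - ' "$1" || true
}

# Demo with a throwaway file standing in for trackerd.log.
sample=$(mktemp)
printf '%s\n' \
  '[2018-03-08 01:36:51] INFO - illustrative info line' \
  '[2018-03-08 01:36:52] ERROR - illustrative error line' > "$sample"
echo "errors: $(count_log_errors "$sample")"
rm -f "$sample"
```

On a tracker node the argument would be /home/fastdfs/tracker/logs/trackerd.log.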

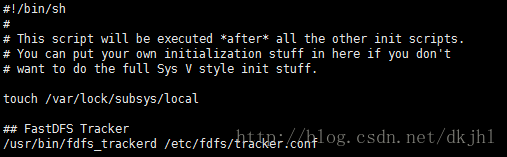

Start the FastDFS tracker at boot:

vi /etc/rc.d/rc.local

## FastDFS Tracker

/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf

The tracker is now configured.

Step 3: Configure storage (the four nodes 27, 28, 29 and 30)

cd /etc/fdfs/

vi /etc/fdfs/storage.conf

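Change the following settings. Nodes 27/28 and 29/30 differ only in group_name; everything else is identical.

# enable this configuration file
disabled=false

# group name: group1 for the first group (27, 28), group2 for the second group (29, 30)
group_name=group1

# storage port; all storage nodes in the same group must use the same port
port=23000

# base directory for storage data and logs
base_path=/home/fastdfs/storage

# storage path
store_path0=/home/fastdfs/storage

# number of storage paths; must match the number of store_path entries
store_path_count=1

# tracker IP address and port
tracker_server=192.168.25.125:22122

# add one line per tracker
tracker_server=192.168.25.126:22122

# HTTP port
http.server_port=8888

(Leave the other parameters at their defaults. For an explanation of each setting see the official documentation notes: http://bbs.chinaunix.net/thread-1941456-1-1.html )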
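Because group_name is the only per-node difference, it is easy to derive it from the node's IP address. A minimal sketch, using the addresses from this guide and a hypothetical function name:

```shell
#!/bin/bash
# Map a storage node IP to its FastDFS group, following the layout in this guide:
# 127 and 128 belong to group1, 129 and 130 belong to group2.
group_for_ip() {
  case "$1" in
    192.168.25.127|192.168.25.128) echo group1 ;;
    192.168.25.129|192.168.25.130) echo group2 ;;
    *) echo "unknown storage node: $1" >&2; return 1 ;;
  esac
}

for ip in 192.168.25.127 192.168.25.130; do
  echo "$ip -> group_name=$(group_for_ip "$ip")"
done
```

The result could then be written into storage.conf, for example with sed on the group_name line.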

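Open the firewall port on the four storage nodes.

Open the storage port (23000 by default) in the firewall:

vi /etc/sysconfig/iptables

Add the following rule:

-A INPUT -m state --state NEW -m tcp -p tcp --dport 23000 -j ACCEPT

Restart the firewall:

service iptables restart

Start the four storage nodes. Before storage is started for the first time, /home/fastdfs/storage is empty.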

/usr/bin/fdfs_storaged /etc/fdfs/storage.conf

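After startup, the data and logs directories are created under the storage directory, and data contains a large number of storage subdirectories.

Check that it started successfully:

tail -f /home/fastdfs/storage/logs/storaged.log

Check that port 23000 is listening:

netstat -unltp|grep fdfs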

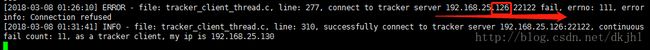

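Failover test: stop the tracker on node 26 and watch the storage nodes.

/usr/bin/stop.sh /usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf

The storage node logs show that the leader was 126 before the stop and 125 afterwards.

After the tracker on node 26 is restarted, all storage nodes detect it again.

Once all storage nodes are running, the following command on any storage node shows the cluster status: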

/usr/bin/fdfs_monitor /etc/fdfs/storage.conf

[root@node27 fdfs]# /usr/bin/fdfs_monitor /etc/fdfs/storage.conf

[2018-03-08 01:36:51] DEBUG - base_path=/home/fastdfs/storage, connect_timeout=30, network_timeout=60, tracker_server_count=2, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=2, server_index=1

tracker server is 192.168.25.126:22122

group count: 2

Group 1:

group name = group1

disk total space = 12669 MB

disk free space = 10158 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 8888

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1:

id = 192.168.25.127

ip_addr = 192.168.25.127 ACTIVE

http domain =

version = 5.05

join time = 2018-03-08 01:05:20

up time = 2018-03-08 01:05:20

total storage = 12669 MB

free storage = 10158 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id = 192.168.25.128

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2018-03-08 01:36:31

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.25.128

ip_addr = 192.168.25.128 ACTIVE

http domain =

version = 5.05

join time = 2018-03-08 01:05:16

up time = 2018-03-08 01:05:16

total storage = 12669 MB

free storage = 10158 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2018-03-08 01:36:31

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Group 2:

group name = group2

disk total space = 12669 MB

disk free space = 10158 MB

trunk free space = 0 MB

storage server count = 2

active server count = 2

storage server port = 23000

storage HTTP port = 8888

store path count = 1

subdir count per path = 256

current write server index = 0

current trunk file id = 0

Storage 1:

id = 192.168.25.129

ip_addr = 192.168.25.129 ACTIVE

http domain =

version = 5.05

join time = 2018-03-08 01:05:13

up time = 2018-03-08 01:05:13

total storage = 12669 MB

free storage = 10158 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id = 192.168.25.130

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2018-03-08 01:36:34

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

Storage 2:

id = 192.168.25.130

ip_addr = 192.168.25.130 ACTIVE

http domain =

version = 5.05

join time = 2018-03-08 01:05:02

up time = 2018-03-08 01:05:02

total storage = 12669 MB

free storage = 10158 MB

upload priority = 10

store_path_count = 1

subdir_count_per_path = 256

storage_port = 23000

storage_http_port = 8888

current_write_path = 0

source storage id =

if_trunk_server = 0

connection.alloc_count = 256

connection.current_count = 1

connection.max_count = 1

total_upload_count = 0

success_upload_count = 0

total_append_count = 0

success_append_count = 0

total_modify_count = 0

success_modify_count = 0

total_truncate_count = 0

success_truncate_count = 0

total_set_meta_count = 0

success_set_meta_count = 0

total_delete_count = 0

success_delete_count = 0

total_download_count = 0

success_download_count = 0

total_get_meta_count = 0

success_get_meta_count = 0

total_create_link_count = 0

success_create_link_count = 0

total_delete_link_count = 0

success_delete_link_count = 0

total_upload_bytes = 0

success_upload_bytes = 0

total_append_bytes = 0

success_append_bytes = 0

total_modify_bytes = 0

success_modify_bytes = 0

stotal_download_bytes = 0

success_download_bytes = 0

total_sync_in_bytes = 0

success_sync_in_bytes = 0

total_sync_out_bytes = 0

success_sync_out_bytes = 0

total_file_open_count = 0

success_file_open_count = 0

total_file_read_count = 0

success_file_read_count = 0

total_file_write_count = 0

success_file_write_count = 0

last_heart_beat_time = 2018-03-08 01:36:42

last_source_update = 1970-01-01 08:00:00

last_sync_update = 1970-01-01 08:00:00

last_synced_timestamp = 1970-01-01 08:00:00

A storage node is healthy when its status is shown as ACTIVE.
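With four storage nodes the output is long, so the status check can be scripted. The sketch below reads fdfs_monitor output on stdin and counts ip_addr lines whose last field is not ACTIVE. The function name is hypothetical, and OFFLINE is used only as an example of a non-ACTIVE value.

```shell
#!/bin/bash
# Count storage nodes that are NOT in the ACTIVE state.
# Each node appears as a line such as: ip_addr = 192.168.25.127 ACTIVE
count_inactive() {
  awk '/ip_addr =/ && $NF != "ACTIVE" { n++ } END { print n + 0 }'
}

# Demo on two illustrative lines; on a node one would pipe the real output:
#   /usr/bin/fdfs_monitor /etc/fdfs/storage.conf | count_inactive
printf '%s\n' \
  'ip_addr = 192.168.25.127 ACTIVE' \
  'ip_addr = 192.168.25.128 OFFLINE' | count_inactive
```

A result of 0 means every listed storage node is ACTIVE.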

Stop storage:

/usr/bin/stop.sh /usr/bin/fdfs_storaged /etc/fdfs/storage.conf

Start storage at boot:

vi /etc/rc.d/rc.local

/usr/bin/fdfs_storaged /etc/fdfs/storage.conf

4. Testing

Step 1: Edit the client configuration file client.conf on a tracker server (node 25 is used for the test; node 26 can be configured the same way)

vi /etc/fdfs/client.conf

base_path=/home/fastdfs/tracker

tracker_server=192.168.25.125:22122

tracker_server=192.168.25.126:22122

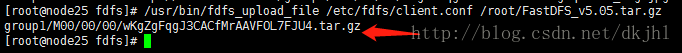

File upload test:

/usr/bin/fdfs_test /etc/fdfs/client.conf upload anti-steal.jpg

Test another file; synchronization between nodes is fast:

/usr/bin/fdfs_upload_file /etc/fdfs/client.conf /root/FastDFS_v5.05.tar.gz

Running the two tests again uploads the files to group2.

5. Install the FastDFS module for nginx on the four storage nodes

Step 1: Build nginx with the FastDFS module and configure it

Return to the /root directory:

cd

tar -zxvf fastdfs-nginx-module_v1.16.tar.gz

cd fastdfs-nginx-module

cd src/

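pwd (note the path for later use)

/root/fastdfs-nginx-module/src

vi config

Remove "local" from every path in this file (so that /usr/local/... becomes /usr/...) and save. This path change is important; without it the nginx build fails.

In this example nginx is already installed on every node, so it only needs to be recompiled and reinstalled.

(If it is not installed, first install the dependencies: yum install gcc gcc-c++ make automake autoconf libtool pcre pcre-devel zlib zlib-devel openssl openssl-devel)

Rebuild and reconfigure nginx on all storage nodes:

cd /root/nginx-1.8.0

(Alternatively: ./configure --prefix=/usr/local/nginx --add-module=/usr/local/src/fastdfs-nginx-module/src)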

./configure --prefix=/usr/nginx-1.8 \

--add-module=/root/fastdfs-nginx-module/src

make

make install

cp /root/fastdfs-nginx-module/src/mod_fastdfs.conf /etc/fdfs

vi /etc/fdfs/mod_fastdfs.conf

The mod_fastdfs.conf for the first storage group differs from the second group's only in group_name. The settings to change are:

connect_timeout=10

base_path=/tmp

tracker_server=192.168.25.125:22122

tracker_server=192.168.25.126:22122

storage_server_port=23000

group_name=group1 #group1 on nodes 27 and 28, group2 on nodes 29 and 30

url_have_group_name = true

store_path0=/home/fastdfs/storage

group_count = 2

[group1]

group_name=group1

storage_server_port=23000

store_path_count=1

store_path0=/home/fastdfs/storage

[group2]

group_name=group2

storage_server_port=23000

store_path_count=1

store_path0=/home/fastdfs/storage

The complete configuration file for one of the groups (group1):

# connect timeout in seconds

# default value is 30s

connect_timeout=10

# network recv and send timeout in seconds

# default value is 30s

network_timeout=30

# the base path to store log files

base_path=/tmp

# if load FastDFS parameters from tracker server

# since V1.12

# default value is false

load_fdfs_parameters_from_tracker=true

# storage sync file max delay seconds

# same as tracker.conf

# valid only when load_fdfs_parameters_from_tracker is false

# since V1.12

# default value is 86400 seconds (one day)

storage_sync_file_max_delay = 86400

# if use storage ID instead of IP address

# same as tracker.conf

# valid only when load_fdfs_parameters_from_tracker is false

# default value is false

# since V1.13

use_storage_id = false

# specify storage ids filename, can use relative or absolute path

# same as tracker.conf

# valid only when load_fdfs_parameters_from_tracker is false

# since V1.13

storage_ids_filename = storage_ids.conf

# FastDFS tracker_server can ocur more than once, and tracker_server format is

# "host:port", host can be hostname or ip address

# valid only when load_fdfs_parameters_from_tracker is true

tracker_server=192.168.25.125:22122

tracker_server=192.168.25.126:22122

# the port of the local storage server

# the default value is 23000

storage_server_port=23000

# the group name of the local storage server

group_name=group1

# if the url / uri including the group name

# set to false when uri like /M00/00/00/xxx

# set to true when uri like ${group_name}/M00/00/00/xxx, such as group1/M00/xxx

# default value is false

url_have_group_name = true

# path(disk or mount point) count, default value is 1

# must same as storage.conf

store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path

# the paths must be exist

# must same as storage.conf

store_path0=/home/fastdfs/storage

#store_path1=/home/yuqing/fastdfs1

# standard log level as syslog, case insensitive, value list:

### emerg for emergency

### alert

### crit for critical

### error

### warn for warning

### notice

### info

### debug

log_level=info

# set the log filename, such as /usr/local/apache2/logs/mod_fastdfs.log

# empty for output to stderr (apache and nginx error_log file)

log_filename=

# response mode when the file not exist in the local file system

## proxy: get the content from other storage server, then send to client

## redirect: redirect to the original storage server (HTTP Header is Location)

response_mode=proxy

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a

# multi aliases split by comma. empty value means auto set by OS type

# this paramter used to get all ip address of the local host

# default values is empty

if_alias_prefix=

# use "#include" directive to include HTTP config file

# NOTE: #include is an include directive, do NOT remove the # before include

#include http.conf

# if support flv

# default value is false

# since v1.15

flv_support = true

# flv file extension name

# default value is flv

# since v1.15

flv_extension = flv

# set the group count

# set to none zero to support multi-group

# set to 0 for single group only

# groups settings section as [group1], [group2], ..., [groupN]

# default value is 0

# since v1.14

group_count = 2

# group settings for group #1

# since v1.14

# when support multi-group, uncomment following section

[group1]

group_name=group1

storage_server_port=23000

store_path_count=1

store_path0=/home/fastdfs/storage

#store_path1=/home/yuqing/fastdfs1

# group settings for group #2

# since v1.14

# when support multi-group, uncomment following section as neccessary

[group2]

group_name=group2

storage_server_port=23000

store_path_count=1

store_path0=/home/fastdfs/storage

Step 2: Configure nginx on the four storage nodes

cd /usr/nginx-1.8/conf

vi nginx.conf

server {

listen 8888;

server_name localhost;

location ~/group([0-9])/M00 {

ngx_fastdfs_module;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

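Notes:

A. Port 8888 must match http.server_port=8888 in /etc/fdfs/storage.conf. http.server_port defaults to 8888; if you want to use 80 instead, change it in both places.

B. When storage has multiple groups, the access path includes the group name, for example /group1/M00/00/00/xxx, and the corresponding nginx configuration is:

location ~/group([0-9])/M00 {

ngx_fastdfs_module;

}

C. If downloads keep returning 404, change user nobody on the first line of nginx.conf to user root and restart nginx.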
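To see which request paths the location pattern in note B would catch, the same expression can be tried with grep -E. This is only an approximation (nginx uses PCRE, grep -E uses POSIX extended regular expressions), but for this simple pattern the two behave the same; the function name is hypothetical.

```shell
#!/bin/bash
# Return success if the URI would match the pattern /group([0-9])/M00.
matches_fastdfs_location() {
  printf '%s\n' "$1" | grep -Eq '/group([0-9])/M00'
}

for uri in /group1/M00/00/00/a.jpg /group10/M00/00/00/a.jpg /index.html; do
  if matches_fastdfs_location "$uri"; then
    echo "$uri -> handled by ngx_fastdfs_module"
  else
    echo "$uri -> not matched"
  fi
done
```

Note that [0-9] matches exactly one digit, so a group named group10 would not be matched by this pattern as written.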

On the four storage nodes, open nginx port 8888 in the firewall:

vi /etc/sysconfig/iptables

-A INPUT -m state --state NEW -m tcp -p tcp --dport 8888 -j ACCEPT

service iptables restart

Start nginx:

cd /usr/nginx-1.8

./sbin/nginx

Start nginx at boot:

vi /etc/rc.local

/usr/nginx-1.8/sbin/nginx

Open the files uploaded during the tests in a browser:

http://192.168.25.129:8888/group2/M00/00/00/wKgZgVqgKLKAVhNNAABdrZgsqUU428_big.jpg

http://192.168.25.129:8888/group2/M00/00/00/wKgZglqgKLWACQnpAAVFOL7FJU4.tar.gz

http://192.168.25.128:8888/group1/M00/00/00/wKgZgFqgJ3CACfMrAAVFOL7FJU4.tar.gz
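Each URL above is just a storage node address followed by the file ID (group name plus path) returned at upload time. A minimal sketch of that composition, with a hypothetical function name:

```shell
#!/bin/bash
# Build the direct download URL for a file on a storage node's nginx.
# file_id is the ID returned by the upload, e.g. group1/M00/00/00/xxx
storage_url() {
  local host=$1 port=$2 file_id=$3
  echo "http://${host}:${port}/${file_id}"
}

storage_url 192.168.25.128 8888 group1/M00/00/00/wKgZgFqgJ3CACfMrAAVFOL7FJU4.tar.gz
```

The host must be a node of the group named in the file ID, or another storage node whose nginx module can proxy to it.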

Step 3: Install nginx with the cache purge module on the tracker nodes (192.168.25.125 and 192.168.25.126) and configure it

The ngx_cache_purge module removes the cached copy of a given URL.

tar -zxvf ngx_cache_purge-2.3.tar.gz

cd /root/nginx-1.8.0

./configure --prefix=/usr/nginx-1.8 \

--add-module=/root/ngx_cache_purge-2.3

make && make install

Configure nginx for load balancing and caching:

vi /usr/nginx-1.8/conf/nginx.conf

The configuration is as follows:

user root;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

use epoll;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

#cache settings

server_names_hash_bucket_size 128;

client_header_buffer_size 32k;

large_client_header_buffers 4 32k;

client_max_body_size 300m;

proxy_redirect off;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 16k;

proxy_buffers 4 64k;

proxy_busy_buffers_size 128k;

proxy_temp_file_write_size 128k;

#cache path, directory levels, shared memory size, maximum disk usage, and inactivity expiry

proxy_cache_path /home/fastdfs/cache/nginx/proxy_cache levels=1:2

keys_zone=http-cache:200m max_size=1g inactive=30d;

proxy_temp_path /home/fastdfs/cache/nginx/proxy_cache/tmp;

#servers in group1

upstream fdfs_group1 {

server 192.168.25.127:8888 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.25.128:8888 weight=1 max_fails=2 fail_timeout=30s;

}

#servers in group2

upstream fdfs_group2 {

server 192.168.25.129:8888 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.25.130:8888 weight=1 max_fails=2 fail_timeout=30s;

}

server {

listen 8000;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

#load balancing settings for each group

location /group1/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group1;

expires 30d;

}

location /group2/M00 {

proxy_next_upstream http_502 http_504 error timeout invalid_header;

proxy_cache http-cache;

proxy_cache_valid 200 304 12h;

proxy_cache_key $uri$is_args$args;

proxy_pass http://fdfs_group2;

expires 30d;

}

#access control for cache purging

location ~/purge(/.*) {

allow 127.0.0.1;

allow 192.168.25.0/24;

deny all;

proxy_cache_purge http-cache $1$is_args$args;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

Create the cache directories required by the nginx configuration above:

mkdir -p /home/fastdfs/cache/nginx/proxy_cache

mkdir -p /home/fastdfs/cache/nginx/proxy_cache/tmp

On the tracker nodes, open the port in the system firewall:

vi /etc/sysconfig/iptables

-A INPUT -m state --state NEW -m tcp -p tcp --dport 8000 -j ACCEPT

service iptables restart

Start nginx:

/usr/nginx-1.8/sbin/nginx

Start nginx at boot:

vi /etc/rc.local

/usr/nginx-1.8/sbin/nginx

File access test

Earlier the files were accessed directly through nginx on the storage nodes.

They can now be accessed through nginx on the trackers:

http://192.168.25.125:8000/group2/M00/00/00/wKgZgVqgKLKAVhNNAABdrZgsqUU428_big.jpg

http://192.168.25.125:8000/group1/M00/00/00/wKgZgFqgJ3CACfMrAAVFOL7FJU4.tar.gz

http://192.168.25.125:8000/group2/M00/00/00/wKgZglqgKLWACQnpAAVFOL7FJU4.tar.gz
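Since the tracker nginx caches responses, a changed or deleted file may need its cached copy removed. Based on the location ~/purge(/.*) block in the configuration above, the purge address is the normal path prefixed with /purge. The sketch below only builds that URL; the function name is hypothetical, and the request has to come from an address that the allow rules permit.

```shell
#!/bin/bash
# Build the cache purge URL for a file served through the tracker nginx.
# base is the tracker nginx address, uri is the normal request path.
purge_url() {
  local base=$1 uri=$2
  echo "${base}/purge${uri}"
}

purge_url http://192.168.25.125:8000 /group1/M00/00/00/wKgZgFqgJ3CACfMrAAVFOL7FJU4.tar.gz
```

The resulting URL could then be requested with a tool such as curl from an allowed host.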

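6. Configure keepalived + nginx high-availability load balancing (nodes 22 and 23)

Note: the nginx on each tracker load-balances the backend storage groups on its own, but for the FastDFS cluster as a whole to expose a single file access address, the nginx instances on the two trackers must themselves be placed behind an HA cluster.

yum install gcc gcc-c++ make automake autoconf libtool pcre pcre-devel zlib-devel openssl openssl-devel

A keepalived + nginx high-availability load-balancing cluster balances the load across the nginx instances on the two tracker nodes.

Two nodes were added for this purpose (22 as master, 23 as backup). They were cloned from an image that already has nginx and the JDK installed.

Step 1: Configure nginx; only a few settings differ from the defaults: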

user root;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

server {

listen 88;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

vi /usr/nginx-1.8/html/index.html

On 192.168.25.122, append 2 to the title:

Welcome to nginx! 2

On 192.168.25.123, append 3 to the title:

Welcome to nginx! 3

Open port 88 in the system firewall:

vi /etc/sysconfig/iptables

## Nginx

-A INPUT -m state --state NEW -m tcp -p tcp --dport 88 -j ACCEPT

service iptables restart

Check that the nginx configuration is valid:

/usr/nginx-1.8/sbin/nginx -t

The following two lines indicate that the configuration is valid:

nginx: the configuration file /usr/nginx-1.8/conf/nginx.conf syntax is ok

nginx: configuration file /usr/nginx-1.8/conf/nginx.conf test is successful

Start nginx:

/usr/nginx-1.8/sbin/nginx

Reload nginx:

/usr/nginx-1.8/sbin/nginx -s reload

Start nginx at boot:

vi /etc/rc.local

/usr/nginx-1.8/sbin/nginx

Access each nginx separately:

192.168.25.122:88

192.168.25.123:88

Step 2: Install keepalived

Upload keepalived-1.4.1.tar.gz, or download it from the official site: http://www.keepalived.org/download.html

Extract and install:

tar -zxvf keepalived-1.4.1.tar.gz

cd keepalived-1.4.1

./configure --prefix=/usr/keepalived

make && make install

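Install keepalived as a Linux system service:

Because keepalived was not installed to its default prefix (/usr/local), a few files must be copied to their default locations after installation.

keepalived reads /etc/keepalived/keepalived.conf by default, so: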

mkdir /etc/keepalived

cp /usr/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

Copy the init script to /etc/init.d:

cd /root/keepalived-1.4.1

cp ./keepalived/etc/init.d/keepalived /etc/init.d/

chmod 755 /etc/init.d/keepalived

Copy the sysconfig file to /etc/sysconfig:

cp /usr/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

Copy the keepalived binary to /usr/sbin:

cp /usr/keepalived/sbin/keepalived /usr/sbin/

Enable the keepalived service at boot:

chkconfig keepalived on

Edit the keepalived configuration file

(1) MASTER node configuration (192.168.25.122)

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

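## keepalived's built-in e-mail alerts require the sendmail service; a separate monitoring system or third-party SMTP is recommended instead

router_id edu-proxy-01 ## string identifying this node, usually the hostname

}

## keepalived runs the script periodically and adjusts the priority of the vrrp_instance according to its result.

## If the script exits with 0 and weight is greater than 0, the priority is increased accordingly.

## If the script exits non-zero and weight is less than 0, the priority is decreased accordingly.

## Otherwise the priority stays at the configured value, i.e. the priority setting in this file.

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh" ## path of the script that checks nginx

interval 2 ## check interval in seconds

weight -20 ## when the condition is met, adjust the priority by -20

}

## define the virtual router; VI_1 is its identifier and can be any name you choose

vrrp_instance VI_1 {

state MASTER ## MASTER on the primary node, BACKUP on the standby node

interface eth0 ## network interface to bind the VIP to; must be the interface that carries this host's IP address (eth1 on my machine)

virtual_router_id 51 ## virtual router ID; must be the same on both nodes; the last octet of the IP can be used. Instances with the same VRID form one group, and the VRID determines the multicast MAC address

mcast_src_ip 192.168.25.122 ## IP address of this host

priority 100 ## node priority, range 0-254; MASTER must be higher than BACKUP

nopreempt ## set on the higher-priority node so that it does not take the VIP back after recovering from a failure

advert_int 1 ## advertisement interval; must be the same on both nodes; default 1s

## authentication; must be identical on both nodes

authentication {

auth_type PASS

auth_pass 1111 ## change this as required in production

}

## add the track_script block to the instance

track_script {

chk_nginx ## run the nginx check

}

## virtual IP pool; must be the same on both nodes

virtual_ipaddress {

192.168.25.50 ## virtual IP; more than one can be defined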

}

}

(2) BACKUP node configuration (192.168.25.123):

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id edu-proxy-02

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

mcast_src_ip 192.168.25.123

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.25.50

}

}
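The priority rule described in the MASTER configuration comments can be worked through with the numbers used here (priority 100 on the master, 90 on the backup, weight -20). This is a sketch of the arithmetic only, not of keepalived's implementation; the function name is hypothetical.

```shell
#!/bin/bash
# Effective VRRP priority after one run of a vrrp_script:
#   exit 0 and weight > 0      -> priority is raised by weight
#   non-zero exit and weight < 0 -> priority is lowered (weight is added, and it is negative)
#   anything else              -> priority is unchanged
effective_priority() {
  local base=$1 weight=$2 rc=$3
  if [ "$rc" -eq 0 ] && [ "$weight" -gt 0 ]; then
    echo $((base + weight))
  elif [ "$rc" -ne 0 ] && [ "$weight" -lt 0 ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}

echo "master, check passes: $(effective_priority 100 -20 0)"
echo "master, check fails:  $(effective_priority 100 -20 1)"
echo "backup, check passes: $(effective_priority 90 -20 0)"
```

With these values a failing check on the master drops it below the backup's priority, which is why the weight is chosen to be larger than the gap between the two priorities.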

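(3) Write the nginx status check script /etc/keepalived/nginx_check.sh (already referenced in keepalived.conf)

What the script does: if nginx is not running, try to start it; if it still cannot be started, kill the local keepalived process so that the virtual IP moves to the BACKUP machine. The contents are:

vi /etc/keepalived/nginx_check.sh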

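#!/bin/bash

# count running nginx processes
A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ];then

# nginx is down: try to start it
/usr/nginx-1.8/sbin/nginx

sleep 2

# still down after 2 seconds: stop keepalived so the VIP fails over
if [ `ps -C nginx --no-header |wc -l` -eq 0 ];then

killall keepalived

fi

fi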

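Purpose: if nginx on the master goes down while keepalived is still running, the VIP does not move, so requests keep arriving at the master and cannot be served. The script therefore checks whether nginx is healthy; if nginx has died and cannot be restarted, it kills the local keepalived process so that the VIP fails over to the backup, because nginx is what actually serves user requests.

After saving, make the script executable:

chmod +x /etc/keepalived/nginx_check.sh

Start keepalived: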

service keepalived start

Starting keepalived: [ OK ]

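Keepalived + nginx high-availability test

(1) Stop nginx on 192.168.25.122; keepalived restarts it:

/usr/nginx-1.8/sbin/nginx -s stop

(2) Stop keepalived on 192.168.25.122; the VIP moves to 192.168.25.123:

service keepalived stop

Use ip a to see the VIP on the network interface.

After keepalived is stopped, the VIP disappears from that node's network interface.

(3) Start keepalived on 192.168.25.122 again; the VIP moves back to 192.168.25.122:

service keepalived start

The cluster can now be reached through a single address.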
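During the failover test it helps to check on each node whether it currently holds the VIP. The sketch below scans ip a style output on stdin for the address; the function name and the sample text are illustrative.

```shell
#!/bin/bash
# Succeed if the given IPv4 address appears as an "inet <ip>/<prefix>" entry on stdin.
# grep -F treats the dots literally; the trailing slash prevents 192.168.25.5
# from matching 192.168.25.50.
has_vip() {
  grep -Fq "inet $1/"
}

# Demo on sample text; on a node one would run: ip a | has_vip 192.168.25.50
sample='    inet 192.168.25.122/24 brd 192.168.25.255 scope global eth0
    inet 192.168.25.50/32 scope global eth0'
if printf '%s\n' "$sample" | has_vip 192.168.25.50; then
  echo "VIP present on this node"
else
  echo "VIP absent on this node"
fi
```

Running the check on nodes 22 and 23 before and after stopping keepalived shows the VIP moving from one to the other.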

Keepalived service commands:

Stop: service keepalived stop

Start: service keepalived start

Restart: service keepalived restart

Status: service keepalived status

Further reading:

Summary of keepalived vrrp_script: http://my.oschina.net/hncscwc/blog/158746

Sending e-mail notifications on failure in a keepalived hot-standby pair: http://www.2cto.com/os/201407/317795.html

VIP failover with keepalived for highly available LVS and nginx: http://www.tuicool.com/articles/eu26Vz

This completes the keepalived + nginx high-availability setup.

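7. Configure load-balanced reverse proxying to the tracker nginx instances on the keepalived + nginx HA cluster (nodes 22 and 23)

Step 1: Apply the same configuration to nginx on 192.168.25.122 and 192.168.25.123

Only the following needs to be added to the configuration file: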

vi /usr/nginx-1.8/conf/nginx.conf

## FastDFS Tracker Proxy

upstream fastdfs_tracker {

server 192.168.25.125:8000 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.25.126:8000 weight=1 max_fails=2 fail_timeout=30s;

}

## FastDFS Tracker Proxy

location /dfs {

root html;

index index.html index.htm;

proxy_pass http://fastdfs_tracker/;

proxy_set_header Host $http_host;

proxy_set_header Cookie $http_cookie;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

client_max_body_size 300m;

}

Restart nginx on 192.168.25.122 and 192.168.25.123.

Access files in the FastDFS cluster through the VIP (192.168.25.50) of the keepalived + nginx HA cluster:

http://192.168.25.50:88/dfs/group2/M00/00/00/wKgZgVqgKLKAVhNNAABdrZgsqUU428_big.jpg

Step 2: How to use it

FILE_SYS_URL = http://192.168.25.50:88/dfs/

Add the tracker entries to fdfs_client.conf, one line per tracker:

tracker_server = 192.168.25.125:22122

tracker_server = 192.168.25.126:22122
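If the number of trackers changes, the tracker_server lines can be generated rather than typed. A minimal sketch, assuming every tracker listens on the default port 22122 used throughout this guide; the function name is hypothetical.

```shell
#!/bin/bash
# Print one tracker_server line per tracker IP, using the default tracker port.
tracker_lines() {
  local t
  for t in "$@"; do
    echo "tracker_server = ${t}:22122"
  done
}

tracker_lines 192.168.25.125 192.168.25.126
```

The output can be appended to fdfs_client.conf on the application side.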

http://192.168.25.50:88/dfs/group1/M00/00/00/wKgZf1qiVgWAKAofAAF9Se5IIHk477.jpg