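ORB-SLAM Code Walkthrough: SLAM System Initialization

- system.h

- System::System in system.cc

- ORBVocabulary: the vocabulary class

- KeyFrameDatabase: the keyframe database

- Map: building the map

- FrameDrawer: drawing tools

- Tracking

- LocalMapping: the local mapping class

- LoopClosing: loop closure detection

Please credit the source when reposting: http://blog.csdn.net/c602273091/article/details/54933760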

system.h

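This header declares everything the whole SLAM system needs, so reading it gives a rough idea of what ORB-SLAM contains. Before that, we need a few basics of multithreading: mutexes 【1】 and threads 【2】.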
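As a quick refresher on those two primitives, here is a minimal sketch in standard C++11. It is not ORB-SLAM2 code: the function name CountWithThreads is made up for illustration. Several threads increment a shared counter, and a std::mutex keeps the updates from racing.

```cpp
#include <cassert>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical helper: start nThreads threads that each add nIncrements
// to one shared counter, guarded by a mutex.
int CountWithThreads(int nThreads, int nIncrements)
{
    assert(nThreads >= 0 && nIncrements >= 0);

    int counter = 0;
    std::mutex mutexCounter;

    std::vector<std::thread> vThreads;
    for(int i = 0; i < nThreads; i++)
    {
        vThreads.emplace_back([&counter, &mutexCounter, nIncrements]()
        {
            for(int k = 0; k < nIncrements; k++)
            {
                // The lock is released when `lock` goes out of scope.
                std::unique_lock<std::mutex> lock(mutexCounter);
                counter++;
            }
        });
    }

    // Wait for every worker before reading the result.
    for(std::thread &t : vThreads)
        t.join();

    return counter;
}
```

Without the lock the final count could come out lower than nThreads * nIncrements, because two threads may read and write the counter at the same time.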

The annotated code follows:

/**

* This file is part of ORB-SLAM2.

*

* Copyright (C) 2014-2016 Raúl Mur-Artal (University of Zaragoza)

* For more information see

*

* ORB-SLAM2 is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM2 is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with ORB-SLAM2. If not, see

#ifndef SYSTEM_H

#define SYSTEM_H

#include

This gives us a rough picture of ORB-SLAM.

Next, let's look at System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer).

System::System in system.cc

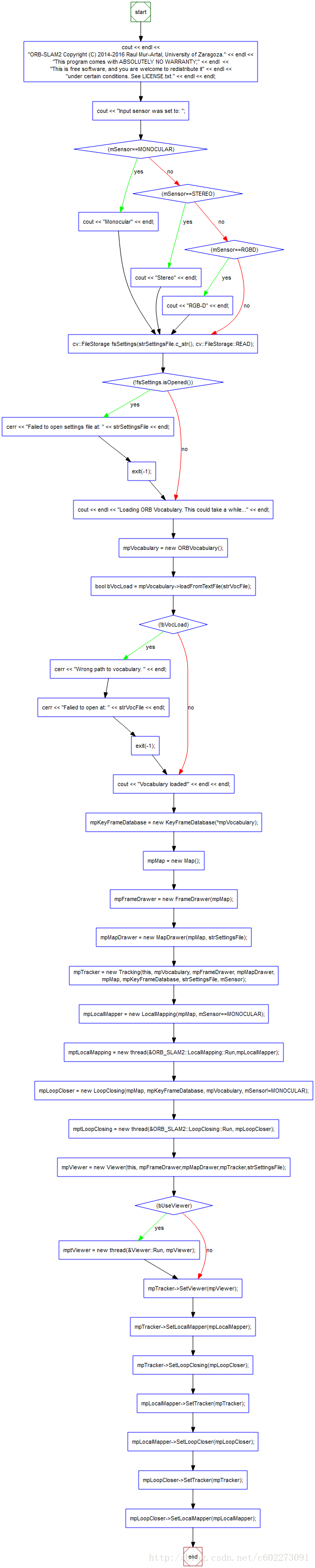

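The control flow view in Understand gets straight to the point and shows how the parts connect. With the flowchart in hand, the code is much more pleasant to read.

Building on that, I annotate the whole flow below.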

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,

const bool bUseViewer):mSensor(sensor),mbReset(false),mbActivateLocalizationMode(false),

mbDeactivateLocalizationMode(false)

{

// Output welcome message

cout << endl <<

"ORB-SLAM2 Copyright (C) 2014-2016 Raul Mur-Artal, University of Zaragoza." << endl <<

"This program comes with ABSOLUTELY NO WARRANTY;" << endl <<

"This is free software, and you are welcome to redistribute it" << endl <<

"under certain conditions. See LICENSE.txt." << endl << endl;

cout << "Input sensor was set to: ";

// Report which type of sensor is in use

if(mSensor==MONOCULAR)

cout << "Monocular" << endl;

else if(mSensor==STEREO)

cout << "Stereo" << endl;

else if(mSensor==RGBD)

cout << "RGB-D" << endl;

//Check settings file

// Check that the settings file exists

// cv::FileStorage reads XML/YAML configuration files

// After this line the YAML settings are loaded into fsSettings

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "Failed to open settings file at: " << strSettingsFile << endl;

exit(-1);

}

// Load the ORB vocabulary

//Load ORB Vocabulary

cout << endl << "Loading ORB Vocabulary. This could take a while..." << endl;

// Load the vocabulary file into mpVocabulary

mpVocabulary = new ORBVocabulary();

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

//Create KeyFrame Database

// Create the keyframe database

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

//Create the Map

// Create the map

mpMap = new Map();

//Create Drawers. These are used by the Viewer

// Create the frame drawer

mpFrameDrawer = new FrameDrawer(mpMap);

// Create the map drawer

mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

//Initialize the Tracking thread

//(it will live in the main thread of execution, the one that called this constructor)

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

//Initialize the Local Mapping thread and launch

mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);

mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

//Initialize the Loop Closing thread and launch

// Initialize the loop closing thread

mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);

mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

//Initialize the Viewer thread and launch

// Initialize the viewer thread

mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile);

if(bUseViewer)

mptViewer = new thread(&Viewer::Run, mpViewer);

mpTracker->SetViewer(mpViewer);

//Set pointers between threads

mpTracker->SetLocalMapper(mpLocalMapper);

mpTracker->SetLoopClosing(mpLoopCloser);

mpLocalMapper->SetTracker(mpTracker);

mpLocalMapper->SetLoopCloser(mpLoopCloser);

mpLoopCloser->SetTracker(mpTracker);

mpLoopCloser->SetLocalMapper(mpLocalMapper);

}

Next, let's look at how each class is initialized: the vocabulary class, the keyframe database, the map, the local mapping class, and so on.
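The pattern used by the constructor (create each component on the heap, start its Run() loop on a thread through a member-function pointer, then hand the components pointers to one another) can be reduced to a small sketch. The classes Mapper and Tracker below are hypothetical stand-ins, not the ORB-SLAM2 classes, and the stop flag is an assumption added so the sketch can shut down cleanly.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical stand-in for a background component such as LocalMapping.
class Mapper
{
public:
    // Loop until asked to stop, counting iterations.
    void Run()
    {
        while(!mbStop)
        {
            mnIterations++;
            std::this_thread::yield();
        }
    }
    void RequestStop() { mbStop = true; }
    long Iterations() const { return mnIterations; }

private:
    std::atomic<bool> mbStop{false};
    std::atomic<long> mnIterations{0};
};

// Hypothetical stand-in for Tracking, which lives in the caller's thread.
class Tracker
{
public:
    void SetMapper(Mapper* pMapper) { mpMapper = pMapper; }
    Mapper* GetMapper() const { return mpMapper; }

private:
    Mapper* mpMapper = nullptr;
};

// Follows the constructor's order: create, launch, wire, then shut down.
bool RunMiniSystem()
{
    Mapper* pMapper = new Mapper();
    Tracker* pTracker = new Tracker();

    // Same form as `new thread(&ORB_SLAM2::LocalMapping::Run, mpLocalMapper)`:
    // the thread calls pMapper->Run().
    std::thread* ptMapper = new std::thread(&Mapper::Run, pMapper);

    // Set pointers between components.
    pTracker->SetMapper(pMapper);
    bool bWired = (pTracker->GetMapper() == pMapper);

    // Stop the background loop and wait for the thread to finish.
    pMapper->RequestStop();
    assert(ptMapper->joinable());
    ptMapper->join();

    delete ptMapper;
    delete pTracker;
    delete pMapper;
    return bWired;
}
```

The sketch joins and deletes what it creates; the constructor shown above only creates and launches, so cleanup has to happen elsewhere in the real system.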

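ORBVocabulary(): the vocabulary class

This class is declared in ORBVocabulary.h, but it is really just a typedef of a template defined in the DBoW2 library, so we won't dig into it for now.

typedef DBoW2::TemplatedVocabulary<DBoW2::FORB::TDescriptor, DBoW2::FORB> ORBVocabulary;

KeyFrameDatabase(): the keyframe database

It is declared in KeyFrameDatabase.h.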

/**

* This file is part of ORB-SLAM2.

*

* Copyright (C) 2014-2016 Raúl Mur-Artal (University of Zaragoza)

* For more information see

*

* ORB-SLAM2 is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM2 is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with ORB-SLAM2. If not, see

#ifndef KEYFRAMEDATABASE_H

#define KEYFRAMEDATABASE_H

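#include

#include

In KeyFrameDatabase.cc the constructor only resizes mvInvertedFile, which appears to be the inverted index: one list of keyframes per vocabulary word, hence voc.size() entries.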
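To make the inverted-file idea concrete, here is a minimal sketch under simplifying assumptions: keyframes are plain integer ids, and a keyframe's bag of words is just a list of word ids. MiniKeyFrameDatabase, Add, and CountSharedWords are hypothetical names for illustration, not the ORB-SLAM2 API.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <map>
#include <vector>

// Hypothetical, simplified keyframe database.
class MiniKeyFrameDatabase
{
public:
    // One (initially empty) list of keyframe ids per vocabulary word,
    // mirroring mvInvertedFile.resize(voc.size()).
    explicit MiniKeyFrameDatabase(std::size_t nWords)
    {
        mvInvertedFile.resize(nWords);
    }

    // Register the keyframe under every word it contains.
    void Add(int nKFid, const std::vector<std::size_t> &vWordIds)
    {
        for(std::size_t wordId : vWordIds)
        {
            assert(wordId < mvInvertedFile.size());
            mvInvertedFile[wordId].push_back(nKFid);
        }
    }

    // Count how many of the query's words each stored keyframe shares.
    // Only the lists of the query's own words are visited, which is the
    // point of an inverted file.
    std::map<int,int> CountSharedWords(const std::vector<std::size_t> &vQueryWords) const
    {
        std::map<int,int> mSharedWords;
        for(std::size_t wordId : vQueryWords)
        {
            assert(wordId < mvInvertedFile.size());
            for(int nKFid : mvInvertedFile[wordId])
                mSharedWords[nKFid]++;
        }
        return mSharedWords;
    }

private:
    std::vector<std::list<int> > mvInvertedFile;
};
```

A lookup like this lets a query frame find candidate keyframes without comparing against every keyframe in the map. For comparison, the actual constructor in KeyFrameDatabase.cc: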

KeyFrameDatabase::KeyFrameDatabase (const ORBVocabulary &voc):

mpVoc(&voc)

{

mvInvertedFile.resize(voc.size());

}

Map(): building the map

Map.h declares the operations on the map, mainly on keyframes, map points, and reference map points.
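To show how small the core of such a container can be, below is a minimal sketch, assuming keyframes and map points are kept in std::set and every access takes a single mutex. MiniMap and its integer ids are hypothetical; the real class stores KeyFrame* and MapPoint* pointers and has more members.

```cpp
#include <cstddef>
#include <mutex>
#include <set>

// Hypothetical, simplified map: ids instead of KeyFrame*/MapPoint*.
class MiniMap
{
public:
    MiniMap() : mnMaxKFid(0) {}

    // Add a keyframe and remember the largest id seen so far.
    void AddKeyFrame(unsigned long nKFid)
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        mspKeyFrames.insert(nKFid);
        if(nKFid > mnMaxKFid)
            mnMaxKFid = nKFid;
    }

    void AddMapPoint(unsigned long nMPid)
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        mspMapPoints.insert(nMPid);
    }

    void EraseMapPoint(unsigned long nMPid)
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        mspMapPoints.erase(nMPid);
    }

    void EraseKeyFrame(unsigned long nKFid)
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        mspKeyFrames.erase(nKFid);
    }

    std::size_t KeyFramesInMap() const
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        return mspKeyFrames.size();
    }

    std::size_t MapPointsInMap() const
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        return mspMapPoints.size();
    }

    unsigned long GetMaxKFid() const
    {
        std::unique_lock<std::mutex> lock(mMutexMap);
        return mnMaxKFid;
    }

private:
    std::set<unsigned long> mspKeyFrames;
    std::set<unsigned long> mspMapPoints;
    unsigned long mnMaxKFid;
    mutable std::mutex mMutexMap;  // mutable so const getters can lock it
};
```

Erasing a keyframe leaves the maximum id untouched in this sketch, so it records the largest id ever added rather than the largest one currently stored. The declaration in Map.h, with annotations: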

class Map

{

public:

Map();

// Add a keyframe

void AddKeyFrame(KeyFrame* pKF);

// Add a map point

void AddMapPoint(MapPoint* pMP);

// Remove a map point

void EraseMapPoint(MapPoint* pMP);

// Remove a keyframe

void EraseKeyFrame(KeyFrame* pKF);

// Set the reference map points

void SetReferenceMapPoints(const std::vector

In Map.cc:

Map::Map():mnMaxKFid(0)

{

}

FrameDrawer(): drawing tools

This one can be skipped for now. It draws the frame being tracked. There is also MapDrawer, which draws the whole map.

class MapDrawer

{

public:

MapDrawer(Map* pMap, const string &strSettingPath);

Map* mpMap;

// Draw the map points

void DrawMapPoints();

// Draw the keyframes

void DrawKeyFrames(const bool bDrawKF, const bool bDrawGraph);

// Draw the current camera at pose Twc

void DrawCurrentCamera(pangolin::OpenGlMatrix &Twc);

// Set the current camera pose

void SetCurrentCameraPose(const cv::Mat &Tcw);

// Set the reference keyframe

void SetReferenceKeyFrame(KeyFrame *pKF);

void GetCurrentOpenGLCameraMatrix(pangolin::OpenGlMatrix &M);

private:

float mKeyFrameSize;

float mKeyFrameLineWidth;

float mGraphLineWidth;

float mPointSize;

float mCameraSize;

float mCameraLineWidth;

cv::Mat mCameraPose;

std::mutex mMutexCamera;

};

Tracking

This part is important and deserves a close look.

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

class Tracking

{

public:

Tracking(System* pSys, ORBVocabulary* pVoc, FrameDrawer* pFrameDrawer, MapDrawer* pMapDrawer, Map* pMap,

KeyFrameDatabase* pKFDB, const string &strSettingPath, const int sensor);

// Process the input images: extract features and do stereo matching

// Preprocess the input and call Track(). Extract features and performs stereo matching.

cv::Mat GrabImageStereo(const cv::Mat &imRectLeft,const cv::Mat &imRectRight, const double &timestamp);

cv::Mat GrabImageRGBD(const cv::Mat &imRGB,const cv::Mat &imD, const double &timestamp);

cv::Mat GrabImageMonocular(const cv::Mat &im, const double &timestamp);

// Set the local mapper, the loop closer, and the viewer

void SetLocalMapper(LocalMapping* pLocalMapper);

void SetLoopClosing(LoopClosing* pLoopClosing);

void SetViewer(Viewer* pViewer);

// Load new settings

// The focal lenght should be similar or scale prediction will fail when projecting points

// TODO: Modify MapPoint::PredictScale to take into account focal lenght

// i.e. the MapPoint scale prediction should take the focal length into account

void ChangeCalibration(const string &strSettingPath);

// Use this function if you have deactivated local mapping and you only want to localize the camera.

// Localization-only mode: only the camera pose is computed

void InformOnlyTracking(const bool &flag);

public:

// Tracking states

// The states the tracker can be in

enum eTrackingState{

SYSTEM_NOT_READY=-1,

NO_IMAGES_YET=0,

NOT_INITIALIZED=1,

OK=2,

LOST=3

};

eTrackingState mState;

eTrackingState mLastProcessedState;

// Input sensor

// Type of the input sensor

int mSensor;

// Current Frame

// The current frame and its grayscale image

Frame mCurrentFrame;

cv::Mat mImGray;

// Initialization Variables (Monocular)

std::vector<int> mvIniLastMatches;

std::vector<int> mvIniMatches;

std::vector

Tracking, it turns out, touches nearly every other part of the system.
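One way to read the eTrackingState values listed above is as a small state machine. The sketch below is a simplified interpretation, not code from Tracking.cc: NextState is a hypothetical function, and it ignores details such as system resets. It returns the next state given whether the current step (initialization, tracking, or relocalization) succeeded.

```cpp
// Same names and values as Tracking::eTrackingState in the listing above.
enum eTrackingState{
    SYSTEM_NOT_READY=-1,
    NO_IMAGES_YET=0,
    NOT_INITIALIZED=1,
    OK=2,
    LOST=3
};

// Hypothetical transition function for illustration.
eTrackingState NextState(eTrackingState state, bool bStepSucceeded)
{
    switch(state)
    {
    case SYSTEM_NOT_READY:
        // Assumed here: nothing changes until the system is ready.
        return SYSTEM_NOT_READY;
    case NO_IMAGES_YET:
        // Simplification: the first image only moves us to NOT_INITIALIZED.
        return NOT_INITIALIZED;
    case NOT_INITIALIZED:
        // Map initialization either succeeds or is retried on the next frame.
        return bStepSucceeded ? OK : NOT_INITIALIZED;
    case OK:
        // Tracking failed on this frame: the camera is lost.
        return bStepSucceeded ? OK : LOST;
    case LOST:
        // A successful relocalization brings us back to OK.
        return bStepSucceeded ? OK : LOST;
    }
    return state;
}
```

Walking a sequence of frames through NextState gives a compact picture of when the tracker is initializing, tracking normally, or trying to relocalize.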

LocalMapping(): the local mapping class

//Initialize the Local Mapping thread and launch

mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);

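mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

This class operates on the local map: its map points, its keyframes, and its interaction with loop closing. It also involves thread synchronization, which can be ignored on a first read of the code.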
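For readers who do want a feel for the synchronization, it mostly comes down to guarding shared containers with a mutex. A minimal sketch, assuming a queue of integer keyframe ids: the class MiniLocalMapping and the signatures below are made up for illustration and are not the ORB-SLAM2 ones. The tracking side pushes keyframes and the mapping side pops them, both under the same lock.

```cpp
#include <list>
#include <mutex>

// Hypothetical, simplified keyframe queue shared by two threads.
class MiniLocalMapping
{
public:
    // Called from the tracking thread: queue a new keyframe.
    void InsertKeyFrame(int nKFid)
    {
        std::unique_lock<std::mutex> lock(mMutexNewKFs);
        mlNewKeyFrames.push_back(nKFid);
    }

    // Called from the mapping thread. Returns false if nothing is queued;
    // otherwise removes the oldest keyframe and stores its id in nKFid.
    bool TryPopKeyFrame(int &nKFid)
    {
        std::unique_lock<std::mutex> lock(mMutexNewKFs);
        if(mlNewKeyFrames.empty())
            return false;
        nKFid = mlNewKeyFrames.front();
        mlNewKeyFrames.pop_front();
        return true;
    }

private:
    std::list<int> mlNewKeyFrames;
    std::mutex mMutexNewKFs;
};
```

Because both methods take the same mutex, the list is never modified by two threads at once, and keyframes come out in the order they were inserted.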

LoopClosing(): loop closure detection

//Initialize the Loop Closing thread and launch

// Initialize the loop closing thread

mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);

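mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

This is the loop closing part: it detects loops in the map and corrects the accumulated drift.

The point of reading all this initialization code is mainly to get a rough overview of the project. The next step is a detailed look at the main body of the program.

The most important call is SLAM.TrackMonocular(im,tframe); understanding it in detail lets you follow that thread and unravel everything else you need.

Classes contain many private, public, and protected keywords; see 【3】 for their access scope.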
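As a short illustration of the three access levels, here is a sketch with hypothetical classes Base and Derived, unrelated to ORB-SLAM2:

```cpp
// Hypothetical classes showing public, protected, and private access.
class Base
{
public:
    // public: callable from anywhere.
    int GetSecret() const { return mSecret; }

protected:
    // protected: visible to Base and to classes derived from it.
    int mShared = 2;

private:
    // private: visible to Base only.
    int mSecret = 1;
};

class Derived : public Base
{
public:
    int Sum() const
    {
        // mShared is accessible here; mSecret is not, so it is read
        // through the public getter instead.
        return mShared + GetSecret();
    }
};
```

Writing `mSecret` directly inside Derived::Sum, or `d.mShared` from outside both classes, would fail to compile.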

References:

【1】mutex: http://www.cplusplus.com/reference/mutex/

【2】thread: http://www.cplusplus.com/reference/thread/thread/

【3】public, private, protected: http://www.jb51.net/article/54224.htm