kaggle入门之Titanic实战(一)

为了提高自己的实践能力,最近打算在kaggle上做一些开源的项目。同时在blog上记录一下自己的学习过程。

对于新手,毫无疑问Titanic是一个非常好的入门级项目。数据集较小,特征数目也不多,处理起来不是太难。数据集见以下网址。

Titanic地址:https://www.kaggle.com/c/titanic/data

一、首先读取训练集,并观察是否有缺失值

import pandas as pd

import numpy as np

from pandas import Series, DataFrame

import matplotlib.pyplot as plt

#观察数据基本信息

data_train = pd.read_csv('train.csv')

print(data_train.info())结果如下:

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.6+ KB

可以看到在Age、Cabin、和Embarked属性对应的记录有缺失值。随后对数据进行预处理的时候进行缺失值的处理。

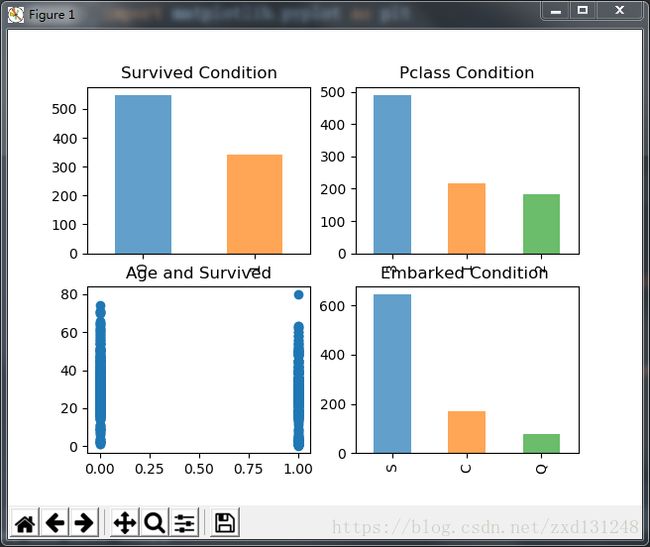

二、 观察各个属性与是否获救之间的关系

fig = plt.figure()

ax1 = fig.add_subplot(2, 2, 1)

data_train.Survived.value_counts().plot(kind='bar', alpha=0.7)

plt.title('Survived Condition')

ax2 = fig.add_subplot(2, 2, 2)

data_train.Pclass.value_counts().plot(kind='bar', alpha=0.7)

plt.title('Pclass Condition')

ax3 = fig.add_subplot(2, 2, 3)

plt.scatter(data_train.Survived, data_train.Age)

plt.title('Age and Survived')

ax4 = fig.add_subplot(2, 2, 4)

data_train.Embarked.value_counts().plot(kind='bar', alpha=0.7)

plt.title('Embarked Condition ')

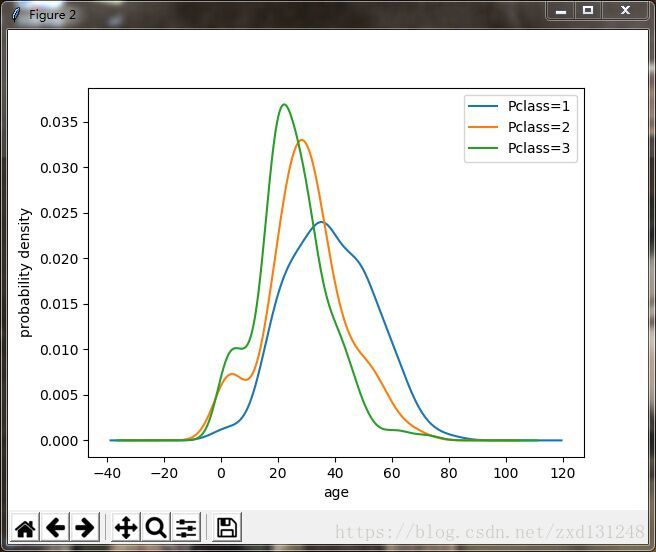

#Pclass与age的KDE图

fig1 = plt.figure()

data_train.Age[data_train.Pclass == 1].plot(kind='kde')

data_train.Age[data_train.Pclass == 2].plot(kind='kde')

data_train.Age[data_train.Pclass == 3].plot(kind='kde')

plt.xlabel('age')

plt.ylabel('probability density')

plt.legend(('Pclass=1', 'Pclass=2', 'Pclass=3'), loc='best')

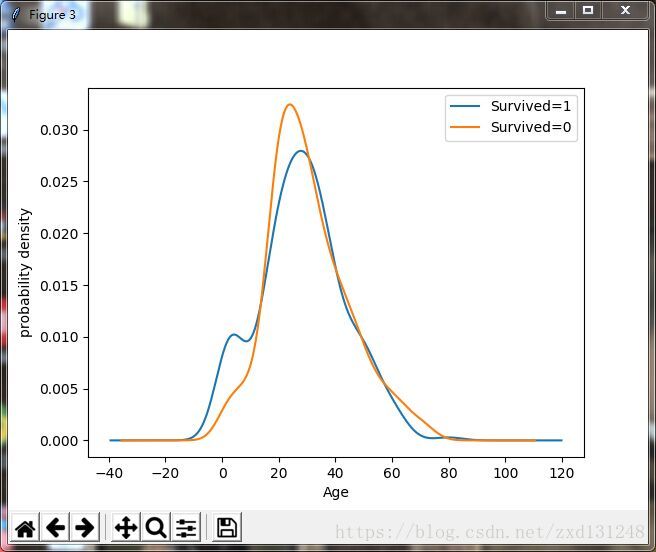

#年龄与是否生存之间的关系

fig2 = plt.figure()

data_train.Age[data_train.Survived == 1].plot(kind='kde')

data_train.Age[data_train.Survived == 0].plot(kind='kde')

plt.xlabel('Age')

plt.ylabel('probability density')

plt.legend(('Survived=1', 'Survived=0'), loc='best')

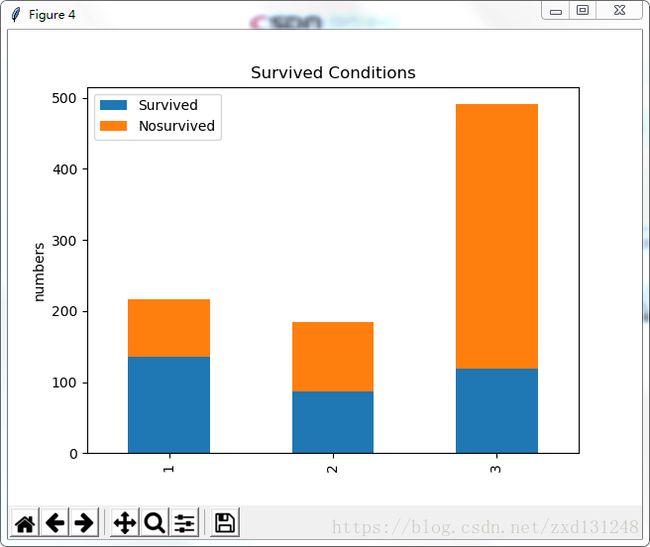

#观察乘客属性与获救结果之间的关系

pcl_Survived = data_train.Pclass[data_train.Survived == 1].value_counts()

pcl_Nosurvived = data_train.Pclass[data_train.Survived == 0].value_counts()

df1 = DataFrame({'Survived': pcl_Survived, 'Nosurvived': pcl_Nosurvived})

df1.plot(kind='bar', stacked='all')

plt.ylabel('numbers')

plt.title('Survived Conditions')

plt.show()

运行结果如下:

由图可以看出被救的人数大概在300人左右,3级舱位的乘客最多,年龄似乎和是否存活关系不大,在S港口登船的乘客最多。舱位等级越高乘客的平均年龄越大。一等舱和二等舱的乘客的获救几率要显著大于三等舱的乘客的获救几率。

继续观察:

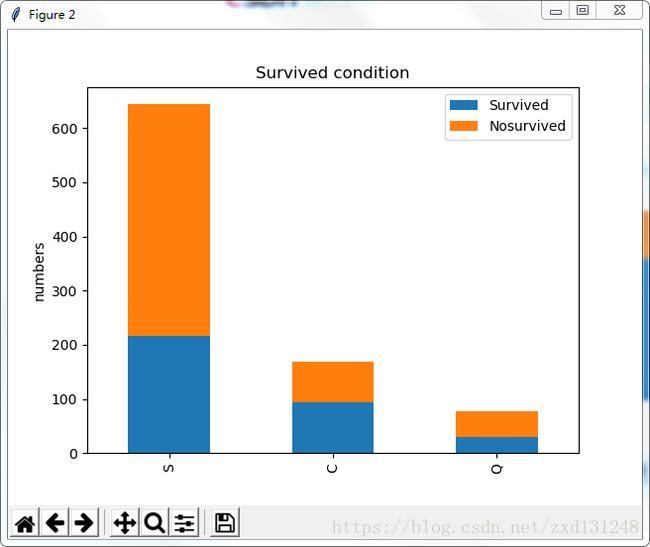

#性别与是否获救之间的关系

sex_Survived = data_train.Sex[data_train.Survived == 1].value_counts()

sex_Nosurvived = data_train.Sex[data_train.Survived == 0].value_counts()

df2 = DataFrame({'Survived': sex_Survived, 'Nosurvived': sex_Nosurvived})

df2.plot(kind='bar', stacked='all')

plt.ylabel('numbers')

plt.title('Survived Condition')

#登船港口与是否获救之间的关系

emb_Survived = data_train.Embarked[data_train.Survived == 1].value_counts()

emb_Nosurvived = data_train.Embarked[data_train.Survived == 0].value_counts()

df3 = DataFrame({'Survived': emb_Survived, 'Nosurvived': emb_Nosurvived})

df3.plot(kind='bar', stacked='all')

plt.ylabel('numbers')

plt.title('Survived condition')

#性别和船舱等级与是否获救之间的关系

fig3 = plt.figure()

ax5 = fig3.add_subplot(2, 2, 1)

s1 = data_train.Survived[data_train.Sex == 'male'][data_train.Pclass != 3].value_counts()

s1.plot(kind='bar', color='green', alpha=0.7)

ax5.legend(['male/high level pclass'], loc='best')

ax6 = fig3.add_subplot(2, 2, 2, sharey=ax5)

s2 = data_train.Survived[data_train.Sex == 'female'][data_train.Pclass != 3].value_counts()

s2.plot(kind='bar', color='red', alpha=0.7)

ax6.legend(['female/high level pclass'], loc='best')

ax7 = fig3.add_subplot(2, 2, 3, sharey=ax5)

s3 = data_train.Survived[data_train.Sex == 'male'][data_train.Pclass == 3].value_counts()

s3.plot(kind='bar', color='blue', alpha=0.7)

ax7.legend(['male/low level pclass'], loc='best')

ax8 = fig3.add_subplot(2, 2, 4, sharey=ax5)

s4 = data_train.Survived[data_train.Sex == 'female'][data_train.Pclass == 3].value_counts()

s4.plot(kind='bar', color='black', alpha=0.7)

ax8.legend(['female/low level pclass'], loc='best')

plt.show()结果如下:

由图可以看出,女性获救的概率要显著高于男性获救的概率,而从哪个港口登船与是否获救似乎关系不大。在第三幅图中可以看出,无论船舱等级如何,男性死亡的概率都相当高,而高船舱等级中的女性获救的概率相当高。相比较而言,在低等级舱位中女性的存活率与死亡率相当。

接下来观察父母/子女个数和表亲个数对是否存活的影响:

# 孩子父母/表亲个数与是否获救之间的关系

parch_Survived = data_train.Parch[data_train.Survived == 1].value_counts()

parch_Nosurvived = data_train.Parch[data_train.Survived == 0].value_counts()

sibsp_Survived = data_train.SibSp[data_train.Survived == 1].value_counts()

sibsp_Nosurvived = data_train.SibSp[data_train.Survived == 0].value_counts()

df4 = DataFrame({'Survived': parch_Survived, 'Nosurvived': parch_Nosurvived})

df4.plot(kind='bar', alpha=0.7)

plt.title('parch_Survived condition')

df5 = DataFrame({'Survived': sibsp_Survived, 'Nosurvived': sibsp_Nosurvived})

df5.plot(kind='bar', alpha=0.7)

plt.title('sibsp_Survived condition')

plt.show()结果如下:

由图可以看出父母/表亲个数为0时获救概率比较低 ,而父母/表亲个数为1时获救概率相对较高。

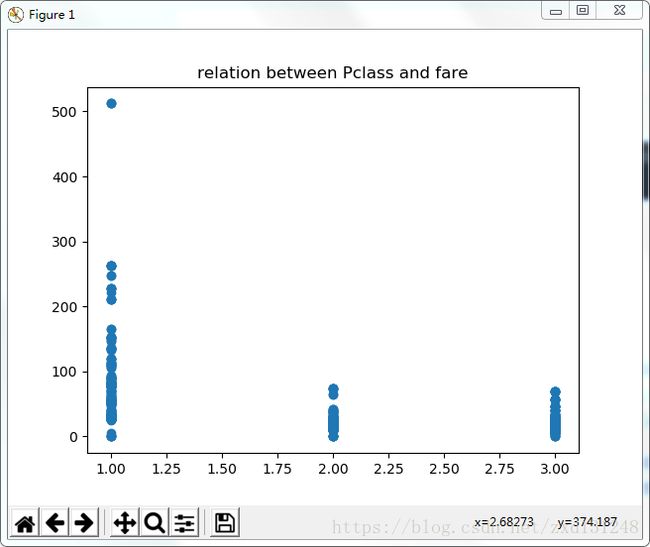

由于猜测舱位等级与票价之间存在某种关系,做散点图进行观察:

#猜测舱位级别Pclass和Fare有可能是正相关,做散点图观察

plt.scatter(data_train['Pclass'], data_train['Fare'])

plt.title('relation between Pclass and fare')

plt.show()结果如下:

由图可以看出一等舱的价格分布区间明显比二等舱和三等舱的价格分布区间大,后续改进的时候可以考虑将票价进行离散化处理,也就是将连续的票价映射到几个区间内。由于这里只是想做出一个baseline,所以不作处理。另外Cabin的缺失值太多了,可以分为存在Cabin记录和不存在Cabin记录来进行处理。

三、进行数据预处理,目标是使用逻辑回归进行分类,所以需要将各个属性的取值修改为数值型,而不能是类别型。其次需要对测试数据和训练数据进行相同的数据预处理。其中对年龄进行采用随即森林进行拟合,对于缺失的两个登船港口记录采用登船港口记录的众数进行填充。

# 数据预处理

#可以采用sklearn中的随即森林对缺失的数据进行拟合

import sklearn.preprocessing as prep

from sklearn.ensemble import RandomForestRegressor

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import BaggingClassifier

def deal_age(data_train):

select_data = data_train[['Age', 'Pclass', 'SibSp', 'Parch', 'Fare']]

known_age = select_data[select_data.Age.notnull()].as_matrix() # 找出Age不为空的部分病转换为矩阵的形式

unknown_age = select_data[select_data.Age.isnull()].as_matrix()

feature =known_age[:, 1:]

value = known_age[:, 0]

Random_Forest = RandomForestRegressor(random_state=0, n_estimators=1000, n_jobs=-1)

Random_Forest.fit(feature, value)

predict_ages = Random_Forest.predict(unknown_age[:, 1:])

data_train.loc[(data_train.Age.isnull()), 'Age'] = predict_ages

return data_train, Random_Forest

def deal_cabin(data_train):

index1 = data_train.Cabin.isnull()

index2 = data_train.Cabin.notnull()

data_train.loc[(index1), 'Cabin'] = 'no'

data_train.loc[(index2), 'Cabin'] = 'yes'

return data_train

data_train, Random_Forest = deal_age(data_train)

data_train = deal_cabin(data_train)

print(data_train['Embarked'].value_counts())

data_train.loc[(data_train.Embarked.isnull()), 'Embarked'] = 'S'

# 应用逻辑回归建模时要求特征值为数值型,需要将类别性转换为数值型

# 比如性别有'male'和'female',则可以拓展得到两个属性,Sex_male和Sex_female

# 使用pandas中的get_nummies来获得哑变量矩阵,关于哑变量详细介绍见《利用python进行数据分析》214页

dummies_Cabin = pd.get_dummies(data_train['Cabin'], prefix='Cabin')

dummies_Sex = pd.get_dummies(data_train['Sex'], prefix='Sex')

dummis_Pclass = pd.get_dummies(data_train['Pclass'], prefix='Pclass')

dummies_Embarked = pd.get_dummies(data_train['Embarked'], prefix='Embarked')

# 接下来将dummies_Cabin、dummies_Sex、dummis_Pclass、dummies_Embarked与data_train沿axis=1进行

# 轴向链接,连接后丢弃原来的对应数据,关于轴向链接的详细解释见《利用python进行数据分析》194页

data_train = pd.concat([data_train, dummies_Cabin, dummies_Sex, dummis_Pclass,

dummies_Embarked], axis=1)

data_train.drop(['Pclass', 'Sex', 'Cabin', 'Embarked', 'Name'], inplace=True, axis=1)

# 对Age和Fare做归一化处理,可以加快梯度下降的速度

data_train['Age'] = prep.scale(data_train['Age'])

data_train['Fare'] = prep.scale(data_train['Fare'])

data_train.drop(['PassengerId', 'Ticket'], inplace=True, axis=1) # PassengerId和Ticket看起来并没有用处

# 在正式建模之前需要对data_train中的features进行处理,转换为numpy.ndarray格式

columns = data_train.columns # 将处理好的columns记下来,之后会用到

data_train = data_train.as_matrix()

values = data_train[:, 0]

values = values.astype(int)

features = data_train[:, 1:]

#对测试数据做数据域处理

data_test = pd.read_csv('test.csv')

print(data_test.info())

data_test.loc[(data_test.Fare.isnull()), 'Fare'] = data_test['Fare'].mean() # 观察可知,Fare有缺失值

select_data = data_test[['Age', 'Pclass', 'SibSp', 'Parch', 'Fare']]

unknown_age = select_data[select_data.Age.isnull()].as_matrix()

predict_ages = Random_Forest.predict(unknown_age[:, 1:])

data_test.loc[(data_test.Age.isnull()), 'Age'] = predict_ages

data_test = deal_cabin(data_test)

dummies_Cabin = pd.get_dummies(data_test['Cabin'], prefix='Cabin')

dummies_Sex = pd.get_dummies(data_test['Sex'], prefix='Sex')

dummis_Pclass = pd.get_dummies(data_test['Pclass'], prefix='Pclass')

dummies_Embarked = pd.get_dummies(data_test['Embarked'], prefix='Embarked')

data_test = pd.concat([data_test, dummies_Cabin, dummies_Sex, dummis_Pclass,

dummies_Embarked], axis=1)

data_test.drop(['Pclass', 'Sex', 'Cabin', 'Embarked', 'Name', 'PassengerId', 'Ticket'],

inplace=True, axis=1)

data_test['Age'] = prep.scale(data_test['Age'])

data_test['Fare'] = prep.scale(data_test['Fare'])

data_test = data_test.as_matrix()四、训练并在测试数据及集上进行预测

#开始训练

if __name__=='__main__':

Log_Re_Model = LogisticRegression(C=1.0, penalty='l1', tol=1e-5)

Bagging_Model = BaggingClassifier(Log_Re_Model, n_estimators=20, max_features=1.0,

max_samples=0.8, bootstrap=True, bootstrap_features=False, n_jobs=-1)

Bagging_Model.fit(features, values)

#利用训练好的模型和处理好的测试数据进行预测

predictions = Bagging_Model.predict(data_test)

result = DataFrame({'PassengerId': np.arange(892, 1310), 'Survived': predictions})

result.to_csv('result.csv', index=False)至此,baseline完成,后续会进行改进。并对blog进行更新。