Data migration Approaches:

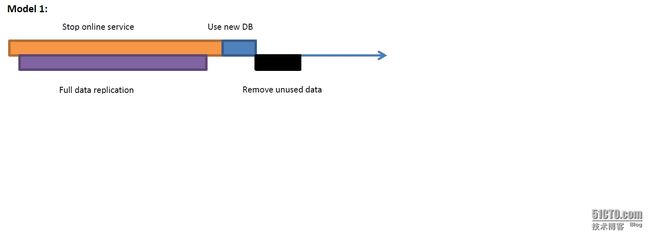

Model1:

Advantages:

Simple

Disadvantages:

Business disrupted

Migration needs to complete in limited time

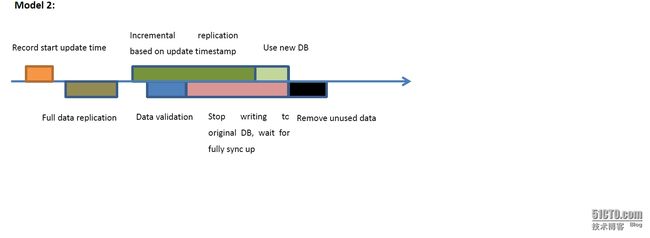

Model2:

Require each table to have an additional GMT_MODIFIED column

Advantages:

Shorter down time, data can be read from original DB when performing full data replication

Disadvantages

Hard to predict whether new DB can fully withstand all read/write load. If after switching to new DB, and it is overloaded, the result can be disastrous

Timestamp based scanning creates a lot of pressure on DB. If scan interval is too large, data is out of sync.

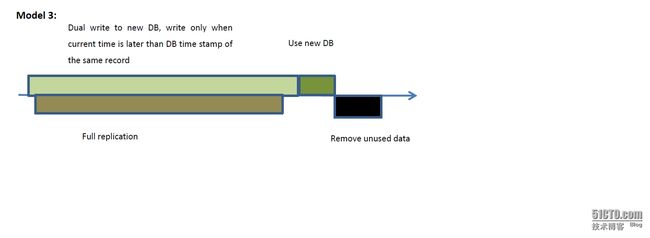

Model3:

Advantages:

Low data sync latency

No downtime

Disadvantages:

Intrusion into business logic to write to both database

Full replication and dual write occurs the same time, data in new DB may have conflict. We need to logically enforce that only records with newer timestamp are written to new DB

Date integrity may be comprosied: writing to old/new database need to be in same transaction. XA is very costly.

Incremental data replication is more widely adopted

For oracle it is harder to replicate journal, so use a two phase protocol

Write to a log table

Write to data table

Write data record id to log table

Asynchronously retrieve log based on id and replicate to new DB

Following modules are needed for data migration program

Control Module

Zookeeper

Timer

Task configuration

Full migration

Data fetch module

Customer defined functions

Data write module

Incremental migration

Data fetch module

Customer defined functions

Data write module

Incremental trigger

Data validation module

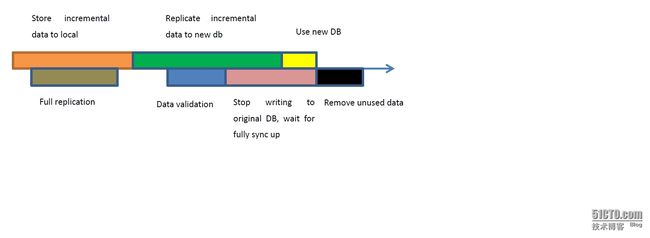

Model4:

Step 1:

Stop updated business data during full migration in local

Step 2:

Start full migration, need to consider exceptional cases where the target database server is down unexpectedly during migration. Solution is to store id range to replicate in zookeeper, so that migration progress can be queried from zookeeper on target server recovery

Step 3:

Start incremental migration, replicate local stored update to target database server until all local updates are consumed

Step 4:

Start data validation to ensure that migrated data and original data are the same (no missing or duplicated data). We can do full validation or sample validation based on importance of data. After validation, we can tentatively switch some of the read to new DB

Step 5:

Swith to use the new DB. After switch, write will be applied to the new DB and it is very hard to rollback

Multi-threaded migration consideration

1. Mulit-threaded migration increases write speed, but consumes more DB resources. Number of reades need to be dynamically adjusted based on time (business load)

2. Multi-threaded migration may cause deadlock, need to ensure that per write thread per table.

3. Data recovery becomes a challenge as it is more difficult to find out data failure point. The solution is to use a central dispatcher to allocate data range to migrate to different worker thread.